A transformer is a deep-learning neural network architecture that has revolutionized several fields, including natural language processing (NLP), computer vision, and even bioinformatics. By tracking relationships in sequential data, it learns context and meaning, and it has become a cornerstone of modern AI. Discover how transformers are reshaping the landscape of machine learning, and explore the opportunities available at LEARNS.EDU.VN to deepen your understanding of this transformative technology.

1. Understanding Transformer Models: A Deep Dive

A transformer model is a type of neural network that excels at understanding context and relationships within sequential data, such as the words in a sentence. Earlier recurrent models processed a sequence one element at a time. Transformers instead rely on a mechanism called “attention” or “self-attention,” which lets them consider all parts of the input at once and capture subtle dependencies and long-range relationships.

1.1. The Core Concept of Attention

The attention mechanism enables the model to focus on the most relevant parts of the input sequence when making predictions. For each element, it assigns a weight to every element in the sequence, indicating how important that element is for interpreting the current one. These weights are not fixed parameters. They are recomputed for every input from learned projections of the data (queries, keys, and values), so training teaches the model how to score relevance rather than memorizing the weights themselves.
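The weighting described above can be sketched in a few lines. The example below is a minimal, dependency-free implementation of scaled dot-product attention, the form used in the original transformer paper. Each query is scored against every key, the scores are divided by the square root of the key dimension and turned into weights with a softmax, and the output is the weighted average of the values. The projection matrices `w_q`, `w_k`, and `w_v` stand in for parameters a real model would learn. The toy input and identity projections in the usage note are assumptions chosen only for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as nested lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def scaled_dot_product_attention(q, k, v):
    """Return (outputs, weights) for query, key, and value matrices given as nested lists."""
    d_k = len(k[0])
    weights = []
    for qi in q:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        weights.append(softmax(scores))
    # Each output row is a weighted average of the value rows.
    return matmul(weights, v), weights

def self_attention(x, w_q, w_k, w_v):
    """Self-attention: queries, keys, and values are all projections of the same input x.
    The projection matrices are what training would adjust (hypothetical values here)."""
    return scaled_dot_product_attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
```

For example, with `eye = [[1.0, 0.0], [0.0, 1.0]]`, calling `self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], eye, eye, eye)` returns the outputs plus a 3-by-3 weight matrix whose rows each sum to 1. The first token gives equal weight to itself and to the third token, because both share its first feature. Production code would use a tensor library rather than nested lists.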

1.2. Origins of Transformer Architecture

The transformer architecture was first introduced in the 2017 paper “Attention Is All You Need” by researchers at Google. The paper showed that a transformer could outperform the recurrent neural networks (RNNs) and convolutional neural networks (CNNs) then used for machine translation, while taking less time to train.

1.3. Transformer AI: A New Era of Machine Learning

The advent of transformers has ushered in a new era of machine learning, often referred to as “transformer AI.” These models have become the foundation for many state-of-the-art applications, driving significant advancements in various domains.

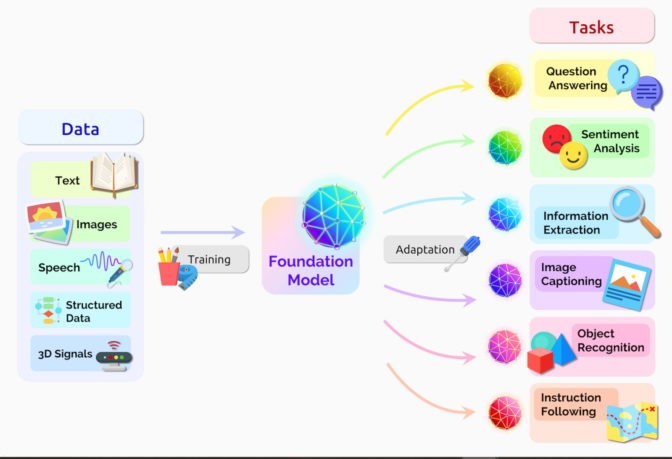

1.4. Foundation Models: Stanford’s Perspective

Researchers at Stanford University called transformers “foundation models” in an August 2021 paper, arguing that they could drive a paradigm shift in AI. They emphasized that the sheer scale and scope of these models has expanded our imagination of what is possible with AI.

2. Applications of Transformer Models: Transforming Industries

Transformer models are versatile tools with applications spanning numerous industries. Here are some notable examples:

2.1. Natural Language Processing (NLP)

- Machine Translation: Transformers have revolutionized machine translation, enabling near real-time translation of text and speech.

- Text Summarization: These models can condense lengthy documents into concise summaries, saving time and effort.

- Question Answering: Transformers can accurately answer questions based on provided text, making them valuable for information retrieval.

- Sentiment Analysis: They can analyze text to determine the sentiment expressed (e.g., positive, negative, or neutral).

- Chatbots and Virtual Assistants: Transformers power sophisticated chatbots and virtual assistants that can understand and respond to user queries in a natural and engaging way.

2.2. Computer Vision

- Image Recognition: Transformers are used to identify objects and scenes in images with high accuracy.

- Object Detection: They can locate and classify multiple objects within an image.

- Image Generation: Transformers can generate realistic images from text descriptions.

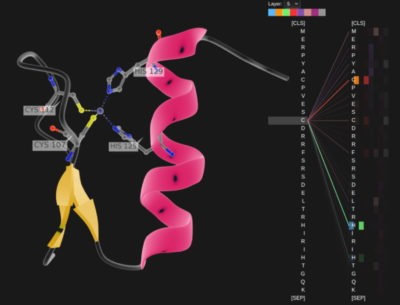

2.3. Bioinformatics

- Drug Discovery: Transformers help researchers model the long chains of genes in DNA and amino acids in proteins, which can speed up drug design.

- Protein Structure Prediction: They can predict the three-dimensional structure of proteins, which is crucial for understanding their function.

2.4. Other Applications

- Fraud Detection: Transformers can detect trends and anomalies in financial data to prevent fraud.

- Manufacturing Optimization: They can streamline manufacturing processes by identifying inefficiencies and predicting equipment failures.

- Personalized Recommendations: Transformers can analyze user behavior to provide personalized recommendations for products, services, and content.

- Healthcare Improvement: They can extract insights from medical records to improve patient care and accelerate medical research.

3. The Virtuous Cycle of Transformer AI: Driving Continuous Improvement

Transformer models benefit from a virtuous cycle of continuous improvement. They are trained on vast datasets, which enables them to make accurate predictions. These accurate predictions drive wider adoption, generating even more data that can be used to further refine the models.

3.1. Self-Supervised Learning: A Key Enabler

Transformers have made self-supervised learning practical at scale. This lets AI learn from unlabeled data, for example by predicting words hidden from a sentence. The approach has significantly accelerated the development of AI models because it greatly reduces the need for costly and time-consuming manual labeling.
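Masked language modeling, the self-supervised objective used by BERT, shows how labels can come from the data itself: hide some tokens and ask the model to predict them. The sketch below only builds such training pairs from plain text, and it is simplified. BERT, for instance, sometimes replaces a selected token with a random word or leaves it unchanged instead of always inserting a mask. The `[MASK]` string and the 15 percent default rate are illustrative.

```python
import random

MASK = "[MASK]"

def make_masked_example(tokens, mask_prob=0.15, seed=None):
    """Turn an unlabeled token list into an (inputs, targets) training pair.
    Targets are None wherever no prediction is required."""
    rng = random.Random(seed)
    inputs, targets = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)    # hide the token from the model...
            targets.append(tok)    # ...and make it the label to predict
        else:
            inputs.append(tok)
            targets.append(None)   # no loss is computed at unmasked positions
    return inputs, targets
```

No human labeling is involved. Every sentence in a raw text corpus can yield many such examples, which is what makes this kind of pretraining scale.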

3.2. NVIDIA’s Perspective on Transformer AI

NVIDIA founder and CEO Jensen Huang emphasized the transformative impact of transformers, stating that they have propelled AI to “warp speed.”

4. Transformers Replace CNNs and RNNs: A Paradigm Shift

In many cases, transformers are replacing convolutional neural networks (CNNs) and recurrent neural networks (RNNs), which were the dominant deep learning models just a few years ago.

4.1. Dominance in AI Research

A significant portion of AI research papers now mentions transformers, indicating their widespread adoption and impact.

4.2. Shift from Traditional Models

This represents a significant shift from previous years when RNNs and CNNs were the most popular models for pattern recognition.

5. Advantages of Transformers: Why They Excel

Transformers offer several key advantages over traditional neural networks:

5.1. Less Need for Labeled Data

Transformers can be pretrained on large amounts of unlabeled data and then adapted with a comparatively small labeled dataset, which makes them more versatile and cost-effective.

5.2. Parallel Processing

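The core operations in a transformer are matrix multiplications that process every position of a sequence at once. An RNN, by contrast, must work through the sequence one step at a time. Because these operations map well onto parallel hardware such as GPUs, training in particular is much faster.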

5.3. Superior Performance

Transformers consistently achieve top rankings on performance leaderboards for various tasks, such as language processing.

6. How Transformers Pay Attention: A Technical Overview

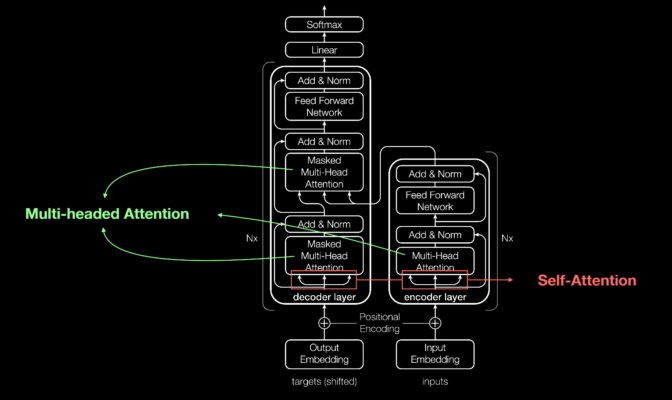

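The original transformer is built from stacks of encoder and decoder blocks that process data. Many later models keep only the encoder (as BERT does) or only the decoder (as GPT models do). The key to their power lies in the “attention” or “self-attention” mechanism.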

6.1. Positional Encoders

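Attention on its own has no notion of word order, so transformers add positional encodings to the input embeddings. These encodings tag each data element with information about its position in the sequence. The original transformer used fixed sinusoidal functions for this, while many later models learn the encodings during training instead.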
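The sinusoidal scheme from the original transformer paper can be written directly from its formula. Even dimensions use a sine and odd dimensions use a cosine, and the wavelength grows geometrically across dimensions, so every position gets a distinct pattern. The function names below are illustrative. Real models operate on tensors rather than Python lists.

```python
import math

def sinusoidal_positional_encoding(num_positions, d_model):
    """Fixed sinusoidal encodings as in "Attention Is All You Need":
    PE[pos][2i]   = sin(pos / 10000^(2i / d_model))
    PE[pos][2i+1] = cos(pos / 10000^(2i / d_model))
    """
    pe = []
    for pos in range(num_positions):
        row = []
        for j in range(d_model):
            i = j // 2  # dimensions 2i and 2i+1 share the same frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def add_positions(embeddings):
    """Add the positional encoding element-wise to a list of token embeddings."""
    pe = sinusoidal_positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]
```

Position 0 always encodes to alternating zeros and ones, and later positions rotate through the sine and cosine pairs at different speeds. This lets attention distinguish "the same word at a different place."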

6.2. Attention Units

Attention units then use these tagged inputs to score how strongly each element relates to every other element. The result is a matrix of weights, a kind of algebraic map of the sequence, which allows the model to capture dependencies and relationships between different parts of the input.

6.3. Multi-Headed Attention

Transformers typically run several attention computations in parallel, a technique called multi-head attention. Each head uses its own learned projections, so different heads can pick up different kinds of relationships within the data. Their outputs are then combined into a single result.
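Multi-head attention can be sketched by running one attention computation per head, concatenating the per-position results, and applying a final output projection. This is a minimal illustration, assuming each head's projection matrices (`w_q`, `w_k`, `w_v`) and the output matrix `w_o` are supplied as plain nested lists. In a trained model these would be learned, and each head would usually project to `d_model / num_heads` dimensions.

```python
import math

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as nested lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def attention(q, k, v):
    """Scaled dot-product attention for a single head."""
    d_k = len(k[0])
    weights = []
    for qi in q:
        scores = [sum(x * y for x, y in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return matmul(weights, v)

def multi_head_attention(x, heads, w_o):
    """heads: one (w_q, w_k, w_v) tuple of projection matrices per head.
    Each head attends independently; results are concatenated and projected by w_o."""
    head_outputs = [attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
                    for w_q, w_k, w_v in heads]
    # Concatenate the heads' outputs for each position along the feature axis.
    concat = [[value for h in head_outputs for value in h[row]] for row in range(len(x))]
    return matmul(concat, w_o)
```

Because the heads do not depend on one another, they can all be computed at the same time. This independence is part of why the technique suits GPUs.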

7. Self-Attention Finds Meaning: Understanding Context

The self-attention mechanism allows transformers to understand the meaning of words and phrases in context.

7.1. Example: Resolving Ambiguity

Consider the following sentences:

- “She poured water from the pitcher to the cup until it was full.”

- “She poured water from the pitcher to the cup until it was empty.”

In the first sentence, “it” refers to the cup, while in the second sentence, “it” refers to the pitcher. The self-attention mechanism allows the model to understand these subtle differences in meaning based on the context.

7.2. Learning Relationships

Meaning is a result of relationships between things, and self-attention is a general way of learning relationships.

8. The Origin of the Name “Transformer”: A Moment of Inspiration

The name “Transformer” was chosen because it reflects the model’s ability to transform representations of data.

8.1. Alternative Names Considered

The Google researchers initially considered calling the model “Attention Net,” but they ultimately decided that “Transformer” was a more fitting and exciting name.

9. The Birth of Transformers: A Collaborative Effort

The transformer architecture was the result of an intense collaborative effort by a team of researchers at Google.

9.1. Training on NVIDIA GPUs

The team trained their model in just 3.5 days on eight NVIDIA GPUs, demonstrating the efficiency of the architecture.

9.2. A Game-Changing Innovation

One of the researchers, Ashish Vaswani, predicted that the transformer architecture would be a “huge deal” and “game-changing.”

10. A Moment for Machine Learning: Surpassing Expectations

The transformer architecture surpassed similar work published by a Facebook team using CNNs, marking a significant milestone in machine learning.

10.1. BERT: A Bidirectional Transformer

Another Google team developed BERT (Bidirectional Encoder Representations from Transformers). In BERT, each word attends to the words on both its left and its right at the same time, which captures more relationships among words than a model that reads in only one direction.
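Much of the difference between BERT-style and GPT-style processing comes down to the attention mask. The sketch below assumes attention scores are held in nested lists. A bidirectional mask lets every position attend to every other position. A causal mask blocks attention to later positions by setting those scores to negative infinity before the softmax, which gives them zero weight.

```python
import math

def causal_mask(n):
    """GPT-style mask: position i may attend only to positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]

def bidirectional_mask(n):
    """BERT-style mask: every position may attend to every other position."""
    return [[True] * n for _ in range(n)]

def apply_mask(scores, mask):
    """Replace disallowed scores with -inf so the softmax assigns them zero weight."""
    return [[s if allowed else float("-inf") for s, allowed in zip(row, mrow)]
            for row, mrow in zip(scores, mask)]

def softmax_rows(scores):
    """Row-wise softmax; exp(-inf) evaluates to 0.0, so masked entries vanish."""
    out = []
    for row in scores:
        m = max(row)
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out
```

With uniform scores and a causal mask, the first token can only attend to itself, while the second splits its attention evenly between the first two tokens.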

10.2. Impact on Google Search

BERT became part of the algorithm behind Google search, demonstrating its practical value.

11. Putting Transformers to Work: Applications in Science and Healthcare

Transformer models are being applied to a wide range of problems in science and healthcare.

11.1. AlphaFold2: Protein Structure Prediction

DeepMind developed AlphaFold2, a transformer-based model that predicts the three-dimensional structure of proteins.

11.2. MegaMolBART: Drug Discovery

AstraZeneca and NVIDIA developed MegaMolBART, a transformer tailored for drug discovery.

11.3. GatorTron: Medical Insights

The University of Florida’s academic health center collaborated with NVIDIA researchers to create GatorTron, a transformer model that extracts insights from clinical data to accelerate medical research.

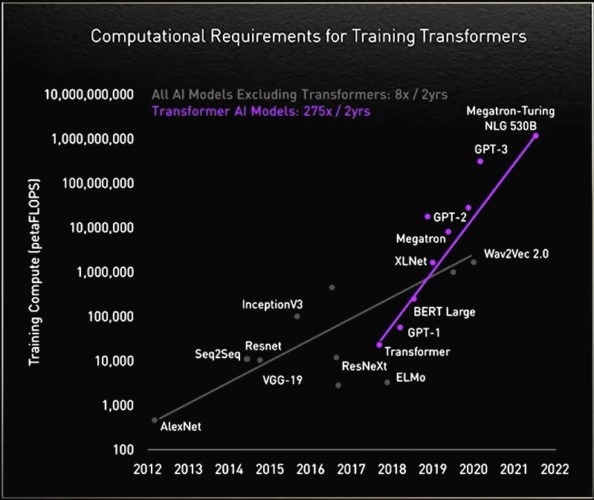

12. Transformers Grow Up: Scaling for Performance

Researchers have found that larger transformers generally perform better.

12.1. Rostlab: Protein Analysis

Researchers at the Rostlab at the Technical University of Munich used natural language processing to understand proteins.

12.2. GPT-3: A Massive Language Model

OpenAI’s GPT-3 has 175 billion parameters and can respond to user queries even on tasks it was not specifically trained to handle.

13. Tale of a Mega Transformer: MT-NLG

NVIDIA and Microsoft announced the Megatron-Turing Natural Language Generation model (MT-NLG) with 530 billion parameters.

13.1. NVIDIA NeMo Megatron

It debuted along with a new framework, NVIDIA NeMo Megatron, that aims to let any business create its own billion- or trillion-parameter transformers.

13.2. TJ the Toy Jensen Avatar

MT-NLG had its public debut as the brain for TJ, the Toy Jensen avatar that gave part of the keynote at NVIDIA’s November 2021 GTC.

14. Trillion-Parameter Transformers: The Future of AI

Many AI engineers are working on trillion-parameter transformers and applications for them.

14.1. Exploring the Limits

Researchers are constantly exploring how these big models can deliver better applications and investigating their limitations.

14.2. NVIDIA H100 Tensor Core GPU

The NVIDIA H100 Tensor Core GPU packs a Transformer Engine and supports a new FP8 format, which speeds up training while preserving accuracy.
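A quick back-of-the-envelope calculation shows why lower-precision formats such as FP8 matter at this scale. The sketch below estimates only the memory needed to hold the weights of two models mentioned in this article (175 billion and 530 billion parameters) at 4, 2, and 1 byte per parameter. It deliberately ignores activations, optimizer state, and other overhead, which add substantially more during training.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "FP8": 1}

def weight_memory_gb(num_params, fmt):
    """Memory needed just to store the weights, in gigabytes (10^9 bytes).
    Ignores activations, optimizer state, and framework overhead."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9

for name, n in [("GPT-3", 175e9), ("MT-NLG", 530e9)]:
    for fmt in BYTES_PER_PARAM:
        print(f"{name} weights in {fmt}: {weight_memory_gb(n, fmt):,.0f} GB")
```

Running it shows, for example, that GPT-3's weights alone take about 700 GB in FP32, 350 GB in FP16, and 175 GB in FP8. Each halving of precision halves the weight memory that must be moved and stored.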

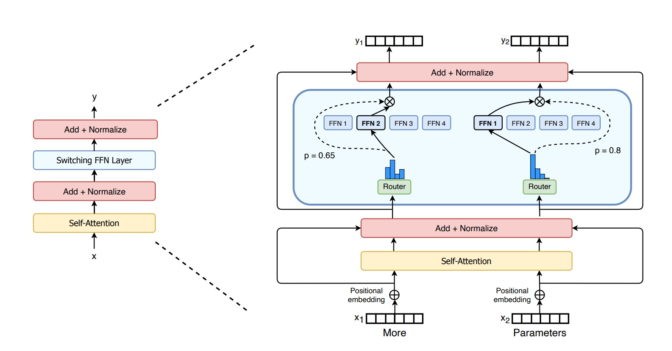

15. MoE Means More for Transformers: Mixture of Experts

Google researchers described the Switch Transformer, one of the first trillion-parameter models, which uses AI sparsity and a mixture-of-experts (MoE) architecture.
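In a mixture-of-experts layer, a small router picks which expert handles each token. The total parameter count can therefore grow without every token paying for every parameter. Below is a minimal sketch of top-1 routing in the spirit of the Switch Transformer. The router weights and the toy expert functions in the test are hypothetical, and the sketch omits details real systems need, such as expert capacity limits and a load-balancing loss.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def switch_route(token, router_weights):
    """Top-1 routing: score each expert with a linear router and pick the best one.
    router_weights: one weight vector per expert. Returns (expert_index, gate_probability)."""
    logits = [sum(t * w for t, w in zip(token, w_e)) for w_e in router_weights]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs[best]

def moe_layer(tokens, router_weights, experts):
    """Send each token to a single expert and scale that expert's output by its gate value.
    Only one expert runs per token, so per-token compute stays roughly constant
    as more experts (and parameters) are added."""
    outputs = []
    for tok in tokens:
        idx, gate = switch_route(tok, router_weights)
        outputs.append([gate * y for y in experts[idx](tok)])
    return outputs
```

The design choice to route each token to exactly one expert keeps communication and compute simple. The trade-off is that the router must be trained to spread tokens reasonably evenly.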

15.1. Microsoft Translator

Microsoft Azure worked with NVIDIA to implement an MoE transformer for its Translator service.

16. Tackling Transformers’ Challenges: Simpler and More Efficient Models

Some researchers aim to develop simpler transformers with fewer parameters that deliver performance similar to the largest models.

16.1. Retrieval-Based Models

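Retrieval-based models look up relevant passages in an external database when answering a query, instead of storing all of their knowledge in their parameters. This can let a smaller model compete with much larger ones.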
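Here is a minimal sketch of the retrieval step, assuming passages have already been turned into embedding vectors. Stored passages are ranked by cosine similarity to the query vector, and the best matches are prepended to the prompt. The hand-made vectors in the test and the `build_prompt` format are hypothetical. Real systems compute embeddings with a learned encoder and search large indexes with approximate nearest-neighbor methods.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors; returns 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query_vec, database, k=1):
    """Return the k passages whose embeddings are most similar to the query.
    database: list of (passage_text, embedding) pairs."""
    ranked = sorted(database, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

def build_prompt(question, query_vec, database, k=1):
    """Prepend the retrieved passages to the question before it is sent to the model."""
    context = "\n".join(retrieve(query_vec, database, k))
    return f"Context:\n{context}\n\nQuestion: {question}"
```

Updating what such a model "knows" then means updating the database rather than retraining the network.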

16.2. Learning from Context

The ultimate goal is to make these models learn like humans do from context in the real world with very little data.

17. Safe, Responsible Models: Addressing Bias and Toxicity

Researchers are studying ways to reduce bias and toxicity in transformer models.

17.1. Center for Research on Foundation Models

Stanford created the Center for Research on Foundation Models to explore these issues.

17.2. Addressing Subtle Issues

Researchers are working to identify and address subtle issues that may lead to bias or harmful language.

18. Beyond the Horizon: The Future of Transformers

Transformers hold the potential to achieve some of the goals people talked about when they coined the term “general artificial intelligence.”

18.1. Simple Methods, New Capabilities

We are in a time where simple methods like neural networks are giving us an explosion of new capabilities.

18.2. NVIDIA H100 GPU

Transformer training and inference will get significantly accelerated with the NVIDIA H100 GPU.

19. Why Should You Learn About Transformer Models?

Learning about transformer models opens up a world of opportunities. Here’s why:

- High Demand: Expertise in transformer models is highly sought after in various industries.

- Career Advancement: Mastering transformer models can significantly boost your career prospects.

- Innovation: You can contribute to cutting-edge research and development in AI.

- Problem Solving: You can apply transformer models to solve complex real-world problems.

20. How LEARNS.EDU.VN Can Help You Master Transformer Models

LEARNS.EDU.VN offers a comprehensive range of resources to help you learn about transformer models, regardless of your current level of knowledge.

20.1. Courses and Tutorials

We provide structured courses and tutorials that cover the fundamentals of transformer models, as well as advanced topics.

20.2. Expert Instructors

Our instructors are experienced professionals with deep expertise in the field of AI.

20.3. Hands-On Projects

We offer hands-on projects that allow you to apply your knowledge and gain practical experience.

20.4. Community Support

You’ll have access to a supportive community of learners who can help you along the way.

20.5. Stay Updated

Recent techniques for making transformer models smaller, faster, and cheaper to run include:

| Technique | Description | Benefits |
|---|---|---|
| Knowledge Distillation | Transferring knowledge from a large, complex model to a smaller, more efficient model. | Reduces model size and computational cost while largely maintaining performance. Well suited to deployment on resource-constrained devices. |
| Quantization | Reducing the precision of numerical values (e.g., from 32-bit floating point to 8-bit integers). | Reduces memory footprint and speeds up computation. Lowers hardware requirements, making models more accessible. |
| Pruning | Removing unimportant connections or parameters from the model. | Decreases model complexity and improves efficiency. Can also reduce overfitting by focusing on the most relevant features. |
| Attention Sparsity | Making the attention mechanism more efficient by attending to only a subset of the input sequence. | Reduces computational cost and memory usage. Enables longer sequences without excessive resource consumption. |
| Neural Architecture Search (NAS) | Automating the design of neural network architectures. | Discovers novel, efficient architectures tailored to specific tasks. Can optimize for performance, size, and energy efficiency. |
| Hardware Acceleration | Using specialized hardware (e.g., GPUs, TPUs) to accelerate the training and inference of transformer models. | Significantly speeds up computation and allows larger models to be trained. Reduces the time and cost of AI development and deployment. |
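The quantization row above can be illustrated with a minimal sketch of symmetric 8-bit quantization using a single scale factor per tensor. It shows the idea only. Production toolkits typically add per-channel scales, calibration data, and hardware-specific kernels.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]
    using one shared scale factor. Returns (quantized_values, scale)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Approximately reconstruct the original floats from their int8 codes."""
    return [x * scale for x in q]
```

Each value now needs one byte instead of four. The reconstruction error per value is at most half a quantization step (`scale / 2`), which is why accuracy often holds up well.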

21. Success Stories: Real-World Impact

Here are some examples of how individuals have benefited from learning about transformer models:

- Career Change: A software engineer transitioned to a role in AI research after completing a transformer models course at LEARNS.EDU.VN.

- Startup Success: A group of entrepreneurs used their knowledge of transformer models to build a successful chatbot startup.

- Research Breakthrough: A student made a significant breakthrough in protein structure prediction by applying transformer models to the problem.

22. The Importance of Continuous Learning

The field of AI is constantly evolving, so it’s essential to stay up-to-date with the latest advancements. LEARNS.EDU.VN provides ongoing learning opportunities to help you stay ahead of the curve.

22.1. New Courses and Tutorials

We regularly add new courses and tutorials to our platform, covering the latest topics in transformer models and AI.

22.2. Industry Insights

We share industry insights and news to keep you informed about the latest trends and developments.

22.3. Community Events

We host community events where you can connect with other learners and experts in the field.

23. Addressing Common Concerns About Learning Transformer Models

Some people may feel intimidated by the complexity of transformer models, but it’s important to remember that anyone can learn these concepts with the right resources and support.

23.1. No Prior AI Experience Required

Our introductory courses are designed for beginners with no prior experience in AI.

23.2. Step-by-Step Guidance

We provide step-by-step guidance and clear explanations to help you understand the concepts.

23.3. Supportive Community

Our community is a safe and supportive environment where you can ask questions and get help from others.

24. Future Trends in Transformer Models: What to Expect

The future of transformer models is bright, with ongoing research and development pushing the boundaries of what’s possible.

24.1. Larger and More Powerful Models

We can expect to see even larger and more powerful transformer models emerge in the coming years.

24.2. More Efficient Architectures

Researchers are working on developing more efficient transformer architectures that require less computational resources.

24.3. Wider Range of Applications

Transformer models will likely be applied to an even wider range of problems in the future, transforming various industries.

25. Testimonials: Hear From Our Learners

“LEARNS.EDU.VN provided me with the knowledge and skills I needed to launch my career in AI. The courses were well-structured, and the instructors were incredibly helpful.” – John S.

“I was able to build a successful chatbot startup thanks to the transformer models course I took at LEARNS.EDU.VN. The hands-on projects were invaluable.” – Jane D.

“LEARNS.EDU.VN is a great resource for anyone who wants to learn about transformer models. The community is supportive, and the learning materials are excellent.” – Michael L.

26. Resources for Further Learning

Here are some additional resources to help you deepen your understanding of transformer models:

- Research Papers: Read the original research papers that introduced the transformer architecture and related concepts.

- Online Courses: Explore online courses offered by universities and other institutions.

- Tutorials and Blog Posts: Find tutorials and blog posts that explain transformer models in a clear and accessible way.

- Open-Source Code: Experiment with open-source code implementations of transformer models.

27. The Ethical Considerations of Transformer Models

As transformer models become more powerful and widely used, it’s essential to consider the ethical implications.

27.1. Bias and Fairness

Transformer models can perpetuate and amplify biases present in the data they are trained on.

27.2. Misinformation and Manipulation

Transformer models can be used to generate fake news and other forms of misinformation.

27.3. Privacy and Security

Transformer models can be used to extract sensitive information from data.

28. Join the LEARNS.EDU.VN Community Today

Ready to embark on your journey into the world of transformer models? Join the LEARNS.EDU.VN community today and start learning.

28.1. Explore Our Courses

Browse our catalog of courses and find the perfect one for you.

28.2. Connect With Other Learners

Join our online forum and connect with other learners from around the world.

28.3. Start Your Free Trial

Sign up for a free trial and experience the LEARNS.EDU.VN difference.

29. The Role of Transformers in the Future of Education

Transformer models have the potential to transform the future of education.

29.1. Personalized Learning

Transformer models can be used to create personalized learning experiences tailored to the individual needs of each student.

29.2. Automated Assessment

Transformer models can be used to automate the assessment of student work, freeing up teachers to focus on other tasks.

29.3. Intelligent Tutoring Systems

Transformer models can be used to create intelligent tutoring systems that provide students with personalized feedback and support.

30. Transformers: A Catalyst for Innovation

Transformer models are not just a technological advancement; they are a catalyst for innovation across various fields.

30.1. Driving New Discoveries

Transformer models are helping researchers make new discoveries in science, medicine, and other areas.

30.2. Creating New Industries

Transformer models are enabling the creation of new industries and business models.

30.3. Transforming Existing Industries

Transformer models are transforming existing industries by improving efficiency, productivity, and customer experience.

31. Addressing the Skills Gap in Transformer Technology

As transformer technology becomes more prevalent, a skills gap is emerging. LEARNS.EDU.VN is committed to addressing this gap by providing high-quality education and training in transformer models.

31.1. Upskilling and Reskilling

We offer programs designed to upskill and reskill individuals for careers in transformer technology.

31.2. Industry Partnerships

We partner with industry leaders to ensure that our curriculum is relevant and aligned with the needs of the market.

31.3. Career Services

We provide career services to help our learners find jobs in the field of transformer technology.

32. Demystifying Transformer Complexity

While transformer models can seem complex, they are built upon fundamental concepts that can be understood with the right approach.

32.1. Breaking Down Concepts

We break down complex concepts into smaller, more manageable pieces.

32.2. Visual Aids and Examples

We use visual aids and real-world examples to illustrate the concepts.

32.3. Hands-On Practice

We provide hands-on practice to help you solidify your understanding.

33. The Transformative Power of Attention Mechanisms

At the heart of transformer models lies the attention mechanism, a revolutionary concept that has transformed the way machines process information.

33.1. Selective Focus

Attention mechanisms allow models to focus selectively on the most relevant parts of the input and give less weight to irrelevant information.

33.2. Contextual Understanding

Attention mechanisms enable models to understand the context of words and phrases, leading to more accurate and nuanced interpretations.

33.3. Long-Range Dependencies

Attention mechanisms can capture long-range dependencies between words and phrases, even when they are far apart in the input sequence.

34. The Economic Impact of Transformer Technology

Transformer technology is poised to have a significant economic impact, creating new jobs, driving innovation, and boosting productivity.

34.1. Job Creation

The demand for professionals with expertise in transformer technology is growing rapidly, creating new job opportunities in various industries.

34.2. Productivity Gains

Transformer technology can automate tasks, improve decision-making, and enhance productivity across various sectors.

34.3. Innovation and Growth

Transformer technology is fostering innovation and driving economic growth by enabling the development of new products, services, and business models.

35. The Role of Transfer Learning in Transformer Models

Transfer learning is a powerful technique that allows transformer models to be adapted to new tasks with minimal training data.

35.1. Pre-trained Models

Transfer learning leverages pre-trained transformer models that have been trained on massive datasets.

35.2. Fine-Tuning

These pre-trained models can be fine-tuned on smaller datasets to adapt them to specific tasks.

35.3. Efficiency and Effectiveness

Transfer learning significantly reduces the training time and data requirements for new tasks, making transformer models more efficient and effective.
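The simplest form of this adaptation, sometimes called feature extraction or linear probing, keeps the pretrained model frozen and trains only a small classifier on its outputs. In the sketch below, `frozen_encoder` is a hypothetical stand-in for a pretrained transformer (here just two keyword features plus a bias term), and the labeled examples in the test are invented for illustration. Full fine-tuning, which also updates the pretrained weights, follows the same pattern with many more parameters.

```python
import math

def frozen_encoder(text):
    """Hypothetical stand-in for a pretrained encoder: maps text to a fixed feature vector.
    It is never updated during fine-tuning."""
    words = text.lower().split()
    # The last entry is a constant that acts as a bias feature.
    return [float("good" in words), float("bad" in words), 1.0]

def fine_tune_head(examples, epochs=200, lr=0.5):
    """Train only a small logistic-regression head on top of the frozen features."""
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for text, label in examples:
            x = frozen_encoder(text)
            p = 1 / (1 + math.exp(-sum(wi * xi for wi, xi in zip(w, x))))
            # Gradient of the log loss with respect to each weight is (p - label) * x_i.
            w = [wi - lr * (p - label) * xi for wi, xi in zip(w, x)]
    return w

def predict(w, text):
    """Return 1 for the positive class, 0 otherwise."""
    x = frozen_encoder(text)
    return int(sum(wi * xi for wi, xi in zip(w, x)) > 0)
```

Only three numbers are trained here, which is why a handful of labeled examples suffices. The same economy is what makes fine-tuning a large pretrained model far cheaper than training one from scratch.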

36. Unlocking Creativity with Transformer Models

Transformer models are not just tools for automation and problem-solving; they can also be used to unlock creativity and generate novel content.

36.1. Text Generation

Transformer models can generate realistic and coherent text, including articles, stories, poems, and scripts.
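Generative transformers produce text one token at a time. The model scores every possible next token, one token is chosen and appended to the input, and the process repeats. The sketch below shows that loop with temperature sampling, a common way to trade predictability for variety. `next_token_logits` is a hypothetical stand-in for a trained model, and the tiny vocabulary in the test is illustrative.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Lower temperature sharpens the distribution; higher temperature flattens it."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(logits, vocab, temperature=1.0, rng=random):
    """Pick the next token by sampling from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

def generate(prompt, next_token_logits, vocab, steps=5, temperature=1.0, seed=None):
    """Autoregressive loop: score the next token, sample it, append it, repeat.
    next_token_logits is a hypothetical callable standing in for a trained model."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(steps):
        logits = next_token_logits(tokens)
        tokens.append(sample_next_token(logits, vocab, temperature, rng))
    return tokens
```

A low temperature makes the output close to always picking the top-scoring token. A higher temperature lets less likely tokens through, which tends to give more varied but less predictable text.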

36.2. Art and Music Creation

Transformer models can be used to create art and music in various styles and genres.

36.3. Idea Generation

Transformer models can assist in idea generation by providing novel perspectives and combinations of concepts.

37. The Democratization of AI with Transformer Models

Transformer models are becoming increasingly accessible to individuals and organizations of all sizes, democratizing the power of AI.

37.1. Open-Source Tools and Libraries

Open-source tools and libraries make it easier to develop and deploy transformer models.

37.2. Cloud-Based AI Platforms

Cloud-based AI platforms provide access to the computing resources and expertise needed to work with transformer models.

37.3. Educational Resources

Educational resources like LEARNS.EDU.VN empower individuals to learn about transformer models and apply them to their own projects.

38. Transformers: Shaping the Future of Technology

Transformer models are not just a passing trend; they are a foundational technology that will shape the future of technology for years to come.

38.1. Continued Innovation

Ongoing research and development will continue to push the boundaries of what’s possible with transformer models.

38.2. Widespread Adoption

Transformer models will become increasingly integrated into various applications and industries.

38.3. Transformative Impact

Transformer models will continue to transform the way we live, work, and interact with technology.

Ready to unlock your potential in the world of transformer models? Visit LEARNS.EDU.VN today and discover the resources and opportunities that await you. Address: 123 Education Way, Learnville, CA 90210, United States. Whatsapp: +1 555-555-1212. Website: LEARNS.EDU.VN.

FAQ: Transformer Models

- What is a transformer model?

A transformer model is a deep-learning neural network architecture that learns context and meaning by tracking relationships in sequential data.

- What are the key advantages of transformer models?

Key advantages include the ability to process data in parallel, handle long-range dependencies, and achieve state-of-the-art results on a range of tasks.

- What are some applications of transformer models?

Applications include machine translation, text summarization, question answering, image recognition, and drug discovery.

- How does the attention mechanism work in transformer models?

The attention mechanism allows the model to focus on the most relevant parts of the input sequence when making predictions.

- What is self-supervised learning, and how does it relate to transformer models?

Self-supervised learning lets AI learn from unlabeled data, and transformers have made it practical at scale.

- How are transformer models being used in healthcare?

They are used to extract insights from medical records, accelerate medical research, and improve patient care.

- What are the ethical considerations surrounding transformer models?

Ethical considerations include bias, misinformation, and privacy.

- How can I learn more about transformer models?

LEARNS.EDU.VN offers courses, tutorials, and resources to help you learn about transformer models.

- What is the future of transformer models?

The future includes larger and more powerful models, more efficient architectures, and a wider range of applications.

- What is transfer learning, and how does it relate to transformer models?

Transfer learning lets transformer models adapt to new tasks with minimal training data by building on pre-trained models.