Here’s a comprehensive guide answering how many data points are needed for machine learning, brought to you by LEARNS.EDU.VN, where we empower your learning journey with expert insights and resources. Discover the factors influencing dataset size and strategies for dealing with data scarcity, ensuring your machine learning models achieve optimal performance and accuracy.

1. What Is The Optimal Number Of Data Points For Machine Learning?

The ideal number of data points for machine learning is not a one-size-fits-all answer; it depends on several factors, but a common starting point is the 10 times rule, suggesting you need at least ten times more data points than the number of parameters in your model. To understand this, let’s break down the factors that influence this number and explore how to determine the optimal dataset size for your specific machine learning project, as LEARNS.EDU.VN emphasizes, quality data is just as crucial as quantity.

- Model Complexity: The more intricate your model, the more data it requires.

- Algorithm Type: Complex algorithms like deep learning demand more data than simpler ones.

- Labeling Needs: The number of labels your algorithm needs to predict affects data requirements.

- Error Tolerance: Projects with low-error tolerance necessitate larger, more accurate datasets.

- Input Diversity: Diverse and unpredictable inputs call for more extensive data.

1.1. Understanding The 10 Times Rule In Detail

The 10 times rule is a heuristic that suggests the number of data points (examples) should be at least ten times the number of degrees of freedom (parameters) in a model. For instance, if a model uses 500 parameters to distinguish between different types of images, at least 5,000 images are needed to train the model effectively. While this rule is a useful starting point, especially for simpler models, it may not hold true for more complex scenarios like deep learning, where the volume and diversity of data play a more significant role.

1.2. Why Data Quality Is As Important As Data Quantity

The old adage “quality over quantity” is particularly relevant in machine learning. An algorithm learns relationships and patterns from the data, and if the data is flawed, biased, or irrelevant, the results will be unreliable, regardless of the size of the dataset. Data quality encompasses several dimensions:

- Accuracy: Data should be free of errors and inconsistencies.

- Completeness: All relevant fields and attributes should be populated.

- Consistency: Data should be uniform across different sources and formats.

- Relevance: Data should be pertinent to the problem being addressed.

- Timeliness: Data should be up-to-date and reflective of the current situation.

1.3. The Pitfalls Of Insufficient Data

Insufficient data can lead to a condition known as underfitting, where the model is too simplistic to capture the underlying patterns in the data. An underfit model will perform poorly on both the training data and new, unseen data. Symptoms of underfitting include high bias and low variance. In other words, the model makes overly simplistic assumptions about the data and fails to generalize well.

2. How Does Model Complexity Influence Data Requirements?

Model complexity significantly influences the amount of data required for effective training. More complex models, characterized by a higher number of parameters, necessitate larger datasets to generalize well and avoid overfitting. LEARNS.EDU.VN understands that a nuanced approach to model complexity and data size is crucial for successful machine learning outcomes.

- Simple Models: Linear regression or logistic regression, require less data because they have fewer parameters to estimate.

- Complex Models: Neural networks, decision trees, and ensemble methods, demand more data due to their intricate architectures and numerous parameters.

2.1. The Relationship Between Model Parameters And Data Points

The number of parameters in a model determines its capacity to learn complex relationships within the data. A model with too few parameters may be unable to capture the underlying patterns, leading to underfitting. Conversely, a model with too many parameters may memorize the training data, including noise and irrelevant details, resulting in overfitting.

2.2. Overfitting Vs. Underfitting: Striking The Right Balance

- Overfitting: Occurs when a model learns the training data too well, capturing noise and irrelevant details that do not generalize to new data. An overfit model will have high variance and low bias.

- Underfitting: Occurs when a model is too simple to capture the underlying patterns in the data. An underfit model will have high bias and low variance.

2.3. Regularization Techniques To Mitigate Overfitting

Regularization techniques are used to prevent overfitting by adding a penalty term to the model’s loss function. These techniques encourage the model to learn simpler, more generalizable patterns. Common regularization methods include:

- L1 Regularization (Lasso): Adds a penalty proportional to the absolute value of the coefficients.

- L2 Regularization (Ridge): Adds a penalty proportional to the square of the coefficients.

- Elastic Net Regularization: Combines L1 and L2 regularization.

- Dropout: Randomly deactivates neurons during training to prevent them from becoming too specialized.

3. Does The Choice Of Algorithm Impact The Number Of Data Points Needed?

Yes, the choice of algorithm significantly impacts the number of data points needed for effective machine learning. Simpler algorithms like linear regression can perform well with relatively small datasets, while more complex algorithms such as deep neural networks typically require vast amounts of data to avoid overfitting and achieve optimal performance. At LEARNS.EDU.VN, we guide you through selecting the right algorithm and dataset size for your specific needs.

- Simple Algorithms: Linear regression, logistic regression, and Naive Bayes classifiers, can often achieve good performance with smaller datasets.

- Complex Algorithms: Deep learning models, random forests, and gradient boosting machines, demand larger datasets to avoid overfitting and to learn intricate patterns in the data.

3.1. Data Efficiency Of Different Machine Learning Algorithms

Some algorithms are inherently more data-efficient than others, meaning they can achieve a certain level of performance with less data. Data efficiency depends on several factors, including the algorithm’s inductive bias, the complexity of the problem, and the quality of the data.

- Linear Models: Exhibit high data efficiency because they make strong assumptions about the linearity of the data.

- Tree-Based Models: Have moderate data efficiency, as they can capture non-linear relationships but are prone to overfitting with small datasets.

- Deep Learning Models: Typically have low data efficiency and require very large datasets to avoid overfitting and to learn meaningful representations.

3.2. When To Use Simple Algorithms With Limited Data

Using simple algorithms with limited data is appropriate when the problem is relatively straightforward, and the data exhibits clear, linear relationships. Simple algorithms are also useful as a baseline model to compare against more complex algorithms.

- Linear Regression: Suitable for predicting a continuous outcome variable based on one or more predictor variables.

- Logistic Regression: Suitable for binary classification problems, where the goal is to predict one of two possible outcomes.

- Naive Bayes: A simple probabilistic classifier that assumes independence between features, making it computationally efficient and suitable for high-dimensional data.

3.3. Leveraging Transfer Learning To Compensate For Data Scarcity

Transfer learning is a technique that involves using a pre-trained model on a related task and fine-tuning it on a new, smaller dataset. This approach can significantly reduce the amount of data needed to train a model effectively, as the pre-trained model has already learned useful features and representations from a large dataset.

4. How Do Labeling Needs Affect The Required Number Of Data Points?

The number of labels or classes an algorithm needs to predict directly impacts the required number of data points. Problems with many classes or complex labeling schemes require substantially more data to train effectively. LEARNS.EDU.VN emphasizes the importance of understanding the relationship between labeling complexity and dataset size to ensure optimal model performance.

- Binary Classification: Sorting cats from dogs requires the algorithm to learn relatively simple internal representations, needing less data.

- Multi-Class Classification: Identifying squares and triangles involves simpler representations, requiring a smaller amount of data.

4.1. The Impact Of Class Imbalance On Data Requirements

Class imbalance occurs when the number of examples in one class is significantly different from the number of examples in other classes. Class imbalance can negatively impact model performance, especially for the minority class. To address class imbalance, several techniques can be used:

- Resampling: Involves either oversampling the minority class or undersampling the majority class.

- Cost-Sensitive Learning: Assigns different misclassification costs to different classes, giving more weight to the minority class.

- Ensemble Methods: Use ensemble methods, such as random forests or gradient boosting, which can handle class imbalance more effectively.

4.2. Strategies For Handling Multi-Class Classification Problems

Multi-class classification problems, where the goal is to assign an example to one of several possible classes, require more data than binary classification problems. To handle multi-class classification problems effectively, several strategies can be used:

- One-Vs-All (OVA): Involves training a separate binary classifier for each class, treating it as the positive class and all other classes as the negative class.

- One-Vs-One (OVO): Involves training a binary classifier for each pair of classes.

- Softmax Regression: A generalization of logistic regression that can handle multi-class classification problems directly.

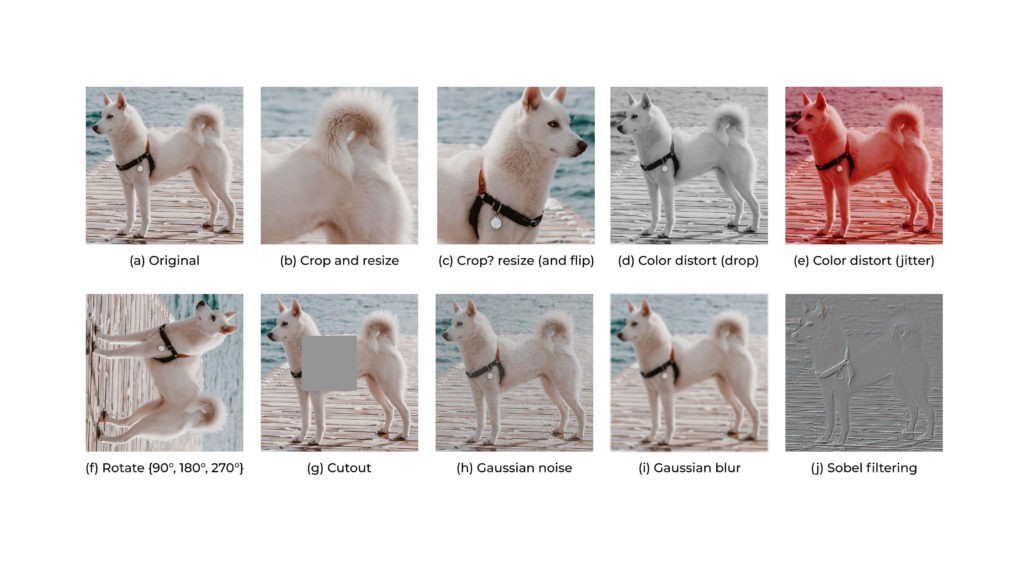

4.3. The Role Of Data Augmentation In Label-Intensive Tasks

Data augmentation is a technique that involves creating new training examples by applying various transformations to existing examples. Data augmentation can be particularly useful in label-intensive tasks, where the goal is to train a model to recognize a large number of different classes or categories.

- Image Augmentation: Includes techniques such as rotation, scaling, cropping, and flipping.

- Text Augmentation: Includes techniques such as synonym replacement, back translation, and random insertion.

- Audio Augmentation: Includes techniques such as time stretching, pitch shifting, and adding noise.

5. How Does Acceptable Error Margin Influence Data Needs?

The acceptable error margin, or the level of accuracy required for a machine learning model, directly affects the amount of data needed. Projects with a low tolerance for errors demand more data to ensure higher accuracy and reliability. LEARNS.EDU.VN recognizes that understanding this relationship is vital for aligning data collection efforts with project goals.

- High-Stakes Applications: Medical diagnoses or financial predictions require extremely high accuracy.

- Low-Stakes Applications: Weather forecasting, can tolerate some level of error.

5.1. Quantifying The Cost Of Errors In Different Applications

The cost of errors varies depending on the application. In high-stakes applications, the cost of errors can be extremely high, potentially leading to significant financial losses, reputational damage, or even loss of life. Quantifying the cost of errors involves assessing the potential impact of incorrect predictions on various stakeholders.

- Medical Diagnoses: A false negative can result in delayed treatment and adverse health outcomes, while a false positive can lead to unnecessary interventions and anxiety.

- Financial Predictions: An inaccurate stock market prediction can lead to significant financial losses for investors.

- Autonomous Vehicles: Errors in object detection or path planning can result in accidents and injuries.

5.2. Achieving Statistical Significance With Larger Datasets

Statistical significance refers to the likelihood that the results of an experiment or study are not due to chance alone. Larger datasets provide more statistical power, making it easier to detect true effects and to distinguish them from random noise.

5.3. Calibration Techniques For Improving Model Confidence

Calibration is the process of aligning a model’s predicted probabilities with the true likelihood of the event occurring. A well-calibrated model will accurately reflect the uncertainty in its predictions.

- Platt Scaling: A simple calibration method that involves training a logistic regression model to map the model’s predicted probabilities to calibrated probabilities.

- Isotonic Regression: A non-parametric calibration method that involves fitting a piecewise constant function to the model’s predicted probabilities.

- Temperature Scaling: A calibration method that involves dividing the model’s logits (the unnormalized output of the final layer) by a temperature parameter.

6. How Does Input Diversity Impact The Size Of Data Sets?

The diversity of input data significantly impacts the size of datasets needed for machine learning. Algorithms trained on diverse data are more robust and generalize better to new, unseen data. LEARNS.EDU.VN highlights that understanding and accounting for input diversity is essential for creating effective and reliable machine learning models.

- Online Virtual Assistant: Should understand the varied ways website visitors ask questions.

- Self-Driving Cars: Must function in diverse and unpredictable driving conditions.

6.1. Addressing Data Bias To Ensure Generalizability

Data bias refers to systematic errors or distortions in the data that can lead to unfair or inaccurate predictions. Data bias can arise from various sources, including biased sampling, biased labeling, and biased feature selection.

- Sampling Bias: Occurs when the data is not representative of the population of interest.

- Labeling Bias: Occurs when the labels are assigned inconsistently or unfairly.

- Feature Selection Bias: Occurs when the features used to train the model are not representative of the underlying phenomenon.

6.2. Techniques For Generating Diverse And Representative Datasets

Generating diverse and representative datasets is crucial for training models that generalize well to new, unseen data. Several techniques can be used to generate diverse and representative datasets:

- Data Augmentation: Involves creating new training examples by applying various transformations to existing examples.

- Active Learning: Involves selectively sampling the most informative examples to label.

- Synthetic Data Generation: Involves creating synthetic data that mimics the characteristics of real-world data.

6.3. The Importance Of Real-World Data Vs. Synthetic Data

Real-world data is data that is collected from actual events or observations. Synthetic data is data that is artificially generated to mimic the characteristics of real-world data. Both real-world data and synthetic data have their advantages and disadvantages:

- Real-World Data: More accurate and representative of the true underlying phenomenon but can be expensive and time-consuming to collect.

- Synthetic Data: Less expensive and easier to generate but may not accurately reflect the characteristics of real-world data.

7. What Are Data Augmentation Techniques And When Should They Be Used?

Data augmentation techniques are methods used to increase the size and diversity of training datasets by creating modified versions of existing data. These techniques help improve the robustness and generalization ability of machine learning models. LEARNS.EDU.VN recognizes data augmentation as a powerful tool for overcoming data limitations and enhancing model performance.

- Image Classification: Enhancing image segmentation to improve training data.

- Natural Language Processing: Employing various text manipulation methods to add versatility to training data.

7.1. Common Image Augmentation Techniques

Image augmentation techniques involve applying various transformations to images, such as:

- Rotation: Rotating the image by a certain angle.

- Scaling: Increasing or decreasing the size of the image.

- Cropping: Selecting a random portion of the image.

- Flipping: Flipping the image horizontally or vertically.

- Color Jittering: Adjusting the brightness, contrast, or saturation of the image.

7.2. Data Augmentation For Natural Language Processing

Data augmentation techniques for NLP involve applying various transformations to text, such as:

- Synonym Replacement: Replacing words with their synonyms.

- Back Translation: Translating the text to another language and then back to the original language.

- Random Insertion: Inserting random words into the text.

- Random Deletion: Deleting random words from the text.

- Shuffle Sentence Orders: Shuffling the order of sentences in the text to receive new samples and exclude the duplicates

7.3. Balancing Data Augmentation With Data Quality

Data augmentation can be a powerful tool for increasing the size and diversity of training datasets, but it’s important to balance data augmentation with data quality. Over-augmenting the data can lead to overfitting and reduced generalization ability.

8. How Can Synthetic Data Generation Help In Machine Learning?

Synthetic data generation involves creating artificial data that mimics the characteristics of real-world data. This technique is particularly useful when real data is scarce, expensive to obtain, or subject to privacy restrictions. LEARNS.EDU.VN understands the strategic importance of synthetic data in expanding training datasets and enabling machine learning in sensitive domains.

- AI-Based Healthcare Solutions: Generating data that respects patient privacy laws.

- Fintech Solutions: Creating data for financial models without exposing real financial records.

8.1. Techniques For Creating Realistic Synthetic Data

Creating realistic synthetic data requires careful consideration of the underlying data distribution and the relationships between variables. Several techniques can be used to create realistic synthetic data:

- Generative Adversarial Networks (GANs): Neural networks that can learn to generate realistic synthetic data.

- Variational Autoencoders (VAEs): Probabilistic models that can learn to generate synthetic data by encoding and decoding real data.

- Statistical Models: Used to simulate data based on pre-existing patterns in the training data.

8.2. Addressing The Synthetic Data Vs. Real Data Issue

One of the main challenges with synthetic data is ensuring that it accurately reflects the characteristics of real-world data. If the synthetic data is not realistic, it can lead to models that perform poorly on real-world data.

8.3. Mitigating Bias In Synthetic Data Generation

Bias in synthetic data can arise from various sources, including biased training data, biased model assumptions, and biased data generation processes. Mitigating bias in synthetic data generation involves carefully considering the potential sources of bias and taking steps to minimize their impact.

9. What Is Transfer Learning And How Does It Reduce Data Requirements?

Transfer learning is a technique that involves using knowledge gained from solving one problem to solve a different but related problem. It is particularly useful when the target task has limited data. LEARNS.EDU.VN emphasizes that transfer learning can significantly reduce the amount of data needed to train effective models by leveraging pre-existing knowledge.

- Image Recognition: Training a model on a large dataset of general images and then fine-tuning it on a smaller dataset of specific images.

- Natural Language Processing: Using pre-trained language models, to build effective NLP applications with limited data.

9.1. Pre-Trained Models And Their Applications

Pre-trained models are machine learning models that have been trained on large datasets and can be used as a starting point for solving new problems. Pre-trained models can significantly reduce the amount of data needed to train effective models and can also improve model performance.

- ImageNet: A large dataset of labeled images that has been used to train many popular pre-trained models for image recognition.

- BERT: A pre-trained language model that has been used to solve a wide range of NLP problems.

9.2. Fine-Tuning Pre-Trained Models For Specific Tasks

Fine-tuning involves taking a pre-trained model and training it further on a smaller dataset that is specific to the target task. Fine-tuning allows the model to adapt its knowledge to the specific characteristics of the target task.

9.3. When Is Transfer Learning Most Effective?

Transfer learning is most effective when the source task and the target task are related, and when the source task has a large amount of data, and the target task has a limited amount of data. Transfer learning can also be effective when the source task is more general than the target task.

10. How Is Data Quality Assured In Healthcare Machine Learning Projects?

Data quality is of utmost importance in healthcare machine learning projects, where errors can have serious consequences. Ensuring data quality involves careful data collection, cleaning, and validation procedures. LEARNS.EDU.VN recognizes the critical need for high-quality data in healthcare to build reliable and trustworthy machine learning models.

- IBM & Merge Healthcare: Securing vast amounts of medical imaging data to enhance research and solutions.

- Roche & Flatiron Health: Driving personalized cancer care through comprehensive data analytics.

10.1. Challenges In Healthcare Data Collection And Accessibility

Collecting and accessing healthcare data can be challenging due to several factors:

- Heterogeneous Data Types: Healthcare data comes in various formats, including laboratory tests, medical images, vital signs, and genomics data.

- Data Accessibility: Medical datasets are often not widely accessible due to privacy concerns and regulatory restrictions.

10.2. Techniques For Data Cleaning And Preprocessing In Healthcare

Data cleaning and preprocessing are essential steps in ensuring data quality in healthcare machine learning projects. These steps involve:

- Missing Value Imputation: Filling in missing values using various techniques, such as mean imputation, median imputation, or k-nearest neighbors imputation.

- Outlier Detection And Removal: Identifying and removing outliers that may be due to errors or anomalies.

- Data Normalization And Standardization: Scaling the data to a common range to prevent features with larger values from dominating the model.

10.3. The Role Of Data Governance In Maintaining Data Integrity

Data governance refers to the policies, procedures, and processes that are used to ensure the quality, integrity, and security of data. Data governance is essential for maintaining data integrity in healthcare machine learning projects.

Conclusion:

Determining the right number of data points for machine learning is a nuanced process that depends on the project’s specifics. At LEARNS.EDU.VN, we’re committed to providing you with the insights and resources needed to navigate these complexities successfully. Whether you’re dealing with model complexity, algorithm selection, or data scarcity, understanding these factors will help you build more effective and reliable machine-learning models.

Ready to dive deeper and unlock the full potential of machine learning? Visit LEARNS.EDU.VN today to explore our comprehensive courses and expert resources.

- Explore: Detailed guides on data augmentation, synthetic data generation, and transfer learning techniques.

- Connect: With our community of learners and experts to gain real-world insights and support.

- Transform: Your skills with our specialized courses tailored to meet the demands of today’s data-driven world.

Don’t let data limitations hold you back. Join LEARNS.EDU.VN and turn your data challenges into opportunities for growth and innovation.

Contact us at: 123 Education Way, Learnville, CA 90210, United States. Whatsapp: +1 555-555-1212. Website: learns.edu.vn

Frequently Asked Questions (FAQ)

1. How many data points are generally considered sufficient for a machine learning project?

There is no fixed number, but the “10 times rule” suggests having at least ten times more data points than the number of parameters in your model as a starting guideline.

2. What factors should I consider when determining the amount of data needed for my machine-learning model?

Consider the complexity of the model, the type of algorithm, the number of labels, the acceptable error margin, and the diversity of the input data.

3. What is data augmentation, and how does it help in machine learning?

Data augmentation is a technique to increase the size of your dataset by creating modified versions of existing data. It helps improve the robustness and generalization of machine learning models.

4. When is it appropriate to use synthetic data in place of real-world data?

Synthetic data is useful when real data is scarce, expensive, or subject to privacy restrictions. It’s commonly used in healthcare and finance.

5. How can transfer learning reduce the amount of data needed for a machine learning task?

Transfer learning allows you to use knowledge gained from a pre-trained model to solve a related task, reducing the amount of data needed to train a new model from scratch.

6. What are some common techniques for ensuring data quality in machine learning projects?

Techniques include missing value imputation, outlier detection, data normalization, and rigorous data governance policies.

7. How does class imbalance affect the performance of a machine learning model, and how can it be addressed?

Class imbalance can lead to poor performance on the minority class. It can be addressed through resampling techniques, cost-sensitive learning, or ensemble methods.

8. What role does input diversity play in the effectiveness of a machine learning model?

Input diversity ensures that the model is trained on a wide range of scenarios, improving its ability to generalize to new, unseen data and reducing bias.

9. What are some common challenges in collecting and accessing healthcare data for machine learning projects?

Challenges include heterogeneous data types, data accessibility restrictions due to privacy concerns, and regulatory compliance issues.

10. How can I calibrate my machine learning model to improve its confidence in predictions?

Calibration techniques include Platt scaling, isotonic regression, and temperature scaling, which align predicted probabilities with the true likelihood of events.