Transfer learning lets us reuse pre-trained models for new tasks, but is transfer learning different from deep learning? Yes. Transfer learning is a technique that reuses knowledge gained on previous tasks to improve performance on new, related tasks, while deep learning is a subset of machine learning that uses artificial neural networks with multiple layers to learn from data. At LEARNS.EDU.VN, we help you explore the nuances of these concepts and build efficient, effective machine learning models. Understanding where the two overlap and where they differ helps you choose a model-building strategy that achieves better results with less data and compute, and it gives you a foundation for related topics such as representation learning, fine-tuning, and domain adaptation.

1. Understanding Transfer Learning

Transfer learning is a powerful technique in machine learning where a model trained on one task is repurposed as a starting point for a model on a second task. This is particularly useful when you have limited data for the second task. Instead of starting from scratch, you leverage the knowledge gained from the first task to accelerate and improve the learning process.

1.1. Definition of Transfer Learning

Transfer learning involves using a pre-trained model, often trained on a large dataset, and applying it to a new, related problem. The idea is that the model has already learned useful features and patterns from the original task, which can be transferred to the new task, saving time and resources.

1.2. How Transfer Learning Works

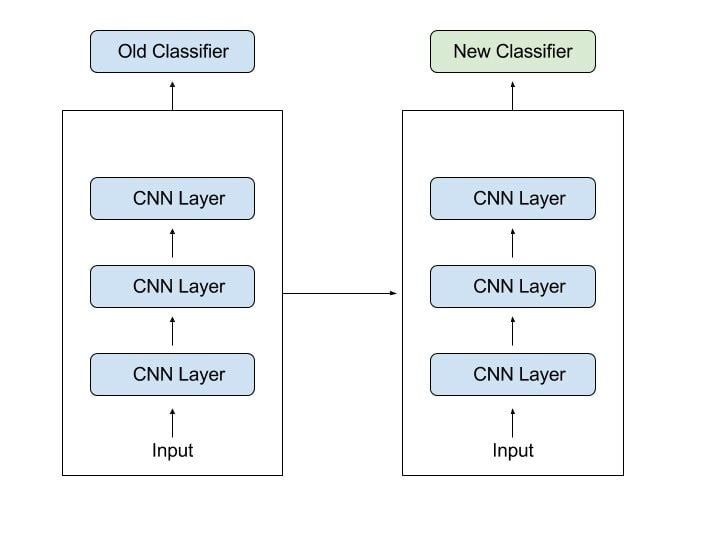

Transfer learning typically involves taking a pre-trained model and adapting it to a new dataset. There are two common ways to do this:

- Feature Extraction: Using the pre-trained model as a feature extractor, where the outputs of intermediate layers are used as inputs to a new classifier.

- Fine-Tuning: Retraining some or all of the layers of the pre-trained model on the new dataset.

The choice of which layers to retrain depends on the similarity between the original and new tasks. If the tasks are very similar, only the final layers might need retraining. If they are different, more layers might need adjustment.
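
The feature-extraction approach can be sketched in a few lines of plain Python. This is a toy illustration rather than a real pipeline: `PRETRAINED_W` is a made-up stand-in for weights learned on a source task, and the four training samples are invented. The point is only that the pre-trained weights stay fixed while a small new classifier is trained on their outputs.

```python
import math

# Hypothetical "pre-trained" weights: a stand-in for layers learned on a source task.
PRETRAINED_W = [[0.5, -0.2], [0.1, 0.8]]

def extract_features(x):
    """Frozen feature extractor: a fixed linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, lr=0.5, epochs=200):
    """Train only a new logistic-regression head on top of the frozen features."""
    head_w, head_b = [0.0] * len(PRETRAINED_W), 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            f = extract_features(x)  # PRETRAINED_W is read, never updated
            p = sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)
            err = p - y  # gradient of the log loss with respect to the logit
            head_w = [w - lr * err * fi for w, fi in zip(head_w, f)]
            head_b -= lr * err
    return head_w, head_b

def predict(x, head_w, head_b):
    f = extract_features(x)
    return sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)

# Invented toy data for the "new task".
samples = [[2.0, 0.0], [3.0, 0.5], [0.0, 2.0], [0.5, 3.0]]
labels = [1, 1, 0, 0]
head_w, head_b = train_head(samples, labels)
```

Fine-tuning differs only in that some or all of the pre-trained weights would also receive gradient updates, usually with a smaller learning rate.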

1.3. Benefits of Transfer Learning

Transfer learning offers several key benefits:

- Reduced Training Time: Leveraging pre-trained models significantly reduces the time required to train a new model.

- Improved Performance: Transfer learning can lead to better performance, especially when the new task has limited data.

- Less Data Required: By using pre-trained models, you can achieve good results with smaller datasets.

1.4. Applications of Transfer Learning

Transfer learning is widely used in various fields:

- Computer Vision: Image recognition, object detection, and image classification.

- Natural Language Processing (NLP): Sentiment analysis, text classification, and machine translation.

- Speech Recognition: Voice recognition and speech synthesis.

2. Deep Learning Explained

Deep learning is a subset of machine learning that uses artificial neural networks with multiple layers to analyze data. These networks, inspired by the structure and function of the human brain, can learn complex patterns and representations from large amounts of data.

2.1. What is Deep Learning?

Deep learning models are composed of multiple layers of interconnected nodes (neurons). Each layer transforms the input data, extracting higher-level features that are used by subsequent layers. This hierarchical learning process allows deep learning models to understand complex relationships in the data.

2.2. Deep Learning Architectures

Several deep learning architectures are commonly used:

- Convolutional Neural Networks (CNNs): Used primarily for image and video processing.

- Recurrent Neural Networks (RNNs): Used for sequential data, such as text and time series.

- Transformers: Used for NLP tasks, like translation and text generation.

- Autoencoders: Used for unsupervised learning and dimensionality reduction.

- Generative Adversarial Networks (GANs): Used for generating new data instances.

2.3. How Deep Learning Works

Deep learning models are typically trained with gradient descent, using backpropagation to compute the gradients. The model makes predictions, measures the error between the predictions and the actual values with a loss function, and backpropagation works out how much each weight contributed to that error. The weights of the connections between neurons are then adjusted to reduce the error. This process is repeated iteratively until the model reaches the desired level of accuracy or stops improving.
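
As a minimal sketch of this predict, measure, adjust loop, the example below fits a single "neuron" with one weight and one bias to invented data. The gradients are written out by hand with the chain rule; in a real deep network, a framework's backpropagation computes the same kind of gradients automatically for every layer. The learning rate and epoch count are arbitrary choices for this toy problem.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: predictions and per-sample errors.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Backward pass: the chain rule gives d(loss)/dw and d(loss)/db.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Update step: move each parameter against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Invented data generated from y = 2x + 1.
w, b = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
print(w, b)  # close to 2.0 and 1.0
```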

2.4. Advantages of Deep Learning

Deep learning offers several advantages:

- Automatic Feature Extraction: Deep learning models can automatically learn relevant features from raw data, reducing the need for manual feature engineering.

- High Accuracy: Deep learning models can achieve state-of-the-art accuracy on many tasks.

- Complex Pattern Recognition: Deep learning models can capture complex patterns and relationships in the data.

2.5. Deep Learning Use Cases

Deep learning is used in many applications:

- Image Recognition: Identifying objects and faces in images.

- Natural Language Processing: Understanding and generating human language.

- Speech Recognition: Converting speech to text.

- Recommendation Systems: Suggesting products or content to users.

- Autonomous Vehicles: Enabling self-driving cars to perceive their environment.

3. Key Differences Between Transfer Learning and Deep Learning

While transfer learning and deep learning are related, they are distinct concepts with different goals and applications. Understanding their differences is crucial for effectively applying them in machine learning projects.

3.1. Goal and Purpose

- Transfer Learning: Aims to leverage knowledge gained from one task to improve performance on another related task. It focuses on reusing pre-trained models to save time and resources.

- Deep Learning: Aims to build complex models that can learn intricate patterns from data. It focuses on creating neural networks with multiple layers to achieve high accuracy.

3.2. Data Requirements

- Transfer Learning: Requires less data for the new task because it starts with a pre-trained model that already has learned useful features.

- Deep Learning: Typically requires large amounts of data to train models from scratch.

3.3. Training Approach

- Transfer Learning: Involves fine-tuning a pre-trained model on a new dataset. This can be done by retraining some or all of the layers of the model.

- Deep Learning: When used without transfer learning, involves training a model from scratch with randomly initialized weights, which requires more computational resources and time.

3.4. Model Complexity

- Transfer Learning: The part you train can be simple, often just a small new output layer, because the pre-trained model already supplies the learned features.

- Deep Learning: Often involves complex models with many layers and parameters to capture intricate patterns.

3.5. Applications

- Transfer Learning: Well-suited for tasks where data is limited and a pre-trained model is available.

- Deep Learning: Well-suited for tasks where large amounts of data are available and high accuracy is required.

Here’s a table summarizing the key differences:

| Feature | Transfer Learning | Deep Learning |
|---|---|---|
| Goal | Reuse knowledge for new tasks | Build complex models for high accuracy |
| Data Requirement | Less data | Large amounts of data |
| Training Approach | Fine-tuning pre-trained models | Training models from scratch |
| Model Complexity | Can work with simpler models | Often involves complex models |
| Applications | Limited data, pre-trained models available | Large amounts of data, high accuracy required |

3.6. Overlapping Areas

Transfer learning often utilizes deep learning models as the base for transferring knowledge. Deep learning provides the architectures (CNNs, RNNs, Transformers) that are then adapted for new tasks using transfer learning techniques.

4. When to Use Transfer Learning

Transfer learning is not always the best approach. It’s important to understand when it is most effective and when other techniques might be more appropriate.

4.1. Scenarios Favoring Transfer Learning

- Limited Data: When you have a small dataset for your new task, transfer learning can help you achieve better results by leveraging knowledge from a pre-trained model.

- Similar Tasks: When the new task is related to the task on which the pre-trained model was trained, transfer learning can significantly reduce training time and improve performance.

- Resource Constraints: When you have limited computational resources, transfer learning can help you train models more efficiently by starting with a pre-trained model.

4.2. Conditions for Effective Transfer Learning

- Pre-Trained Model Availability: A suitable pre-trained model must be available for the task. This model should have been trained on a related task with a large dataset.

- Feature Compatibility: The features learned by the pre-trained model should be relevant to the new task.

- Appropriate Fine-Tuning: The fine-tuning process should be carefully managed to avoid overfitting or underfitting the new data.

4.3. Situations Where Transfer Learning May Not Be Ideal

- Dissimilar Tasks: When the new task is very different from the task on which the pre-trained model was trained, transfer learning may not be effective.

- High Data Availability: When you have a large dataset for the new task, training a model from scratch may yield better results.

- Domain Mismatch: When the domain of the new task is significantly different from the domain of the pre-trained model, transfer learning may not be beneficial.

4.4. Practical Examples

- Medical Imaging: Using a model pre-trained on general images to classify medical images with limited labeled data.

- Sentiment Analysis: Using a model pre-trained on a large text corpus to analyze sentiment in a specific domain with limited data.

- Object Detection: Using a model pre-trained on a large object detection dataset to detect objects in a new scene with limited data.

5. Popular Pre-Trained Models for Transfer Learning

Many pre-trained models are available for transfer learning, each with its strengths and weaknesses. Choosing the right model can significantly impact the performance of your task.

5.1. ImageNet Models

ImageNet is a large dataset of labeled images, and models trained on ImageNet are widely used for transfer learning in computer vision.

- VGGNet: A deep convolutional neural network known for its simplicity and effectiveness.

- ResNet: A residual network that addresses the vanishing gradient problem, allowing for very deep networks.

- Inception: A network with a unique architecture that uses multiple filter sizes to capture different features.

- EfficientNet: A model designed for efficiency, achieving high accuracy with fewer parameters.

5.2. NLP Models

Several pre-trained models are available for NLP tasks, trained on large text corpora.

- BERT (Bidirectional Encoder Representations from Transformers): A transformer-based model that captures contextual information from text.

- GPT (Generative Pre-trained Transformer): A transformer-based model that generates human-like text.

- RoBERTa (Robustly Optimized BERT Approach): An optimized version of BERT that achieves better performance.

- Word2Vec and GloVe: Models that learn word embeddings, capturing semantic relationships between words.
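
To make "capturing semantic relationships" concrete: with word embeddings, related words end up with vectors that point in similar directions, which is usually measured with cosine similarity. The sketch below uses three hand-made, three-dimensional vectors purely for illustration. They are not taken from Word2Vec or GloVe, whose vectors typically have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings, invented for this example.
embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.9, 0.15],
    "apple": [0.1, 0.2, 0.95],
}

related = cosine_similarity(embeddings["king"], embeddings["queen"])
unrelated = cosine_similarity(embeddings["king"], embeddings["apple"])
print(related > unrelated)  # True for these toy vectors
```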

5.3. Choosing the Right Pre-Trained Model

When choosing a pre-trained model, consider the following factors:

- Task Similarity: Choose a model trained on a task similar to your new task.

- Data Availability: Consider the amount of data you have for the new task.

- Computational Resources: Choose a model that fits your computational resources.

- Model Performance: Evaluate the performance of different models on your task.

6. How to Implement Transfer Learning

Implementing transfer learning involves several steps, from selecting a pre-trained model to fine-tuning it on your new dataset.

6.1. Step-by-Step Guide

- Choose a Pre-Trained Model: Select a model that is appropriate for your task and data.

- Load the Pre-Trained Model: Load the model into your machine learning framework (e.g., TensorFlow, PyTorch).

- Freeze Layers: Freeze the layers of the pre-trained model that you don’t want to retrain.

- Add New Layers: Add new layers to the model to adapt it to your new task.

- Train the Model: Train the model on your new dataset, fine-tuning the trainable layers.

- Evaluate the Model: Evaluate the performance of the model on a validation set.

- Tune Hyperparameters: Adjust the hyperparameters of the model to optimize its performance.
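
The freeze, add, and train steps above can be sketched without committing to a particular framework. Everything here is hypothetical: the `Layer` class, the layer names, the weights, and the gradients are invented to show the bookkeeping. In TensorFlow or PyTorch, the framework tracks which parameters are trainable and computes the gradients for you.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    name: str
    weights: list
    trainable: bool = True

def freeze(layers, names):
    """Mark the named layers as frozen so training leaves them untouched."""
    for layer in layers:
        if layer.name in names:
            layer.trainable = False

def apply_gradients(layers, grads, lr=0.1):
    """One SGD step that updates only layers still marked trainable."""
    for layer in layers:
        if layer.trainable:
            layer.weights = [w - lr * g for w, g in zip(layer.weights, grads[layer.name])]

# "Load" a pre-trained model (made-up weights standing in for a real checkpoint).
model = [Layer("conv1", [0.3, -0.1]), Layer("conv2", [0.7, 0.2])]
# Freeze the pre-trained layers.
freeze(model, {"conv1", "conv2"})
# Add a new, trainable layer for the new task.
model.append(Layer("new_head", [0.0, 0.0]))
# One training step with made-up gradients: only new_head changes.
grads = {"conv1": [1.0, 1.0], "conv2": [1.0, 1.0], "new_head": [0.5, -0.5]}
apply_gradients(model, grads)
```

After the step, `conv1` and `conv2` still hold their original weights, while `new_head` has moved against its gradient.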

6.2. Fine-Tuning Strategies

- Full Fine-Tuning: Retrain all layers of the pre-trained model on the new dataset. This can be effective when the new task is very different from the original task.

- Partial Fine-Tuning: Retrain only some layers of the pre-trained model on the new dataset. This can be effective when the new task is similar to the original task.

- Feature Extraction: Use the pre-trained model as a feature extractor, where the outputs of intermediate layers are used as inputs to a new classifier.
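
One way to think about these three strategies is as different answers to the question "which pre-trained layers receive updates?". The helper below is an illustrative sketch, with a hypothetical function name and made-up layer names, that returns the layers to retrain for each strategy.

```python
def layers_to_retrain(layer_names, strategy, last_n=1):
    """Return the pre-trained layers that should be retrained.

    layer_names is ordered from the input side to the output side.
    """
    if strategy == "full":
        return list(layer_names)
    if strategy == "partial":
        # Retrain only the last_n layers, which hold the most task-specific features.
        start = max(0, len(layer_names) - last_n)
        return list(layer_names[start:])
    if strategy == "feature_extraction":
        # No pre-trained layer is retrained; only a new classifier is trained.
        return []
    raise ValueError(f"unknown strategy: {strategy}")

names = ["block1", "block2", "block3", "block4"]
print(layers_to_retrain(names, "partial", last_n=2))  # ['block3', 'block4']
```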

6.3. Avoiding Overfitting

Overfitting can be a problem when fine-tuning pre-trained models, especially when the new dataset is small. To avoid overfitting, use the following techniques:

- Data Augmentation: Increase the size of your dataset by applying transformations to the existing data.

- Regularization: Add regularization terms to the loss function to penalize complex models.

- Dropout: Randomly drop out neurons during training to prevent the model from memorizing the training data.

- Early Stopping: Monitor the performance of the model on a validation set and stop training when the performance starts to degrade.
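
Early stopping is the easiest of these to show in code. The sketch below assumes you already have one validation loss per epoch. The `patience` parameter, a common convention, is the number of epochs without improvement you are willing to tolerate, and the loss values are invented.

```python
def early_stopping(val_losses, patience=2):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch  # stop and keep the best checkpoint
    return len(val_losses) - 1, best_epoch

# Validation loss improves for three epochs, then starts to degrade.
print(early_stopping([0.9, 0.7, 0.6, 0.62, 0.65, 0.5], patience=2))  # (4, 2)
```

Training stops at epoch 4, and the weights saved at epoch 2 are the ones you would keep. The later value of 0.5 is never seen, which is the trade-off a small patience makes.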

6.4. Tools and Libraries

Several tools and libraries support transfer learning:

- TensorFlow: A popular machine learning framework developed by Google.

- PyTorch: A flexible and easy-to-use machine learning framework originally developed by Facebook (now Meta).

- Keras: A high-level API for building neural networks that runs on top of TensorFlow and other frameworks.

- Transformers Library: A library developed by Hugging Face that provides pre-trained models for NLP tasks.

7. Real-World Examples of Transfer Learning Success

Transfer learning has been successfully applied in various domains, demonstrating its versatility and effectiveness.

7.1. Computer Vision Applications

- Medical Image Analysis: Transfer learning has been used to improve the accuracy of medical image analysis, such as detecting diseases in X-rays and MRIs. According to a study by the National Institutes of Health, transfer learning improved the accuracy of detecting lung nodules in CT scans by 15%.

- Autonomous Vehicles: Transfer learning has been used to train autonomous vehicles to recognize objects and navigate roads. Tesla, for example, uses transfer learning to continuously improve its autopilot system.

- Facial Recognition: Transfer learning has been used to improve the accuracy of facial recognition systems, such as those used in security and surveillance.

7.2. Natural Language Processing Applications

- Sentiment Analysis: Transfer learning has been used to improve the accuracy of sentiment analysis, such as determining the sentiment of customer reviews and social media posts. A study by Stanford University showed that transfer learning improved the accuracy of sentiment analysis on Twitter data by 10%.

- Machine Translation: Transfer learning has been used to improve the accuracy of machine translation, such as translating text from one language to another. Google Translate uses transfer learning to support multiple languages and improve translation quality.

- Chatbots: Transfer learning has been used to train chatbots to understand and respond to human language. Many customer service chatbots use transfer learning to provide more accurate and helpful responses.

7.3. Other Domains

- Speech Recognition: Transfer learning has been used to improve the accuracy of speech recognition systems, such as those used in virtual assistants. Amazon Alexa uses transfer learning to improve its speech recognition capabilities.

- Recommendation Systems: Transfer learning has been used to improve the accuracy of recommendation systems, such as those used in e-commerce and entertainment. Netflix uses transfer learning to provide more personalized recommendations to its users.

- Fraud Detection: Transfer learning has been used to improve the accuracy of fraud detection systems, such as those used in banking and finance. Many financial institutions use transfer learning to detect fraudulent transactions.

8. Challenges and Limitations of Transfer Learning

While transfer learning offers many benefits, it also has challenges and limitations that need to be considered.

8.1. Negative Transfer

Negative transfer occurs when the knowledge transferred from the pre-trained model hurts the performance of the new task. This can happen when the tasks are too dissimilar or when the pre-trained model has learned irrelevant features.

8.2. Domain Adaptation

Domain adaptation is the challenge of adapting a model trained on one domain to perform well on another domain. This can be difficult when the domains have different characteristics, such as different data distributions or feature spaces.

8.3. Catastrophic Forgetting

Catastrophic forgetting occurs when training on the new task overwrites the knowledge the model learned during pre-training. This is most likely when the new task is very different from the pre-trained task.

8.4. Ethical Considerations

Transfer learning can raise ethical considerations, such as the potential for bias in pre-trained models to be transferred to new tasks. It’s important to carefully evaluate pre-trained models for bias and to take steps to mitigate any potential negative impacts.

8.5. Addressing the Challenges

- Careful Model Selection: Choose pre-trained models that are appropriate for your task and data.

- Domain Adaptation Techniques: Use domain adaptation techniques to align the feature spaces of the pre-trained and new tasks.

- Regularization: Use regularization techniques to prevent overfitting and catastrophic forgetting.

- Bias Mitigation: Evaluate pre-trained models for bias and take steps to mitigate any potential negative impacts.
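
As one concrete example of regularization against forgetting, a common idea is to penalize the fine-tuned weights for drifting away from their pre-trained values, an approach sometimes called L2-SP. The sketch below shows only the penalty term. The function names, the `strength` value, and the weights are illustrative assumptions, and in practice the penalty is added to the task loss inside the training loop.

```python
def drift_penalty(weights, pretrained_weights, strength=0.01):
    """L2 penalty on the distance between current and pre-trained weights."""
    squared_distance = sum((w - w0) ** 2 for w, w0 in zip(weights, pretrained_weights))
    return 0.5 * strength * squared_distance

def penalized_loss(task_loss, weights, pretrained_weights, strength=0.01):
    """Task loss plus the penalty for moving away from the pre-trained weights."""
    return task_loss + drift_penalty(weights, pretrained_weights, strength)

pretrained = [1.0, 1.0]
print(drift_penalty([1.0, 1.0], pretrained))                # 0.0, no drift
print(drift_penalty([1.0, 3.0], pretrained, strength=0.5))  # 1.0
```

A larger `strength` keeps the model closer to what it learned during pre-training, at the cost of adapting less to the new task.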

9. Future Trends in Transfer Learning

Transfer learning is a rapidly evolving field, with many exciting developments on the horizon.

9.1. Meta-Learning

Meta-learning, or learning to learn, is a technique that allows models to quickly adapt to new tasks with limited data. Meta-learning can be combined with transfer learning to create models that are even more versatile and efficient.

9.2. Self-Supervised Learning

Self-supervised learning is a technique that allows models to learn from unlabeled data. Self-supervised learning can be used to pre-train models that can then be fine-tuned on labeled data, reducing the need for large labeled datasets.
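
The key trick is that the training labels come from the data itself. A simplified sketch of one such pretext task, masked word prediction, is shown below. It masks every position in turn, whereas models such as BERT mask only a random subset of tokens. The function name and the `[MASK]` placeholder handling are illustrative.

```python
def masked_pairs(sentence, mask_token="[MASK]"):
    """Turn one unlabeled sentence into (masked input, target word) training pairs."""
    tokens = sentence.split()
    pairs = []
    for i, target in enumerate(tokens):
        masked = tokens[:i] + [mask_token] + tokens[i + 1:]
        pairs.append((" ".join(masked), target))
    return pairs

for masked_input, target in masked_pairs("transfer learning works"):
    print(masked_input, "->", target)
# [MASK] learning works -> transfer
# transfer [MASK] works -> learning
# transfer learning [MASK] -> works
```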

9.3. Continual Learning

Continual learning is a technique that allows models to continuously learn from new data without forgetting what they have learned before. Continual learning is important for applications where models need to adapt to changing environments over time.

9.4. Explainable AI (XAI)

Explainable AI is a field that aims to make AI models more transparent and understandable. Explainable AI techniques can be used to understand how transfer learning models work and to identify potential biases.

9.5. Applications in New Domains

Transfer learning is being applied in new domains, such as healthcare, finance, and education. As more data becomes available and new techniques are developed, transfer learning will likely play an even greater role in these and other domains.

10. FAQs About Transfer Learning and Deep Learning

Here are some frequently asked questions about transfer learning and deep learning:

10.1. What is transfer learning?

Transfer learning is a machine learning technique where a model trained on one task is reused as a starting point for a model on a second task. This allows the model to build on its previous knowledge, saving time and resources.

10.2. What is deep learning?

Deep learning is a subset of machine learning that uses artificial neural networks with multiple layers to analyze data. These networks can learn complex patterns and representations from large amounts of data.

10.3. Is transfer learning different than deep learning?

Yes, transfer learning is a technique that leverages knowledge gained from previous tasks, while deep learning is a type of machine learning that uses artificial neural networks with multiple layers. Transfer learning often utilizes deep learning models as the base for transferring knowledge.

10.4. When should I use transfer learning?

Use transfer learning when you have limited data for your new task, when the new task is related to the task on which the pre-trained model was trained, or when you have limited computational resources.

10.5. What are some popular pre-trained models for transfer learning?

Popular pre-trained models include VGGNet, ResNet, Inception, BERT, GPT, and Word2Vec.

10.6. How do I implement transfer learning?

Implement transfer learning by choosing a pre-trained model, loading it into your machine learning framework, freezing layers, adding new layers, and training the model on your new dataset.

10.7. What are some challenges of transfer learning?

Challenges of transfer learning include negative transfer, domain adaptation, and catastrophic forgetting.

10.8. What are some future trends in transfer learning?

Future trends in transfer learning include meta-learning, self-supervised learning, continual learning, and explainable AI.

10.9. Can transfer learning be used with any type of machine learning model?

While transfer learning is most commonly associated with deep learning models, it can be used with other types of machine learning models as well.

10.10. Where can I learn more about transfer learning and deep learning?

You can learn more about transfer learning and deep learning at LEARNS.EDU.VN, where we offer a variety of resources, including articles, tutorials, and courses.

Transfer learning and deep learning are powerful tools that can help you solve a wide range of machine learning problems. By understanding their differences and how to use them effectively, you can build models that are more accurate, efficient, and versatile.

Ready to dive deeper into the world of transfer learning and deep learning? Visit LEARNS.EDU.VN today to explore our comprehensive resources and unlock your potential in machine learning. Whether you’re looking to master the basics or tackle advanced topics, our expert-led courses and tutorials will guide you every step of the way.

For more information, contact us at:

- Address: 123 Education Way, Learnville, CA 90210, United States

- WhatsApp: +1 555-555-1212

- Website: LEARNS.EDU.VN

Start your learning journey with LEARNS.EDU.VN and transform your understanding of machine learning today!