Unlock the power of data-driven decision-making with A/B testing for machine learning. This guide explores the concepts, design principles, and practical applications of A/B testing so that you can optimize your machine learning models and business strategies. At LEARNS.EDU.VN, we are dedicated to offering accessible and insightful educational content, ensuring you gain a comprehensive understanding of A/B testing and its transformative potential.

1. Understanding A/B Testing in Machine Learning

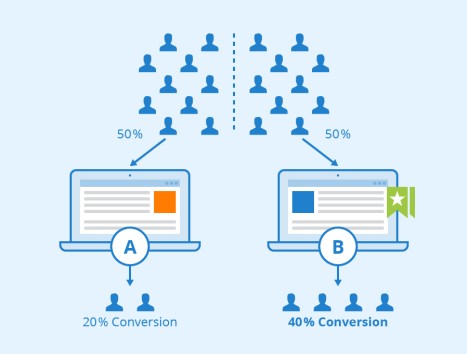

A/B testing, also known as split testing or bucket testing, is a powerful method for comparing two versions of something to determine which performs better. In machine learning, A/B testing is used to compare the performance of different models, algorithms, or features in a real-world setting. It is also a core statistical method in conversion rate optimization.

1.1. What is A/B Testing?

A/B testing is a randomized experiment with two or more variants, most commonly A (control) and B (treatment). Users are randomly assigned to one of the variants, and their interaction with each variant is measured. The goal is to determine which variant performs better based on a predefined metric. This method reduces reliance on guesswork, promotes experimentation, and accelerates the pace of improvement.

1.2. Why is A/B Testing Important in Machine Learning?

A/B testing is crucial in machine learning for several reasons:

- Data-Driven Decisions: It allows for objective, data-driven decisions regarding model selection and optimization.

- Real-World Performance: It evaluates models in a real-world environment, providing insights into how they perform with actual users.

- Continuous Improvement: It enables continuous improvement by identifying areas for optimization and refinement.

- Risk Mitigation: It reduces the risk of deploying a poorly performing model by validating its performance before a full-scale rollout.

- Resource Optimization: It ensures that resources are allocated to the most effective models, maximizing ROI.

1.3. Historical Context and Evolution

The concept of A/B testing dates back to the early 20th century, with applications in agriculture and marketing. Farmers would divide their fields into sections to test different treatments and improve crop yields. In the digital age, A/B testing gained prominence with the rise of the internet and e-commerce. Companies like Google and Amazon embraced A/B testing to optimize their websites and improve user experience. In recent years, A/B testing has expanded into machine learning, where it is used to compare the performance of different models and algorithms.

1.4. Key Terminology

- Control Group (A): The existing version or baseline.

- Treatment Group (B): The new version or variation being tested.

- Overall Evaluation Criterion (OEC): The primary metric used to evaluate the performance of each variant.

- Significance Level (α): The probability of rejecting the null hypothesis when it is true (false positive rate).

- Power (1 − β): The probability of rejecting the null hypothesis when it is false (true positive rate). β itself is the false negative rate.

- Minimum Detectable Effect (δ): The smallest difference in the OEC that the test is designed to detect.

- Sample Size (n): The number of observations needed per variant to detect the MDE at the chosen significance level and power.

2. Designing an Effective A/B Test

Designing an effective A/B test requires careful planning and consideration of several factors. Here’s a step-by-step guide to designing a robust A/B test for machine learning.

2.1. Defining the Objective and Hypothesis

The first step in designing an A/B test is to define a clear objective and hypothesis. The objective should be specific, measurable, achievable, relevant, and time-bound (SMART). The hypothesis should be a testable statement about the expected outcome of the experiment.

- Objective Example: Increase the conversion rate of the website from 2% to 3% within one month.

- Hypothesis Example: Implementing a new recommendation algorithm will increase the click-through rate by 10%.

2.2. Selecting the Overall Evaluation Criterion (OEC)

The OEC is the primary metric used to evaluate the performance of each variant. It should be aligned with the objective and reflect the desired outcome of the experiment. Common OECs include:

- Conversion Rate: The percentage of users who complete a desired action (e.g., purchase, sign-up).

- Click-Through Rate (CTR): The percentage of users who click on a specific link or button.

- Revenue: The total amount of money generated.

- Engagement: The level of user interaction with the product or service.

- Retention Rate: The percentage of users who continue to use the product or service over time.

- Customer Satisfaction: A measure of how satisfied customers are with the product or service, often measured through surveys or feedback forms.

- Error Rate: The percentage of errors or failures that occur.

The OEC should be chosen carefully, as it will guide the decision-making process.

2.3. Determining the Minimum Detectable Effect (MDE)

The MDE is the smallest difference in the OEC that the test is designed to detect. It should be based on business considerations and the practical significance of the difference.

- Example: If the current conversion rate is 2%, an increase of 1 percentage point (from 2% to 3%) may be considered the MDE.

The MDE should be determined before the experiment to ensure that the sample size is sufficient to detect the desired difference.

2.4. Setting the Significance Level (α) and Power (1 − β)

The significance level (α) and power (1 − β) are statistical parameters that determine how much confidence you can place in the results of the experiment. The significance level is the probability of rejecting the null hypothesis when it is true (false positive rate), while the power is the probability of rejecting the null hypothesis when it is false (true positive rate); β is the corresponding false negative rate.

- Common Values: α = 0.05 and power = 0.8 (that is, β = 0.2)

The significance level and power should be set based on the risk tolerance of the organization.

2.5. Calculating the Sample Size (n)

The sample size is the number of observations needed per variant to detect the MDE at the chosen significance level and power. It therefore depends on all three: a smaller α, higher power, or a smaller MDE each require a larger sample. There are several online calculators and statistical tools that can be used to calculate the sample size.

- Example: Using a sample size calculator, it may be determined that 1,000 observations are needed per variant to achieve statistical significance.
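As a rough illustration of what such calculators compute, here is a minimal Python sketch of the common normal-approximation formula for comparing two conversion rates. It assumes a two-sided test with equal group sizes; the function name `sample_size_per_variant` is hypothetical, and real calculators may use slightly different approximations.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control, p_treatment, alpha=0.05, power=0.8):
    """Approximate observations needed per variant for a two-sided
    two-proportion z-test (normal approximation, equal group sizes)."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_power = z.inv_cdf(power)          # quantile for the desired power (1 - beta)
    p_bar = (p_control + p_treatment) / 2
    # Standard deviation terms under the null (pooled rate) and the alternative
    sd_null = math.sqrt(2 * p_bar * (1 - p_bar))
    sd_alt = math.sqrt(p_control * (1 - p_control) + p_treatment * (1 - p_treatment))
    delta = abs(p_treatment - p_control)  # absolute minimum detectable effect
    n = ((z_alpha * sd_null + z_power * sd_alt) / delta) ** 2
    return math.ceil(n)  # round up: partial observations don't exist


# Objective from Section 2.1: raise the conversion rate from 2% to 3%
print(sample_size_per_variant(0.02, 0.03))
```

For the 2% to 3% objective this gives a little over 3,800 observations per variant, so the figures in any calculator example depend heavily on the baseline rate and MDE you plug in.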

2.6. Randomly Assigning Subjects

Random assignment is a critical component of A/B testing. Subjects should be randomly assigned to either the control group (A) or the treatment group (B) to ensure that the groups are comparable. Random assignment minimizes the risk of bias so that, apart from random chance, any observed differences can be attributed to the treatment being tested.

2.7. Running the Experiment

Once the sample size has been determined and subjects have been randomly assigned, the experiment can be run. It is important to monitor the experiment closely and ensure that data is being collected accurately. The experiment should be run for a sufficient period to capture any seasonal or temporal effects.
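Turning a sample size into a run time is simple arithmetic. The sketch below assumes a hypothetical daily volume of eligible users and rounds the duration up to whole weeks, a common heuristic (an assumption here, not a rule from this guide) for making sure every day of the week is equally represented.

```python
import math


def experiment_duration_days(n_per_variant, n_variants, daily_eligible_users):
    """Days needed to collect the planned sample, rounded up to whole
    weeks so weekday and weekend behavior are both fully covered."""
    total_needed = n_per_variant * n_variants
    days = math.ceil(total_needed / daily_eligible_users)
    return math.ceil(days / 7) * 7  # round up to complete weekly cycles


# Hypothetical inputs: 3,800 users per variant, two variants, 1,000 users per day
print(experiment_duration_days(3800, 2, 1000))
```

Here 7,600 users at 1,000 per day need 8 days, which rounds up to 14 days (two full weeks).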

2.8. Analyzing the Results

After the experiment has been run, the results should be analyzed to determine whether there is a statistically significant difference between the control group and the treatment group. Statistical tests, such as t-tests or chi-square tests, can be used to determine the significance of the results.

- Example: A t-test may be used to compare the means of the two groups.

- Statistical Significance: If the p-value is less than the significance level (α), the results are considered statistically significant.
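For conversion-style OECs, a common choice is the two-proportion z-test, which for a 2×2 table is equivalent to the chi-square test without continuity correction. The minimal sketch below uses only the Python standard library; the function name and the conversion counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.
    Returns (z statistic, p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)  # pooled rate under H0: p_a == p_b
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided tail probability
    return z, p_value


alpha = 0.05
# Hypothetical results: 80/4000 conversions in control, 120/4000 in treatment
z, p = two_proportion_z_test(80, 4000, 120, 4000)
print(f"z = {z:.2f}, p = {p:.4f}, significant: {p < alpha}")
```

With these made-up counts (2% versus 3%), z is about 2.86 and p is well below 0.05.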

2.9. Implementing the Winning Variant

If the treatment group performs significantly better than the control group, the winning variant should be implemented. The results of the experiment should be communicated to stakeholders, and the changes should be documented.

2.10. Documenting and Sharing the Findings

Documenting and sharing the findings of the A/B test is essential for knowledge sharing and continuous improvement. The documentation should include the objective, hypothesis, OEC, MDE, significance level, power, sample size, results, and conclusions. The findings should be shared with stakeholders to inform future decisions.

3. Practical Considerations for A/B Testing

While A/B testing is a powerful tool, there are several practical considerations that should be taken into account to ensure the validity and reliability of the results.

3.1. Splitting Subjects and Avoiding Bias

When splitting subjects between models, ensure the process is truly random. Any bias in group assignments can invalidate the results. Also, ensure the assignment is consistent, so each subject always gets the same treatment.

- Example: A specific customer should not get different prices every time they reload the pricing page.

- Randomization Method: Evaluate if the randomization method causes an unintended bias.
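One common way to get assignments that are both random-looking and consistent is to hash a stable user ID. The sketch below is an assumption, not a method this guide prescribes: it hashes the (hypothetical) experiment name together with the user ID using SHA-256 and maps the result to a bucket in [0, 1).

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically assign a user to 'A' (control) or 'B' (treatment).
    Hashing the experiment name with the user ID keeps each user's
    assignment stable across requests while giving every experiment
    its own independent split."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes of the hash as a number in [0, 1)
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "B" if bucket < treatment_share else "A"


# The same customer gets the same variant (e.g. the same price) on every reload
print(assign_variant("customer-42", "pricing-test"), assign_variant("customer-42", "pricing-test"))
```

Because no state is stored, any server can compute the assignment; the trade-off is that changing `treatment_share` mid-experiment moves some users between groups.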

3.2. Running A/A Tests

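Run an A/A test, in which both groups receive the same variant (both control or both treatment). Because any difference between the groups can then come only from chance or from flaws in the setup, this can surface unintentional biases or errors in processing and give a better sense of how much random variation alone can move intermediate results.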

- Purpose: Identify biases or errors in processing.

- Insight: Understand how random variations can affect results.
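The sketch below simulates many A/A tests in which both groups share the same true conversion rate. If assignment and analysis are sound, roughly a fraction α of them should come out "significant" purely by chance; a real A/A rate far from α hints at a bug in assignment or logging. The function names, conversion rate, and group sizes are hypothetical.

```python
import math
import random
from statistics import NormalDist


def z_test_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a two-proportion z-test."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all: no evidence of a difference
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(n_tests=400, n_per_group=1000, true_rate=0.1, alpha=0.05, seed=1):
    """Run simulated A/A tests (identical true rates in both groups) and
    return the fraction flagged as statistically significant."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(n_tests):
        conv_a = sum(rng.random() < true_rate for _ in range(n_per_group))
        conv_b = sum(rng.random() < true_rate for _ in range(n_per_group))
        if z_test_p_value(conv_a, n_per_group, conv_b, n_per_group) < alpha:
            false_positives += 1
    return false_positives / n_tests


print(simulate_aa_tests())  # should land near alpha = 0.05
```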

3.3. Avoiding Premature Conclusions

Resist the temptation to peek at the results early and draw conclusions or stop the experiment before the minimum sample size is reached. Sometimes, the “wrong” model can get lucky for a while.

- Rationale: Run the test long enough to be confident that the behavior you see is really representative and not just a weird fluke.

- Statistical Significance: Evaluate significance once the planned sample size is reached, rather than stopping as soon as the results happen to look significant.
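To see why peeking is risky, the following sketch simulates A/A tests (no real difference) and compares two hypothetical stopping rules: checking the p-value after every batch and stopping at the first p < α, versus testing once at the planned final sample size. The batch size, number of looks, and conversion rate are illustrative assumptions.

```python
import math
import random
from statistics import NormalDist


def z_test_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a two-proportion z-test."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def compare_stopping_rules(n_sims=300, batch=100, n_looks=10, rate=0.1, alpha=0.05, seed=2):
    """Return false positive rates for 'peeking' (stop at the first look with
    p < alpha) and 'fixed' (test once at the final planned sample size)."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(n_sims):
        conv_a = conv_b = n = 0
        significant_at_some_look = False
        for _ in range(n_looks):
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            if z_test_p_value(conv_a, n, conv_b, n) < alpha:
                significant_at_some_look = True  # a peeker would stop and declare a winner
        peeking_hits += significant_at_some_look
        fixed_hits += z_test_p_value(conv_a, n, conv_b, n) < alpha  # final look only
    return peeking_hits / n_sims, fixed_hits / n_sims


peeking_rate, fixed_rate = compare_stopping_rules()
print(f"peeking: {peeking_rate:.3f}, fixed horizon: {fixed_rate:.3f}")
```

Since the final look is one of the peeks, the peeking rule can only flag more tests, and with ten looks it typically flags several times more than the nominal 5%.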

3.4. The Sensitivity of the Test

The more sensitive a test is, the longer it will take. The resolution of an A/B test (how small a delta effect size you can detect) improves only with the square root of the sample size; equivalently, the required sample size grows with the inverse square of the effect size. In other words, if you want to halve the delta effect size you can detect, you have to quadruple your sample size.

- Example: Detecting a smaller delta effect size requires a larger sample size.

- Impact: The time required for the test increases significantly with the desired sensitivity.
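The quadrupling follows from the standard normal-approximation formula for the per-group sample size when comparing two means. This worked form assumes both groups share an outcome standard deviation σ (a symbol introduced only for this illustration), with z_{1−α/2} and z_{1−β} the standard normal quantiles for the significance level and power:

$$
n \approx \frac{2\,\left(z_{1-\alpha/2} + z_{1-\beta}\right)^2 \sigma^2}{\delta^2}
\qquad\Longrightarrow\qquad
n' \approx \frac{2\,\left(z_{1-\alpha/2} + z_{1-\beta}\right)^2 \sigma^2}{(\delta/2)^2} = 4n
$$

Equivalently, for fixed α and power the detectable effect scales as δ ∝ 1/√n.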

4. Advanced A/B Testing Techniques

Beyond the basic A/B testing methodology, there are several advanced techniques that can be used to improve the efficiency and effectiveness of experiments.

4.1. Bayesian A/B Tests

The Bayesian approach takes the data from a single run as a given and asks, “What OEC values are consistent with what I’ve observed?” The general steps for a Bayesian analysis are roughly:

- Specify prior beliefs about possible values of the OEC for the experiment groups.

- Define a statistical model using a Bayesian analysis tool, with priors that are often flat (uninformative) and equal across groups, so that neither group is favored at the start.

- Collect data and update the beliefs on possible values for the OEC parameters as you go.

- Continue the experiment as long as it seems valuable to refine the estimates of the OEC.

- Advantage: Quantifies uncertainties in the experiment more straightforwardly.

- Limitation: Does not necessarily make the test any shorter.
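A common concrete instance for conversion-rate OECs is the Beta-Binomial model. The sketch below assumes a flat Beta(1, 1) prior for each group (one possible choice, not the only one), so each posterior is Beta(1 + conversions, 1 + non-conversions), and estimates the probability that B's true rate exceeds A's by Monte Carlo sampling. The function name and the data are hypothetical.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=50_000, seed=0):
    """Monte Carlo estimate of P(rate_B > rate_A) under a Beta-Binomial
    model with a flat Beta(1, 1) prior on each group's conversion rate."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Sample a plausible rate for each group from its posterior
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Hypothetical data: 20/1000 conversions for A, 40/1000 for B
print(prob_b_beats_a(20, 1000, 40, 1000))
```

The output reads directly as "the probability that B is better given what we've seen", which is the more intuitive quantification of uncertainty mentioned above; as new data arrives, you simply rerun it with updated counts.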

4.2. Multi-Armed Bandits

Multi-armed bandits dynamically adjust the percentage of new requests that go to each option, based on that option’s past performance. Essentially, the better a model performs, the more traffic it gets, but some small amount of traffic still goes to poorly performing models, so the experiment can still collect information about them.

- Benefit: Balances the trade-off between exploitation (extracting maximal value) and exploration (collecting information).

- Use Case: Useful when you can’t run a test long enough to achieve statistical significance.
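There are several bandit strategies; the sketch below uses epsilon-greedy, one of the simplest, which matches the description above: most requests go to the model with the best observed success rate, while a fraction ε is spread at random across all models. The success rates are simulated and hypothetical.

```python
import random


def epsilon_greedy(true_rates, rounds=10_000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy bandit over models with the given
    (hypothetical) true success rates. Returns pulls and successes per arm."""
    rng = random.Random(seed)
    n_arms = len(true_rates)
    pulls = [0] * n_arms
    successes = [0] * n_arms
    for _ in range(rounds):
        if 0 in pulls or rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: send the request to any model
        else:
            # exploit: send the request to the best-performing model so far
            arm = max(range(n_arms), key=lambda i: successes[i] / pulls[i])
        pulls[arm] += 1
        if rng.random() < true_rates[arm]:  # simulated outcome of the request
            successes[arm] += 1
    return pulls, successes


pulls, successes = epsilon_greedy([0.05, 0.10])
print(pulls)  # most traffic should end up on the second, better model
```

A larger ε gathers more information about weak models at the cost of serving them more often; that is the exploration/exploitation trade-off in a single parameter.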

5. A/B Testing in Production with Wallaroo

The Wallaroo ML deployment platform can help you get your A/B test up and running quickly and easily. The platform provides specialized pipeline configurations for setting up production experiments, including A/B tests. All the models in an experimentation pipeline receive data via the same endpoint; the pipeline takes care of allocating the requests to each of the models as desired.

5.1. Random Split

For an A/B test, you would use a random split. Requests are distributed randomly in the proportions you specify: 50-50, 80-20, or whatever is appropriate. If session information is provided, the pipeline respects it, so requests from the same session are routed consistently.

- Advantage: Simple and effective for A/B testing.

- Consideration: Ensure session information is respected for consistent user experience.
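The idea behind a session-aware random split can be sketched in a few lines. This is not Wallaroo's API; it is a hypothetical in-memory router that draws a model according to the configured weights and remembers the choice per session ID.

```python
import random


class RandomSplitRouter:
    """Hypothetical router: picks a model at random according to weights
    and remembers the choice per session, so a session stays on one model."""

    def __init__(self, weights, seed=None):
        self.models = list(weights)
        self.weights = [weights[m] for m in self.models]
        self.rng = random.Random(seed)
        self.sessions = {}  # session_id -> assigned model name

    def route(self, session_id=None):
        if session_id is not None and session_id in self.sessions:
            return self.sessions[session_id]  # honor the existing session
        model = self.rng.choices(self.models, weights=self.weights, k=1)[0]
        if session_id is not None:
            self.sessions[session_id] = model
        return model


router = RandomSplitRouter({"control": 80, "challenger": 20}, seed=7)
print(router.route("session-1"), router.route("session-1"))  # same model both times
```

A production system would keep the session map in shared storage (or use hashing) rather than in one process's memory.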

5.2. Key Split

Requests are distributed according to the value of a key, or query attribute. This is not a good way to split for A/B tests, because the key may be correlated with the outcome and the groups are then not comparable, but it can be useful in other situations, for example, a slow rollout of a new model.

- Use Case: Useful for controlled rollouts of new models.

- Limitation: Not suitable for A/B tests requiring random assignment.

5.3. Shadow Deployments

All the models in the experiment pipeline get all the data, and all inferences are logged. However, the pipeline only outputs the inferences from one model: the default, or champion, model.

- Purpose: Sanity checking a model before it goes truly live.

- Benefit: Ensures new models meet desired accuracy and performance requirements before production deployment.
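The shadow pattern itself is easy to express in plain Python. The sketch below is hypothetical and not Wallaroo's API: every model scores the request, every inference is logged, and only the champion's output is returned to the caller. The "models" are stand-in functions.

```python
from typing import Callable, Dict, List

Model = Callable[[dict], float]


def shadow_infer(request: dict, champion: str, models: Dict[str, Model], log: List[dict]) -> float:
    """Send the request to every model, log every inference, and
    return only the champion model's output to the caller."""
    outputs = {name: model(request) for name, model in models.items()}
    for name, output in outputs.items():
        log.append({"model": name, "request": request, "output": output})
    return outputs[champion]


# Hypothetical models: simple functions standing in for real ones
models = {
    "champion": lambda req: req["x"] * 2.0,
    "challenger": lambda req: req["x"] * 2.1,
}
inference_log = []
result = shadow_infer({"x": 10}, "champion", models, inference_log)
print(result, len(inference_log))
```

The logged challenger outputs can later be compared with the champion's before the challenger ever affects a user.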

5.4. Monitoring and Evaluation

The Wallaroo pipeline keeps track of which requests have been routed to each model and the resulting inferences. This information can then be used to calculate OECs to determine each model’s performance.

- Data Tracking: Tracks requests and inferences for each model.

- Performance Measurement: Calculates OECs to evaluate model performance.
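Once requests and outcomes are logged per model, computing an OEC is an aggregation. The sketch below assumes a hypothetical log format in which each record notes the model that served the request and whether it led to a conversion; a real pipeline's log schema will differ.

```python
from collections import defaultdict


def oec_by_model(log):
    """Compute a conversion-rate OEC per model from logged records,
    each assumed to look like {"model": str, "converted": bool}."""
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for record in log:
        totals[record["model"]] += 1
        conversions[record["model"]] += int(record["converted"])
    return {model: conversions[model] / totals[model] for model in totals}


# Hypothetical log entries
log = [
    {"model": "A", "converted": True},
    {"model": "A", "converted": False},
    {"model": "B", "converted": True},
    {"model": "B", "converted": True},
]
print(oec_by_model(log))
```

The per-model counts collected this way are exactly the inputs a significance test or Bayesian analysis needs.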

6. Real-World Applications of A/B Testing in Machine Learning

A/B testing is used across various industries to optimize machine learning models and improve business outcomes.

6.1. E-commerce

- Recommendation Algorithms: Testing different recommendation algorithms to increase click-through rates and sales.

- Product Pricing: Comparing different pricing strategies to maximize revenue.

- Website Design: Optimizing website layouts and designs to improve user engagement and conversion rates.

- Personalized Recommendations: A/B testing personalized product recommendations to increase sales. According to a study by McKinsey, personalization can increase revenue by 5-15%.

- Checkout Process: Optimizing the checkout process to reduce cart abandonment rates. Baymard Institute reports that the average cart abandonment rate is nearly 70%.

6.2. Marketing

- Email Campaigns: Testing different subject lines, content, and calls to action to improve email open rates and click-through rates.

- Ad Campaigns: Comparing different ad creatives, targeting strategies, and bidding strategies to maximize ROI.

- Landing Pages: Optimizing landing pages to improve conversion rates and lead generation.

- Ad Copy: A/B testing different versions of ad copy to increase click-through rates. A study by HubSpot found that companies that A/B test their ads see a 30-40% increase in conversion rates.

- Customer Segmentation: Testing different customer segmentation strategies to improve targeting and personalization. According to research by Bain & Company, companies that excel at customer segmentation generate 10% more profit than companies that don’t.

6.3. Healthcare

- Treatment Protocols: Testing different treatment protocols to improve patient outcomes.

- Medication Adherence: Comparing different interventions to improve medication adherence.

- Patient Engagement: Optimizing patient engagement strategies to improve patient satisfaction and health outcomes.

- Telemedicine Platforms: A/B testing features to improve patient engagement. A study by the American Medical Association found that telemedicine can improve patient outcomes and reduce healthcare costs.

- Medication Reminders: Testing different reminders to improve medication adherence. The World Health Organization reports that medication non-adherence results in approximately $300 billion in avoidable healthcare costs annually.

6.4. Finance

- Fraud Detection Models: Testing different fraud detection models to improve accuracy and reduce false positives.

- Credit Scoring Models: Comparing different credit scoring models to improve risk assessment.

- Customer Service Chatbots: Optimizing customer service chatbots to improve customer satisfaction and resolution rates.

- Algorithmic Trading: A/B testing different algorithms to optimize trading strategies. A report by Greenwich Associates found that algorithmic trading accounts for over 60% of equity trading volume in the U.S.

- Loan Application Processes: Optimizing the loan application process to improve approval rates and customer satisfaction. A study by Accenture found that improving the customer experience in financial services can increase revenue by 10-20%.

6.5. Education

- Learning Platforms: Testing different features on learning platforms to improve student engagement and learning outcomes.

- Teaching Methods: Comparing different teaching methods to improve student performance.

- Educational Content: Optimizing educational content to improve student understanding and retention.

- Online Courses: A/B testing course structures to increase completion rates. A study by MIT found that well-designed online courses can improve learning outcomes by 20-30%.

- Personalized Learning Paths: Testing different paths to improve student performance. According to a report by McKinsey, personalized learning can improve student outcomes by up to 20%.

7. Common Pitfalls to Avoid

While A/B testing is a valuable technique, there are several common pitfalls that can compromise the validity and reliability of the results.

7.1. Insufficient Sample Size

Using a sample size that is too small can lead to statistically insignificant results and false conclusions.

- Solution: Use a sample size calculator to determine the appropriate sample size based on the significance level, power, and MDE.

7.2. Biased Sample Selection

If the sample is not representative of the target population, the results may not be generalizable.

- Solution: Use random assignment to ensure that the control and treatment groups are comparable.

7.3. Peeking at Results

Stopping the experiment early or making decisions based on preliminary results can lead to false conclusions.

- Solution: Wait until the experiment has reached the predetermined sample size, then assess statistical significance before making any decisions.

7.4. Ignoring External Factors

External factors, such as seasonality, marketing campaigns, or economic conditions, can influence the results of the experiment.

- Solution: Monitor external factors and account for their potential impact on the results.

7.5. Not Validating Results

Failing to validate the results of the experiment can lead to implementing changes that do not actually improve performance.

- Solution: Replicate the experiment or use a holdout group to validate the results before implementing any changes.

7.6. Poorly Defined Objectives

Without clear objectives, the A/B test may lack focus and fail to provide actionable insights.

- Solution: Define clear, measurable objectives before starting the A/B test to guide the process and ensure relevant results.

7.7. Neglecting Statistical Significance

Focusing solely on the magnitude of change without considering statistical significance can lead to incorrect conclusions.

- Solution: Always assess the statistical significance of the results to confirm that the observed changes are unlikely to be due to random chance alone.

8. The Future of A/B Testing

As technology continues to evolve, A/B testing is likely to become even more sophisticated and integrated into the machine learning lifecycle.

8.1. AI-Powered A/B Testing

AI and machine learning can be used to automate and optimize the A/B testing process, including sample size calculation, experimental design, and result analysis.

8.2. Personalization at Scale

A/B testing can be used to personalize experiences for individual users or segments of users, tailoring content, offers, and recommendations to their specific needs and preferences.

8.3. Real-Time Optimization

A/B testing can be integrated into real-time decision-making systems, allowing for dynamic optimization of models and strategies based on continuous feedback.

8.4. Integration with MLOps Platforms

A/B testing is increasingly integrated with MLOps platforms, streamlining the process of deploying, monitoring, and evaluating machine learning models in production.

8.5. Ethical Considerations

As A/B testing becomes more pervasive, ethical considerations, such as privacy, transparency, and fairness, will become increasingly important.

9. How LEARNS.EDU.VN Can Help

At LEARNS.EDU.VN, we are committed to providing high-quality educational content that empowers individuals to succeed in the field of machine learning. Our comprehensive resources, expert instructors, and hands-on projects can help you master A/B testing and other essential machine-learning skills.

9.1. Comprehensive Courses

Our courses cover a wide range of topics, including A/B testing, statistical analysis, experimental design, and machine learning deployment.

9.2. Expert Instructors

Our instructors are experienced professionals with deep expertise in machine learning and data science.

9.3. Hands-On Projects

Our projects provide hands-on experience with A/B testing and other machine-learning techniques, allowing you to apply your knowledge to real-world problems.

9.4. Community Support

Our community forum provides a platform for students to connect, collaborate, and share their knowledge and experiences.

9.5. Personalized Learning Paths

We offer personalized learning paths tailored to your specific goals and interests, ensuring that you get the most out of your learning experience.

10. Frequently Asked Questions (FAQs)

1. What is the primary goal of A/B testing in machine learning?

A/B testing aims to determine which version of a machine learning model performs better in a real-world setting.

2. What is the Overall Evaluation Criterion (OEC)?

The OEC is the primary metric used to evaluate the performance of each variant in an A/B test.

3. How do you calculate the sample size for an A/B test?

The sample size depends on the significance level, power, and minimum detectable effect (MDE), and can be calculated using online calculators or statistical tools.

4. What is the significance level (α) in A/B testing?

The significance level (α) is the probability of rejecting the null hypothesis when it is true (false positive rate).

5. What is the power (1 − β) in A/B testing?

The power (1 − β) is the probability of rejecting the null hypothesis when it is false (true positive rate); β is the false negative rate.

6. What are the common pitfalls to avoid in A/B testing?

Common pitfalls include insufficient sample size, biased sample selection, peeking at results, and ignoring external factors.

7. What are Bayesian A/B tests?

Bayesian A/B tests use Bayesian statistics to quantify uncertainties in the experiment and provide a more intuitive understanding of the results.

8. What are multi-armed bandits?

Multi-armed bandits dynamically adjust the percentage of new requests that go to each option based on past performance, balancing exploration and exploitation.

9. How can LEARNS.EDU.VN help me learn A/B testing?

LEARNS.EDU.VN offers comprehensive courses, expert instructors, hands-on projects, community support, and personalized learning paths to help you master A/B testing.

10. What are some real-world applications of A/B testing in machine learning?

A/B testing is used in e-commerce, marketing, healthcare, finance, and education to optimize models and improve business outcomes.

A/B testing is a crucial tool for data-driven decision-making in machine learning. By following the principles outlined in this guide, you can design and implement effective A/B tests that improve your models and drive business success. Enhance your skills, transform your strategies.

Ready to take your machine learning skills to the next level? Visit LEARNS.EDU.VN today to explore our comprehensive courses and resources. Whether you’re looking to master A/B testing, delve into advanced machine learning techniques, or enhance your data analysis capabilities, LEARNS.EDU.VN offers the tools and support you need to succeed. Our expert instructors, hands-on projects, and personalized learning paths ensure you gain practical, real-world experience that sets you apart in the competitive field of data science.

For further inquiries, contact us at:

- Address: 123 Education Way, Learnville, CA 90210, United States

- WhatsApp: +1 555-555-1212

- Website: LEARNS.EDU.VN

Unlock your potential and transform your career with learns.edu.vn!