Deep learning hardware is essential for accelerating model training and inference, and understanding the optimal components can significantly enhance performance. At LEARNS.EDU.VN, we provide a comprehensive breakdown of the necessary hardware, offering solutions for maximizing your deep learning capabilities. Explore the best hardware configurations, including GPUs, CPUs, RAM, and storage, to build an efficient and powerful system, and discover expert guidance on building robust AI infrastructure and hardware optimization.

1. Understanding the GPU: The Heart of Deep Learning

The Graphics Processing Unit (GPU) is the cornerstone of any deep learning setup. Leaving it out when building or upgrading a deep learning system would be imprudent, because the speedups GPUs offer are simply too large to ignore. GPUs are designed to perform thousands of computations in parallel. This makes them exceptionally well suited to the matrix operations that form the backbone of deep learning algorithms. CPUs, on the other hand, are designed for general-purpose computing, with a few powerful cores optimized for executing sequential instructions quickly.

For instance, training a deep neural network on a large dataset like ImageNet might take weeks on a CPU, whereas a GPU could accomplish the same task in a matter of days or even hours. This acceleration is critical for researchers and practitioners aiming to iterate quickly and tackle complex problems.

When selecting a GPU, you should consider these primary factors:

- Cost/Performance Ratio: Balancing cost with performance is essential to avoid overspending on hardware that offers marginal gains.

- Memory Capacity: Enough GPU memory is needed to hold large models and their mini-batches.

- Cooling Efficiency: Efficient cooling solutions are needed to prevent overheating and ensure consistent performance, especially with multiple GPUs.

For those seeking a balance between cost and performance, NVIDIA RTX cards such as the RTX 2070 or RTX 2080 Ti can be excellent options. Their Tensor Cores make 16-bit precision training efficient. Because 16-bit values take half the memory of 32-bit values, you can train larger models within the same memory footprint than with 32-bit training on older cards. Additionally, consider the used market on platforms like eBay for cards such as the GTX 1070, GTX 1080, GTX 1070 Ti, and GTX 1080 Ti. Be sure to check their condition and reliability before purchasing.
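As a rough illustration of why 16-bit precision helps, the sketch below counts the bytes needed to store a model's weights at 32-bit versus 16-bit precision. It also counts how many values fit into a given amount of GPU memory. The 60-million-parameter figure is an assumption chosen for illustration (roughly the size of a ResNet-152). The estimate ignores activations, gradients, and optimizer state, which add substantially to memory use during training.

```python
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2}  # bytes per stored number at each precision


def weight_memory_mb(num_params: int, precision: str) -> float:
    """Memory needed to store the weights alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_params * BYTES_PER_VALUE[precision] / 1e6


def values_that_fit(memory_gb: float, precision: str) -> int:
    """How many numbers of the given precision fit into `memory_gb` of GPU memory."""
    return int(memory_gb * 1e9) // BYTES_PER_VALUE[precision]


if __name__ == "__main__":
    params = 60_000_000  # illustrative assumption: roughly ResNet-152 sized
    for precision in ("fp32", "fp16"):
        print(f"{precision}: {weight_memory_mb(params, precision):.0f} MB of weights, "
              f"{values_than_fit_placeholder if False else values_that_fit(8, precision):,} values fit in 8 GB")
```

Halving the bytes per value doubles how many values (weights, activations, gradients) the same card can hold. That is where the "larger models in the same memory footprint" benefit comes from.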

The memory requirements depend on the type of work you plan to undertake:

| Application | GPU Memory Requirement |
|---|---|
| State-of-the-art research | >=11 GB |
| Researching interesting architectures | >=8 GB |
| General research | 8 GB |
| Kaggle competitions | 4 – 8 GB |
| Startup prototyping | 8 GB |
| Company prototyping | 8 GB |
| Company training | >=11 GB |

1.1 Cooling Considerations for GPUs

Cooling becomes particularly critical when using multiple GPUs, especially if they are installed in adjacent PCIe slots. Insufficient cooling can lead to thermal throttling, reducing performance by up to 30% and potentially shortening the lifespan of your GPUs.

For multi-GPU setups, consider using GPUs with a blower-style fan design, which exhausts hot air out of the back of the case. This prevents the recirculation of hot air within the system, maintaining lower temperatures.

2. RAM: Balancing Speed and Size

Random Access Memory (RAM) plays a pivotal role in the efficiency of your deep learning workstation. While RAM speed has a marginal impact on deep learning performance, having an adequate amount of RAM ensures a seamless prototyping experience.

2.1 Understanding RAM Clock Rate

RAM clock rates are often marketed as a critical factor, but in reality, they yield minimal performance gains in most deep learning tasks. The Linus Tech Tips video “Does RAM speed REALLY matter?” provides an insightful explanation of this phenomenon.

The speed of RAM is largely irrelevant for CPU RAM to GPU RAM transfers. If you use pinned (page-locked) memory, mini-batches are transferred to the GPU without CPU involvement. If you do not, faster RAM typically yields only a 0-3% performance gain. It's better to allocate your budget to components that yield more substantial improvements.

2.2 Determining RAM Size

RAM size does not directly affect deep learning computational performance. However, it is essential for preventing bottlenecks and ensuring smooth operation. Insufficient RAM can lead to swapping to disk, which significantly slows down processing.

It’s generally recommended to have at least as much RAM as the memory of your largest GPU. For example, if you have a GPU with 24 GB of memory, you should have at least 24 GB of RAM. This ensures that you can comfortably handle datasets and models without running into memory limitations.

However, consider these additional strategies for managing RAM effectively:

- Matching GPU Memory: Start by matching your RAM to the memory of your largest GPU.

- Adjusting Based on Dataset Size: If you are working with extremely large datasets, consider increasing your RAM accordingly.

- Psychological Factors: More RAM can reduce the need for constant memory management, conserving mental resources for more complex problem-solving. This can be particularly beneficial in competitive environments like Kaggle, where efficient feature engineering is crucial.

Investing in more RAM can save time and boost productivity by avoiding bottlenecks associated with memory constraints, especially during data preprocessing.
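The sizing rule of thumb above can be written as a tiny helper. The function name and parameters are hypothetical. Taking the maximum of the largest GPU's memory and the size of any dataset you want to keep entirely in RAM is one assumed way to "increase RAM accordingly", not a rule from the text.

```python
from typing import Sequence


def suggested_ram_gb(gpu_memory_gb: Sequence[float], in_memory_data_gb: float = 0.0) -> float:
    """Rule of thumb: at least as much RAM as the largest GPU, more if a dataset must fit in RAM."""
    if not gpu_memory_gb:
        raise ValueError("expected at least one GPU")
    largest_gpu_gb = max(gpu_memory_gb)
    # Hypothetical refinement: grow RAM so a dataset kept fully in memory also fits.
    return max(largest_gpu_gb, in_memory_data_gb)


if __name__ == "__main__":
    print(suggested_ram_gb([24, 11]))                   # mixed GPUs: driven by the 24 GB card
    print(suggested_ram_gb([8], in_memory_data_gb=32))  # large in-memory dataset dominates
```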

3. Central Processing Unit (CPU): Cores and PCIe Lanes

The Central Processing Unit (CPU) is vital for managing data preprocessing and initiating GPU function calls in deep learning. Often, people overemphasize the significance of PCIe lanes, but in reality, the number of CPU cores and the CPU’s ability to support multiple GPUs are more critical.

3.1 CPU and PCI-Express Lanes

PCIe lanes are often a point of concern, but their impact on deep learning performance is minimal. PCIe lanes are primarily used for transferring data from CPU RAM to GPU RAM. The transfer speeds are relatively fast, even with fewer lanes.

For a mini-batch of 32 images from ImageNet using 32-bit precision, the transfer times are as follows:

- 16 PCIe lanes: Approximately 1.1 milliseconds

- 8 PCIe lanes: Approximately 2.3 milliseconds

- 4 PCIe lanes: Approximately 4.5 milliseconds

These timings indicate that even with fewer lanes, the overhead is negligible compared to the actual computation time on the GPU. For example, a forward and backward pass of a ResNet-152 model takes about 216 milliseconds. Going from 4 to 16 PCIe lanes therefore saves about 3.4 milliseconds per step, a speedup of only about 1.6%. Furthermore, if you use pinned memory with PyTorch's data loader, the performance gain is virtually zero.
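The arithmetic behind these figures can be sketched as follows. Two inputs are assumptions, not values from the text: the batch shape (3 × 224 × 224 float32 images) and a usable bandwidth of roughly 0.985 GB/s per PCIe 3.0 lane. The idealized estimate (roughly 1.2 ms for 16 lanes up to 4.9 ms for 4 lanes) lands in the same ballpark as the quoted timings rather than reproducing them exactly. The last line uses the quoted timings directly to express the 4-vs-16-lane difference as a share of the 216 ms step, assuming the transfer is not overlapped with compute.

```python
BYTES_PER_FLOAT32 = 4
PCIE3_GB_PER_S_PER_LANE = 0.985  # assumption: approximate usable GB/s per PCIe 3.0 lane


def batch_bytes(batch_size: int, channels: int = 3, height: int = 224, width: int = 224) -> int:
    """Size in bytes of one mini-batch of float32 images (shape is an assumption)."""
    return batch_size * channels * height * width * BYTES_PER_FLOAT32


def transfer_ms(num_bytes: int, lanes: int) -> float:
    """Idealized host-to-GPU transfer time in milliseconds over `lanes` PCIe 3.0 lanes."""
    bandwidth_bytes_per_s = lanes * PCIE3_GB_PER_S_PER_LANE * 1e9
    return num_bytes / bandwidth_bytes_per_s * 1e3


def overhead_pct(extra_ms: float, compute_ms: float) -> float:
    """Extra transfer time as a percentage of the compute time per step."""
    return extra_ms / compute_ms * 100


if __name__ == "__main__":
    size = batch_bytes(32)
    for lanes in (16, 8, 4):
        print(f"{lanes:>2} lanes: {transfer_ms(size, lanes):.2f} ms (estimate)")
    # Quoted measurements: 4.5 ms (4 lanes) vs 1.1 ms (16 lanes), 216 ms ResNet-152 step.
    print(f"4 vs 16 lanes: {overhead_pct(4.5 - 1.1, 216):.1f}% of step time")
```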

When selecting a CPU and motherboard, make sure they support the number of GPUs you intend to use. Focus on compatibility rather than maximizing the number of PCIe lanes.

3.2 PCIe Lanes and Multi-GPU Parallelism

While PCIe lanes become more significant with a large number of GPUs, they are less critical for systems with four or fewer GPUs. According to research presented at ICLR 2016, PCIe lanes are crucial when using 96 GPUs but have a much smaller impact on smaller setups.

For systems with 2-3 GPUs, PCIe lanes are not a significant concern. With 4 GPUs, ensuring each GPU has access to at least 8 PCIe lanes (totaling 32 PCIe lanes) is advisable. However, for most practical applications, it is not necessary to invest heavily in maximizing PCIe lanes per GPU.

3.3 Determining the Number of CPU Cores

The CPU’s primary role in deep learning is to manage data preprocessing and initiate GPU function calls. The computational load on the CPU is relatively low when the deep neural networks are run on a GPU.

Data preprocessing is a critical task for the CPU, and there are two common strategies:

- Preprocessing During Training: In this strategy, each mini-batch is preprocessed in real-time during the training loop.

- Preprocessing Before Training: Here, the entire dataset is preprocessed before the training loop begins.

The first strategy benefits significantly from a CPU with many cores, while the second strategy has lower CPU requirements.

For the first strategy, a minimum of 4 threads per GPU (typically two cores per GPU) is recommended. You might see an additional performance gain of about 0-5% per additional core per GPU. For the second strategy, a minimum of 2 threads per GPU (one core per GPU) is adequate, with minimal performance gains from additional cores.
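The thread and core counts above can be turned into a quick sanity check. The sketch assumes two hardware threads per core (simultaneous multithreading enabled). It uses Python's `os.cpu_count()`, which reports logical CPUs rather than physical cores. The function names are hypothetical.

```python
import os
from typing import Optional

THREADS_PER_CORE = 2  # assumption: simultaneous multithreading (e.g. Hyper-Threading) enabled


def min_cpu_threads(num_gpus: int, preprocess_during_training: bool) -> int:
    """Minimum hardware threads: 4 per GPU when preprocessing on the fly, else 2 per GPU."""
    threads_per_gpu = 4 if preprocess_during_training else 2
    return num_gpus * threads_per_gpu


def min_cpu_cores(num_gpus: int, preprocess_during_training: bool) -> int:
    """Physical cores needed, assuming THREADS_PER_CORE threads per core."""
    return min_cpu_threads(num_gpus, preprocess_during_training) // THREADS_PER_CORE


def cpu_is_sufficient(num_gpus: int, preprocess_during_training: bool,
                      available_threads: Optional[int] = None) -> bool:
    """Compare the rule of thumb with a logical CPU count (this machine's by default)."""
    if available_threads is None:
        available_threads = os.cpu_count() or 1  # os.cpu_count() may return None
    return available_threads >= min_cpu_threads(num_gpus, preprocess_during_training)


if __name__ == "__main__":
    for on_the_fly in (True, False):
        print(f"4 GPUs, preprocessing during training={on_the_fly}: "
              f">= {min_cpu_cores(4, on_the_fly)} cores / {min_cpu_threads(4, on_the_fly)} threads")
    print("This machine meets the rule for 4 GPUs:", cpu_is_sufficient(4, True))
```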

3.4 CPU Clock Rate (Frequency)

The clock rate is often considered a primary indicator of CPU speed, with higher GHz values generally implying better performance. However, this comparison is most accurate when comparing processors with the same architecture.

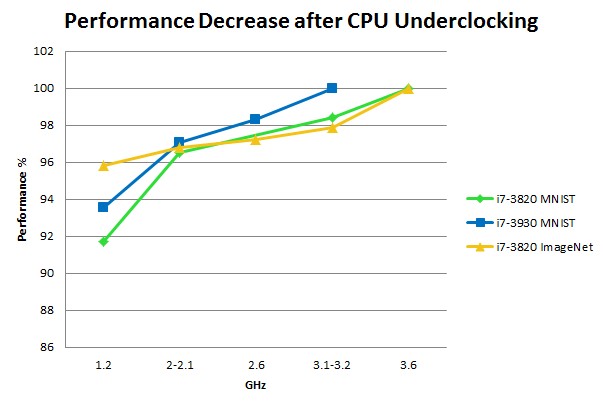

In deep learning, the CPU handles relatively little computation. It mainly manages variables, evaluates Boolean expressions, and makes function calls to the GPU. The CPU may show 100% usage during training. Even so, underclocking experiments on the MNIST and ImageNet datasets showed only a small performance impact, even with significant reductions in CPU core clock rates. Investing in a higher clock rate is therefore unlikely to yield improvements as substantial as other hardware upgrades.

4. Hard Drive/SSD: Storage Solutions for Deep Learning

The hard drive or Solid State Drive (SSD) is not usually a bottleneck in deep learning, but improper usage can negatively affect performance.

4.1 Understanding Storage Performance

Reading data from a traditional hard drive only at the moment it is needed (a blocking wait) can be detrimental. For example, a 100 MB/s hard drive adds about 185 milliseconds per ImageNet mini-batch of size 32. However, you can instead fetch data asynchronously before it is used (e.g., with torchvision data loaders). The next mini-batch is then loaded in the background while the current batch is being computed.
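To make the difference between a blocking wait and asynchronous prefetching concrete, here is a minimal standard-library sketch. It is not how PyTorch's loader is implemented. A background thread reads mini-batches into a bounded queue while the main loop "computes" on the previous one. `slow_disk` and `train` are hypothetical stand-ins that use `time.sleep` in place of disk reads and GPU work.

```python
import queue
import threading
import time
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")
_DONE = object()  # sentinel marking the end of the stream


def prefetch(batches: Iterable[T], buffer_size: int = 2) -> Iterator[T]:
    """Load items from `batches` in a background thread, up to `buffer_size` ahead."""
    buf: queue.Queue = queue.Queue(maxsize=buffer_size)

    def producer() -> None:
        try:
            for item in batches:
                buf.put(item)  # blocks while the buffer is full
        finally:
            buf.put(_DONE)  # always unblock the consumer, even if reading fails

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is _DONE:
            return
        yield item


def slow_disk(num_batches: int, read_s: float) -> Iterator[int]:
    """Hypothetical stand-in for blocking reads from a slow hard drive."""
    for i in range(num_batches):
        time.sleep(read_s)
        yield i


def train(batches: Iterable[int], compute_s: float) -> List[int]:
    """Stand-in training loop: spend compute_s seconds on each batch."""
    seen = []
    for batch in batches:
        time.sleep(compute_s)  # pretend this is the GPU forward/backward pass
        seen.append(batch)
    return seen


if __name__ == "__main__":
    for label, make_source in [("blocking", lambda: slow_disk(6, 0.05)),
                               ("prefetched", lambda: prefetch(slow_disk(6, 0.05)))]:
        start = time.perf_counter()
        train(make_source(), compute_s=0.05)
        print(f"{label}: {time.perf_counter() - start:.2f} s")
```

With equal read and compute times, the prefetched loop should take a bit more than half as long as the blocking one, because each read overlaps with the previous batch's compute. In PyTorch, the `DataLoader`'s `num_workers` argument provides this kind of background loading using worker processes.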

SSDs significantly improve the overall experience due to faster program start times and quicker preprocessing of large files. NVMe SSDs offer even better performance compared to regular SSDs.

4.2 Optimal Storage Setup

The ideal setup involves a large, slower hard drive for storing datasets and an SSD for productivity and running programs.

5. Power Supply Unit (PSU): Ensuring Stable Power Delivery

Selecting an appropriate Power Supply Unit (PSU) is vital to accommodate all your current and future GPUs. GPUs tend to become more energy-efficient over time, so a PSU sized for today's cards is likely to outlast several GPU upgrades. This makes a good PSU a worthwhile long-term investment.

5.1 Calculating Required Wattage

Calculate the required wattage by summing the Thermal Design Power (TDP) of your CPU and GPUs, then adding roughly 10% for other components and as a buffer for power spikes. For example, if you have 4 GPUs with 250 watts TDP each and a CPU with 150 watts TDP, the calculation would be:

4 × 250 + 150 + 100 = 1250 watts

Here the extra 100 watts is the rounded 10% allowance (10% of 1150 watts is 115 watts). Adding another 10% as a safety margin results in 1375 watts. In this case, it would be prudent to round up and select a 1400-watt PSU.
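The sizing procedure can be sketched in a few lines. Two choices here are assumptions: the function names, and rounding up to the next 100 watts as a stand-in for available PSU sizes. Applying exactly 10% (115 watts) instead of the rounded 100 watts still lands on a 1400-watt PSU for the example system.

```python
import math
from typing import Sequence


def min_psu_watts(cpu_tdp: float, gpu_tdps: Sequence[float], overhead_pct: float = 10) -> float:
    """CPU + GPU TDP plus an allowance for other components and power spikes."""
    return (cpu_tdp + sum(gpu_tdps)) * (100 + overhead_pct) / 100


def recommended_psu_watts(cpu_tdp: float, gpu_tdps: Sequence[float],
                          overhead_pct: float = 10, margin_pct: float = 10,
                          step: int = 100) -> int:
    """Add a further safety margin, then round up to the next `step` watts."""
    watts = min_psu_watts(cpu_tdp, gpu_tdps, overhead_pct) * (100 + margin_pct) / 100
    return math.ceil(watts / step) * step


if __name__ == "__main__":
    gpus = [250, 250, 250, 250]
    print(min_psu_watts(150, gpus))          # minimum including the 10% allowance
    print(recommended_psu_watts(150, gpus))  # with the extra 10% margin, rounded up
```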

5.2 Key Considerations for PSU Selection

- PCIe Connectors: Ensure the PSU has enough PCIe 8-pin or 6-pin connectors to support all your GPUs.

- Power Efficiency Rating: High power efficiency ratings are important, especially when running multiple GPUs for extended periods. Lower efficiency can result in significant electricity costs.

For example, running a 4 GPU system at full power (1000-1500 watts) for two weeks (336 hours) consumes roughly 300-500 kWh. At an electricity price of €0.20 per kWh, this amounts to about 60-100€, assuming a perfectly efficient PSU. An 80% efficient PSU draws 1.25 times as much power from the wall, which adds roughly 15-25€ to these costs.
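This cost estimate is simple enough to script. The sketch uses the exact 336 hours in two weeks. It models PSU efficiency as wall power = load / efficiency, and the function names are hypothetical. With exact hours it gives about 67-101€ for an ideal PSU and about 17-25€ extra at 80% efficiency.

```python
def energy_kwh(watts: float, hours: float) -> float:
    """Energy consumed by a constant load, in kilowatt-hours."""
    return watts * hours / 1000


def electricity_cost(watts: float, hours: float, price_per_kwh: float,
                     psu_efficiency: float = 1.0) -> float:
    """Cost of running `watts` of load; a PSU with efficiency < 1 draws watts / efficiency."""
    if not 0 < psu_efficiency <= 1:
        raise ValueError("psu_efficiency must be in (0, 1]")
    return energy_kwh(watts, hours) / psu_efficiency * price_per_kwh


if __name__ == "__main__":
    hours = 14 * 24  # two weeks of continuous training
    price = 0.20     # € per kWh
    for load in (1000, 1500):
        ideal = electricity_cost(load, hours, price)
        real = electricity_cost(load, hours, price, psu_efficiency=0.80)
        print(f"{load} W: {ideal:.0f}€ ideal, +{real - ideal:.0f}€ with an 80% efficient PSU")
```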

5.3 Environmental Responsibility

Using multiple GPUs around the clock increases your carbon footprint. Consider options to offset this impact, such as going carbon neutral.

6. CPU and GPU Cooling: Managing Heat

Effective cooling is crucial to preventing performance bottlenecks caused by overheating. While standard heat sinks or all-in-one (AIO) water cooling solutions are suitable for CPUs, GPUs require careful consideration.

6.1 Air Cooling GPUs

Air cooling is a reliable choice for single GPUs or multiple GPUs with sufficient space between them. However, cooling 3-4 GPUs can be challenging. Modern GPUs will increase their speed and power consumption until they reach a temperature threshold, typically around 80°C, at which point they will throttle their performance to prevent overheating.

6.2 Linux Fan Control and Blower-Style Fans

NVIDIA GPUs are primarily optimized for Windows, where fan schedules can be easily adjusted. In Linux, configuring fan speeds can be more complex.
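On Linux with the proprietary NVIDIA driver, a common approach is to enable manual fan control with the Coolbits option (for example via `sudo nvidia-xconfig --cool-bits=4`, then restarting X). You then set fan speeds with `nvidia-settings`. The Python sketch below only builds and optionally runs the `nvidia-settings` command. It rests on several assumptions:

- an X server is running;
- fan index `i` belongs to GPU `i`, which does not hold for every card, since some expose several fans;
- your driver version exposes the `GPUFanControlState` and `GPUTargetFanSpeed` attributes.

Check `nvidia-settings -q fans` on your system before relying on it.

```python
import shlex
import subprocess
from typing import List


def fan_speed_command(gpu_index: int, fan_percent: int) -> List[str]:
    """Build an nvidia-settings call that sets one GPU's fan to a fixed speed.

    Assumption: fan index == GPU index (verify with `nvidia-settings -q fans`).
    """
    if not 0 <= fan_percent <= 100:
        raise ValueError("fan_percent must be between 0 and 100")
    return [
        "nvidia-settings",
        "-a", f"[gpu:{gpu_index}]/GPUFanControlState=1",              # manual fan control
        "-a", f"[fan:{gpu_index}]/GPUTargetFanSpeed={fan_percent}",   # target speed in %
    ]


def set_fan_speed(gpu_index: int, fan_percent: int) -> None:
    """Run the command; requires Coolbits, a running X server, and the NVIDIA driver."""
    subprocess.run(fan_speed_command(gpu_index, fan_percent), check=True)


if __name__ == "__main__":
    # Print shell-quoted commands for a 4-GPU machine instead of executing them.
    for gpu in range(4):
        print(shlex.join(fan_speed_command(gpu, 80)))
```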

If you run 3-4 GPUs on air cooling, the fan design is a key consideration. Blower-style fans push hot air out of the back of the case, ensuring that fresh, cooler air is drawn in. Non-blower fans, on the other hand, suck in air from the vicinity of the GPU, which can lead to recirculation of hot air and increased temperatures in multi-GPU setups. Avoid non-blower fans in 3-4 GPU setups to prevent thermal throttling.

6.3 Water Cooling GPUs

Water cooling is a more expensive but effective option for keeping GPUs cool, especially in dense multi-GPU configurations. Water cooling ensures that even high-performance GPUs remain cool in a 4 GPU setup, which is difficult to achieve with air cooling. Water cooling also operates more quietly, which is beneficial in shared workspaces.

Water cooling typically costs around $100 per GPU, along with additional upfront costs. While it requires more effort to assemble, numerous detailed guides are available. Maintenance is generally straightforward.

6.4 Case Selection for Cooling

Large tower cases with additional fans for the GPU area may offer a slight temperature decrease (2-5°C), but the improvement is often not worth the additional cost and bulkiness. Focus on the cooling solution directly on the GPU rather than the case’s cooling capabilities.

6.5 Cooling Conclusion

For a single GPU, air cooling is best. For multiple GPUs, opt for blower-style air cooling or invest in water cooling. Air cooling offers simplicity, while water cooling provides superior thermal performance and quieter operation. All-in-one (AIO) water cooling solutions for GPUs are also a viable option.

7. Motherboard: Ensuring Compatibility and Expansion

Your motherboard must have enough PCIe slots to support the number of GPUs you plan to use. Most GPUs are two slots wide, so select a motherboard with enough spacing between its PCIe slots for the cards to fit side by side.

7.1 Key Considerations for Motherboard Selection

- PCIe Slots: Ensure the motherboard has enough physical PCIe slots.

- GPU Support: Verify that the motherboard supports the desired GPU configuration. Check the specifications on Newegg or the manufacturer’s website to confirm compatibility.

8. Computer Case: Space and Airflow

When selecting a case, ensure it supports full-length GPUs that sit on top of your motherboard. While most cases support full-length GPUs, verify the dimensions and specifications, especially if you are considering a smaller case. If you plan to use custom water cooling, ensure the case has enough space for the radiators.

9. Monitors: Enhancing Productivity

The investment in multiple monitors can significantly enhance productivity. Having multiple displays allows for better organization and management of various tasks, such as coding, reviewing papers, and monitoring system performance.

9.1 Monitor Layout

A typical monitor layout for deep learning might include:

- Left Monitor: For papers, Google searches, email, and Stack Overflow.

- Middle Monitor: For coding.

- Right Monitor: For output windows, R, folders, system monitors, GPU monitors, to-do lists, and other small applications.

10. Building Your PC: A Valuable Skill

Many people are hesitant to build their own computers, but the process is relatively straightforward. Components are designed to fit together in a specific way, reducing the risk of incorrect assembly. Motherboard manuals provide detailed instructions, and numerous online guides and videos offer step-by-step assistance.

Building a computer provides a comprehensive understanding of its components and how they interact. This knowledge can be valuable for future upgrades and troubleshooting.

11. Optimizing Your Deep Learning Hardware with LEARNS.EDU.VN

At LEARNS.EDU.VN, we provide expert guidance and resources to optimize your deep learning hardware setup. Our platform offers detailed tutorials, hardware recommendations, and troubleshooting tips to help you build and maintain a high-performance system. Whether you’re a student, researcher, or industry professional, LEARNS.EDU.VN equips you with the knowledge and skills to maximize your deep learning capabilities.

11.1 Leveraging Expert Resources

- In-Depth Tutorials: Access step-by-step guides on hardware selection, assembly, and optimization.

- Hardware Recommendations: Discover the best hardware configurations for various deep learning tasks and budgets.

- Troubleshooting Tips: Learn how to diagnose and resolve common hardware issues to ensure consistent performance.

12. Conclusion / TL;DR

- GPU: Opt for RTX 2070 or RTX 2080 Ti for a balance of cost and performance. Consider used GTX 1070, GTX 1080, GTX 1070 Ti, and GTX 1080 Ti from eBay.

- CPU: Choose 1-2 cores per GPU, depending on data preprocessing methods. Ensure a clock rate > 2GHz and support for the number of GPUs you intend to run. PCIe lanes are not critical.

- RAM: Prioritize size over clock rates. Match CPU RAM to the RAM of your largest GPU, and increase as needed for large datasets.

- Hard drive/SSD: Use a hard drive for data storage (>= 3TB) and an SSD for productivity and preprocessing small datasets.

- PSU: Calculate the required wattage by adding the TDP of GPUs and CPU, then multiplying by 110%. For extra headroom, add another 10% and round up to the next available PSU size. Ensure a high efficiency rating and sufficient PCIe connectors.

- Cooling: Use standard CPU coolers or AIO water cooling solutions for CPUs. For GPUs, use air cooling with blower-style fans for multiple GPUs or water cooling for superior thermal performance. Set the coolbits flag in your Xorg config to control fan speeds.

- Motherboard: Select a motherboard with enough PCIe slots for your (future) GPUs, typically supporting a maximum of 4 GPUs per system.

- Monitors: Additional monitors can significantly enhance productivity.

By carefully considering these hardware components and strategies, you can build a powerful and efficient deep learning workstation tailored to your specific needs.

FAQ: Deep Learning Hardware Guide

1. What is the most important hardware component for deep learning?

The GPU (Graphics Processing Unit) is the most critical hardware component for deep learning due to its parallel processing capabilities, which significantly accelerate model training and inference.

2. How much RAM do I need for deep learning?

You should have at least as much RAM as the memory of your largest GPU. For example, if your GPU has 24 GB of memory, you should have at least 24 GB of RAM. Consider more RAM for large datasets and complex preprocessing tasks.

3. Do PCIe lanes matter for deep learning?

PCIe lanes have a minimal impact on deep learning performance, especially with four or fewer GPUs. Focus on ensuring your CPU and motherboard support the number of GPUs you intend to use.

4. What is the role of the CPU in deep learning?

The CPU primarily manages data preprocessing and initiates GPU function calls. The number of CPU cores is more important than the clock rate for deep learning tasks.

5. Is an SSD necessary for deep learning?

While not strictly necessary, an SSD (Solid State Drive) significantly improves productivity by reducing program load times and accelerating data preprocessing. It is recommended to use an SSD for your operating system and frequently accessed data.

6. How do I calculate the required wattage for my PSU?

Add up the TDP (Thermal Design Power) of your CPU and GPUs, then multiply the total by 110% to account for other components and power spikes. Ensure the PSU has enough PCIe connectors for all your GPUs.

7. What type of cooling is best for multiple GPUs?

For multiple GPUs, blower-style air cooling or water cooling are the most effective options. Blower-style fans exhaust hot air out of the back of the case, while water cooling provides superior thermal performance and quieter operation.

8. How can I optimize my deep learning hardware setup?

- Select a GPU with adequate memory and a good cost/performance ratio.

- Ensure you have enough RAM to match your GPU memory.

- Choose a CPU that supports the number of GPUs you intend to use.

- Use an SSD for your operating system and frequently accessed data.

- Implement an effective cooling solution to prevent thermal throttling.

9. What are the key considerations when choosing a motherboard for deep learning?

Ensure the motherboard has enough PCIe slots for your GPUs and supports the desired GPU configuration. Check the manufacturer’s specifications for compatibility.

10. How important are multiple monitors for deep learning?

Multiple monitors can significantly enhance productivity by allowing you to organize and manage various tasks, such as coding, reviewing papers, and monitoring system performance.

Ready to optimize your deep learning hardware? Visit LEARNS.EDU.VN today to explore our comprehensive guides, tutorials, and expert recommendations. Maximize your deep learning capabilities with the right hardware and the knowledge to use it effectively. Contact us at 123 Education Way, Learnville, CA 90210, United States, or WhatsApp at +1 555-555-1212. Let learns.edu.vn be your partner in achieving deep learning success.