Does Boston Dynamics Use Machine Learning? Discover how this robotics pioneer leverages machine learning, reinforcement learning, and other cutting-edge techniques to create innovative and adaptable robots at LEARNS.EDU.VN. Explore the integration of model predictive control and reinforcement learning for enhanced robot locomotion and problem-solving. Delve into the world of machine learning in robotics!

1. Introduction: Boston Dynamics and Machine Learning in Robotics

Boston Dynamics, a leader in robotics, is constantly pushing the boundaries of what robots can do. Does Boston Dynamics use machine learning in its robots? The answer is a resounding yes. They employ various machine learning techniques, including reinforcement learning, to enhance the capabilities of their robots, like Spot, making them more adaptable and robust in diverse environments. Machine learning algorithms have enabled Boston Dynamics to solve complex problems in robotics.

2. Boston Dynamics: A Pioneer in Robotics Innovation

Boston Dynamics is renowned for its innovative and dynamic robots. These robots are not just pre-programmed machines; they are intelligent systems capable of learning and adapting to their surroundings. Machine learning plays a pivotal role in this adaptability.

2.1. Spot: The Agile and Versatile Robot

Spot, one of Boston Dynamics’ most famous creations, is a prime example of the company’s commitment to advanced robotics.

Spot robot performing inspection


Spot’s legged mobility allows it to navigate complex terrains, making it suitable for various applications. It is deployed in factory inspections, nuclear plant decommissioning, and even arctic pit mines. Spot robots have collectively walked over 250,000 km, demonstrating their reliability and endurance.

2.2. Applications of Spot in Diverse Environments

Spot’s versatility extends to numerous industries. Its ability to perform multi-floor factory inspections helps companies like Nestlé Purina ensure quality and safety. In Fukushima Daiichi, Spot navigates dust and debris during nuclear plant decommissioning, minimizing human exposure to hazardous conditions. Spot also enhances safety and efficiency in arctic mining operations for LNS.

Here’s a table illustrating some of Spot’s applications:

| Industry | Application | Benefit |
|---|---|---|
| Manufacturing | Factory inspections | Improved quality control and safety |
| Nuclear Energy | Nuclear plant decommissioning | Reduced human exposure to hazardous conditions |
| Mining | Arctic pit mining | Enhanced safety and efficiency in harsh environments |
| Construction | Site surveying and progress monitoring | Real-time data collection and analysis for better project management |
| Public Safety | Remote inspection and surveillance | Increased safety for law enforcement and emergency responders |

3. The Role of Model Predictive Control (MPC) in Boston Dynamics Robots

Historically, Boston Dynamics’ legged robots have leveraged Model Predictive Control (MPC). This control strategy predicts and optimizes the future states of the robot to decide what action to take.

3.1. How MPC Works

MPC works by creating a model of the robot and its environment. This model is then used to predict how the robot will behave in the future based on different actions it could take. The controller selects the action that optimizes a predefined objective, such as maintaining balance or following a specific path.
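The predict-and-optimize loop described above can be sketched in a few lines of Python. This is a minimal illustration only, assuming a hypothetical one-dimensional point mass rather than Spot's actual model; the names `step`, `rollout_cost`, and `mpc_action`, the action set, the horizon, and the cost weights are all assumptions made for the example.

```python
from itertools import product

DT = 0.1                       # model time step in seconds (assumed)
ACTIONS = (-1.0, 0.0, 1.0)     # candidate accelerations (assumed)
HORIZON = 4                    # number of steps predicted ahead (assumed)


def step(state, accel):
    """Toy model: a point mass described by position and velocity."""
    pos, vel = state
    vel = vel + accel * DT
    pos = pos + vel * DT
    return (pos, vel)


def rollout_cost(state, plan, target):
    """Predict the future under `plan` and sum a quadratic tracking cost."""
    cost = 0.0
    for accel in plan:
        state = step(state, accel)
        pos, vel = state
        cost += (pos - target) ** 2 + 0.1 * vel ** 2 + 0.01 * accel ** 2
    return cost


def mpc_action(state, target):
    """Return the first action of the lowest-cost plan over the horizon."""
    best_plan = min(
        product(ACTIONS, repeat=HORIZON),
        key=lambda plan: rollout_cost(state, plan, target),
    )
    return best_plan[0]


def run(steps=100, target=1.0):
    """Closed loop: re-plan at every step and apply only the first action."""
    state = (0.0, 0.0)
    for _ in range(steps):
        state = step(state, mpc_action(state, target))
    return state
```

Starting at rest at position 0 with a target of 1, `mpc_action((0.0, 0.0), 1.0)` returns `1.0` (accelerate toward the target), and once the mass sits at rest on the target the cheapest plan is to do nothing. Re-planning at every step, as `run` does, is what lets the controller react when reality drifts from the prediction. A real legged-robot MPC would use a continuous optimizer and a far richer model than this exhaustive search.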

3.2. Advantages of MPC

MPC is intuitive and debuggable, allowing engineers to tune robot behaviors for both practical and aesthetic goals. It works well when the controller’s model accurately reflects the physical system.

Here’s a list of the advantages of MPC:

- Intuitive and easy to understand

- Debuggable and tunable

- Effective when the model accurately represents the system

- Allows for optimization of various objectives and constraints

4. Challenges in Real-World Applications

Despite the effectiveness of MPC, real-world conditions present challenges that are difficult to observe and model accurately. For example, Spot might need to traverse the edge of a container used for liquid containment, where it risks tripping or slipping on a wet or greasy floor.

4.1. The Need for Adaptive Control Systems

To address these challenges, Boston Dynamics seeks control software that can adapt to handle more and more real-world scenarios without degrading performance in other situations. This is where machine learning, particularly reinforcement learning, comes into play.

4.2. Examples of Challenging Scenarios

Here are some examples of scenarios that challenge traditional control systems:

- Traversing slippery or uneven surfaces

- Navigating obstacles with unpredictable shapes and sizes

- Maintaining balance in windy or unstable conditions

- Adapting to changes in the environment, such as moving objects or varying lighting conditions

5. Locomotion Control on Spot: A Multi-Scale Approach

Spot’s locomotion control system makes decisions at multiple time scales. It decides what path the robot should take, what pattern of steps it should use to move along that path, and how to make high-speed adjustments to posture and step timing to maintain balance.

5.1. Evaluating Multiple MPC Controllers

Spot’s production controller evaluates many MPC controllers simultaneously. In less than a millisecond, Spot assesses dozens of individual predictive horizons, each with its own unique step trajectory reference that considers both the robot and environment state.

5.2. Scoring and Selecting the Best Controller

A system “scores” the output of each controller, selecting the one with the maximum value and using its output to control the robot. This approach has yielded a high mean time between falls.
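The score-and-select step can be pictured with a short Python sketch. Everything here is hypothetical: the `Candidate` fields, the weights in `score`, and the three example candidates are invented for illustration and are not taken from Spot's software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """One hypothetical controller output plus its predicted outcomes."""
    name: str
    command: float            # placeholder for the real control output
    tracking_error: float     # predicted deviation from the commanded path
    stability_margin: float   # predicted balance margin; larger is safer


def score(c, w_track=1.0, w_stable=2.0):
    """Hand-written scoring function; the weights are assumptions."""
    return w_stable * c.stability_margin - w_track * c.tracking_error


def select(candidates):
    """Pick the candidate with the maximum score."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=score)


# Made-up candidates standing in for the outputs of parallel MPC instances.
candidates = [
    Candidate("short_steps", command=0.2, tracking_error=0.30, stability_margin=0.50),
    Candidate("nominal_trot", command=0.5, tracking_error=0.10, stability_margin=0.45),
    Candidate("long_steps", command=0.8, tracking_error=0.05, stability_margin=0.10),
]
best = select(candidates)
```

With these made-up numbers `best.name` is `"nominal_trot"`. Note that every candidate has to be fully evaluated before `select` can run, and that handling a new situation means editing `score`, which changes the ranking everywhere else too.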

Here’s a table summarizing the multi-scale decision-making process:

| Level | Decision | Time Scale | Input | Output |
|---|---|---|---|---|
| High-Level | Path planning | Seconds to Minutes | Mission objectives, map of the environment | Sequence of waypoints |
| Mid-Level | Step pattern generation | Milliseconds to Seconds | Path, robot state, terrain information | Trajectory of each foot |
| Low-Level | Posture and step timing adjustments | Microseconds to Milliseconds | Robot state, environment state, desired foot trajectories | Motor commands to maintain balance and execute desired foot trajectories |
| Controller Selection | Choosing the best MPC controller | Less than a millisecond | Outputs from dozens of MPC controllers, robot and environment state | Selected controller output to control the robot |

6. Drawbacks of the Legacy Locomotion Control System

Despite its successes, the legacy locomotion control system has some drawbacks. Evaluating multiple MPC instances in parallel consumes significant onboard compute, requiring additional time and energy. Making changes to the selection function to address new failure modes can be nontrivial and risks reducing performance in other situations.

6.1. Computational Complexity

The need to evaluate multiple MPC instances in parallel leads to high computational complexity. This can limit the robot’s ability to perform other tasks simultaneously and reduce its battery life.

6.2. Difficulty in Addressing New Failure Modes

Modifying the selection function to handle new failure modes is complex and can inadvertently affect performance in other areas. This makes it challenging to continuously improve the robot’s performance in diverse environments.

7. Reinforcement Learning (RL) for Locomotion

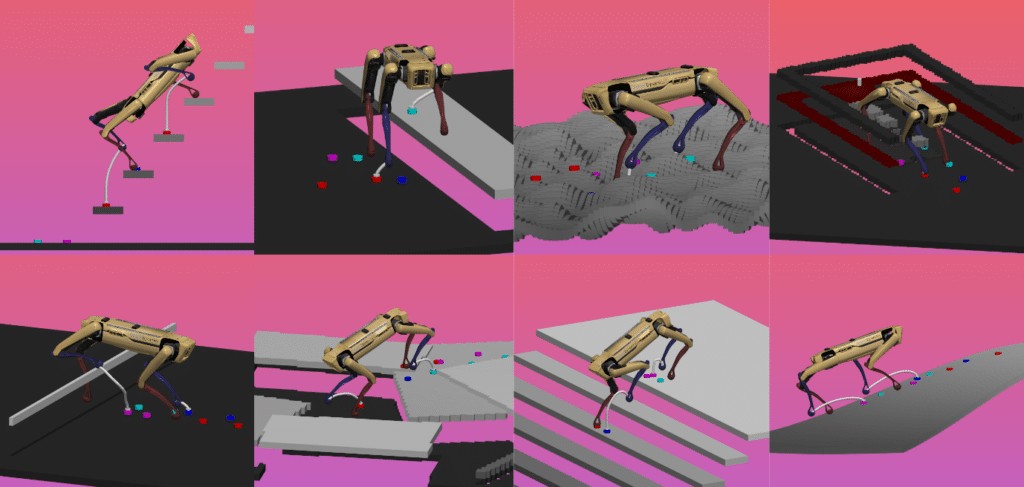

To address these challenges, Boston Dynamics has employed a data-driven approach to robot control design called reinforcement learning. This approach optimizes the control strategy through trial and error in a simulator.

7.1. How Reinforcement Learning Works

In reinforcement learning, a policy (the controller) is implemented in a neural network whose parameters are optimized by the RL algorithm. Engineers need only simulate scenarios of interest and define a performance objective (a reward function) to be optimized.
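To make the division of labor concrete, here is a toy Python sketch: the engineer writes the simulation (`episode_return`) and the reward function, and an optimizer adjusts the policy parameters. It rests on loud assumptions. The "network" is a single tanh neuron, the task is a hypothetical point mass that should return to the origin, and a simple random-search hill climber stands in for the RL algorithm, which the article does not name.

```python
import math
import random


def policy(params, obs):
    """Tiny one-neuron 'network': tanh of a weighted sum of observations."""
    w_pos, w_vel, bias = params
    pos, vel = obs
    return math.tanh(w_pos * pos + w_vel * vel + bias)


def reward(obs, action):
    """Performance objective: stay near the origin with little effort."""
    pos, vel = obs
    return -(pos ** 2) - 0.1 * vel ** 2 - 0.01 * action ** 2


def episode_return(params, steps=50, dt=0.1):
    """Simulate one scenario (a point mass starting off-center), sum rewards."""
    pos, vel = 1.0, 0.0
    total = 0.0
    for _ in range(steps):
        action = policy(params, (pos, vel))
        vel += action * dt
        pos += vel * dt
        total += reward((pos, vel), action)
    return total


def train(iterations=200, noise=0.5, seed=0):
    """Trial and error: perturb the parameters, keep changes that score better.

    This hill climber is a stand-in for a real RL algorithm.
    """
    rng = random.Random(seed)
    params = [0.0, 0.0, 0.0]
    best = episode_return(params)
    for _ in range(iterations):
        trial = [p + rng.gauss(0.0, noise) for p in params]
        trial_return = episode_return(trial)
        if trial_return > best:
            params, best = trial, trial_return
    return params, best
```

Calling `params, best = train()` returns parameters whose episode return is never worse than that of the untrained all-zero policy, which simply sits at the starting position and collects a return of -50. Nothing in the code says how to reach the origin; the strategy emerges from the reward and the simulated trials.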

7.2. Advantages of Reinforcement Learning

Reinforcement learning is advantageous in situations where problem conditions are easy to simulate, but good strategies are difficult to describe. It allows the robot to learn from its experiences and adapt to new situations without explicit programming.

Here’s a table comparing traditional programming and reinforcement learning:

| Feature | Traditional Programming | Reinforcement Learning |
|---|---|---|
| Strategy | Devised by human programmers in source code | Optimized through trial and error in a simulator |
| Policy Implementation | Source code (e.g., C++) | Neural network |
| Engineer’s Role | Devise detailed strategy in source code | Simulate scenarios and define a reward function |
| Advantage | Effective for well-defined problems with known solutions | Effective for complex problems that are hard to describe |

8. Applying RL to Spot’s Locomotion Control Stack

To apply RL to Spot’s locomotion control stack, Boston Dynamics defined the inputs and outputs of the policy in a specific way. This allows them to leverage their existing model-based locomotion controller while focusing learning on the part of the strategy that is harder to program.

8.1. Benefits of Integrating RL

Integrating RL has multiple benefits. It takes advantage of the existing model-based locomotion controller and reduces the computational complexity of the production controller by removing the need to run multiple MPC instances in parallel.

8.2. Training the Policy Through Simulation

The policy is trained by running over a million simulations on a compute cluster. Simulation environments are generated randomly with varying physical properties, and the objective maximized by the RL algorithm includes terms that reflect the robot’s ability to follow navigation commands while not falling or bumping into the environment.
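The two ingredients in this paragraph, randomized environments and a multi-term objective, might look roughly like the following Python sketch. The property names, sampling ranges, and penalty weights are illustrative assumptions; the article does not list the actual properties or reward terms used for Spot.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimEnvironment:
    """Hypothetical randomized physical properties for one simulation."""
    friction: float        # ground friction coefficient
    slope_deg: float       # terrain incline in degrees
    payload_kg: float      # extra mass carried by the robot


def sample_environment(rng):
    """Draw one environment; the ranges are illustrative assumptions."""
    return SimEnvironment(
        friction=rng.uniform(0.1, 1.0),
        slope_deg=rng.uniform(-15.0, 15.0),
        payload_kg=rng.uniform(0.0, 10.0),
    )


def step_reward(commanded_velocity, actual_velocity, fell, collided):
    """Combine command tracking with penalties for falls and collisions."""
    tracking = -abs(commanded_velocity - actual_velocity)
    fall_penalty = -10.0 if fell else 0.0
    collision_penalty = -1.0 if collided else 0.0
    return tracking + fall_penalty + collision_penalty


# A seeded generator keeps the sampled training set reproducible.
rng = random.Random(42)
environments = [sample_environment(rng) for _ in range(1000)]
```

A policy that tracks the command perfectly without falling or colliding earns a reward of 0 per step, and every deviation, fall, or bump pushes the total down. Training across many sampled environments discourages the policy from overfitting to any single set of physical properties.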

9. Evaluating Learned Policies with Robustness Testing

To ensure the learned policies are effective and reliable, Boston Dynamics uses robustness testing. This involves both simulation and hardware testing to evaluate all software changes before they are deployed to customers.

9.1. Simulation Testing

Parallel simulations are used to benchmark the robot’s performance on a set of scenarios with increasing difficulty. Statistics are collected to validate that the learned policies improve performance in specific scenarios while maintaining performance in others.
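A benchmark comparison of this kind could be sketched in Python as below. The scenario names, the fall outcomes, and the 1% tolerance are made-up placeholders, not Boston Dynamics data; the point is only the shape of the check, which requires improvement in the targeted scenario and no regression elsewhere.

```python
def fall_rate(outcomes):
    """Fraction of simulated runs that ended in a fall (True means fell)."""
    if not outcomes:
        raise ValueError("no outcomes recorded")
    return sum(outcomes) / len(outcomes)


def compare(baseline, candidate, tolerance=0.01):
    """Label each scenario as improved, maintained, or regressed."""
    report = {}
    for scenario, base_runs in baseline.items():
        base = fall_rate(base_runs)
        new = fall_rate(candidate[scenario])
        if new < base - tolerance:
            report[scenario] = "improved"
        elif new <= base + tolerance:
            report[scenario] = "maintained"
        else:
            report[scenario] = "regressed"
    return report


def passes(report):
    """A candidate policy passes only if no scenario regressed."""
    return "regressed" not in report.values()


# Synthetic outcomes for illustration only: 100 runs per scenario.
baseline = {
    "flat": [False] * 100,
    "slick": [True] * 20 + [False] * 80,
    "stairs": [True] * 5 + [False] * 95,
}
candidate = {
    "flat": [False] * 100,
    "slick": [True] * 5 + [False] * 95,
    "stairs": [True] * 5 + [False] * 95,
}
report = compare(baseline, candidate)
```

With these synthetic inputs the report marks `slick` as improved and the other two scenarios as maintained, so `passes(report)` is `True`. A candidate that improved `slick` but fell more often on `stairs` would be rejected.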

9.2. Hardware Testing

Once simulation results meet standards, the policy is deployed to an internal robustness fleet of Spot robots. These robots operate 24/7 in various conditions, and their performance is tracked using a digital dashboard and logs.

9.3. Robustifying the Policy

Falls and general mobility issues that can be reproduced in a physics simulation are recreated there and added to the training or evaluation set. Retraining on these cases hardens the policy against failure modes that have already been observed.

Here’s a table summarizing the robustness testing process:

| Stage | Method | Objective |
|---|---|---|
| Simulation | Parallel simulations with increasing difficulty | Benchmark performance, validate improvements, and identify failure modes |
| Hardware | Deployment to internal robustness fleet operating 24/7 | Test in real-world conditions, track performance metrics, and identify issues |
| Retraining | Recreate failure modes in simulation and add them to training or evaluation set | Robustify the policy to known failure modes, improve overall reliability, and ensure consistent performance across various scenarios |

10. Results with RL: Improved Locomotion and Reliability

The implementation of RL has resulted in significant improvements in Spot’s locomotion and reliability. Spot is now less likely to fall, even on extremely slick or irregular surfaces.

10.1. Benefits for Customers

This improved locomotion means customers can more easily deploy Spot in areas of their facilities that would have otherwise been challenging to access. It also reduces the likelihood of operator interventions or damage resulting from a fall.

10.2. Automatic Performance Improvements

Current Spot users automatically benefit from the performance improvements of the RL policy following software updates. Boston Dynamics continues to iterate with the data they collect to further refine the policy and expand the capabilities.

11. What’s Next for Boston Dynamics and Machine Learning?

Boston Dynamics is actively exploring and testing entirely different architectures, both to unlock new capabilities and to tolerate scenarios that severely violate the assumptions built into its models.

11.1. Exploring New Control Architectures

While a hybrid control strategy that leverages both a model predictive controller and a learned policy is proving effective, it largely imposes the limitations of the modeling on the full control system. To overcome these limitations, Boston Dynamics is exploring new control architectures.

11.2. Enabling the Robotics Research Community

Boston Dynamics is also committed to enabling the broader robotics research community. They have announced the new Spot RL Researcher Kit in collaboration with NVIDIA and the AI Institute to provide robot hardware, a high-performance AI computer, and a simulator where the robot can be trained.

11.3. Spot RL Researcher Kit

The Spot RL Researcher Kit includes a license for the joint-level control API, a payload based on the NVIDIA Jetson AGX Orin for deploying the RL policy, and a GPU-accelerated Spot simulation environment based on NVIDIA Isaac Lab. This kit enables developers to create advanced skills for Spot, such as new gaits that allow the robot to walk much faster than the standard version.

12. Machine Learning: The Future of Robotics at Boston Dynamics

As Boston Dynamics’ robots continue to expand into new environments and perform more types of work, machine learning will play an increasing role in their robot behavior development. The company will continue to build on the processes, experience, and unique data they generate to make their robots go further and do more.

12.1. Expanding Robot Capabilities with Machine Learning

Machine learning is not just a tool for improving existing capabilities; it is also a catalyst for creating entirely new ones. By leveraging machine learning, Boston Dynamics can develop robots that are more adaptable, intelligent, and capable of solving complex problems.

12.2. Continuous Improvement Through Data and Experience

Boston Dynamics’ commitment to continuous improvement means that their robots will continue to evolve and improve over time. By collecting and analyzing data from their robots in the field, they can identify areas for improvement and refine their machine learning algorithms to enhance performance.

13. How LEARNS.EDU.VN Can Help You Learn More About Robotics and Machine Learning

Are you fascinated by the intersection of robotics and machine learning? Do you want to learn more about the technologies that power Boston Dynamics’ innovative robots? LEARNS.EDU.VN offers a wealth of resources to help you expand your knowledge and skills in these exciting fields.

13.1. Comprehensive Educational Resources

LEARNS.EDU.VN provides detailed articles and guides on a wide range of topics, including robotics, machine learning, and artificial intelligence. Whether you’re a student, a professional, or simply curious, you’ll find valuable information to help you understand these complex subjects.

13.2. Expert Insights and Analysis

Our team of education experts and industry professionals curate content that is both informative and insightful. We provide in-depth analysis of the latest trends and developments in robotics and machine learning, helping you stay ahead of the curve.

13.3. Practical Learning Opportunities

LEARNS.EDU.VN goes beyond theory by offering practical learning opportunities. Our courses and tutorials provide hands-on experience with robotics and machine learning tools, allowing you to apply your knowledge and develop valuable skills.

13.4. Connect with a Community of Learners

Join our community of learners and connect with other enthusiasts, students, and professionals who share your passion for robotics and machine learning. Exchange ideas, ask questions, and collaborate on projects to enhance your learning experience.

13.5. Addressing Your Learning Challenges

We understand the challenges you face when trying to learn new and complex topics. LEARNS.EDU.VN is designed to provide clear, concise, and easy-to-understand explanations, breaking down complex concepts into manageable pieces.

13.6. Providing the Services You Need

At LEARNS.EDU.VN, we offer services tailored to meet your specific learning needs. Whether you’re looking for foundational knowledge, advanced techniques, or career guidance, we have the resources to help you succeed.

14. Call to Action: Explore the World of Robotics and Machine Learning with LEARNS.EDU.VN

Ready to dive deeper into the world of robotics and machine learning? Visit LEARNS.EDU.VN today to access our comprehensive resources, expert insights, and practical learning opportunities.

14.1. Discover Detailed Guides and Articles

Explore our extensive library of articles and guides on robotics, machine learning, and related topics. Learn about the history, principles, and applications of these technologies from our team of experts.

14.2. Enroll in Hands-On Courses and Tutorials

Take your learning to the next level by enrolling in our hands-on courses and tutorials. Gain practical experience with robotics and machine learning tools, and develop the skills you need to succeed in this exciting field.

14.3. Connect with a Community of Like-Minded Learners

Join our community of learners and connect with other enthusiasts, students, and professionals who share your passion for robotics and machine learning. Collaborate on projects, exchange ideas, and expand your network.

14.4. Start Your Learning Journey Today

Don’t wait any longer to explore the world of robotics and machine learning. Visit LEARNS.EDU.VN today and start your learning journey. Unlock your potential and discover the endless possibilities that await you in this dynamic field.

Visit us at:

- Address: 123 Education Way, Learnville, CA 90210, United States

- WhatsApp: +1 555-555-1212

- Website: LEARNS.EDU.VN

15. Conclusion: The Future of Robotics is Intelligent and Adaptive

Boston Dynamics’ use of machine learning in its robots, like Spot, exemplifies the future of robotics. By combining model-based control with data-driven approaches like reinforcement learning, robots can become more adaptable, reliable, and capable of solving complex problems in diverse environments. As machine learning continues to advance, we can expect to see even more innovative and intelligent robots emerge, transforming industries and improving our lives.

15.1. The Transformative Potential of Machine Learning in Robotics

Machine learning is revolutionizing the field of robotics, enabling robots to perform tasks that were once thought impossible. From navigating complex terrains to collaborating with humans, machine learning is unlocking new possibilities and driving innovation across industries.

15.2. Embrace the Future of Robotics with LEARNS.EDU.VN

As the world of robotics continues to evolve, it’s essential to stay informed and develop the skills needed to thrive in this dynamic field. LEARNS.EDU.VN is your partner in learning, providing the resources, insights, and opportunities you need to succeed. Embrace the future of robotics and start your learning journey with us today.

16. FAQ: Does Boston Dynamics Use Machine Learning?

Here are some frequently asked questions about Boston Dynamics and their use of machine learning:

16.1. Does Boston Dynamics use machine learning in all of its robots?

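The example documented here is Spot, whose locomotion control uses a policy trained with reinforcement learning to improve adaptability and robustness in diverse environments. Boston Dynamics expects machine learning to play an increasing role in behavior development as its robots take on more types of work, but this article does not detail its use in other models.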

16.2. What specific types of machine learning does Boston Dynamics use?

Boston Dynamics uses reinforcement learning (RL), which optimizes a neural-network control policy through trial and error in simulated environments. On Spot, the learned policy works alongside model predictive control (MPC), which is a model-based control method rather than a machine learning technique.

16.3. How does reinforcement learning improve robot performance?

Reinforcement learning allows robots to learn from their experiences and adapt to new situations without explicit programming. By simulating various scenarios and defining a reward function, the robot can optimize its control strategy and improve its performance over time.

16.4. What is Model Predictive Control (MPC), and how does it work?

Model Predictive Control (MPC) is a control strategy that predicts and optimizes the future states of the robot to decide what action to take in the moment. It involves creating a model of the robot and its environment, predicting future behavior based on different actions, and selecting the action that optimizes a predefined objective.

16.5. What are the advantages of using machine learning in robotics?

The advantages of using machine learning in robotics include increased adaptability, improved performance in complex environments, reduced need for explicit programming, and the ability to learn from experience and continuously improve over time.

16.6. How does Boston Dynamics evaluate the performance of its learned policies?

Boston Dynamics evaluates the performance of its learned policies through robustness testing, which involves both simulation and hardware testing. Parallel simulations are used to benchmark performance in various scenarios, and hardware testing is conducted using an internal fleet of robots operating in diverse conditions.

16.7. What is the Spot RL Researcher Kit?

The Spot RL Researcher Kit is a package that provides robot hardware, a high-performance AI computer, and a simulator where the robot can be trained. It includes a license for the joint-level control API, a payload based on the NVIDIA Jetson AGX Orin, and a GPU-accelerated Spot simulation environment based on NVIDIA Isaac Lab.

16.8. How can I learn more about robotics and machine learning?

You can learn more about robotics and machine learning by exploring the comprehensive resources, expert insights, and practical learning opportunities offered by LEARNS.EDU.VN.

16.9. What are some real-world applications of Spot robots?

Spot robots are used in various industries, including manufacturing, nuclear energy, mining, construction, and public safety. They perform tasks such as factory inspections, nuclear plant decommissioning, arctic pit mining, site surveying, and remote inspection and surveillance.

16.10. How will machine learning impact the future of robotics at Boston Dynamics?

Machine learning will play an increasing role in robot behavior development at Boston Dynamics. The company will continue to build on the processes, experience, and unique data they generate to make their robots go further and do more, ultimately transforming industries and improving our lives.