Ensemble learning is a machine learning technique that combines multiple individual models into a stronger, more accurate predictive model. At LEARNS.EDU.VN, we aim to equip you with the knowledge and skills to apply this approach to complex problems. Ensemble methods can improve predictive performance, generalization, and robustness, which makes them valuable in domains such as finance, healthcare, and marketing. Read on to learn how ensemble learning works, the main types of ensemble methods, and how to manage ensemble experiments with Neptune IA.

1. Understanding Ensemble Learning Neptune IA

Ensemble learning involves training multiple individual models (often called “base learners”) and then combining their predictions to make a final prediction. This approach leverages the diversity among the base learners to reduce errors and improve overall performance. The core idea is that by aggregating the predictions of multiple models, the ensemble can compensate for the weaknesses of individual models and achieve better accuracy and stability.
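To make the idea of combining predictions concrete, here is a minimal sketch of the two simplest combination rules: majority voting for class labels and averaging for numeric predictions. The function names and the sample predictions are illustrative assumptions, not part of any particular library.

```python
from collections import Counter
from statistics import mean

def majority_vote(labels):
    """Return the most common label among the base learners' predictions."""
    return Counter(labels).most_common(1)[0][0]

def average_prediction(values):
    """Return the mean of the base learners' numeric predictions."""
    return mean(values)

# Three hypothetical classifiers disagree on one sample; the majority wins.
print(majority_vote(["spam", "ham", "spam"]))
# Three hypothetical regressors; the ensemble output is their average.
print(average_prediction([2.0, 3.0, 4.0]))
```

Voting suits classification and averaging suits regression; weighted variants follow the same pattern with per-model weights.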

1.1. The Power of Diversity

The effectiveness of ensemble learning relies on the diversity of its base learners. Diversity can be achieved through various methods, such as:

- Different Algorithms: Using different types of machine learning algorithms (e.g., decision trees, neural networks, support vector machines) as base learners.

- Different Training Data: Training each base learner on a different view of the training data, either a different subset of rows (e.g., through bootstrapping) or a different subset of features (e.g., through random feature selection).

- Different Hyperparameters: Training base learners with different hyperparameters to create variations in their learning process.

1.2. Benefits of Ensemble Learning

Ensemble learning offers several key advantages:

- Improved Accuracy: Combining multiple models often leads to higher prediction accuracy compared to using a single model.

- Enhanced Robustness: Ensembles are less sensitive to noise and outliers in the data because the errors of individual models tend to cancel each other out, provided those errors are not strongly correlated.

- Better Generalization: Ensembles can generalize better to unseen data by reducing overfitting.

- Increased Stability: The combined prediction of an ensemble is more stable and less prone to drastic changes due to small variations in the training data.
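The accuracy benefit can be illustrated with a short calculation. The sketch below assumes an idealized setting that real ensembles only approximate: every model is correct with the same probability and the models err independently. Under that assumption, the chance that a strict majority is right follows a binomial distribution. The function name `majority_accuracy` is made up for this example.

```python
from math import comb

def majority_accuracy(n_models, p_correct):
    """Probability that a strict majority of n independent models is right."""
    needed = n_models // 2 + 1
    return sum(
        comb(n_models, k) * p_correct**k * (1 - p_correct) ** (n_models - k)
        for k in range(needed, n_models + 1)
    )

# Odd ensemble sizes avoid ties in a two-class vote.
for n in (1, 5, 11, 51):
    print(n, round(majority_accuracy(n, 0.6), 3))
```

In practice base learners are correlated, so the gain is smaller than this calculation suggests, which is why diversity among the learners matters.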

2. Types of Ensemble Learning Methods

There are several popular ensemble learning methods, each with its unique approach to combining base learners. Here are some of the most common types:

2.1. Bagging (Bootstrap Aggregating)

Bagging involves training multiple base learners on different subsets of the training data, created through a process called bootstrapping. Bootstrapping involves randomly sampling data points from the original training set with replacement. Each base learner is trained on a different bootstrap sample, and their predictions are combined through averaging (for regression) or voting (for classification).
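The bagging procedure described above can be sketched in a few lines. This is a toy illustration, not a production implementation: the base learner is a hypothetical one-nearest-neighbor regressor on one-dimensional inputs, and the data points are invented for the example.

```python
import random
from statistics import mean

def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return rng.choices(data, k=len(data))

def fit_nearest_neighbor(sample):
    """Toy base learner: predict the y of the closest training x."""
    def predict(x):
        return min(sample, key=lambda point: abs(point[0] - x))[1]
    return predict

def bagged_predict(data, x, n_learners=25, seed=0):
    """Average the predictions of learners trained on bootstrap samples."""
    rng = random.Random(seed)
    learners = [fit_nearest_neighbor(bootstrap_sample(data, rng))
                for _ in range(n_learners)]
    return mean(learner(x) for learner in learners)

# Hypothetical noisy (x, y) pairs roughly following y = 2x.
data = [(0, 0.3), (1, 1.8), (2, 4.4), (3, 5.7), (4, 8.2), (5, 9.9)]
print(bagged_predict(data, 2.4))
```

Because each bootstrap sample omits some points and repeats others, the individual learners disagree slightly, and averaging smooths out those differences.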

2.1.1. Random Forest

Random Forest is a popular bagging-based ensemble method that uses decision trees as base learners. In addition to bootstrapping, Random Forest introduces randomness in the feature selection process. When building each decision tree, a random subset of features is considered at each split. This further increases the diversity among the trees and improves the ensemble’s performance.
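The random feature selection step can be sketched as follows. The example assumes Gini impurity as the split criterion and a subset size of roughly the square root of the number of features, which is a common default for classification but not the only choice. All function names and the tiny dataset are illustrative.

```python
import math
import random

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(rows, labels, feature_ids):
    """Find the (score, feature, threshold) with the lowest weighted Gini
    impurity, searching only the given feature ids."""
    best = None
    for f in feature_ids:
        for threshold in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, threshold)
    return best

def random_forest_split(rows, labels, rng):
    """Pick about sqrt(n_features) features at random, then split on the best."""
    n_features = len(rows[0])
    k = max(1, int(math.sqrt(n_features)))
    feature_ids = rng.sample(range(n_features), k)
    return feature_ids, best_split(rows, labels, feature_ids)

# Hypothetical dataset: four rows, four features, two classes.
rows = [[1, 7, 0, 3], [2, 6, 1, 3], [8, 7, 0, 4], [9, 6, 1, 4]]
labels = ["a", "a", "b", "b"]
print(random_forest_split(rows, labels, random.Random(0)))
```

Restricting each split to a random subset means a single dominant feature cannot appear at the top of every tree, which is what decorrelates the trees.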


2.1.2. Advantages of Bagging and Random Forest

- Reduces Variance: Bagging helps reduce the variance of individual models, leading to more stable and accurate predictions.

- Handles High Dimensionality: Random Forest can handle high-dimensional data effectively due to the random feature selection process.

- Provides Feature Importance: Random Forest can estimate the importance of each feature in the dataset, which can be useful for feature selection and understanding the underlying relationships in the data.

2.2. Boosting

Boosting is an ensemble learning method that trains base learners sequentially, with each learner focusing on correcting the errors of its predecessors. Unlike bagging, where learners are trained independently, each boosting round depends on the results of the previous ones. Weight-based variants such as AdaBoost assign weights to the training data points, with higher weights given to misclassified instances, which forces subsequent learners to pay more attention to the difficult-to-classify examples.

2.2.1. AdaBoost (Adaptive Boosting)

AdaBoost is one of the earliest and most well-known boosting algorithms. It works by iteratively training base learners (typically decision stumps, which are decision trees with only one split) and adjusting the weights of the training data points. After each iteration, the weights of misclassified instances are increased, while the weights of correctly classified instances are decreased. This ensures that subsequent learners focus on the instances that were previously misclassified.
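A single round of this reweighting can be written out directly. The sketch below follows the classic binary AdaBoost update with labels in {-1, +1}; it assumes the weights sum to 1 and that the weighted error is strictly between 0 and 1. The predictions are invented to stand in for a decision stump's output.

```python
import math

def adaboost_round(weights, y_true, y_pred):
    """One AdaBoost weight update for labels in {-1, +1}.

    Returns the learner's vote weight (alpha) and the renormalized weights.
    """
    error = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    alpha = 0.5 * math.log((1 - error) / error)
    # Misclassified points (t * p == -1) are scaled up, correct ones down.
    updated = [w * math.exp(-alpha * t * p)
               for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(updated)
    return alpha, [w / total for w in updated]

y_true = [1, 1, -1, -1, 1]
y_pred = [1, 1, -1, -1, -1]   # hypothetical stump output; last point is wrong
weights = [0.2] * 5
alpha, weights = adaboost_round(weights, y_true, y_pred)
print(alpha, weights)
```

After this round the single misclassified point carries half of the total weight, so the next learner is strongly pushed to classify it correctly.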

2.2.2. Gradient Boosting

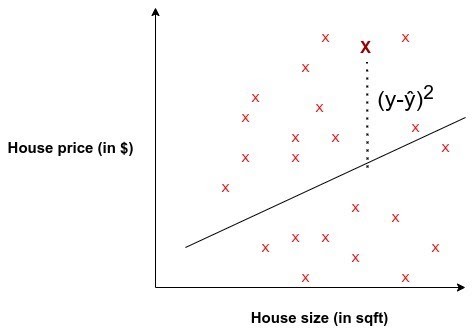

Gradient Boosting is another popular boosting algorithm that builds an ensemble of decision trees in a stage-wise manner. At each iteration, a new decision tree is trained to predict the residuals (the differences between the actual values and the predicted values) of the previous ensemble. This allows the ensemble to gradually improve its accuracy by focusing on the areas where it is making the most errors.

Alt Text: Illustration of the Gradient Boosting process, showing sequential model training and error correction.
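The residual-fitting loop can be sketched for the squared-error case. This is a simplified illustration with assumptions of its own: inputs are one-dimensional, each tree is a single-split stump, and the learning rate and number of rounds are arbitrary choices. The data points are invented.

```python
from statistics import mean

def fit_stump(xs, residuals):
    """Fit a one-split regression tree on 1-D inputs by minimizing squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = mean(left), mean(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.3):
    """Stage-wise boosting for squared error: each stump fits the residuals."""
    base = mean(ys)
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict

def mse(model, xs, ys):
    """Mean squared error of a model on (xs, ys)."""
    return mean((y - model(x)) ** 2 for x, y in zip(xs, ys))

xs = [0, 1, 2, 3, 4, 5, 6, 7]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.8, 6.2, 5.9]   # hypothetical data
model = gradient_boost(xs, ys)
print(mse(lambda x: mean(ys), xs, ys), mse(model, xs, ys))
```

The two printed numbers compare the training error of the constant baseline with that of the boosted model; the learning rate shrinks each stump's contribution so that no single round dominates.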

2.2.3. XGBoost, LightGBM, and CatBoost

XGBoost (Extreme Gradient Boosting), LightGBM (Light Gradient Boosting Machine), and CatBoost are advanced implementations of gradient boosting that offer significant improvements in terms of speed, accuracy, and scalability. These algorithms incorporate various techniques such as regularization, tree pruning, and handling of categorical features to optimize the boosting process.

2.2.4. Advantages of Boosting

- High Accuracy: Boosting algorithms often achieve state-of-the-art accuracy on a wide range of machine learning tasks.

- Feature Importance: Boosting algorithms provide insights into feature importance, helping to identify the most relevant predictors in the data.

- Handles Complex Relationships: Boosting can capture complex non-linear relationships in the data, making it suitable for challenging prediction problems.

2.3. Stacking (Stacked Generalization)

Stacking is an ensemble learning method that combines the predictions of multiple base learners using a meta-learner. The base learners are trained on the original training data, and their predictions are then used as input features for the meta-learner. The meta-learner learns how to best combine the predictions of the base learners to make a final prediction.

2.3.1. The Stacking Process

- Base Learner Training: Train multiple diverse base learners on the original training data.

- Prediction Generation: Generate predictions from each base learner on a held-out validation set or through cross-validation.

- Meta-Learner Training: Train a meta-learner using the predictions from the base learners as input features and the original target variable as the target.

- Final Prediction: Use the trained base learners to generate predictions on new, unseen data, and then feed those predictions to the meta-learner to obtain the final prediction.
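The four steps above can be sketched end to end. To keep the example self-contained, it makes several simplifying assumptions: the task is one-dimensional regression, the two base learners are a least-squares line and a one-nearest-neighbor regressor, and the meta-learner is deliberately minimal, a single blending weight chosen by grid search on out-of-fold predictions. A real meta-learner would usually be a regression or classification model.

```python
from statistics import mean

def fit_linear(points):
    """Least-squares line through (x, y) points."""
    mx = mean(x for x, _ in points)
    my = mean(y for _, y in points)
    var = sum((x - mx) ** 2 for x, _ in points)
    slope = sum((x - mx) * (y - my) for x, y in points) / var
    return lambda x: my + slope * (x - mx)

def fit_nearest(points):
    """1-nearest-neighbor regressor."""
    return lambda x: min(points, key=lambda p: abs(p[0] - x))[1]

def out_of_fold_predictions(points, fit, n_folds=3):
    """Predict every point with a model that never saw it during training."""
    preds = [None] * len(points)
    for fold in range(n_folds):
        train = [p for i, p in enumerate(points) if i % n_folds != fold]
        model = fit(train)
        for i, (x, _) in enumerate(points):
            if i % n_folds == fold:
                preds[i] = model(x)
    return preds

def fit_stack(points, base_fits=(fit_linear, fit_nearest)):
    """Stack two base learners with a one-parameter blending meta-learner."""
    oof = [out_of_fold_predictions(points, fit) for fit in base_fits]
    targets = [y for _, y in points]

    def blend_error(w):
        return mean((w * a + (1 - w) * b - y) ** 2
                    for a, b, y in zip(oof[0], oof[1], targets))

    # Meta-learner training: pick the blend weight with the lowest OOF error.
    weight = min((i / 20 for i in range(21)), key=blend_error)
    # Refit the base learners on all data for use at prediction time.
    models = [fit(points) for fit in base_fits]
    return weight, lambda x: weight * models[0](x) + (1 - weight) * models[1](x)

points = [(0, 0.2), (1, 0.9), (2, 2.3), (3, 2.8), (4, 4.1), (5, 5.2),
          (6, 5.8), (7, 7.1), (8, 8.3)]   # hypothetical data near y = x
weight, stacked = fit_stack(points)
print(weight, stacked(4.5))
```

Training the meta-learner on out-of-fold predictions rather than in-sample predictions matters: otherwise it would favor whichever base learner memorizes the training data best.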

2.3.2. Advantages of Stacking

- Flexibility: Stacking allows you to combine diverse base learners, potentially capturing different aspects of the data.

- High Performance: Stacking can often achieve higher accuracy than individual base learners or simpler ensemble methods like bagging and boosting.

- Adaptability: The meta-learner can adapt to the strengths and weaknesses of the base learners, learning how to best combine their predictions.

3. Implementing Ensemble Learning with Neptune IA

Neptune IA is a powerful platform that can help you streamline the process of implementing and managing ensemble learning models. Here’s how you can leverage Neptune IA to enhance your ensemble learning workflows:

3.1. Experiment Tracking

Neptune IA allows you to track and log all aspects of your ensemble learning experiments, including:

- Hyperparameters: Track the hyperparameters of each base learner and the ensemble method itself.

- Metrics: Log performance metrics such as accuracy, precision, recall, F1-score, and AUC-ROC for both the individual base learners and the ensemble model.

- Models: Save the trained models for each base learner and the ensemble model for later use.

- Code: Track the code used to train and evaluate the models, ensuring reproducibility.

- Visualizations: Create and log visualizations of model performance, such as learning curves, confusion matrices, and ROC curves.

By tracking these details, you can easily compare different ensemble configurations, identify the most effective base learners, and optimize the ensemble’s performance.
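The kind of record worth keeping per experiment can be sketched without committing to any specific tool. The `RunLog` class below is a hypothetical local stand-in written for this example; it is not Neptune IA's API, and the metric values are placeholders rather than measured results. It only shows how parameters and metrics for each base learner and for the ensemble might be organized.

```python
import json
import tempfile
from pathlib import Path

class RunLog:
    """Hypothetical stand-in for an experiment tracker (not a Neptune IA API)."""

    def __init__(self, name):
        self.record = {"name": name, "params": {}, "metrics": {}}

    def log_params(self, component, params):
        """Store hyperparameters under a component name such as 'tree'."""
        self.record["params"][component] = dict(params)

    def log_metric(self, component, metric, value):
        """Store one metric value for a base learner or the ensemble."""
        self.record["metrics"].setdefault(component, {})[metric] = value

    def save(self, directory):
        """Write the run record to <directory>/<name>.json and return the path."""
        path = Path(directory) / f"{self.record['name']}.json"
        path.write_text(json.dumps(self.record, indent=2))
        return path

run = RunLog("voting-ensemble-001")
run.log_params("tree", {"max_depth": 3})
run.log_params("ensemble", {"method": "majority_vote", "n_learners": 3})
run.log_metric("tree", "accuracy", 0.81)       # placeholder values,
run.log_metric("ensemble", "accuracy", 0.86)   # not measured results
saved = run.save(tempfile.mkdtemp())
print(saved.read_text())
```

Keeping base-learner and ensemble entries side by side in one record is what makes it easy to see whether the ensemble actually improves on its best member.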

3.2. Model Versioning

Neptune IA provides robust model versioning capabilities, allowing you to manage and track different versions of your ensemble models. This is particularly useful when you are experimenting with different base learners, hyperparameters, or data preprocessing techniques.

You can easily compare the performance of different model versions, roll back to previous versions if necessary, and track the lineage of each model.

3.3. Collaboration and Sharing

Neptune IA facilitates collaboration among team members by providing a centralized platform for managing and sharing ensemble learning experiments. Team members can easily access each other’s experiments, compare results, and share insights.

This can significantly improve the efficiency of your ensemble learning projects and help you build better models faster.

3.4. Deployment and Monitoring

Neptune IA can also help you deploy and monitor your ensemble models in production. You can use Neptune IA to track the performance of your models in real-time, identify potential issues, and trigger retraining when necessary.

This ensures that your ensemble models continue to perform optimally over time.

4. Real-World Applications of Ensemble Learning Neptune IA

Ensemble learning techniques are widely used in various industries to solve complex prediction problems. Here are some notable examples:

4.1. Finance

- Credit Risk Assessment: Ensemble models can be used to predict the likelihood of loan defaults by combining various financial and demographic data.

- Fraud Detection: Ensemble methods can identify fraudulent transactions by analyzing patterns in transaction data and combining the predictions of multiple fraud detection models.

- Algorithmic Trading: Ensemble models can be used to make trading decisions by combining predictions from various technical indicators and market sentiment analysis models.

4.2. Healthcare

- Disease Diagnosis: Ensemble models can assist in diagnosing diseases by combining the predictions of multiple diagnostic tests and clinical observations.

- Drug Discovery: Ensemble methods can be used to predict the effectiveness of drug candidates by combining data from various sources, such as genomic data, chemical structures, and clinical trial results.

- Patient Outcome Prediction: Ensemble models can predict patient outcomes by analyzing patient data and combining the predictions of multiple predictive models.

4.3. Marketing

- Customer Churn Prediction: Ensemble models can predict which customers are likely to churn by analyzing customer behavior and demographic data.

- Personalized Recommendations: Ensemble methods can provide personalized recommendations by combining the predictions of multiple recommendation models.

- Marketing Campaign Optimization: Ensemble models can optimize marketing campaigns by predicting the effectiveness of different marketing strategies and targeting the most receptive customers.

5. Best Practices for Ensemble Learning

To maximize the effectiveness of ensemble learning, consider these best practices:

- Data Preparation: Clean and preprocess your data thoroughly. Handle missing values, outliers, and inconsistencies to ensure data quality.

- Feature Engineering: Create relevant and informative features that capture the underlying patterns in the data. Feature selection techniques can help reduce dimensionality and improve model performance.

- Model Selection: Choose base learners that are diverse and complementary. Experiment with different types of algorithms and hyperparameters.

- Ensemble Combination: Select an appropriate ensemble combination method based on the characteristics of your data and the base learners. Consider techniques like averaging, voting, and stacking.

- Hyperparameter Tuning: Optimize the hyperparameters of the ensemble method and the base learners using techniques like cross-validation and grid search.

- Evaluation: Evaluate the performance of your ensemble model using appropriate metrics and compare it to the performance of individual base learners.

- Regularization: Apply regularization techniques to prevent overfitting, especially when using complex base learners like neural networks.

- Monitoring: Continuously monitor the performance of your ensemble model in production and retrain it as needed to maintain accuracy.
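As an illustration of the hyperparameter tuning and evaluation practices listed above, here is a minimal sketch of grid search with cross-validation. It assumes a toy base learner (k-nearest-neighbor regression on one-dimensional inputs) and an invented dataset; the same pattern applies to tuning ensemble-level settings such as the number of learners.

```python
from statistics import mean

def knn_predict(train, x, k):
    """Average the y values of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return mean(y for _, y in nearest)

def cv_error(points, k, n_folds=4):
    """Mean squared error of k-NN estimated with n-fold cross-validation."""
    errors = []
    for fold in range(n_folds):
        train = [p for i, p in enumerate(points) if i % n_folds != fold]
        held_out = [p for i, p in enumerate(points) if i % n_folds == fold]
        errors.extend((knn_predict(train, x, k) - y) ** 2 for x, y in held_out)
    return mean(errors)

def grid_search(points, candidate_ks):
    """Return the candidate k with the lowest cross-validated error."""
    return min(candidate_ks, key=lambda k: cv_error(points, k))

# Toy data: a linear trend with an alternating offset standing in for noise.
points = [(x, 0.5 * x + (0.4 if x % 2 else -0.4)) for x in range(12)]
best_k = grid_search(points, candidate_ks=[1, 2, 3, 5])
print(best_k, cv_error(points, best_k))
```

Each candidate is scored only on data it was not trained on, so the selected value reflects expected performance on unseen data rather than fit to the training set.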

6. The Role of Data Quality and Feature Engineering

High-quality data is the foundation of any successful machine-learning project, and ensemble learning is no exception. Ensure your dataset is clean, well-structured, and representative of the problem you’re trying to solve. Feature engineering plays a crucial role in extracting meaningful information from the raw data, enabling the base learners to make accurate predictions. Techniques such as feature scaling, normalization, and creation of interaction terms can significantly improve the performance of ensemble models.

7. Addressing Bias and Variance in Ensemble Learning

Bias and variance are two common sources of error in machine learning models. Bias is the systematic error that arises when a model is too simple to capture the true pattern (underfitting), while variance is the sensitivity of a model to small variations in the training data (overfitting). Different ensemble methods target different sources of error:

- Reducing Bias: Boosting algorithms are particularly effective at reducing bias by sequentially training base learners to correct the errors of their predecessors.

- Reducing Variance: Bagging algorithms are effective at reducing variance by training multiple base learners on different subsets of the training data and averaging their predictions.
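The variance-reduction effect of averaging can be checked with a small simulation. The sketch assumes an idealized case in which every model predicts the true value plus independent Gaussian noise; the true value, noise level, and ensemble size are arbitrary choices for illustration.

```python
import random
from statistics import mean, pvariance

def simulate(n_models, n_trials=2000, noise=1.0, seed=0):
    """Compare the variance of one noisy model with an average of n models.

    Each hypothetical model predicts the true value 5.0 plus independent
    Gaussian noise, which is an idealized assumption.
    """
    rng = random.Random(seed)
    single, averaged = [], []
    for _ in range(n_trials):
        preds = [5.0 + rng.gauss(0, noise) for _ in range(n_models)]
        single.append(preds[0])
        averaged.append(mean(preds))
    return pvariance(single), pvariance(averaged)

var_single, var_bagged = simulate(n_models=25)
print(var_single, var_bagged)
```

With independent errors the variance of the average shrinks roughly in proportion to the number of models. Bagged learners share training data and are therefore correlated, so the reduction in practice is smaller.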

8. Ensemble Learning and Explainable AI (XAI)

As machine learning models become more complex, it’s increasingly important to understand how they make predictions. Explainable AI (XAI) techniques can help you interpret the decisions of ensemble models and gain insights into the underlying relationships in the data.

- Feature Importance: Ensemble methods like Random Forest and Gradient Boosting provide insights into feature importance, indicating which features have the most significant impact on the model’s predictions.

- SHAP Values: SHAP (SHapley Additive exPlanations) values can be used to explain the contribution of each feature to the prediction of an individual instance.

- LIME: LIME (Local Interpretable Model-agnostic Explanations) can provide local explanations for the predictions of any ensemble model by approximating it with a linear model in the vicinity of a specific instance.
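To show what a Shapley-value explanation computes, the sketch below evaluates exact Shapley values by enumerating every feature ordering. This brute-force approach is only feasible for a handful of features and is not how SHAP tooling works internally, which relies on faster approximations and model-specific algorithms. The `toy_ensemble` function is a hypothetical stand-in for a trained ensemble.

```python
from itertools import permutations
from math import factorial

def shapley_values(model, instance, baseline):
    """Exact Shapley values by averaging marginal contributions over all
    feature orderings. Features not yet 'added' take their baseline value."""
    n = len(instance)
    contributions = [0.0] * n
    for order in permutations(range(n)):
        current = list(baseline)
        previous = model(current)
        for feature in order:
            current[feature] = instance[feature]
            value = model(current)
            contributions[feature] += value - previous
            previous = value
    return [c / factorial(n) for c in contributions]

def toy_ensemble(x):
    """Hypothetical two-model ensemble: the average of two simple models."""
    model_a = 2 * x[0] + x[1]
    model_b = x[0] * x[2]
    return (model_a + model_b) / 2

instance, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = shapley_values(toy_ensemble, instance, baseline)
print(phi, sum(phi))
```

The per-feature contributions add up to the difference between the prediction for the instance and the prediction for the baseline, and the interaction between the first and third features is shared equally between them.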

9. The Future of Ensemble Learning

Ensemble learning continues to evolve as researchers explore new techniques and algorithms. Some emerging trends in ensemble learning include:

- Deep Ensembles: Combining several independently trained deep neural networks to improve accuracy, reduce overfitting, and obtain better estimates of predictive uncertainty.

- Multi-Objective Ensemble Learning: Optimizing ensemble models for multiple objectives, such as accuracy, diversity, and interpretability.

- Online Ensemble Learning: Training ensemble models incrementally as new data becomes available, without requiring retraining from scratch.

- Automated Machine Learning (AutoML) for Ensemble Learning: Using AutoML tools to automate the process of selecting base learners, optimizing hyperparameters, and combining models in ensemble methods.

10. FAQs About Ensemble Learning Neptune IA

- What is ensemble learning Neptune IA?

Ensemble learning Neptune IA is a machine learning technique that combines multiple individual models to create a stronger, more accurate predictive model.

- What are the benefits of ensemble learning?

Improved accuracy, enhanced robustness, better generalization, and increased stability.

- What are the main types of ensemble learning methods?

Bagging, boosting, and stacking.

- How does bagging work?

Training multiple base learners on different subsets of the training data created through bootstrapping.

- What is Random Forest?

A bagging-based ensemble method that uses decision trees as base learners with random feature selection.

- How does boosting work?

Sequentially training base learners, with each learner focusing on correcting the errors of its predecessors.

- What is AdaBoost?

An early boosting algorithm that adjusts the weights of training data points after each iteration.

- What is Gradient Boosting?

A boosting algorithm that builds an ensemble of decision trees in a stage-wise manner to predict residuals.

- What are XGBoost, LightGBM, and CatBoost?

Advanced implementations of gradient boosting with optimizations for speed, accuracy, and scalability.

- How does stacking work?

Combining the predictions of multiple base learners using a meta-learner.

Ensemble learning offers a powerful approach to building high-performance predictive models by leveraging the diversity and strengths of multiple individual models. By understanding the different types of ensemble methods, following the best practices above, and tracking your experiments with a tool such as Neptune IA, you can get considerably more out of this technique in your machine learning projects.
