Unlock the full potential of Gemma models by mastering the art of learning rate optimization. At LEARNS.EDU.VN, we empower you with the knowledge and resources to fine-tune your models effectively and achieve superior results, providing clear strategies for academic and professional growth. Explore advanced techniques and real-world applications to elevate your machine learning skills.

1. Introduction to Gemma and Learning Rate

Gemma, a family of open models developed by Google, represents a significant advancement in the field of artificial intelligence. These models, designed for a wide range of applications from text generation to code completion, offer developers and researchers powerful tools for innovation. However, like all machine learning models, the performance of Gemma is heavily influenced by the optimization process, and a critical component of this process is the learning rate.

The learning rate is a hyperparameter that dictates the size of the steps taken during the optimization process. In simple terms, it determines how quickly or slowly a model adjusts its internal parameters (weights) in response to the feedback it receives from the training data. A well-tuned learning rate can lead to faster convergence, improved accuracy, and ultimately, a more effective model. Conversely, a poorly chosen learning rate can result in slow training, oscillations around the optimal solution, or even divergence, where the model’s performance degrades over time. Understanding and optimizing the learning rate is therefore paramount to harnessing the full capabilities of Gemma.

1.1. Why Learning Rate Matters in Gemma Models

Gemma models, with their intricate architectures and vast parameter spaces, are particularly sensitive to the choice of learning rate. The right learning rate ensures that the model efficiently navigates the complex landscape of the loss function, converging to a minimum that represents the best possible performance on the given task.

- Convergence Speed: An appropriately chosen learning rate accelerates the training process, enabling the model to learn from the data more quickly.

- Accuracy: Optimal learning rates facilitate the model’s ability to fine-tune its parameters, resulting in higher accuracy and better generalization to unseen data.

- Stability: A carefully selected learning rate prevents the model from overshooting the optimal solution, ensuring stability and consistent performance.

1.2. Challenges in Setting the Learning Rate

Despite its importance, setting the learning rate is not a straightforward task. Several factors complicate the process:

- Model Architecture: Different model architectures may require different learning rates. Gemma’s specific architecture, with its unique layers and connections, may necessitate a learning rate that differs from those used in other models.

- Dataset Characteristics: The nature of the training data, including its size, distribution, and complexity, can influence the optimal learning rate. Datasets with high variability produce noisier gradients and may require smaller learning rates to keep training stable.

- Optimization Algorithm: The choice of optimization algorithm (e.g., Adam, SGD) also impacts the learning rate. Different algorithms have different sensitivities to the learning rate, and some may even adapt the learning rate dynamically during training.

Addressing these challenges requires a systematic approach to learning rate optimization, which may involve experimentation, analysis, and the use of advanced techniques such as learning rate schedules and adaptive learning rate methods.

2. Core Concepts of Learning Rate Optimization

To effectively optimize the learning rate for Gemma models, it’s crucial to grasp the core concepts that underpin this process. This section delves into the fundamentals of learning rate optimization, covering essential techniques and strategies.

2.1. Understanding Learning Rate and Gradient Descent

At the heart of learning rate optimization lies the concept of gradient descent. Gradient descent is an iterative optimization algorithm used to find the minimum of a function, in this case, the loss function of a machine learning model. The loss function quantifies the difference between the model’s predictions and the actual values in the training data. The goal of gradient descent is to adjust the model’s parameters in a way that minimizes this loss.

The learning rate determines the step size taken in the direction of the negative gradient during each iteration of gradient descent. Mathematically, the update rule for a model parameter w can be expressed as:

w = w - η ∇L(w)

Where:

- w is the model parameter

- η is the learning rate

- ∇L(w) is the gradient of the loss function with respect to w

A large learning rate allows for faster progress but may cause the algorithm to overshoot the minimum. A small learning rate ensures more precise steps but can result in slow convergence.
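To make the trade-off concrete, here is a minimal sketch of the update rule on a toy one-parameter loss, L(w) = (w - 3)^2. The loss, the starting point, and the three learning rates are illustrative assumptions, not values recommended for Gemma.

```python
def gradient_descent(lr, steps=50, w0=0.0):
    """Minimise the toy loss L(w) = (w - 3)^2 with plain gradient descent."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)  # dL/dw for the toy loss
        w = w - lr * grad       # w = w - eta * grad
    return w


small = gradient_descent(lr=0.01)      # still far from the minimum at w = 3
good = gradient_descent(lr=0.1)        # ends very close to w = 3
too_large = gradient_descent(lr=1.1)   # every step overshoots further: divergence

print(small, good, too_large)
```

With the same number of steps, the small learning rate has not yet arrived, the moderate one has converged, and the large one has moved far away from the minimum.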

2.2. Fixed vs. Adaptive Learning Rates

Learning rates can be broadly classified into two categories: fixed and adaptive.

2.2.1. Fixed Learning Rates

Fixed learning rates remain constant throughout the training process. While simple to implement, they can be challenging to tune effectively. A learning rate that works well at the beginning of training may not be suitable later on, as the model approaches the minimum of the loss function.

2.2.2. Adaptive Learning Rates

Adaptive learning rates, on the other hand, adjust dynamically during training. These methods aim to automatically adapt the learning rate based on the progress of the optimization process. Common adaptive learning rate algorithms include:

- Adam: An algorithm that adapts the learning rates of each parameter by using estimates of the first and second moments of the gradients.

- Adagrad: An algorithm that adapts the learning rate to each parameter, with infrequently updated parameters receiving larger updates.

- RMSprop: An algorithm that adapts the learning rate based on the moving average of squared gradients.

Adaptive learning rate methods often perform better than fixed learning rates, as they can automatically adjust to the varying characteristics of the loss landscape.

2.3. Learning Rate Schedules

Learning rate schedules define how the learning rate changes over time during training. These schedules can be used to fine-tune the learning rate, allowing for faster convergence and improved performance. Common learning rate schedules include:

- Step Decay: The learning rate is reduced by a factor after a certain number of epochs.

- Exponential Decay: The learning rate is reduced exponentially over time.

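- Cosine Annealing: The learning rate follows a cosine curve, decreasing smoothly from its initial value toward a minimum. In the warm-restart variant, it is periodically reset to the initial value and annealed again.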

The choice of learning rate schedule depends on the specific characteristics of the model and the dataset. Experimentation is often required to determine the most effective schedule.
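The three schedules listed above can be sketched as plain functions of the epoch number. The function names and default values (drop factor, decay rate, and so on) are assumptions chosen for illustration; deep learning frameworks ship their own scheduler classes with different signatures.

```python
import math


def step_decay(base_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the learning rate by `drop` every `epochs_per_drop` epochs."""
    return base_lr * drop ** (epoch // epochs_per_drop)


def exponential_decay(base_lr, epoch, decay_rate=0.05):
    """Shrink the learning rate continuously: base_lr * exp(-decay_rate * epoch)."""
    return base_lr * math.exp(-decay_rate * epoch)


def cosine_annealing(base_lr, epoch, total_epochs, min_lr=0.0):
    """Follow half a cosine wave from base_lr down to min_lr over total_epochs."""
    progress = min(epoch, total_epochs) / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for epoch in (0, 10, 50, 100):
    print(epoch,
          step_decay(0.1, epoch),
          exponential_decay(0.1, epoch),
          cosine_annealing(0.1, epoch, total_epochs=100))
```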

3. Practical Techniques for Gemma Learning Rate Optimization

Now that we’ve covered the core concepts, let’s delve into practical techniques for optimizing the learning rate for Gemma models. These techniques provide a roadmap for achieving optimal performance.

3.1. Grid Search and Random Search

Grid search and random search are two common methods for hyperparameter optimization, including learning rate optimization.

3.1.1. Grid Search

Grid search involves defining a grid of possible learning rate values and then training the model with each value (or, when several hyperparameters are tuned together, with each combination of values). The setting that yields the best performance on a validation set is selected as the optimal learning rate.

While grid search is exhaustive, it can be computationally expensive, especially when dealing with a large number of hyperparameters or a large search space.

3.1.2. Random Search

Random search, on the other hand, involves randomly sampling learning rate values from a predefined distribution, typically one that is uniform on a logarithmic scale. This method is often more efficient than grid search when several hyperparameters are tuned together, because each trial tests a new value of every hyperparameter instead of revisiting the same few grid points.

Both grid search and random search can be effective for finding a good learning rate, but they require careful consideration of the search space and the computational resources available.
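As a rough sketch of random search, the snippet below samples learning rates uniformly on a log scale and picks the one with the lowest validation loss. The search bounds and `toy_validation_loss` are stand-ins invented for this example; in practice that function would train the model briefly and return a real validation metric.

```python
import math
import random


def sample_learning_rates(n, low=1e-6, high=1e-3, seed=0):
    """Draw n learning rates uniformly on a log scale between low and high."""
    rng = random.Random(seed)
    lo, hi = math.log10(low), math.log10(high)
    return [10 ** rng.uniform(lo, hi) for _ in range(n)]


def toy_validation_loss(lr):
    """Hypothetical stand-in for 'train with lr, then evaluate on validation data'."""
    return (math.log10(lr) + 4.5) ** 2


candidates = sample_learning_rates(10)
best_lr = min(candidates, key=toy_validation_loss)
print(best_lr)
```

Sampling in log space matters because useful learning rates usually span several orders of magnitude; sampling uniformly in linear space would concentrate almost all trials near the upper bound.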

3.2. Learning Rate Range Test

The learning rate range test is a technique for identifying a suitable range of learning rates for a given model and dataset. This method involves training the model for a small number of epochs while gradually increasing the learning rate. The loss is then plotted against the learning rate, and the optimal learning rate range is identified as the region where the loss decreases most rapidly.

The learning rate range test can provide valuable insights into the sensitivity of the model to the learning rate and can help narrow down the search space for further optimization.
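The procedure can be sketched on a toy problem as follows. The learning rate grows geometrically at every step, the loss is recorded, and the run stops once the loss blows up. The toy loss, the bounds, and the "steepest drop" heuristic in `suggest_lr` are assumptions for illustration; with a real model, `loss_and_grad` would be one mini-batch forward and backward pass.

```python
def lr_range_test(loss_and_grad, w0, min_lr=1e-4, max_lr=10.0,
                  num_steps=100, diverge_factor=4.0):
    """Increase the learning rate geometrically and record the loss at each step."""
    growth = (max_lr / min_lr) ** (1.0 / (num_steps - 1))
    w, lr = w0, min_lr
    lrs, losses = [], []
    best = float("inf")
    for _ in range(num_steps):
        loss, grad = loss_and_grad(w)
        lrs.append(lr)
        losses.append(loss)
        if loss > diverge_factor * best:  # loss has blown up: stop the sweep
            break
        best = min(best, loss)
        w -= lr * grad
        lr *= growth
    return lrs, losses


def suggest_lr(lrs, losses):
    """Return the learning rate at which the loss dropped the most in one step."""
    drops = [losses[i] - losses[i + 1] for i in range(len(losses) - 1)]
    steepest = max(range(len(drops)), key=drops.__getitem__)
    return lrs[steepest]


def toy_loss_and_grad(w):
    """Toy loss L(w) = (w - 3)^2 and its gradient (stand-in for a real model)."""
    return (w - 3.0) ** 2, 2.0 * (w - 3.0)


lrs, losses = lr_range_test(toy_loss_and_grad, w0=0.0)
print(suggest_lr(lrs, losses))
```

In practice the recorded curve is usually plotted and inspected by eye; the automatic suggestion is only a starting point.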

3.3. Cyclical Learning Rates

Cyclical learning rates (CLR) involve varying the learning rate between a minimum and maximum bound during training. This method can help the model escape local optima and converge to a better solution.

One popular CLR method is the triangular learning rate schedule, where the learning rate increases linearly from the minimum to the maximum bound and then decreases linearly back to the minimum.

CLR can be particularly effective for training deep neural networks, as it allows the model to explore a wider range of the loss landscape.
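The triangular schedule described above can be written as a short function of the training step. The parameter names and the example bounds are assumptions for illustration.

```python
def triangular_lr(step, min_lr, max_lr, half_cycle):
    """Rise linearly from min_lr to max_lr over half_cycle steps, then fall back."""
    cycle_pos = step % (2 * half_cycle)             # position inside the current cycle
    frac = 1.0 - abs(cycle_pos / half_cycle - 1.0)  # 0 at the ends, 1 at the peak
    return min_lr + (max_lr - min_lr) * frac


for step in (0, 50, 100, 150, 200):
    print(step, triangular_lr(step, min_lr=1e-5, max_lr=1e-4, half_cycle=100))
```

The bounds themselves are typically taken from a learning rate range test.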

3.4. Adaptive Learning Rate Methods in Detail

As mentioned earlier, adaptive learning rate methods automatically adjust the learning rate during training. Let’s take a closer look at some of the most popular adaptive learning rate algorithms:

3.4.1. Adam

Adam (Adaptive Moment Estimation) is one of the most widely used adaptive learning rate algorithms. It combines the ideas of momentum and RMSprop to adapt the learning rates of each parameter.

Adam maintains two moving averages:

- The first moment (mean) of the gradients

- The second moment (uncentered variance) of the gradients

These moving averages are used to compute an adaptive learning rate for each parameter. Adam is generally robust and performs well across a wide range of tasks.
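A single Adam update for one scalar parameter can be sketched as below, using the commonly cited default coefficients (beta1 = 0.9, beta2 = 0.999). The toy loss and the learning rate of 0.01 are assumptions for illustration; real training would rely on a framework's optimizer.

```python
import math


def adam_step(w, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad         # moving average of gradients
    v = beta2 * v + (1 - beta2) * grad * grad  # moving average of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction for the zero init
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


# Minimise the toy loss L(w) = (w - 3)^2.
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 2001):
    grad = 2.0 * (w - 3.0)
    w, m, v = adam_step(w, grad, m, v, t, lr=0.01)
print(w)
```

Because the update divides by the root of the second moment, the size of the very first step is roughly `lr` regardless of how large the gradient is, which is one reason Adam is less sensitive to gradient scale than plain gradient descent.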

3.4.2. Adagrad

Adagrad (Adaptive Gradient Algorithm) adapts the learning rate to each parameter, with infrequently updated parameters receiving larger updates. This method is well-suited for sparse data, where some parameters are updated more frequently than others.

However, Adagrad can suffer from diminishing learning rates: because it accumulates squared gradients over the whole run, the effective learning rate keeps shrinking and can become so small that training stalls before the model has converged.

3.4.3. RMSprop

RMSprop (Root Mean Square Propagation) is another adaptive learning rate algorithm that addresses the diminishing learning rate problem of Adagrad. RMSprop uses a moving average of squared gradients to adapt the learning rate, which helps to prevent the learning rate from becoming too small.

RMSprop is generally more robust than Adagrad and is often a good choice for training deep neural networks.
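The difference between the two methods shows up clearly when the effective per-parameter step size is tracked over time. The sketch below feeds both accumulators the same constant gradient; the constant gradient, base learning rate, and decay of 0.9 are assumptions for illustration.

```python
import math


def adagrad_effective_lrs(grads, lr=0.1, eps=1e-8):
    """Effective step size under Adagrad: lr / sqrt(sum of all squared gradients)."""
    acc, out = 0.0, []
    for g in grads:
        acc += g * g
        out.append(lr / (math.sqrt(acc) + eps))
    return out


def rmsprop_effective_lrs(grads, lr=0.1, decay=0.9, eps=1e-8):
    """Effective step size under RMSprop: lr / sqrt(moving average of squared gradients)."""
    avg, out = 0.0, []
    for g in grads:
        avg = decay * avg + (1 - decay) * g * g
        out.append(lr / (math.sqrt(avg) + eps))
    return out


grads = [1.0] * 1000
adagrad = adagrad_effective_lrs(grads)
rmsprop = rmsprop_effective_lrs(grads)
print(adagrad[-1], rmsprop[-1])
```

Under Adagrad the step size keeps shrinking in proportion to 1 / sqrt(number of steps), while under RMSprop it settles near the base learning rate because old gradients are forgotten.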

4. Advanced Strategies and Considerations

Beyond the practical techniques discussed above, several advanced strategies and considerations can further enhance the optimization of learning rates for Gemma models.

4.1. Warmup Strategies

Warmup is a technique where the learning rate is gradually increased from a small initial value to the desired learning rate over a certain number of steps or epochs. This strategy can help stabilize training and prevent the model from diverging, especially at the beginning of training when the parameters are far from the optimal values.

Warmup is often used in conjunction with other learning rate schedules, such as cosine annealing or step decay.
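A linear warmup followed by cosine decay can be sketched as a single function of the step count. The parameter names and the example values (peak learning rate, 100 warmup steps, 1,000 total steps) are assumptions for illustration.

```python
import math


def warmup_cosine_lr(step, peak_lr, warmup_steps, total_steps, min_lr=0.0):
    """Linear warmup to peak_lr, then cosine decay to min_lr by total_steps."""
    if step < warmup_steps:
        # Ramp up linearly; the last warmup step reaches peak_lr exactly.
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for step in (0, 99, 100, 550, 1000):
    print(step, warmup_cosine_lr(step, peak_lr=2e-5, warmup_steps=100, total_steps=1000))
```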

4.2. Learning Rate Annealing with Restarts

Learning rate annealing with restarts involves periodically resetting the learning rate to its initial value during training. This method can help the model escape local optima and converge to a better solution.

One popular annealing with restarts method is stochastic gradient descent with warm restarts (SGDR), where the learning rate is annealed using a cosine function and then periodically reset to its initial value.
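The sketch below shows one way to compute an SGDR-style schedule: cosine annealing within each cycle, a reset to the maximum at the start of the next, and cycles that grow by a constant factor. The function name, cycle length, and multiplier are assumptions for illustration.

```python
import math


def sgdr_lr(step, max_lr, min_lr=0.0, first_cycle=100, cycle_mult=2):
    """Cosine annealing with warm restarts; each cycle is cycle_mult times longer."""
    cycle_len, start = first_cycle, 0
    while step >= start + cycle_len:  # find the cycle that contains this step
        start += cycle_len
        cycle_len *= cycle_mult
    progress = (step - start) / cycle_len
    return min_lr + 0.5 * (max_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for step in (0, 99, 100, 200, 299, 300):
    print(step, sgdr_lr(step, max_lr=1e-4))
```

With these settings the restarts happen at steps 100 and 300, so the learning rate jumps back to its maximum there and then decays over a cycle twice as long as the previous one.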

4.3. Batch Size and Learning Rate Scaling

The batch size, which is the number of training examples used in each iteration of gradient descent, can also impact the optimal learning rate. Larger batch sizes can lead to more stable gradients but may require larger learning rates.

When increasing the batch size, it’s often necessary to scale the learning rate accordingly to maintain stable training. A common rule of thumb is to scale the learning rate linearly with the batch size: if the batch size is doubled, the learning rate is doubled as well. Treat this as a starting point rather than a guarantee; the rule tends to break down at very large batch sizes, and the scaled value should still be validated.
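The arithmetic of the linear rule is simple enough to capture in a helper. The sketch also includes a square-root variant, a heuristic that is sometimes preferred with adaptive optimizers; it is not mentioned in the text above and is included only as an assumption for comparison. The base values are illustrative.

```python
import math


def scale_learning_rate(base_lr, base_batch_size, new_batch_size, rule="linear"):
    """Rescale a learning rate tuned at base_batch_size for a new batch size."""
    ratio = new_batch_size / base_batch_size
    if rule == "linear":
        return base_lr * ratio             # double the batch, double the rate
    if rule == "sqrt":
        return base_lr * math.sqrt(ratio)  # gentler alternative heuristic
    raise ValueError(f"unknown rule: {rule}")


print(scale_learning_rate(2e-5, 32, 64))           # linear rule
print(scale_learning_rate(2e-5, 32, 128, "sqrt"))  # square-root rule
```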

4.4. Transfer Learning and Fine-Tuning

When using transfer learning, where a model is pre-trained on a large dataset and then fine-tuned on a smaller dataset, the learning rate should be carefully chosen. The learning rate for fine-tuning is typically smaller than the learning rate used for pre-training, as the model is already close to the optimal solution.

It’s also common to use different learning rates for different layers of the model during fine-tuning, with the earlier layers receiving smaller learning rates than the later layers.
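One simple way to assign smaller learning rates to earlier layers is a geometric decay from the top of the network downwards. The decay factor, the layer count, and the function name below are assumptions for illustration; the resulting values would normally be passed to the optimizer as per-layer parameter groups.

```python
def layerwise_learning_rates(num_layers, top_lr, decay=0.9):
    """Learning rate per layer: index 0 is the earliest layer, the last gets top_lr."""
    return [top_lr * decay ** (num_layers - 1 - i) for i in range(num_layers)]


rates = layerwise_learning_rates(num_layers=4, top_lr=2e-5)
for layer, lr in enumerate(rates):
    print(layer, lr)
```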

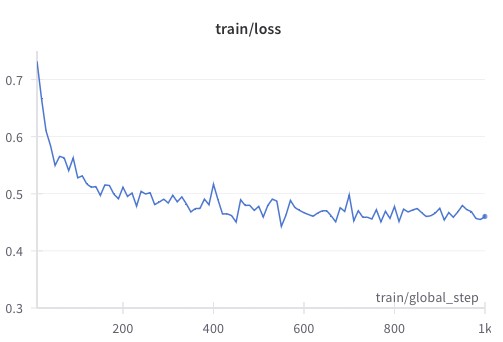

4.5. Monitoring and Visualization

Monitoring and visualizing the training process is crucial for identifying potential problems and fine-tuning the learning rate. Key metrics to monitor include:

- Loss

- Accuracy

- Gradient Norm

Visualizing these metrics can help identify issues such as:

- Divergence: The loss increases over time.

- Oscillations: The loss fluctuates wildly.

- Plateaus: The loss stops decreasing.

By carefully monitoring and visualizing the training process, you can gain valuable insights into the behavior of the model and adjust the learning rate accordingly.
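The three patterns can also be flagged automatically from the recorded loss history. The heuristic below compares the most recent window of losses with the one before it; the window size and all thresholds are arbitrary assumptions that would need tuning for a real run, and the function assumes strictly positive loss values.

```python
from statistics import mean


def diagnose_loss(losses, window=20, diverge_tol=0.05,
                  plateau_tol=0.01, oscillation_tol=0.2):
    """Classify recent loss behaviour by comparing the last two windows."""
    if len(losses) < 2 * window:
        return "not enough data"
    prev, recent = losses[-2 * window:-window], losses[-window:]
    prev_mean, recent_mean = mean(prev), mean(recent)
    rel_change = (recent_mean - prev_mean) / abs(prev_mean)
    spread = (max(recent) - min(recent)) / abs(recent_mean)
    # Count how often the loss switches between rising and falling.
    diffs = [b - a for a, b in zip(recent, recent[1:])]
    flips = sum(1 for d1, d2 in zip(diffs, diffs[1:]) if d1 * d2 < 0)
    flip_rate = flips / max(1, len(diffs) - 1)

    if rel_change > diverge_tol:
        return "divergence"   # average loss is rising
    if flip_rate > 0.5 and spread > oscillation_tol:
        return "oscillation"  # loss swings up and down by a large amount
    if abs(rel_change) < plateau_tol:
        return "plateau"      # average loss has stopped moving
    return "decreasing"


print(diagnose_loss([2.0 * 0.95 ** i for i in range(40)]))
```

A check like this is a complement to plotting the curves, not a replacement; mini-batch noise can trigger false alarms with small windows.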

5. Case Studies: Gemma and Learning Rate in Action

To illustrate the practical application of learning rate optimization for Gemma models, let’s examine a few case studies.

5.1. Text Generation with Gemma: Optimizing for Fluency

In this case study, we explore the use of Gemma for text generation and focus on optimizing the learning rate to improve the fluency and coherence of the generated text.

- Model: Gemma-2B

- Dataset: A collection of articles from LEARNS.EDU.VN covering various educational topics.

- Optimization Technique: Cosine annealing with warm restarts.

We found that using a cosine annealing schedule with warm restarts helped the model escape local optima and generate more fluent and coherent text. The optimal learning rate range was determined using the learning rate range test.

5.2. Code Completion with Gemma: Balancing Accuracy and Speed

In this case study, we investigate the use of Gemma for code completion and focus on optimizing the learning rate to balance accuracy and speed.

- Model: Gemma-7B

- Dataset: A large corpus of Python code.

- Optimization Technique: Adam with a step decay schedule.

We found that using Adam with a step decay schedule allowed the model to converge quickly while maintaining high accuracy. The step size and decay rate were tuned using grid search.

5.3. Sentiment Analysis with Gemma: Fine-Tuning for Specific Domains

In this case study, we examine the use of Gemma for sentiment analysis and focus on fine-tuning the model for specific domains.

- Model: Gemma-2B, pre-trained on a general-purpose dataset.

- Dataset: A collection of customer reviews from the education sector.

- Optimization Technique: Transfer learning with a small learning rate.

We found that using transfer learning with a small learning rate allowed the model to quickly adapt to the specific characteristics of the education sector reviews. Different learning rates were used for different layers of the model, with the earlier layers receiving smaller learning rates than the later layers.

6. Tools and Resources for Learning Rate Optimization

Several tools and resources can aid in the process of learning rate optimization for Gemma models.

6.1. TensorFlow and Keras

TensorFlow and Keras are popular deep learning frameworks that provide built-in support for various learning rate optimization techniques, including:

- Adaptive learning rate algorithms (Adam, Adagrad, RMSprop)

- Learning rate schedules (step decay, exponential decay, cosine annealing)

- Warmup strategies

These frameworks also offer tools for monitoring and visualizing the training process, making it easier to fine-tune the learning rate.

6.2. PyTorch

PyTorch is another widely used deep learning framework that provides similar support for learning rate optimization. PyTorch offers a flexible and dynamic environment for experimenting with different learning rate techniques.

6.3. Hyperparameter Optimization Libraries

Several hyperparameter optimization libraries can automate the process of finding the optimal learning rate, including:

- Hyperopt: A Python library for Bayesian optimization.

- Optuna: A Python library for black-box optimization.

- Ray Tune: A distributed hyperparameter optimization framework.

These libraries can be used to efficiently search the learning rate space and find the best performing value.

6.4. Online Courses and Tutorials on LEARNS.EDU.VN

LEARNS.EDU.VN offers a wealth of online courses and tutorials on machine learning, deep learning, and learning rate optimization. These resources can provide you with the knowledge and skills you need to effectively optimize the learning rate for Gemma models.

7. Potential Pitfalls and How to Avoid Them

While optimizing the learning rate can significantly improve the performance of Gemma models, several potential pitfalls can hinder the process.

7.1. Overfitting

Overfitting occurs when the model learns the training data too well and fails to generalize to unseen data. When fine-tuning on a small dataset, a learning rate that is too large can exacerbate overfitting, as the model may quickly memorize the training examples and overwrite what it learned during pre-training.

To avoid overfitting, it’s important to:

- Use a validation set to monitor the model’s performance on unseen data.

- Use regularization techniques, such as L1 or L2 regularization.

- Use data augmentation to increase the size and diversity of the training data.

- Reduce the learning rate.

7.2. Divergence

Divergence occurs when the loss increases over time, indicating that the model is not learning effectively. A large learning rate can cause divergence, as the model may overshoot the optimal solution and jump to a region of high loss.

To avoid divergence, it’s important to:

- Use a smaller learning rate.

- Use a warmup strategy to gradually increase the learning rate.

- Check the gradient norm to ensure that it’s not too large.

- Use gradient clipping to limit the size of the gradients.
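Gradient clipping by global norm, the last item above, can be sketched in a few lines. The gradients are represented as a flat list of floats for simplicity, and the threshold of 1.0 is an assumption for illustration; frameworks provide their own clipping utilities that operate on tensors.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their L2 norm is at most max_norm; also return the norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm or total_norm == 0.0:
        return list(grads), total_norm  # already within the limit
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm


clipped, norm = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print(clipped, norm)
```

Returning the unclipped norm alongside the clipped gradients makes it easy to log the gradient norm at the same time, which covers the monitoring item in the same list.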

7.3. Slow Convergence

Slow convergence occurs when the model takes a long time to reach the optimal solution. A small learning rate can cause slow convergence, as the model may take very small steps towards the minimum of the loss function.

To avoid slow convergence, it’s important to:

- Use a larger learning rate.

- Use an adaptive learning rate algorithm, such as Adam or RMSprop.

- Use a learning rate schedule that starts from a larger learning rate and gradually decreases it over time.

7.4. Getting Stuck in Local Optima

Local optima are points in the loss landscape where the loss is lower than at all nearby points but still higher than at the global minimum. The model may get stuck in one of them, preventing it from reaching the best possible solution.

To avoid getting stuck in local optima, it’s important to:

- Use a cyclical learning rate.

- Use learning rate annealing with restarts.

- Use a smaller batch size, since the added gradient noise can help the model move out of poor regions.

8. The Future of Learning Rate Optimization

The field of learning rate optimization is constantly evolving, with new techniques and algorithms being developed all the time. Some potential future directions include:

8.1. Automated Learning Rate Optimization

Automated learning rate optimization involves using machine learning techniques to automatically tune the learning rate during training. This approach can eliminate the need for manual tuning and can potentially lead to better performance.

8.2. Meta-Learning for Learning Rate Optimization

Meta-learning, or learning to learn, involves training a model to learn how to optimize other models. This approach can be used to develop learning rate optimization algorithms that are more robust and generalize better to new tasks and datasets.

8.3. Reinforcement Learning for Learning Rate Optimization

Reinforcement learning can be used to train an agent to dynamically adjust the learning rate during training. The agent receives feedback in the form of the model’s performance and learns to adjust the learning rate to maximize performance.

8.4. Integration with Neural Architecture Search

Neural architecture search (NAS) involves automatically designing the architecture of a neural network. Integrating learning rate optimization with NAS can lead to even better performance, as the learning rate can be tuned specifically for the chosen architecture.

9. Conclusion: Mastering Gemma Learning Rate

Optimizing the learning rate is a critical step in training Gemma models effectively. By understanding the core concepts, applying practical techniques, and avoiding potential pitfalls, you can unlock the full potential of these powerful models. Remember to leverage the tools and resources available, including the comprehensive learning materials at LEARNS.EDU.VN, to enhance your skills and achieve superior results in your machine learning projects. With careful tuning and experimentation, you can achieve faster convergence, improved accuracy, and more robust performance with Gemma models. Embrace the journey of continuous learning and refinement, and you’ll be well-equipped to tackle even the most challenging machine learning tasks.

Gemma Model Training Curve

10. FAQ: Learning Rate Optimization for Gemma Models

This section addresses frequently asked questions about learning rate optimization for Gemma models.

1. What is the best learning rate for Gemma models?

The optimal learning rate depends on several factors, including the model architecture, dataset characteristics, and optimization algorithm. There is no one-size-fits-all answer. Experimentation is often required to determine the best learning rate for a specific task.

2. Should I use a fixed or adaptive learning rate for Gemma models?

Adaptive learning rates, such as Adam or RMSprop, generally perform better than fixed learning rates, as they can automatically adjust to the varying characteristics of the loss landscape. However, fixed learning rates can be effective in some cases, especially when combined with a learning rate schedule.

3. What is a learning rate schedule, and why should I use it?

A learning rate schedule defines how the learning rate changes over time during training. Learning rate schedules can help the model converge faster and achieve better performance. Common learning rate schedules include step decay, exponential decay, and cosine annealing.

4. What is warmup, and why is it important?

Warmup is a technique where the learning rate is gradually increased from a small initial value to the desired learning rate over a certain number of steps or epochs. This strategy can help stabilize training and prevent the model from diverging, especially at the beginning of training when the parameters are far from the optimal values.

5. How do I choose the right batch size for Gemma models?

The batch size can impact the optimal learning rate. Larger batch sizes can lead to more stable gradients but may require larger learning rates. A common rule of thumb is to scale the learning rate linearly with the batch size.

6. What is transfer learning, and how does it affect the learning rate?

Transfer learning involves pre-training a model on a large dataset and then fine-tuning it on a smaller dataset. The learning rate for fine-tuning is typically smaller than the learning rate used for pre-training, as the model is already close to the optimal solution.

7. How can I monitor the training process to ensure that the learning rate is properly tuned?

Key metrics to monitor include loss, accuracy, and gradient norm. Visualizing these metrics can help identify issues such as divergence, oscillations, and plateaus.

8. What are some common pitfalls to avoid when optimizing the learning rate?

Common pitfalls include overfitting, divergence, slow convergence, and getting stuck in local optima. By carefully monitoring the training process and using appropriate techniques, you can avoid these pitfalls.

9. What tools and resources are available for learning rate optimization?

Tools and resources include deep learning frameworks (TensorFlow, Keras, PyTorch), hyperparameter optimization libraries (Hyperopt, Optuna, Ray Tune), and online courses and tutorials on LEARNS.EDU.VN.

10. Where can I find more information about learning rate optimization for Gemma models?

LEARNS.EDU.VN offers a wealth of information about machine learning, deep learning, and learning rate optimization. Explore our online courses and tutorials to enhance your skills and achieve superior results with Gemma models. Visit our website at LEARNS.EDU.VN or contact us at 123 Education Way, Learnville, CA 90210, United States. You can also reach us via Whatsapp at +1 555-555-1212.

11. Maximize Your Learning Potential with LEARNS.EDU.VN

Are you eager to deepen your understanding of learning rate optimization and unlock the full potential of Gemma models? Do you find yourself facing challenges in finding reliable educational resources or struggling with complex concepts?

At LEARNS.EDU.VN, we understand these challenges and are dedicated to providing you with the solutions you need. Our website offers a wide range of articles, tutorials, and courses designed to empower you with the knowledge and skills to excel in machine learning and beyond.

Here’s how LEARNS.EDU.VN can help you:

- Comprehensive Guides: Access detailed and easy-to-understand articles on various topics.

- Effective Learning Methods: Discover proven strategies to enhance your learning process.

- Simplified Explanations: Grasp complex concepts with our clear and intuitive explanations.

- Structured Learning Paths: Follow well-defined learning paths to achieve your goals.

- Expert Resources: Benefit from curated lists of valuable tools and resources.

- Expert Connection: Connect with experienced educators for personalized guidance.

Don’t let these challenges hold you back. Visit learns.edu.vn today and unlock a world of learning opportunities. Start your journey towards mastering learning rate optimization and Gemma models with our expert guidance and comprehensive resources. Your success is our mission!