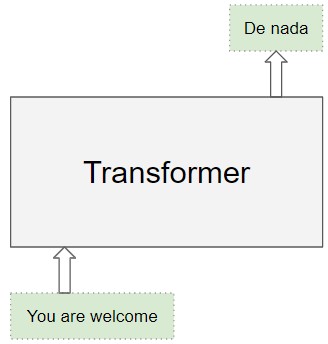

Transformers have revolutionized Natural Language Processing (NLP). This article delves into how transformers work in machine learning, exploring their architecture, the pivotal role of attention mechanisms, and their advantages over traditional recurrent neural networks (RNNs).

Understanding the Transformer Architecture

Transformers excel at processing sequential data like text. The original design employs an encoder-decoder structure, where both the encoder and decoder consist of stacked identical layers.

The encoder processes the input sequence, capturing contextual information and relationships between words. Each encoder layer incorporates a self-attention mechanism and a feed-forward network. Self-attention allows the model to weigh the importance of different words in the input when processing a specific word, enabling it to understand relationships and context.
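To make the weighting step concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It assumes the token vectors are used directly as queries, keys, and values; a real transformer would first pass them through learned projection matrices, which are omitted here. The function names and the toy embeddings are illustrative only.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy embeddings for three tokens (made-up numbers, not from a trained model).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
```

Each row of `weights` sums to 1 and says how much one token draws on every other token when its output vector is built.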

The decoder then generates the output sequence. Its own self-attention mechanism focuses on the previously generated output and is masked so that a position cannot attend to later positions. Crucially, the decoder also includes an encoder-decoder attention layer, allowing it to attend to the most relevant parts of the encoded input sequence.
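In the standard design, decoder self-attention is masked so that a position can only look at itself and earlier positions. The sketch below illustrates that idea under simple assumptions: the raw similarity scores are given as a hand-written matrix, and the function name is hypothetical.

```python
import math

def causal_attention_weights(scores):
    """Row i of `scores` holds the similarity of position i to every position.
    Positions j > i are masked out before the softmax."""
    weights = []
    for i, row in enumerate(scores):
        # Future positions get -inf, so they receive zero weight.
        masked = [s if j <= i else -math.inf for j, s in enumerate(row)]
        peak = max(masked)
        exps = [math.exp(s - peak) for s in masked]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# Made-up scores for a three-token output sequence.
scores = [[0.5, 1.2, -0.3],
          [0.1, 0.4, 2.0],
          [1.0, 0.0, 0.5]]
weights = causal_attention_weights(scores)
```

The first position can only attend to itself, while the last position can attend to all three.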

Both encoder and decoder layers utilize residual connections and layer normalization for improved training stability and performance.
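As a rough illustration, the following sketch wraps a sub-layer in a residual connection followed by layer normalization. It assumes the arrangement `LayerNorm(x + Sublayer(x))` and leaves out the learned gain and bias that real implementations usually include; `toy_sublayer` is a hypothetical stand-in for self-attention or a feed-forward network.

```python
import math

def layer_norm(vector, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(vector) / len(vector)
    variance = sum((v - mean) ** 2 for v in vector) / len(vector)
    return [(v - mean) / math.sqrt(variance + eps) for v in vector]

def residual_block(vector, sublayer):
    """Add the sub-layer output back onto its input, then normalize."""
    added = [x + s for x, s in zip(vector, sublayer(vector))]
    return layer_norm(added)

def toy_sublayer(vector):
    # Hypothetical stand-in for self-attention or a feed-forward network.
    return [0.5 * v for v in vector]

out = residual_block([1.0, 2.0, 3.0, 4.0], toy_sublayer)
```

Because the input is added back before normalization, the sub-layer only needs to learn a correction to its input rather than a full replacement for it.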

The Power of Attention in Transformers

The core innovation of transformers lies in their attention mechanism. Instead of processing words sequentially like RNNs, attention allows the model to consider all words simultaneously, weighing their relevance to each other. This enables the model to capture long-range dependencies between words effectively, something RNNs struggle to do.

For instance, in the sentence “The cat sat on the mat because it was comfortable,” attention allows the model to directly connect “it” with “mat,” despite their separation in the sentence. Transformers utilize multi-head attention, which runs several attention operations side by side so that each head can capture a different aspect of the relationships between words.
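The multi-head idea can be sketched as follows. This is a simplification: each head here works on its own slice of every token vector, whereas real transformers use learned per-head projections and a final output projection. All names and numbers are illustrative.

```python
import math

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

def multi_head_attention(x, num_heads):
    """Run attention independently on each slice, then concatenate."""
    d_model = len(x[0])
    assert d_model % num_heads == 0, "d_model must divide evenly across heads"
    d_head = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        # Each head sees its own slice of every token vector.
        part = [vec[h * d_head:(h + 1) * d_head] for vec in x]
        head_outputs.append(attention(part, part, part))
    # Concatenate the heads back together, token by token.
    return [sum((head[t] for head in head_outputs), [])
            for t in range(len(x))]

# Made-up 4-dimensional embeddings for three tokens.
x = [[1.0, 0.0, 0.5, 0.5],
     [0.0, 1.0, 0.5, -0.5],
     [1.0, 1.0, 0.0, 0.0]]
out = multi_head_attention(x, num_heads=2)
```

Since each head computes its own attention weights, two heads can focus on different tokens for the same query position.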

Training and Inference with Transformers

During training, the transformer receives both the input and the desired output sequences. The model learns to predict the output sequence by minimizing a loss, typically cross-entropy, between its predicted word probabilities and the actual target sequence. This process often employs teacher forcing, where the decoder receives the correct previous words as input, even if the model’s previous prediction was incorrect. This aids in faster and more stable training.
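A minimal sketch of teacher forcing, assuming a cross-entropy loss and a hypothetical `predict_next` callable that maps a prefix of token ids to a probability distribution over the vocabulary. The `toy_model` below is a hand-written stand-in, not a trained network.

```python
import math

def cross_entropy(probabilities, target_index):
    """Negative log-probability assigned to the correct token."""
    return -math.log(probabilities[target_index])

def teacher_forced_loss(predict_next, target_tokens, bos_token):
    # The decoder input is the target sequence shifted right by one position.
    decoder_inputs = [bos_token] + target_tokens[:-1]
    total = 0.0
    for step, target in enumerate(target_tokens):
        # The prefix comes from the ground truth, not from the model's guesses.
        prefix = decoder_inputs[:step + 1]
        total += cross_entropy(predict_next(prefix), target)
    return total / len(target_tokens)

def toy_model(prefix):
    # Hypothetical model over a 4-token vocabulary: favors (last token + 1) % 4.
    guess = (prefix[-1] + 1) % 4
    return [0.7 if i == guess else 0.1 for i in range(4)]

loss = teacher_forced_loss(toy_model, target_tokens=[1, 2, 3], bos_token=0)
```

Because every step is conditioned on the correct prefix, a mistake at one position does not corrupt the inputs for the positions that follow it.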

During inference, the transformer generates the output sequence autoregressively, one word at a time. It starts with a start-of-sequence token and uses the previously generated words as input to predict the next word in the sequence. This process continues until an end-of-sequence token is generated.
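The generation loop can be sketched as below. It assumes greedy decoding (always picking the most probable next token), which is only one of several possible strategies, and adds a `max_length` guard in case the end-of-sequence token never appears. `predict_next` and `toy_model` are hypothetical stand-ins for a trained transformer.

```python
def greedy_decode(predict_next, bos_token, eos_token, max_length=20):
    """Generate token ids one at a time until EOS or the length limit."""
    generated = [bos_token]
    while len(generated) < max_length:
        probabilities = predict_next(generated)
        # Greedy choice: index of the highest-probability token.
        next_token = max(range(len(probabilities)),
                         key=probabilities.__getitem__)
        generated.append(next_token)
        if next_token == eos_token:
            break
    return generated

def toy_model(prefix):
    # Hypothetical 5-token vocabulary; token 0 is BOS and token 4 is EOS.
    guess = min(prefix[-1] + 1, 4)
    return [0.6 if i == guess else 0.1 for i in range(5)]

tokens = greedy_decode(toy_model, bos_token=0, eos_token=4)
print(tokens)  # [0, 1, 2, 3, 4]
```

Each iteration feeds everything generated so far back into the model, which is why generation is sequential even though training is not.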

Transformers vs. RNNs: A Paradigm Shift

Transformers offer significant advantages over RNNs:

- Parallelization: Transformers process all positions of an input sequence in parallel, which significantly speeds up training compared to the sequential processing of RNNs. Generation at inference time still proceeds one word at a time, as described above.

- Long-Range Dependencies: The attention mechanism effectively captures long-range dependencies, overcoming a major limitation of RNNs.
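To illustrate both points from the RNN side, here is a toy scalar recurrence with hand-picked weights (an assumption for illustration, not a trained model). Each step needs the previous hidden state, so the loop cannot be parallelized across positions, and the influence of the first input on the final state shrinks as the sequence grows.

```python
import math

def rnn_final_state(inputs, w_in=0.5, w_rec=0.5):
    """Scalar toy RNN: h_t = tanh(w_in * x_t + w_rec * h_{t-1})."""
    h = 0.0
    for x in inputs:  # strictly sequential: step t needs h from step t-1
        h = math.tanh(w_in * x + w_rec * h)
    return h

def first_token_influence(length):
    """Change in the final state when only the first input changes."""
    base = [1.0] + [0.0] * (length - 1)
    changed = [2.0] + [0.0] * (length - 1)
    return abs(rnn_final_state(changed) - rnn_final_state(base))

short = first_token_influence(2)
long = first_token_influence(20)
```

With these weights, the effect of the first input is far smaller after 20 steps than after 2. Gated RNN variants reduce this decay, but attention avoids the issue by connecting any two positions directly.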

These advantages have led to transformers becoming the dominant architecture in various NLP tasks, including machine translation, text summarization, and question answering.

Applications of Transformers

Transformers power a wide array of NLP applications:

- Machine Translation: Accurately translating text between languages.

- Text Summarization: Condensing large texts into concise summaries.

- Question Answering: Providing accurate answers to questions based on given context.

- Sentiment Analysis: Determining the emotional tone of text.

Conclusion

Transformers, with their attention mechanism and parallel processing capabilities, have fundamentally changed the landscape of NLP. Their ability to capture complex relationships within text has led to significant advancements in various language-based tasks, paving the way for more sophisticated and powerful AI systems. As research continues, we can expect even more innovative applications and refinements to the transformer architecture in the future.