Transformers have revolutionized Natural Language Processing (NLP). This article delves into the mechanics of transformers, explaining how these powerful models process and understand text data. We’ll cover their architecture, the crucial role of attention mechanisms, training and inference processes, and real-world applications.

Understanding the Transformer Architecture

At its core, a transformer consists of an encoder and a decoder, each composed of a stack of identical layers. Every layer combines self-attention with a position-wise feed-forward network. The encoder processes the input sequence, while the decoder generates the output sequence. Crucially, an encoder-decoder attention mechanism (often called cross-attention) lets the decoder focus on relevant parts of the encoded input. Each sub-layer is also wrapped in a residual connection and layer normalization, which improves training stability and performance.
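To make the residual-plus-normalization pattern concrete, here is a minimal pure-Python sketch of one feed-forward sub-layer. It assumes the "add, then normalize" ordering; some implementations normalize first. The function names, the toy weights, and the omission of biases and learned scale/shift parameters are all illustrative assumptions, not part of any particular library.

```python
import math

def layer_norm(vec, eps=1e-5):
    """Normalize one vector to zero mean and unit variance (no learned scale/shift)."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]

def feed_forward(vec, w1, w2):
    """Position-wise feed-forward network: linear -> ReLU -> linear (biases omitted)."""
    hidden = [max(0.0, sum(v * w for v, w in zip(vec, unit))) for unit in w1]
    return [sum(h * w for h, w in zip(hidden, unit)) for unit in w2]

def residual_sublayer(vec, sublayer):
    """Add the sub-layer's output to its input, then apply layer normalization."""
    out = sublayer(vec)
    return layer_norm([a + b for a, b in zip(vec, out)])

# Toy weights (assumed values): w1 maps 4 -> 8 features, w2 maps 8 -> 4.
# Each inner list holds the incoming weights of one output unit.
w1 = [[0.1 * ((i + j) % 3 - 1) for i in range(4)] for j in range(8)]
w2 = [[0.1 * ((i * j) % 3 - 1) for i in range(8)] for j in range(4)]

x = [1.0, 2.0, 3.0, 4.0]  # one token's feature vector
y = residual_sublayer(x, lambda v: feed_forward(v, w1, w2))
print(y)
```

Because the input is added back before normalization, the sub-layer only has to learn a correction to its input, which is one common explanation for why deep stacks of such layers remain trainable.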

The Power of Attention

The key innovation of transformers lies in their use of attention mechanisms. Attention allows the model to weigh the importance of different words in the input sequence when processing a specific word. This enables the model to capture long-range dependencies and contextual relationships effectively, something traditional recurrent neural networks (RNNs) struggled with.
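The weighting described above is commonly implemented as scaled dot-product attention. The sketch below uses only the standard library and, as a simplifying assumption, feeds the raw token vectors in directly as queries, keys, and values; a real transformer would first pass them through learned projection matrices. The toy vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors; in self-attention Q, K and V come from the same sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, and each row sums to 1.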

For example, in the sentence “The cat sat on the mat because it was comfortable,” the attention mechanism can help the model link “it” to “mat” rather than “cat.” Transformers employ multi-head attention: several attention heads run in parallel, each with its own learned projections of the input, so different heads can capture different aspects of the relationships between words.
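A rough sketch of multi-head attention follows, again in pure Python. The random matrices are stand-ins for learned projection weights, and the sizes (`d_model = 4`, two heads) and helper names are assumptions chosen to keep the example small.

```python
import math
import random

def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p), both as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention; q, k, v are (seq_len x d) nested lists."""
    scale = math.sqrt(len(k[0]))
    scores = matmul(q, [list(col) for col in zip(*k)])  # q times k transposed
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v)

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(x, heads, w_out):
    """Run each head with its own projections, concatenate, then mix with w_out."""
    head_outputs = [attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))
                    for wq, wk, wv in heads]
    # Concatenate the per-head outputs along the feature dimension.
    concat = [sum((h[i] for h in head_outputs), []) for i in range(len(x))]
    return matmul(concat, w_out)

rng = random.Random(0)  # fixed seed so the toy weights are reproducible
d_model, n_heads = 4, 2
d_head = d_model // n_heads
# Random stand-ins for each head's learned query, key and value projections.
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3))
         for _ in range(n_heads)]
w_out = random_matrix(n_heads * d_head, d_model, rng)

x = random_matrix(3, d_model, rng)  # 3 tokens, each a d_model-dimensional vector
y = multi_head_attention(x, heads, w_out)
print(len(y), len(y[0]))
```

Each head works in a smaller `d_head`-dimensional space, so running several heads costs roughly the same as one full-width head while letting each head specialize.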

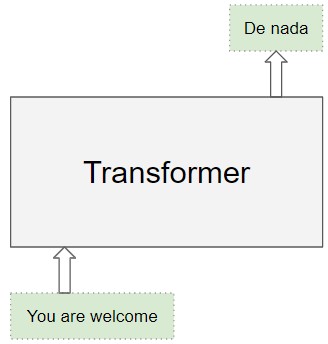

Training and Inference: Two Distinct Processes

Training: During training, the transformer receives both the input and the desired output sequence. The encoder processes the input, and the decoder learns to generate the output using teacher forcing: at each position the decoder is fed the correct preceding output tokens rather than its own predictions, and it is trained to predict the next token.
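In practice, teacher forcing usually amounts to shifting the target sequence by one position. The sketch below builds the decoder input and the prediction targets for one example. The `<bos>` and `<eos>` markers, the function names, and the sample sentence are hypothetical. The causal mask goes slightly beyond the text above: it is the standard way to stop each position from seeing later tokens when all positions are processed at once.

```python
BOS, EOS = "<bos>", "<eos>"  # hypothetical start and end markers

def teacher_forcing_pairs(target_tokens):
    """Build decoder inputs and prediction targets for one training example."""
    decoder_input = [BOS] + target_tokens    # ground truth, shifted right
    decoder_target = target_tokens + [EOS]   # what the decoder should predict
    return decoder_input, decoder_target

def causal_mask(length):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(length)] for i in range(length)]

target = ["le", "chat", "dort"]
dec_in, dec_out = teacher_forcing_pairs(target)
mask = causal_mask(len(dec_in))

for step, (seen, expected) in enumerate(zip(dec_in, dec_out)):
    print(f"step {step}: last input token {seen!r} -> predict {expected!r}")
```

Because the correct prefix is always available, every position's prediction can be trained in a single pass instead of waiting for the model's own earlier outputs.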

Inference: During inference, only the input sequence is provided. The decoder generates the output one token at a time, feeding its previously generated tokens back in as input for the next prediction. This continues until it produces an end-of-sequence token or reaches a maximum length.
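The generation loop can be sketched as greedy decoding, the simplest strategy: always pick the highest-scoring next token. The score table below is a hypothetical stand-in for a trained model, and `next_token_scores` only looks at the last token, whereas a real decoder would attend over the encoded input and everything generated so far.

```python
BOS, EOS = "<bos>", "<eos>"  # hypothetical start and end markers

# Hypothetical stand-in for a trained decoder: last token -> next-token scores.
TOY_SCORES = {
    "<bos>": {"the": 2.0, "cat": 0.5, "<eos>": 0.1},
    "the": {"cat": 1.5, "the": 0.2, "<eos>": 0.3},
    "cat": {"sleeps": 1.2, "<eos>": 0.8},
    "sleeps": {"<eos>": 2.0, "the": 0.1},
}

def next_token_scores(encoded_input, generated):
    """Toy scorer; a real model would use encoded_input and all of `generated`."""
    return TOY_SCORES[generated[-1]]

def greedy_decode(encoded_input, max_len=10):
    generated = [BOS]
    for _ in range(max_len):
        scores = next_token_scores(encoded_input, generated)
        best = max(scores, key=scores.get)  # greedy: take the top-scoring token
        if best == EOS:
            break  # stop once the end marker is predicted
        generated.append(best)
    return generated[1:]  # drop the start marker

print(greedy_decode(encoded_input=None))  # ['the', 'cat', 'sleeps']
```

The `max_len` cap guards against a model that never emits the end marker. Alternatives such as beam search or sampling replace only the line that selects `best`.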

Applications of Transformers

Transformers power a wide range of NLP applications:

- Machine Translation: Translating text between languages.

- Text Summarization: Condensing lengthy text into concise summaries.

- Question Answering: Providing answers to questions based on given text.

- Sentiment Analysis: Determining the emotional tone of a piece of text.

Transformers vs. RNNs: A Paradigm Shift

Transformers offer significant advantages over RNNs:

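- Parallelization: Transformers process all positions of the input sequence in parallel rather than step by step, which makes training much faster than with the sequential processing of RNNs. Autoregressive generation at inference time still produces output tokens one at a time.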

- Long-Range Dependencies: The attention mechanism enables transformers to effectively capture relationships between words regardless of their distance in the sequence.
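The long-range point can be illustrated with a deliberately simplified comparison. It assumes a toy linear RNN, `h_t = w * h_{t-1} + x_t`, in which an input's contribution shrinks by a factor of `w` at every step. Real RNNs and LSTMs use nonlinearities and gating, so this only hints at the trend. The attention score is a plain dot product without positional encodings, and the token vectors are made up.

```python
def rnn_contribution(distance, recurrent_weight=0.5):
    """Toy linear RNN h_t = w * h_{t-1} + x_t: the factor by which an input
    is scaled in the hidden state `distance` steps later."""
    return recurrent_weight ** distance

def attention_score(query, key):
    """Unnormalized dot-product score; it depends only on the two vectors."""
    return sum(q * k for q, k in zip(query, key))

it_vec, mat_vec = [1.0, 0.5], [0.9, 0.6]  # hypothetical token vectors
filler = [0.0, 0.0]

for distance in (2, 20, 200):
    # Place the two tokens `distance` positions apart with filler in between.
    sequence = [mat_vec] + [filler] * (distance - 1) + [it_vec]
    score = attention_score(sequence[-1], sequence[0])
    print(distance, rnn_contribution(distance), score)
```

Under these assumptions the RNN factor collapses toward zero as the gap grows, while the attention score between the two tokens stays the same at every distance.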

Conclusion

Transformers have become the dominant architecture in NLP due to their ability to effectively process sequential data, capturing long-range dependencies and contextual relationships. Their ability to process whole sequences in parallel speeds up training, contributing to significant performance improvements across various NLP tasks. As research continues, we can expect even more innovative applications and advancements in transformer-based models.