Deep learning, a revolutionary subset of machine learning, is transforming industries worldwide. At LEARNS.EDU.VN, we unravel how deep learning works, exploring its mechanics, applications, and benefits. Dive in to understand neural networks, see how they process data, and discover what these advanced learning methods make possible.

1. Understanding Deep Learning: The Basics

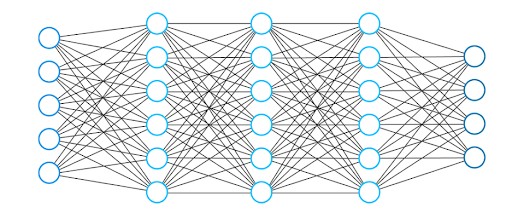

Deep learning is a sophisticated subset of machine learning (ML) within the broader field of artificial intelligence (AI). It distinguishes itself by using artificial neural networks with multiple layers (hence “deep”) to analyze data and extract complex patterns. These neural networks are inspired by the structure and function of the human brain, enabling deep learning models to learn intricate representations of data. This capability allows them to perform tasks such as image and speech recognition, natural language processing, and predictive analytics with remarkable accuracy.

1.1 Deep Learning vs. Machine Learning vs. Artificial Intelligence

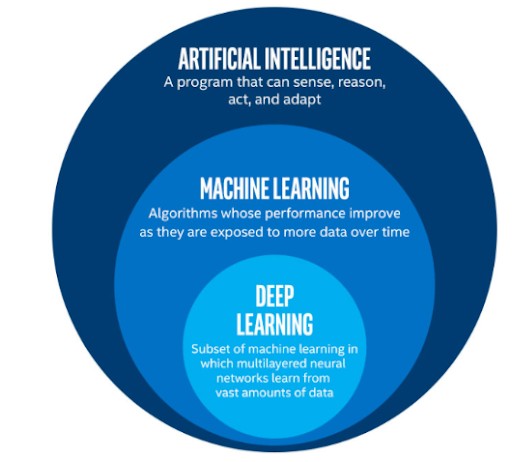

To fully grasp deep learning, it’s crucial to understand its relationship with machine learning and artificial intelligence.

- Artificial Intelligence (AI): This is the overarching concept of creating machines that can perform tasks that typically require human intelligence. AI encompasses a wide range of techniques, including rule-based systems, expert systems, and machine learning.

- Machine Learning (ML): A subset of AI, machine learning involves training algorithms to learn from data without being explicitly programmed. ML algorithms can identify patterns, make predictions, and improve their performance over time as they are exposed to more data.

- Deep Learning (DL): A specialized field within machine learning, deep learning uses artificial neural networks with multiple layers to analyze data. These deep neural networks can automatically learn hierarchical representations of data, enabling them to solve complex problems such as image and speech recognition.

1.2 The Inspiration: The Human Brain

Deep learning models are inspired by the structure and function of the human brain. The human brain consists of billions of interconnected neurons that transmit signals to each other. Similarly, deep neural networks consist of interconnected artificial neurons (also called nodes) organized in layers.

1.3 Key Applications of Deep Learning

Deep learning is powering many of the technological advancements we see today. Some notable applications include:

- Image Recognition: Identifying objects, people, and scenes in images and videos.

- Natural Language Processing (NLP): Understanding and generating human language, enabling tasks like machine translation, sentiment analysis, and chatbots.

- Speech Recognition: Converting spoken language into text, used in virtual assistants and voice-controlled devices.

- Recommendation Systems: Suggesting products, movies, or music based on user preferences.

- Self-Driving Cars: Enabling vehicles to perceive their surroundings and navigate without human intervention.

2. How Deep Learning Algorithms Work: A Detailed Look

Deep learning algorithms work by processing data through multiple layers of artificial neural networks. Each layer extracts increasingly complex features from the data, allowing the model to learn intricate patterns and make accurate predictions.

2.1 Neural Networks: The Building Blocks

Neural networks are the fundamental building blocks of deep learning models. These networks consist of interconnected nodes (neurons) organized in layers. The connections between nodes have weights associated with them, which are adjusted during the learning process.

2.1.1 Components of a Neural Network

- Input Layer: Receives the raw data that the network will process.

- Hidden Layers: Perform complex computations on the input data, extracting features and patterns. Deep learning models have multiple hidden layers, allowing them to learn hierarchical representations of data.

- Output Layer: Produces the final prediction or classification based on the processed data.

- Nodes (Neurons): Processing units that perform mathematical operations on the input they receive.

- Weights: Numerical values associated with the connections between nodes, representing the strength of the connection.

- Biases: Additional parameters that allow each neuron to adjust its output independently of the input.

- Activation Functions: Introduce non-linearity into the network, enabling it to learn complex relationships in the data.
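To make these components concrete, here is a minimal sketch of a single artificial neuron in plain Python. It forms a weighted sum of its inputs, adds a bias, and passes the result through an activation function. The function name `neuron_output` and the choice of sigmoid as the activation are illustrative assumptions, not part of any particular framework.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs: list[float], weights: list[float], bias: float) -> float:
    """Compute one neuron's output: activation(weighted sum of inputs + bias)."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # weighted sum plus bias
    return sigmoid(z)  # non-linear activation

# Three inputs, three connection weights, one bias.
# The weighted sum is 0.4 - 0.1 - 0.1 + 0.1 = 0.3, so this prints sigmoid(0.3), about 0.574.
print(neuron_output([1.0, 0.5, -1.0], [0.4, -0.2, 0.1], bias=0.1))
```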

2.2 The Learning Process: From Input to Output

- Input: The data is fed into the input layer of the neural network.

- Forward Propagation: The input data is passed through each layer of the network. Each neuron applies a mathematical operation to its input, combining it with weights and biases, and then applies an activation function to produce an output.

- Loss Function: The output of the network is compared to the actual target value using a loss function, which measures the difference between the predicted output and the true value.

- Backpropagation: The error (loss) is propagated back through the network, and the weights and biases are adjusted to reduce the error.

- Optimization: An optimization algorithm, such as gradient descent, is used to update the weights and biases iteratively until the network achieves a desired level of accuracy.
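The five steps above can be traced end to end on the smallest possible model. The sketch below assumes a single linear neuron (weight `w`, bias `b`, no activation) fitted to four made-up points on the line y = 2x + 1, with mean squared error as the loss and plain batch gradient descent as the optimizer. The data, learning rate, and number of epochs are illustrative choices.

```python
def train_linear_neuron(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b by repeating forward pass, loss, gradients, and update."""
    w, b = 0.0, 0.0  # arbitrary initial parameters
    n = len(xs)
    for _ in range(epochs):
        # Input + forward propagation: predict every training example.
        preds = [w * x + b for x in xs]
        # Loss function: mean squared error between predictions and targets.
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n
        # Backpropagation: gradients of the loss with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Optimization: one gradient descent step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss

xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * x + 1.0 for x in xs]  # made-up targets on the line y = 2x + 1
w, b, loss = train_linear_neuron(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, final loss={loss:.6f}")
```

With a single neuron, backpropagation reduces to the two derivatives computed by hand above; in a multi-layer network the same chain rule is applied layer by layer, from the output back toward the input.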

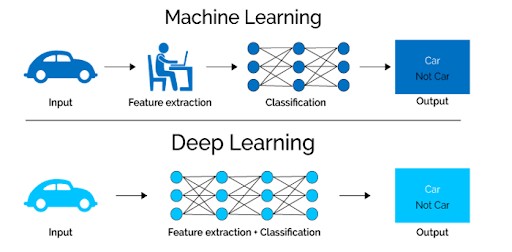

2.3 Feature Extraction: The Deep Learning Advantage

One of the key advantages of deep learning over traditional machine learning is its ability to automatically learn features from raw data. In traditional machine learning, feature extraction is a manual process that requires domain expertise. Deep learning models, on the other hand, can learn relevant features directly from the data, eliminating the need for manual feature engineering.

2.3.1 Manual Feature Extraction

In traditional machine learning, the process of feature extraction often requires significant effort and domain-specific knowledge. For example, in image recognition, features like edges, corners, and textures might be manually extracted from images before being fed into a machine learning algorithm.
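As an illustration of what "manual" means here, the sketch below computes three hand-designed features from a tiny grayscale image stored as a list of rows: average brightness, and the average absolute intensity change between horizontal and between vertical neighbours (a crude edge measure). The feature choice and the function name `handcrafted_features` are hypothetical. In a traditional pipeline, a short vector like this, rather than the raw pixels, would be passed to a classifier such as an SVM.

```python
def handcrafted_features(image):
    """Return [mean brightness, mean left-right change, mean top-bottom change].

    Assumes a rectangular image of at least 2x2 pixels given as a list of rows.
    """
    rows, cols = len(image), len(image[0])
    mean = sum(sum(row) for row in image) / (rows * cols)
    # Changes between horizontal neighbours respond to vertical edges.
    dx = [abs(row[c + 1] - row[c]) for row in image for c in range(cols - 1)]
    # Changes between vertical neighbours respond to horizontal edges.
    dy = [abs(image[r + 1][c] - image[r][c]) for r in range(rows - 1) for c in range(cols)]
    return [mean, sum(dx) / len(dx), sum(dy) / len(dy)]

# A 4x4 image whose right half is bright: a single vertical edge.
print(handcrafted_features([[0, 0, 1, 1]] * 4))
```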

2.3.2 Automatic Feature Extraction

Deep learning models can automatically learn hierarchical representations of data through multiple layers of neural networks. Lower layers learn simple features, while higher layers learn more complex features by combining the outputs of the lower layers.

This automatic feature extraction lets deep learning models tackle complex tasks such as image and speech recognition, where they often achieve higher accuracy than traditional machine learning algorithms that rely on hand-crafted features.

3. Why Deep Learning is Popular: Advantages and Benefits

Deep learning has gained immense popularity due to its ability to solve complex problems with high accuracy and efficiency. There are several reasons why deep learning has become the preferred approach for many tasks.

3.1 No Need for Manual Feature Extraction

Deep learning models eliminate the need for manual feature extraction, a time-consuming and labor-intensive process. The layers of a deep neural network learn useful representations directly from the raw data on their own.

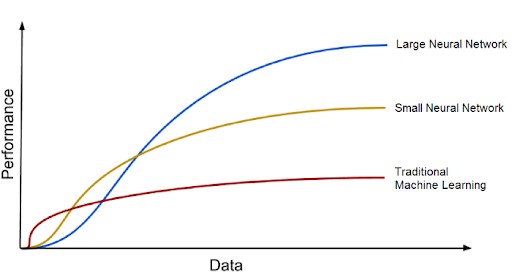

3.2 Accuracy with Big Data

Deep learning models tend to keep improving as the amount of training data grows. Traditional machine learning models, such as support vector machines (SVMs) and naive Bayes classifiers, often stop improving once they reach a saturation point.

3.3 Solving Complex Problems

Deep learning models can solve complex problems that are difficult or impossible for traditional machine learning algorithms. This is because deep learning models can learn hierarchical representations of data, allowing them to capture intricate patterns and relationships.

3.4 Real-World Impact

Deep learning is driving innovation in a wide range of industries, including healthcare, finance, transportation, and entertainment. From diagnosing diseases to predicting stock prices to enabling self-driving cars, deep learning is transforming the way we live and work.

3.5 Benefits in Education

Deep learning is also making significant strides in education, personalizing learning experiences and automating administrative tasks. Imagine AI tutors providing customized feedback, intelligent systems grading assignments, and predictive models identifying students at risk of falling behind. To explore these applications further, LEARNS.EDU.VN offers courses and articles detailing how AI and deep learning are reshaping education.

4. Deep Learning Neural Network Architecture: A Closer Look

The architecture of a deep learning neural network is a critical factor in its performance. Understanding the different layers and components of a neural network is essential for designing and training effective deep learning models.

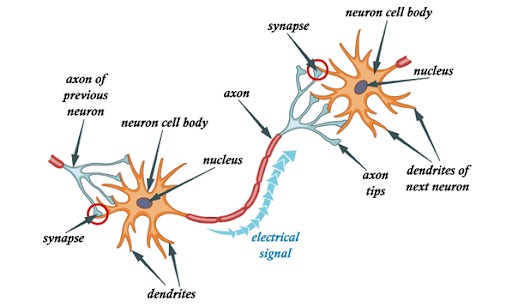

4.1 Biological Neural Networks: Inspiration and Parallels

Artificial neural networks are inspired by the biological neurons found in our brains. Biological neural networks consist of numerous neurons that transmit signals to each other. Artificial neural networks simulate some basic functionalities of biological neural networks, but in a very simplified way.

4.1.1 Components of a Biological Neuron

- Cell Body (Soma): The main body of the neuron, which contains the nucleus and other cellular organelles.

- Dendrites: Thin structures that emerge from the cell body and receive signals from other neurons.

- Axon: A cellular extension that emerges from the cell body and transmits signals to other neurons.

- Synapses: Junctions between neurons where signals are transmitted from the axon of one neuron to the dendrite of another.

4.2 Artificial Neural Networks: Simulating Biological Neurons

Artificial neural networks consist of interconnected nodes (neurons) organized in layers. These artificial neurons loosely model the biological neurons of our brain.

4.2.1 Components of an Artificial Neuron

- Input: The data that the neuron receives from other neurons or from the input layer.

- Weights: Numerical values associated with the connections between neurons, representing the strength of the connection.

- Bias: An additional parameter that allows each neuron to adjust its output independently of the input.

- Activation Function: Introduces non-linearity into the neuron, enabling it to learn complex relationships in the data.

- Output: The result of the neuron’s computation, which is passed to other neurons in the network.

4.3 Common Neural Network Architectures

There are several common neural network architectures used in deep learning, each with its own strengths and weaknesses.

4.3.1 Feedforward Neural Networks

Feedforward neural networks are the simplest type of neural network architecture. In a feedforward network, data flows in one direction, from the input layer to the output layer, without any loops or cycles.

4.3.2 Convolutional Neural Networks (CNNs)

Convolutional neural networks are specifically designed for processing data that has a grid-like structure, such as images and videos. CNNs use convolutional layers to extract features from the input data, and pooling layers to reduce the dimensionality of the feature maps.
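The two operations named above can be sketched in a few lines of plain Python. The code assumes a single-channel image given as a list of rows, a "valid" convolution (no padding, stride 1), and non-overlapping 2×2 max pooling. As in most deep learning libraries, the "convolution" is computed as cross-correlation (the kernel is not flipped). In a real CNN the kernel values are learned during training; here one is fixed by hand purely for illustration.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def max_pool2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 window (odd edges dropped)."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]

image = [[0, 0, 0, 1, 1, 1]] * 5   # 5x6 image with a vertical edge
kernel = [[-1, 1], [-1, 1]]        # responds to left-to-right brightness increases
fmap = conv2d(image, kernel)       # 4x5 feature map, strongest at the edge
pooled = max_pool2x2(fmap)         # 2x2 map: smaller, but the edge response survives
print(fmap)
print(pooled)
```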

4.3.3 Recurrent Neural Networks (RNNs)

Recurrent neural networks are designed for processing sequential data, such as text and time series. RNNs have feedback connections that allow them to maintain a memory of previous inputs, making them well-suited for tasks such as natural language processing and speech recognition.
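The "memory" of an RNN is a hidden state that is updated at every time step from the current input and the previous state. The sketch below assumes the common simple (Elman-style) recurrence h_t = tanh(W_x·x_t + W_h·h_{t-1} + b) with scalar inputs and a two-unit hidden state. The weight values are arbitrary illustrations, not trained parameters.

```python
import math

def rnn_forward(sequence, W_x, W_h, b):
    """Run a simple RNN over a sequence of scalar inputs; return every hidden state."""
    hidden_size = len(b)
    h = [0.0] * hidden_size  # initial hidden state: nothing remembered yet
    states = []
    for x_t in sequence:
        # The new state mixes the current input with the previous state, then squashes it.
        h = [
            math.tanh(W_x[i] * x_t + sum(W_h[i][j] * h[j] for j in range(hidden_size)) + b[i])
            for i in range(hidden_size)
        ]
        states.append(h)
    return states

W_x = [0.5, -0.3]               # input-to-hidden weights, one per hidden unit
W_h = [[0.1, 0.2], [0.0, 0.4]]  # hidden-to-hidden (recurrent) weights
b = [0.0, 0.1]
for t, h in enumerate(rnn_forward([1.0, 0.0, 0.0], W_x, W_h, b)):
    print(t, [round(v, 3) for v in h])
```

Running the same weights on `[0.0, 0.0, 0.0]` produces different hidden states at later time steps, even though the later inputs are identical; that difference is the network's memory of the first input.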

4.3.4 Generative Adversarial Networks (GANs)

Generative adversarial networks consist of two neural networks, a generator and a discriminator, that are trained in a competitive manner. The generator tries to create realistic data samples, while the discriminator tries to distinguish between real and generated samples.
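This competition is usually written as a single minimax objective. The formulation below is the standard one introduced with GANs (Goodfellow et al., 2014), where D(x) is the discriminator's estimated probability that x is real, G(z) is a sample the generator produces from random noise z, p_data is the distribution of real data, and p_z is the noise distribution. This notation is not used elsewhere in the article.

```latex
\min_{G}\max_{D}\; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to increase V by assigning high probability to real samples and low probability to generated ones, while the generator tries to decrease V by producing samples that the discriminator mistakes for real data.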

5. Deep Learning Neural Network Layer Connections: Building the Network

The connections between layers in a deep learning neural network are critical for its ability to learn and process data. Understanding how layers are connected and how data flows through the network is essential for designing effective deep learning models.

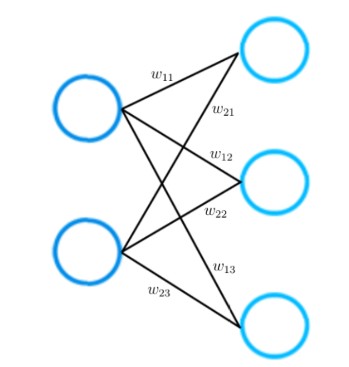

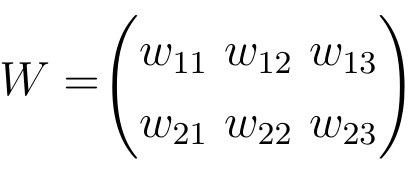

5.1 Layer Connections: Weights and Biases

Each connection between two neurons in a neural network has a weight associated with it. The weight represents the strength of the connection and is adjusted during the learning process. In addition to weights, each neuron also has a bias, which is an additional parameter that allows the neuron to adjust its output independently of the input.

5.2 Weight Matrix

All weights between two fully connected layers can be collected in a single matrix, called the weight matrix. It has one entry per connection: if one layer has n neurons and the next layer has m neurons, there are m × n connections, so the weight matrix has m rows and n columns.

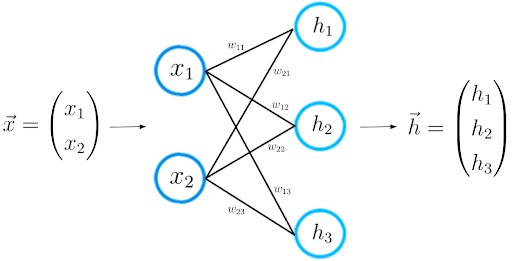

5.3 Forward Propagation: Passing Data Through the Network

Forward propagation is the process of passing data through the neural network, from the input layer to the output layer. During forward propagation, each neuron applies a mathematical operation to its input, combining it with weights and biases, and then applies an activation function to produce an output.
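Forward propagation through several fully connected layers is the single-neuron computation repeated for every neuron in every layer. The sketch below assumes each layer is stored as a weight matrix with one row per output neuron (so an m × n matrix maps n inputs to m outputs) plus a bias vector, and uses ReLU in the hidden layer with no activation on the output. The layer sizes and numbers are arbitrary.

```python
def relu(z):
    """Pass positive values through, clip negative values to 0."""
    return max(0.0, z)

def dense(inputs, W, b, activation=None):
    """One fully connected layer: activation(W @ inputs + b), element by element."""
    z = [sum(w_jk * x_k for w_jk, x_k in zip(row, inputs)) + b_j for row, b_j in zip(W, b)]
    return [activation(v) for v in z] if activation else z

def forward(x, layers):
    """Pass x through each (W, b, activation) layer in order, input to output."""
    h = x
    for W, b, activation in layers:
        h = dense(h, W, b, activation)
    return h

layers = [
    # Hidden layer: 3 inputs -> 2 neurons, so W1 is a 2x3 matrix (6 connections).
    ([[0.2, -0.5, 1.0], [0.7, 0.1, -0.3]], [0.0, 0.1], relu),
    # Output layer: 2 inputs -> 1 neuron, so W2 is a 1x2 matrix.
    ([[1.5, -2.0]], [0.5], None),
]
# Hidden layer gives [2.2, 0.1]; output is 1.5*2.2 - 2.0*0.1 + 0.5, about 3.6.
print(forward([1.0, 2.0, 3.0], layers))
```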

6. How a Deep Learning Neural Network Learns: Training the Model

The learning process, also known as training, is the heart of deep learning. It involves adjusting the weights and biases of the neural network to minimize the difference between the predicted output and the actual target value.

6.1 Forward Propagation: Making Predictions

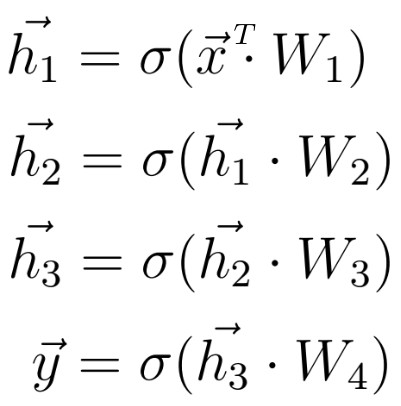

For a given input feature vector x, the neural network calculates a prediction vector, which we call h. This step is called forward propagation.
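For a single layer, forward propagation is commonly written as follows, assuming a weight matrix W, a bias vector b, and an activation function f applied element-wise; z is the vector of weighted sums, one per neuron. The small numeric example uses illustrative values and ReLU as the activation.

```latex
\begin{aligned}
z &= W x + b, \qquad h = f(z) \\[4pt]
\text{e.g. } W &= \begin{pmatrix} 1 & -1 \end{pmatrix},\; b = 0.5,\;
x = \begin{pmatrix} 2 \\ 1 \end{pmatrix}
\;\Rightarrow\; z = 1 \cdot 2 - 1 \cdot 1 + 0.5 = 1.5,\;
h = \mathrm{ReLU}(1.5) = 1.5
\end{aligned}
```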

6.2 Activation Functions: Introducing Non-Linearity

An activation function is a non-linear function that maps the weighted sum z (the inputs multiplied by their weights, plus the bias) to the output h. Activation functions introduce non-linearity into the network, enabling it to learn complex relationships in the data. Common activation functions include tanh, sigmoid, and ReLU.
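The three activation functions named above are one-liners. Here are plain-Python versions for scalar inputs. Real frameworks apply them element-wise to whole vectors.

```python
import math

def sigmoid(z):
    """Map any real z into (0, 1); sigmoid(0) = 0.5.

    math.exp overflows for z below roughly -709, which is fine for illustration.
    """
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Map any real z into (-1, 1); tanh(0) = 0."""
    return math.tanh(z)

def relu(z):
    """Pass positive z through unchanged and clip negative z to 0."""
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}  relu={relu(z):.1f}")
```

The non-linearity is what makes depth useful: without it, a stack of layers that each compute W x + b collapses into a single linear transformation, no matter how many layers there are.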

6.3 Deeper Architectures: Expanding the Network

The same equations apply when we move to a deeper architecture with five layers. As before, we multiply the input x by the first weight matrix W1, add the bias, and apply an activation function to the resulting vector to obtain the first hidden vector h1. Each subsequent layer repeats this step, using the previous hidden vector as its input, until the output layer produces the prediction.
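Written out, the deeper network applies the single-layer equation repeatedly. This sketch assumes the five layers are an input layer, three hidden layers, and an output layer, with weight matrices W_1 to W_4, biases b_1 to b_4, and activation f. In practice the output layer often uses a different activation, such as softmax for classification.

```latex
\begin{aligned}
h_1 &= f(W_1 x + b_1) \\
h_2 &= f(W_2 h_1 + b_2) \\
h_3 &= f(W_3 h_2 + b_3) \\
\hat{y} &= f(W_4 h_3 + b_4)
\end{aligned}
```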

7. Loss Functions in Deep Learning: Measuring Error

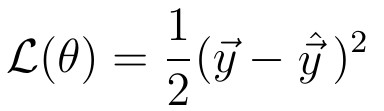

After obtaining the prediction of the neural network, we must compare this prediction vector to the actual ground truth label. A loss function measures the difference between the predicted output and the true value.

7.1 Quadratic Loss: Measuring the Difference

The quadratic loss depends on the difference between y_hat, the network's prediction, and y, the ground-truth label. The larger the difference, the higher the loss value; the smaller the difference, the lower the loss value.
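A common form of the quadratic loss for a single scalar prediction squares the difference, so large errors are penalized much more heavily than small ones. The numbers in the example are illustrative.

```latex
L(\hat{y}, y) = (\hat{y} - y)^2, \qquad
\text{e.g. } \hat{y} = 0.8,\; y = 1 \;\Rightarrow\; L = (-0.2)^2 = 0.04
```

Some texts include a factor of 1/2 in front so that the derivative with respect to the prediction is simply y_hat − y.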

7.2 Cross-Entropy Loss: For Classification Tasks

The cross-entropy loss is commonly used for classification tasks. It measures the difference between the predicted probability distribution and the true distribution.
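For one example with K classes, cross-entropy is L = −Σ_k y_k log(y_hat_k). With a one-hot label, only the true-class term survives, so the loss is simply −log of the probability the model assigned to the correct class. The sketch assumes the network's raw outputs (logits) are converted into probabilities with softmax, a common but not universal choice. The scores are made up.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 (max shifted out for stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target_index):
    """Cross-entropy against a one-hot label: -log(probability of the true class)."""
    return -math.log(probs[target_index])

probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])
print(cross_entropy(probs, 0))  # true class got the highest probability: small loss
print(cross_entropy(probs, 2))  # true class got the lowest probability: large loss
```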

7.3 Mean Squared Error (MSE) Loss: For Regression Tasks

The mean squared error loss is commonly used for regression tasks. It measures the average squared difference between the predicted values and the true values.
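MSE averages the squared error over a batch of n examples: MSE = (1/n) Σ (y_hat_i − y_i)^2. A plain-Python version follows; the function name and sample values are illustrative.

```python
def mean_squared_error(y_pred, y_true):
    """Average of the squared differences between predictions and targets."""
    if len(y_pred) != len(y_true) or not y_pred:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((p - t) ** 2 for p, t in zip(y_pred, y_true)) / len(y_pred)

# Errors are -0.5, 0.5 and 1.0, so MSE = (0.25 + 0.25 + 1.0) / 3 = 0.5
print(mean_squared_error([2.5, 0.0, 2.0], [3.0, -0.5, 1.0]))
```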

8. Gradient Descent in Deep Learning: Minimizing the Loss

Gradient descent is an iterative optimization algorithm used to minimize the loss function by adjusting the weights and biases of the neural network.

8.1 Basic Concept: Finding the Minimum

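During gradient descent, we use the gradient of the loss function (the vector of its partial derivatives with respect to each weight and bias) to improve the weights of a neural network. The gradient points in the direction in which the loss increases most steeply, so we adjust the weights in the opposite direction.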

8.2 Simple Neural Network Example

Let’s consider a basic example of a neural network consisting of only one input and one output neuron connected by a weight value w.
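For this one-weight network, the gradient can be worked out by hand. Assuming the output neuron applies no activation (so the prediction is y_hat = w·x) and the quadratic loss from section 7.1 is used, the chain rule gives:

```latex
\hat{y} = w x, \qquad
L(w) = (\hat{y} - y)^2 = (w x - y)^2, \qquad
\frac{dL}{dw} = 2\,(w x - y)\,x
```

The sign of dL/dw tells us which way to move w: if the derivative is positive, decreasing w lowers the loss; if it is negative, increasing w lowers the loss.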

8.3 Gradient Descent Step: Updating the Weights

Finally, we perform one gradient descent step as an attempt to improve our weight. We update the current weight in the direction of the negative gradient, which is the direction in which the value of the loss function decreases: w_new = w − η · dL/dw, where the learning rate η is a small positive number that controls the size of the step.
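The update rule can be run directly on the one-weight network. The sketch assumes a single training example (x = 1.5, y = 3.0, so the ideal weight is w = 2), the quadratic loss, a starting weight of 0.5, and a learning rate of 0.1; all of these are illustrative values.

```python
def loss(w, x, y):
    """Quadratic loss of the one-weight network y_hat = w * x."""
    return (w * x - y) ** 2

def gradient(w, x, y):
    """dL/dw = 2 * (w*x - y) * x, from the chain rule."""
    return 2.0 * (w * x - y) * x

x, y = 1.5, 3.0
w = 0.5              # arbitrary starting weight
learning_rate = 0.1
history = []
for _ in range(20):
    history.append(loss(w, x, y))
    w -= learning_rate * gradient(w, x, y)  # step against the gradient

print(f"w after 20 steps: {w:.4f}")
print(f"loss: {history[0]:.4f} -> {loss(w, x, y):.8f}")
```

If the learning rate is too large, the steps overshoot the minimum. For this example, any learning rate above 2 / (2x²), roughly 0.44, makes w diverge instead of converging.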

9. Deep Learning in Education: Revolutionizing Learning

Deep learning is revolutionizing education by providing personalized learning experiences, automating administrative tasks, and improving student outcomes. Here are some specific ways deep learning is transforming education:

9.1 Personalized Learning

Deep learning algorithms can analyze student data, such as learning styles, performance history, and preferences, to create personalized learning experiences. AI-powered tutoring systems can provide customized feedback and support, adapting to each student’s individual needs.

9.2 Automated Grading

Deep learning models can help automate the grading of assignments, saving teachers time and effort. These models can assess essays, multiple-choice questions, and other types of assessments, giving students timely feedback on their performance.

9.3 Student Performance Prediction

Deep learning can predict student performance, allowing educators to identify students at risk of falling behind. By analyzing various factors, such as attendance, grades, and engagement levels, deep learning models can provide early warnings and enable timely interventions.

9.4 Enhanced Content Creation

Deep learning can assist in creating educational content, generating practice questions, and summarizing complex topics. This technology helps educators develop high-quality learning materials more efficiently.

9.5 Accessibility

Deep learning-powered tools can translate content into multiple languages and provide real-time captioning for videos, making educational materials more accessible to students with diverse needs.

Table: Deep Learning Applications in Education

| Application | Description | Benefits |
|---|---|---|
| Personalized Learning | AI tutors adapt to individual student needs and learning styles. | Enhanced engagement, improved comprehension, tailored support. |
| Automated Grading | Deep learning models grade assignments and provide timely feedback. | Reduced workload for educators, faster feedback for students, consistent evaluation. |
| Student Performance Prediction | Predictive models identify students at risk based on various factors. | Early intervention, targeted support, improved student outcomes. |
| Enhanced Content Creation | AI assists in generating practice questions and summarizing topics. | Increased efficiency, high-quality learning materials, diverse content options. |
| Accessibility | Real-time translation and captioning make content accessible to diverse learners. | Inclusive learning environment, support for language learners, enhanced comprehension for students with disabilities. |

10. Latest Trends in Deep Learning

Staying up-to-date with the latest trends in deep learning is essential for anyone working in the field. Here are some of the most exciting trends to watch:

10.1 Self-Supervised Learning

Self-supervised learning is a technique where the model learns from unlabeled data by creating its own labels. This approach is particularly useful when labeled data is scarce or expensive to obtain.
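One common way for a model to "create its own labels" is to hide part of the input and learn to predict it. The sketch below shows only the label-creation step for next-word prediction on a tiny unlabeled sentence: every position yields a (context, target) pair without any human annotation. The window size and the function name `next_word_pairs` are illustrative assumptions; real systems work with subword tokens and far larger corpora.

```python
def next_word_pairs(text, context_size=2):
    """Turn raw text into (context, next word) training pairs; no labels needed."""
    words = text.split()
    return [
        (tuple(words[i - context_size:i]), words[i])
        for i in range(context_size, len(words))
    ]

for context, target in next_word_pairs("the cat sat on the mat"):
    print(context, "->", target)
# ('the', 'cat') -> sat
# ('cat', 'sat') -> on
# ('sat', 'on') -> the
# ('on', 'the') -> mat
```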

10.2 Explainable AI (XAI)

Explainable AI focuses on making deep learning models more transparent and interpretable. XAI techniques provide insights into how the model makes decisions, which can help build trust and confidence in AI systems.

10.3 Federated Learning

Federated learning enables training models on decentralized data sources, such as mobile devices or edge devices, without sharing the raw data. This approach preserves privacy and reduces communication costs.
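A widely used aggregation rule is federated averaging (FedAvg). Each device trains on its own data and sends back only its updated model parameters, and the server averages them, weighting each client by how many examples it trained on. The sketch below covers only that server-side averaging step, using made-up parameter vectors and sample counts; local training, communication, and secure aggregation are out of scope.

```python
def federated_average(client_weights, client_sizes):
    """Average model parameter vectors, weighting each client by its sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Three hypothetical devices: their raw data never leaves them, only these parameters do.
clients = [[1.0, 2.0], [3.0, 0.0], [2.0, 2.0]]
sizes = [100, 300, 100]
print(federated_average(clients, sizes))  # the largest client pulls the average toward it
```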

10.4 Transformer Networks

Transformer networks have revolutionized natural language processing and are now being applied to other domains, such as computer vision. Transformers use attention mechanisms to weigh the importance of different parts of the input data, allowing them to capture long-range dependencies.
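The core of this attention mechanism is scaled dot-product attention. Each query is compared with every key, the scores are divided by √d (d being the key length) and turned into weights with softmax, and the output is the weighted average of the value vectors. The plain-Python sketch below covers a single attention head with no learned projections or masking; the query, key, and value vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Convert scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one output row per query."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how strongly this query attends to each position
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
print(attention([[4.0, 0.0]], keys, values))  # attends mostly to positions 0 and 2
```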

10.5 Quantum Deep Learning

Quantum deep learning explores the intersection of quantum computing and deep learning. Quantum algorithms may be able to accelerate the training of deep learning models and solve problems that are intractable for classical computers.

11. Resources at LEARNS.EDU.VN

At LEARNS.EDU.VN, we are committed to providing high-quality educational resources on deep learning. Here are some of the resources you can find on our website:

- Articles and Tutorials: We offer a wide range of articles and tutorials on various deep learning topics, from introductory concepts to advanced techniques.

- Courses: Our deep learning courses provide hands-on training on building and deploying deep learning models using popular frameworks such as TensorFlow and PyTorch.

- Community Forum: Our community forum is a place where you can connect with other deep learning enthusiasts, ask questions, and share your knowledge.

- Expert Insights: LEARNS.EDU.VN features content from leading experts in AI and deep learning. Stay updated with cutting-edge research and industry trends through our expert insights.

Our resources are designed to help you learn at your own pace and gain the skills you need to succeed in the field of deep learning. Visit our website today to start your deep learning journey.

12. Frequently Asked Questions (FAQ)

12.1 What is deep learning?

Deep learning is a subset of machine learning that uses artificial neural networks with multiple layers to analyze data and solve complex problems. These neural networks consist of interconnected artificial neurons (nodes) that process information.

12.2 What is the difference between machine learning and deep learning?

Machine learning is a branch of artificial intelligence in which algorithms learn patterns from data and improve with experience instead of being explicitly programmed. Deep learning is a subset of machine learning that uses multi-layer artificial neural networks, loosely inspired by the structure of the human brain, and learns features directly from raw data.

12.3 How does deep learning work?

Deep learning algorithms process data through multiple layers of artificial neural networks. Each layer extracts increasingly complex features from the data, allowing the model to learn intricate patterns and make accurate predictions.

12.4 What are the key applications of deep learning?

Key applications include image recognition, natural language processing, speech recognition, recommendation systems, and self-driving cars.

12.5 What are the advantages of deep learning over traditional machine learning?

Deep learning models eliminate the need for manual feature extraction, can handle large amounts of data, and can solve complex problems that are difficult or impossible for traditional machine learning algorithms.

12.6 What is a neural network?

A neural network is a fundamental building block of deep learning models. It consists of interconnected nodes (neurons) organized in layers, with connections between nodes having weights that are adjusted during the learning process.

12.7 What is gradient descent?

Gradient descent is an iterative optimization algorithm used to minimize the loss function by adjusting the weights and biases of the neural network.

12.8 What are loss functions?

Loss functions measure the difference between the predicted output of the neural network and the true value. Common loss functions include quadratic loss, cross-entropy loss, and mean squared error (MSE) loss.

12.9 How is deep learning used in education?

Deep learning is used in education to provide personalized learning experiences, automate grading, predict student performance, enhance content creation, and improve accessibility.

12.10 What are some latest trends in deep learning?

Latest trends include self-supervised learning, explainable AI (XAI), federated learning, transformer networks, and quantum deep learning.

Conclusion

Deep learning is a rapidly evolving field with the potential to transform industries and solve some of the world’s most challenging problems. At LEARNS.EDU.VN, we are dedicated to providing you with the resources and knowledge you need to succeed in this exciting field. Whether you are a student, researcher, or industry professional, we invite you to explore our website and discover the power of deep learning.

Ready to explore the power of deep learning? Visit LEARNS.EDU.VN today! Discover more articles, tutorials, and courses designed to help you master this transformative technology. For any questions or further assistance, contact us at 123 Education Way, Learnville, CA 90210, United States or via WhatsApp at +1 555-555-1212. Join the LEARNS.EDU.VN community and start your deep learning journey today!