Accuracy, precision, and recall are essential metrics for evaluating classification models in machine learning, each offering a different perspective on model quality. Choosing the appropriate metric depends on the specific application and the relative costs of different types of errors. This article explains what each metric measures, how to calculate it, and when it can mislead. At LEARNS.EDU.VN, we believe in empowering learners with the knowledge and tools necessary to excel in the field of machine learning, and understanding model evaluation is a cornerstone of that mission.

1. Grasping the Fundamentals of Accuracy in Machine Learning

Accuracy, a fundamental metric, quantifies the overall correctness of a machine learning model. It is the ratio of correct predictions to the total number of predictions: Accuracy = (Number of Correct Predictions) / (Total Number of Predictions). Its complement is the error rate, Error Rate = 1 - Accuracy, which is the share of predictions the model gets wrong. At LEARNS.EDU.VN, we simplify complex topics to ensure everyone can learn.

2. Deep Dive into the Accuracy Metric

Accuracy represents the frequency with which a machine learning model’s predictions align with the actual outcomes. You calculate this by dividing the count of correct predictions by the total number of predictions. A higher accuracy score indicates better performance, with a perfect score of 1.0 signifying flawless prediction across all instances.

2.1. A Visual Depiction of Accuracy

Consider a machine learning model designed to identify spam emails. For each email, the model predicts either “spam” or “not spam.” Laying these predictions out one by one gives an intuitive picture of what the model outputs, but their quality cannot be judged until they are compared with the actual labels.

2.2. Tagging and Counting for Accuracy

After obtaining the real labels, you can assess the model’s predictions and tag each one as correct or incorrect. By counting the number of correct predictions and dividing it by the total number of predictions, you calculate the model’s accuracy. For instance, if a model correctly labels 52 out of 60 emails, its accuracy is 52 / 60 ≈ 0.867, or about 87%.
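The tagging-and-counting procedure can be sketched in plain Python. The function name `accuracy` and the toy labels are illustrative assumptions, chosen so that 52 of the 60 predictions match the true labels.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy on empty input")
    # Tag each prediction as correct (True) or incorrect (False), then count.
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Toy data (assumed): 20 spam and 40 legitimate emails.
y_true = ["spam"] * 20 + ["not spam"] * 40
# The model gets 16 of the spam emails and 36 of the legitimate ones right.
y_pred = ["spam"] * 16 + ["not spam"] * 4 + ["not spam"] * 36 + ["spam"] * 4

score = accuracy(y_true, y_pred)
print(f"accuracy = {score:.3f}")  # 52 correct out of 60
```

The error rate for the same predictions is simply `1 - score`.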

2.3. The Allure and Limitations of Accuracy

Accuracy is appealing due to its simplicity and intuitive nature. However, it’s not always a reliable metric, especially when dealing with imbalanced datasets. The accuracy paradox arises when a model achieves high accuracy simply by predicting the majority class, which can be misleading in scenarios where the minority class is of greater interest.

3. Unveiling the Accuracy Paradox

The accuracy paradox highlights the pitfall of relying solely on accuracy, particularly when dealing with imbalanced datasets where one class significantly outweighs the others. This imbalance can skew the accuracy score, making it a deceptive measure of a model’s true performance.

3.1. Illustrating the Paradox with Spam Detection

Consider a scenario where only 3 out of 60 emails are actually spam. A model that naively classifies every email as “not spam” would be right on the 57 legitimate emails and achieve an accuracy of 57 / 60 = 95%, but it would fail to identify any of the actual spam emails.
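A few lines of Python make the paradox concrete. The data below is a toy reconstruction of the scenario (3 spam emails among 60), and the "model" is a stand-in that always answers "not spam".

```python
# Assumed toy data matching the scenario: 3 spam emails among 60.
y_true = ["spam"] * 3 + ["not spam"] * 57

# A "model" that ignores its input and always answers "not spam".
y_pred = ["not spam"] * len(y_true)

correct = sum(t == p for t, p in zip(y_true, y_pred))
baseline_accuracy = correct / len(y_true)

# Count how many of the actual spam emails were flagged as spam.
spam_caught = sum(t == "spam" and p == "spam" for t, p in zip(y_true, y_pred))

print(f"accuracy    = {baseline_accuracy:.2f}")
print(f"spam caught = {spam_caught} of {y_true.count('spam')}")
```

The accuracy looks excellent while the count of caught spam is zero, which is exactly the gap that accuracy alone cannot reveal.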

3.2. Why Accuracy Fails in Imbalanced Datasets

The problem with accuracy in imbalanced datasets is that it gives equal weight to every prediction, not to every class. Because most predictions concern the majority class, that class dominates the score. Thus, a model can achieve a high accuracy score simply by correctly classifying the majority class, even if it performs poorly on the minority class, which is often the class of interest.

4. Weighing the Pros and Cons of Accuracy

Understanding the advantages and disadvantages of accuracy helps in determining its suitability for different scenarios.

4.1. The Upsides of Accuracy

- Accuracy is most effective when dealing with balanced datasets where each class is equally important.

- It is easy to understand and communicate, making it a good starting point for model evaluation.

4.2. The Downsides of Accuracy

- Accuracy becomes unreliable when classes are imbalanced, as it can mask poor performance on the minority class.

- Communicating accuracy in such cases can be misleading and can hide the model’s inability to predict the target class effectively.

5. Introducing Precision and Recall: Alternatives to Accuracy

To overcome the limitations of accuracy, especially in imbalanced datasets, precision and recall offer more nuanced measures of model performance. These metrics focus on the model’s ability to correctly identify instances of a specific class, rather than overall correctness.

5.1. The Role of the Confusion Matrix

Before diving into precision and recall, it’s important to understand the confusion matrix. The confusion matrix breaks down the model’s predictions into four categories: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). This matrix provides a detailed view of the model’s performance, allowing for the calculation of precision and recall.
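As a minimal sketch, the four cells of a binary confusion matrix can be counted directly from the labels. The helper name `confusion_counts`, the choice of "spam" as the positive class, and the ten example emails are assumptions made for illustration.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive="spam"):
    """Return (TP, FP, FN, TN) for a binary problem."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            # Predicted positive: right if the truth is positive too.
            counts["TP" if truth == positive else "FP"] += 1
        else:
            # Predicted negative: a miss if the truth was positive.
            counts["FN" if truth == positive else "TN"] += 1
    return counts["TP"], counts["FP"], counts["FN"], counts["TN"]


# Assumed toy data: 4 spam emails and 6 legitimate ones.
y_true = ["spam"] * 4 + ["not spam"] * 6
y_pred = ["spam", "spam", "spam", "not spam",   # 3 caught, 1 missed
          "spam", "spam",                       # 2 false alarms
          "not spam", "not spam", "not spam", "not spam"]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(f"TP={tp} FP={fp} FN={fn} TN={tn}")
```

The four counts always sum to the number of predictions, and accuracy is (TP + TN) divided by that total.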

6. Understanding Precision

Precision quantifies the accuracy of positive predictions made by the model. It answers the question: “Of all the instances the model predicted as positive, how many were actually positive?” It is calculated as: Precision = TP / (TP + FP).
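The formula translates directly into code. In this sketch the function name `precision` and the counts are hypothetical, and returning 0.0 when the model makes no positive predictions is a convention chosen here, not the only possible one.

```python
def precision(tp, fp):
    """Precision = TP / (TP + FP)."""
    predicted_positive = tp + fp
    # Assumption: report 0.0 instead of raising when nothing was predicted positive.
    return tp / predicted_positive if predicted_positive else 0.0


# Assumed counts: 5 emails flagged as spam, 3 of which really are spam.
spam_precision = precision(tp=3, fp=2)
print(f"precision = {spam_precision:.2f}")
```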

6.1. A Real-World Scenario: Spam Detection

Consider a spam detection model where precision measures the proportion of emails flagged as spam that are actually spam. A high precision indicates that the model is accurate in identifying spam, minimizing the number of legitimate emails incorrectly classified as spam.

6.2. Precision vs. Accuracy: A Clear Distinction

Unlike accuracy, which measures overall correctness, precision focuses solely on the accuracy of positive predictions. This makes precision particularly useful in scenarios where the cost of false positives is high.

6.3. The Pros and Cons of Precision

- Pros: Precision is effective in imbalanced datasets and when the cost of false positives is high.

- Cons: Precision does not account for false negatives, meaning it doesn’t consider instances of the target class that the model failed to identify.

7. Grasping Recall

Recall, also known as sensitivity, measures the model’s ability to identify all relevant instances of the positive class. It answers the question: “Of all the actual positive instances, how many did the model correctly identify?” It is calculated as: Recall = TP / (TP + FN).
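Recall can be sketched the same way. The function name `recall` and the counts are hypothetical, and returning 0.0 when there are no actual positives is a convention chosen for this example.

```python
def recall(tp, fn):
    """Recall = TP / (TP + FN)."""
    actual_positive = tp + fn
    # Assumption: report 0.0 instead of raising when there are no actual positives.
    return tp / actual_positive if actual_positive else 0.0


# Assumed counts: 4 actual spam emails, 3 caught by the filter and 1 missed.
spam_recall = recall(tp=3, fn=1)

# Flagging every email as spam misses nothing, so recall is perfect
# even though such a filter would be useless in practice.
flag_everything_recall = recall(tp=4, fn=0)

print(f"recall = {spam_recall:.2f}")
print(f"recall when everything is flagged = {flag_everything_recall:.2f}")
```

The second call shows why recall is rarely read in isolation: it says nothing about how many legitimate emails were flagged along the way.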

7.1. Recall in Action: Spam Detection

In the context of spam detection, recall measures the proportion of actual spam emails that the model correctly identifies. A high recall indicates that the model is effective in detecting spam, minimizing the number of spam emails that slip through the filter.

7.2. Why Recall Matters

Recall is crucial in scenarios where the cost of false negatives is high. For example, in medical diagnosis, failing to identify a disease (false negative) can have severe consequences.

7.3. The Pros and Cons of Recall

- Pros: Recall is effective in imbalanced datasets and when the cost of false negatives is high.

- Cons: Recall does not account for false positives. A model that labels every instance as positive achieves perfect recall while being of little practical use.

8. Practical Considerations: Balancing Accuracy, Precision, and Recall

In practice, choosing the right metric involves balancing accuracy, precision, and recall based on the specific goals of the project and the relative costs of different types of errors.

8.1. Class Balance and Cost of Error

When interpreting precision, recall, and accuracy, it’s essential to consider the class balance and the cost of errors. Some metrics, like accuracy, can look misleadingly good and disguise poor performance on important minority classes.
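One simple way to reason about the cost of errors is to attach a cost to each false positive and each false negative and compare models by total cost. The sketch below is an illustration only: the function name, the error counts, and the cost values are all hypothetical.

```python
def total_error_cost(fp, fn, cost_fp, cost_fn):
    """Total cost of a model's mistakes under per-error costs."""
    return fp * cost_fp + fn * cost_fn


# Hypothetical error counts for two models on the same test set.
model_a = {"fp": 10, "fn": 1}   # many false alarms, few misses
model_b = {"fp": 2, "fn": 5}    # few false alarms, more misses

# Scenario 1 (assumed): a missed positive costs 20x as much as a false alarm.
a_when_misses_costly = total_error_cost(**model_a, cost_fp=1, cost_fn=20)
b_when_misses_costly = total_error_cost(**model_b, cost_fp=1, cost_fn=20)

# Scenario 2 (assumed): a false alarm costs 10x as much as a missed positive.
a_when_alarms_costly = total_error_cost(**model_a, cost_fp=10, cost_fn=1)
b_when_alarms_costly = total_error_cost(**model_b, cost_fp=10, cost_fn=1)

print(a_when_misses_costly, b_when_misses_costly)
print(a_when_alarms_costly, b_when_alarms_costly)
```

Under these assumed costs the preferred model flips between the two scenarios, which is why the choice of metric should follow from the application.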

8.2. The F1-Score: Harmonizing Precision and Recall

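The F1-score combines precision and recall into a single value. It is the harmonic mean of the two: F1 = 2 × (Precision × Recall) / (Precision + Recall). Because the harmonic mean is pulled toward the smaller of the two values, a model scores well on F1 only when both precision and recall are reasonably high.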
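The harmonic mean is short enough to write out by hand. In this sketch the function name `f1` and the input values are illustrative, and returning 0.0 when both inputs are zero is a convention assumed here.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both metrics are zero
    return 2 * precision * recall / (precision + recall)


balanced = f1(0.6, 0.75)

# With lopsided inputs the harmonic mean stays close to the weaker metric,
# unlike the arithmetic mean.
lopsided = f1(1.0, 0.1)
lopsided_arithmetic = (1.0 + 0.1) / 2

print(f"F1 (0.60, 0.75) = {balanced:.3f}")
print(f"F1 (1.00, 0.10) = {lopsided:.3f} vs arithmetic mean {lopsided_arithmetic:.2f}")
```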

8.3. Decision Thresholds: Fine-Tuning Model Performance

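Another way to navigate the balance between precision and recall is to set the decision threshold for probabilistic classification manually. Many classifiers output a probability and label an instance as positive when that probability reaches a default threshold, commonly 0.5. Raising the threshold typically increases precision at the expense of recall, and lowering it does the opposite.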
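The effect of moving the threshold can be sketched with a handful of predicted probabilities. The scores, labels, thresholds, and the helper name `precision_recall_at` are all made up for illustration.

```python
def precision_recall_at(threshold, scores, y_true):
    """Precision and recall when scores >= threshold count as positive."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(p == 1 and t == 1 for p, t in zip(y_pred, y_true))
    fp = sum(p == 1 and t == 0 for p, t in zip(y_pred, y_true))
    fn = sum(p == 0 and t == 1 for p, t in zip(y_pred, y_true))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Assumed model outputs (probability of the positive class) and true labels.
scores = [0.95, 0.90, 0.75, 0.70, 0.60, 0.40, 0.30, 0.10]
y_true = [1,    1,    0,    1,    0,    1,    0,    0]

default_p, default_r = precision_recall_at(0.5, scores, y_true)
strict_p, strict_r = precision_recall_at(0.8, scores, y_true)

print(f"threshold 0.5: precision={default_p:.2f}, recall={default_r:.2f}")
print(f"threshold 0.8: precision={strict_p:.2f}, recall={strict_r:.2f}")
```

On this toy data the stricter threshold removes the false positives but also drops a true positive, so precision rises while recall falls.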

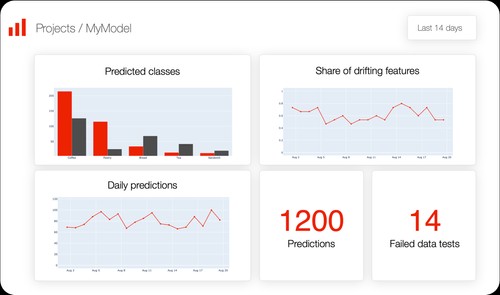

9. Leveraging Accuracy, Precision, and Recall in ML Monitoring

Accuracy, precision, and recall are valuable metrics for monitoring the performance of machine learning models over time. By tracking these metrics, you can detect degradation in model performance and take corrective action.

9.1. The Importance of True Labels

The major limitation of using these metrics for monitoring is the need for true labels in production. In some cases, you might need to wait days, weeks, or even months to know if the model predictions were correct.

9.2. Monitoring Proxy Metrics

In cases where true labels are not immediately available, you can monitor proxy metrics like data drift to detect deviations in the input data which might affect model quality.
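As a rough illustration of a proxy check, the sketch below compares the mean of one numeric feature in recent data against a reference sample. This is a deliberately crude heuristic chosen for brevity, not a standard drift test; the function name `mean_shift`, the sample values, and the alert threshold of 2 are assumptions.

```python
from statistics import mean, stdev


def mean_shift(reference, current):
    """Absolute difference in means, in units of the reference standard deviation."""
    spread = stdev(reference)
    if spread == 0:
        raise ValueError("reference values have zero variance")
    return abs(mean(current) - mean(reference)) / spread


# Assumed values of a single input feature.
reference = [10, 12, 11, 13, 9, 11, 10, 12]     # data the model was validated on
stable = [11, 10, 12, 11, 12, 10, 11, 11]       # recent data, similar distribution
shifted = [15, 16, 14, 17, 15, 16, 14, 15]      # recent data, clearly higher values

ALERT_THRESHOLD = 2.0  # hypothetical cut-off for raising an alert

stable_alert = mean_shift(reference, stable) > ALERT_THRESHOLD
shifted_alert = mean_shift(reference, shifted) > ALERT_THRESHOLD

print(f"stable batch alert:  {stable_alert}")
print(f"shifted batch alert: {shifted_alert}")
```

A production setup would more likely rely on a proper statistical test or distance measure per feature, but the idea is the same: flag input changes before true labels arrive.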

9.3. Segment-Specific Quality Metrics

In practical applications, it is often advisable to compute the quality metrics for specific segments. Focusing on a single overall quality metric might disguise low performance in an important segment.
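Computing a metric per segment takes only a grouping step. In this sketch the segment names, the records, and the helper name `accuracy_by_segment` are hypothetical.

```python
from collections import defaultdict


def accuracy_by_segment(records):
    """records: iterable of (segment, y_true, y_pred) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for segment, truth, pred in records:
        total[segment] += 1
        correct[segment] += int(truth == pred)
    return {segment: correct[segment] / total[segment] for segment in total}


# Assumed predictions for two customer segments.
records = (
    [("returning", 1, 1)] * 5 + [("returning", 0, 0)] * 3  # 8 of 8 correct
    + [("new", 1, 0)] * 2                                   # 0 of 2 correct
)

overall = sum(truth == pred for _, truth, pred in records) / len(records)
per_segment = accuracy_by_segment(records)

print(f"overall accuracy = {overall:.2f}")
for segment, value in per_segment.items():
    print(f"{segment:>10}: {value:.2f}")
```

The overall figure looks acceptable, yet every prediction for the smaller segment is wrong, which only the per-segment view exposes.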

10. Calculating Accuracy, Precision, and Recall in Python

To calculate accuracy, precision, and recall in Python, you can use a library such as scikit-learn. Its sklearn.metrics module provides functions, including accuracy_score, precision_score, and recall_score, that compute these metrics from the model’s predictions and the true labels.
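A minimal example with scikit-learn might look like the following. The labels are toy data invented for illustration, with 1 as the positive class, and the snippet assumes scikit-learn is installed.

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    precision_score,
    recall_score,
)

# Assumed toy labels: 1 = positive class, 0 = negative class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 0, 0]

acc = accuracy_score(y_true, y_pred)
prec = precision_score(y_true, y_pred)
rec = recall_score(y_true, y_pred)

# For binary 0/1 labels, ravel() yields the cells in the order TN, FP, FN, TP.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

print(f"accuracy={acc:.2f} precision={prec:.2f} recall={rec:.2f}")
print(f"TP={tp} FP={fp} FN={fn} TN={tn}")
```

By default, `precision_score` and `recall_score` treat the label 1 as the positive class in a binary problem.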

10.1. Using Evidently for Comprehensive Model Evaluation

Evidently is an open-source Python library that helps evaluate, test, and monitor ML models in production. It provides an interactive report that includes a confusion matrix, accuracy, precision, recall metrics, ROC curve, and other visualizations.

FAQ Section

1. What does accuracy mean in machine learning?

Accuracy measures how often a machine learning model correctly predicts the outcome, calculated by dividing the number of correct predictions by the total number of predictions. It’s a straightforward way to assess overall correctness.

2. How is accuracy calculated in machine learning?

Accuracy is calculated using the formula: Accuracy = (Number of Correct Predictions) / (Total Number of Predictions). The result is a fraction between 0 and 1, often reported as a percentage, that shows how often the model is right across all predictions.

3. Why is accuracy not always the best metric?

Accuracy can be misleading in imbalanced datasets where one class has significantly more instances than others. A model can achieve high accuracy by simply predicting the majority class, even if it performs poorly on the minority class.

4. When should I use precision instead of accuracy?

Precision is more suitable when the cost of false positives is high. It focuses on the accuracy of positive predictions, ensuring that when the model predicts a positive outcome, it is likely to be correct.

5. What does recall tell me about my model?

Recall measures the model’s ability to find all instances of the positive class. It’s useful when the cost of false negatives is high, as it focuses on minimizing the number of missed positive instances.

6. How do precision and recall relate to each other?

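Precision and recall usually trade off against each other. For a given model, changes that improve precision, such as raising the decision threshold, often reduce recall, and vice versa, although the relationship is a tendency and not a strict rule. The choice between them depends on the specific goals of the project and the relative costs of false positives and false negatives.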

7. What is the F1-score?

The F1-score is the harmonic mean of precision and recall, providing a balanced measure of the model’s performance. It’s useful when you want to balance both precision and recall.

8. How can I improve the accuracy of my model?

Improving accuracy depends on the specific problem and dataset. Techniques include feature engineering, trying different models, tuning hyperparameters, and addressing class imbalance through techniques like oversampling or undersampling.

9. Can accuracy be over 100%?

No, accuracy cannot be over 100%. It represents the percentage of correct predictions out of all predictions, so the maximum possible value is 100%.

10. Where can I learn more about machine learning metrics?

LEARNS.EDU.VN offers comprehensive courses and resources on machine learning, including detailed explanations of various metrics and their applications. Visit our website to explore our offerings.

Mastering the calculation and interpretation of accuracy in machine learning is pivotal for creating effective predictive models. LEARNS.EDU.VN is dedicated to providing you with the tools and knowledge necessary to excel in this field. By understanding the nuances of accuracy, precision, and recall, you can make informed decisions about model evaluation and optimization.
