Learning Apache Spark can seem daunting, but with the right approach, it’s achievable for anyone. This guide, brought to you by LEARNS.EDU.VN, explores how long it takes to learn Apache Spark, offering a structured path for beginners and experienced developers alike. We’ll cover essential concepts, practical tips, and resources to help you master this powerful big data processing framework. Discover how LEARNS.EDU.VN can support your learning journey with tailored courses and expert guidance, focusing on data processing, real-time analytics, and machine learning.

1. Understanding the Spark Learning Curve

The time it takes to learn Apache Spark varies significantly depending on your background, learning style, and goals. A clear understanding of these factors will help you set realistic expectations and tailor your learning plan.

1.1. Factors Influencing Learning Time

Several factors influence how long it takes to become proficient in Apache Spark:

- Prior Programming Experience: If you have experience with programming languages like Python, Scala, or Java, you’ll likely find it easier to grasp Spark’s concepts and APIs.

- Familiarity with Big Data Concepts: Understanding big data concepts such as distributed computing, Hadoop, and data warehousing can accelerate your learning process.

- Learning Style: Some individuals learn best through hands-on practice, while others prefer structured courses or documentation.

- Time Commitment: The amount of time you dedicate to learning each week will directly impact your progress.

- Learning Resources: Access to high-quality tutorials, documentation, and community support can significantly improve your learning experience.

- Project Goals: Are you aiming to use Spark for data analysis, machine learning, or real-time streaming? Each use case requires a different level of expertise.

1.2. Estimating Learning Time: A Tiered Approach

Here’s a tiered approach to estimate the time required to learn Apache Spark, depending on your desired level of proficiency:

| Proficiency Level | Description | Estimated Time Commitment | Key Skills |
|---|---|---|---|
| Beginner (Basic) | Able to write simple Spark applications, understand core concepts, and perform basic data transformations. | 40-80 hours | Spark basics, RDDs, DataFrames, Spark SQL, basic transformations and actions |
| Intermediate (Competent) | Can develop more complex Spark applications, optimize performance, and work with various data sources and formats. | 160-320 hours | Advanced Spark SQL, performance tuning, working with Parquet/ORC, understanding Spark architecture, basic machine learning pipelines |
| Advanced (Expert) | Capable of designing and implementing large-scale Spark applications, contributing to open-source projects, and mentoring other developers. | 640+ hours | Spark Streaming, GraphX, advanced machine learning with MLlib/Spark ML, deep understanding of Spark internals, cluster management |
| Master (Architect) | Able to design and manage very large, complex Spark applications, contribute to the Spark ecosystem, and mentor other developers on Spark best practices. | 1280+ hours | Cluster tuning and integration, advanced use of the Spark APIs and libraries, optimization and cost management |
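The hour ranges in the table turn into calendar time once you fix a weekly commitment. At 10 hours per week, for example, the beginner tier (40-80 hours) works out to roughly 4 to 8 weeks, and the intermediate tier (160-320 hours) to roughly 16 to 32 weeks. The small helper below is a sketch of that arithmetic; the tier names and hour figures come from the table, while the function name `weeks_needed` and the 10-hour example are illustrative assumptions.

```python
import math

# Hour ranges copied from the table; None marks an open-ended "N+" upper bound.
TIER_HOURS = {
    "Beginner": (40, 80),
    "Intermediate": (160, 320),
    "Advanced": (640, None),
    "Master": (1280, None),
}


def weeks_needed(tier, hours_per_week):
    """Return (min_weeks, max_weeks) for a tier; max_weeks is None if open-ended."""
    if hours_per_week <= 0:
        raise ValueError("hours_per_week must be positive")
    low, high = TIER_HOURS[tier]
    # Round up: a partial week of study still occupies a calendar week.
    min_weeks = math.ceil(low / hours_per_week)
    max_weeks = None if high is None else math.ceil(high / hours_per_week)
    return min_weeks, max_weeks


if __name__ == "__main__":
    for tier in TIER_HOURS:
        print(tier, weeks_needed(tier, hours_per_week=10))
```

Doubling your weekly hours roughly halves the calendar time, which is why the time commitment factor above matters as much as prior experience.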

2. Setting Up Your Learning Environment

Before diving into Apache Spark, it’s essential to set up a conducive learning environment. This involves installing the necessary software and configuring your system for Spark development.

2.1. Installing Apache Spark

Follow these steps to install Apache Spark on your local machine:

- Download Apache Spark: Visit the Apache Spark downloads page, choose a release, and select a package pre-built for Apache Hadoop (e.g., "Pre-built for Apache Hadoop 3.3 and later"). Extract the downloaded archive to a directory of your choice.

- Install Java: Ensure a Java Development Kit (JDK) version supported by your Spark release is installed (for example, Spark 3.5 runs on Java 8, 11, or 17). You can download it from Oracle’s website or use an open-source distribution like OpenJDK.

- Set Up Environment Variables: Configure the following environment variables:

  - JAVA_HOME: Point to your JDK installation directory.
  - SPARK_HOME: Point to your Spark installation directory.
  - PATH: Add `$SPARK_HOME/bin` and `$JAVA_HOME/bin` to your system’s PATH variable.

- Verify Installation: Open a terminal and run `spark-shell` (or `pyspark` for the Python shell). If Spark starts successfully, you’re ready to go.
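If `spark-shell` does not start, the environment variables are the usual suspects. The sketch below checks them from Python; `check_spark_env` is a hypothetical helper that only inspects `JAVA_HOME`, `SPARK_HOME`, and `PATH`, and it does not verify that your Java version is one your Spark release supports.

```python
import os
import shutil
from pathlib import Path


def check_spark_env(env=None):
    """Return a list of problems with the Spark-related environment (empty if none found)."""
    env = os.environ if env is None else env
    problems = []
    for var in ("JAVA_HOME", "SPARK_HOME"):
        value = env.get(var)
        if not value:
            problems.append(f"{var} is not set")
        elif not Path(value).is_dir():
            problems.append(f"{var} points to a missing directory: {value}")
    # shutil.which searches the directories listed in the given PATH string.
    if shutil.which("spark-shell", path=env.get("PATH", "")) is None:
        problems.append("spark-shell is not on PATH")
    return problems


if __name__ == "__main__":
    issues = check_spark_env()
    print("\n".join(issues) if issues else "Environment looks OK; try running spark-shell.")
```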

2.2. Choosing an IDE

An Integrated Development Environment (IDE) can significantly enhance your Spark development experience. Here are some popular choices:

- IntelliJ IDEA: A powerful IDE with excellent support for Scala and Java.

- PyCharm: A dedicated Python IDE that works well for PySpark development.

- VS Code: A lightweight and versatile code editor with extensions for Python, Scala, and Java.

- Databricks Notebooks: A collaborative, cloud-based environment ideal for Spark development and data exploration.

2.3. Setting Up Databricks Community Edition

Databricks Community Edition is a free, cloud-based platform that provides a simplified environment for learning and experimenting with Apache Spark.

- Create an Account: Visit the Databricks website and sign up for the Community Edition.

- Create a Notebook: Once logged in, create a new notebook using either Python or Scala.

- Start Coding: You can now start writing and executing Spark code directly in the notebook.

3. Essential Concepts to Learn

To effectively learn Apache Spark, it’s crucial to grasp several core concepts. These concepts form the foundation for building and understanding Spark applications.

3.1. Resilient Distributed Datasets (RDDs)

RDDs are the fundamental data structure in Spark. They are immutable, distributed collections of data that can be processed in parallel across a cluster.

- Key Characteristics:
  - Immutable: Once created, an RDD cannot be changed; transformations produce new RDDs instead.
  - Distributed: RDDs are partitioned across multiple nodes in a cluster.
  - Resilient: If a partition is lost, Spark can recompute it from the RDD’s lineage (the sequence of transformations that produced it).

- Operations on RDDs:
  - Transformations: Operations that create new RDDs from existing ones (e.g., `map`, `filter`, `reduceByKey`). Transformations are lazy: Spark does not run them until an action needs their result.
  - Actions: Operations that return a value to the driver or write data to an external storage system (e.g., `count`, `collect`, `saveAsTextFile`).

3.2. DataFrames

DataFrames are a higher-level abstraction built on top of RDDs. They provide a structured way to organize and process data, similar to tables in a relational database.

- Key Characteristics:
  - Structured Data: DataFrames have a schema that defines the name and data type of each column.
  - Optimized Execution: Spark’s Catalyst query optimizer plans DataFrame operations before they run, which improves performance.
  - Language Support: DataFrames are available in Python, Scala, Java, and R.

- Operations on DataFrames:
  - SQL-like Queries: DataFrames support SQL-like queries for data manipulation and analysis.
  - Transformations: Operations such as `select`, `filter`, `groupBy`, and `join`.
  - Actions: Operations such as `show`, `count`, and `collect`, plus writing output through `df.write` (for example, `df.write.parquet(path)`).

3.3. Spark SQL

Spark SQL is a module for working with structured data using SQL queries. It allows you to query DataFrames and other data sources using standard SQL syntax.

- Key Features:
  - ANSI SQL Compliance: Spark SQL supports a wide range of ANSI SQL features.
  - Integration with DataFrames: `spark.sql()` returns a DataFrame, and any DataFrame can be registered as a temporary view and queried with SQL.
  - Data Source Connectivity: Spark SQL can read from and write to many sources, including Hive tables, Parquet, JSON, and JDBC databases.

3.4. Spark Streaming

Spark Streaming is an extension of Spark that enables near-real-time data processing. It ingests data from sources such as Kafka, processes it in micro-batches, and writes the results to external systems. The Spark documentation describes Spark Streaming (the DStream API) as a legacy project; new applications typically use Structured Streaming, which expresses streaming computations with DataFrames and Spark SQL.

- Key Concepts:
  - DStreams: Discretized streams are sequences of RDDs that represent a stream of data.
  - Micro-Batch Processing: Spark Streaming processes data in small batches at regular intervals.
  - Fault Tolerance: Spark Streaming provides fault tolerance through checkpointing and replication.

3.5. MLlib and Spark ML

MLlib is Spark’s machine learning library, and it offers two APIs: the original RDD-based API in the `spark.mllib` package, which is now in maintenance mode, and the newer DataFrame-based API in the `spark.ml` package (often called "Spark ML"), which is the primary API.

- Key Algorithms:
  - Classification: Logistic Regression, Decision Trees, Random Forests.
  - Regression: Linear Regression, Gradient-Boosted Trees.
  - Clustering: K-Means, Gaussian Mixture Models.
  - Recommendation: Alternating Least Squares (ALS).

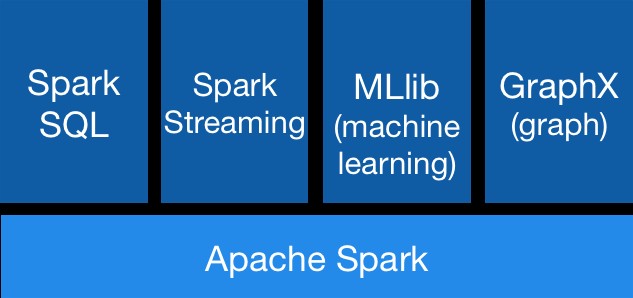

Spark Ecosystem

The Apache Spark ecosystem includes various components for different types of data processing.

4. Creating a Structured Learning Plan

A well-structured learning plan is crucial for efficiently learning Apache Spark. Here’s a sample plan to guide you through the process.

4.1. Week 1-2: Spark Basics

- Objective: Understand the core concepts of Spark and set up your development environment.

- Topics:
  - Introduction to Apache Spark
  - Installing and Configuring Spark
  - RDDs: Creation, Transformations, and Actions
  - DataFrames: Creation, Manipulation, and Queries
  - Spark SQL: Basic SQL Operations

- Resources:
  - Apache Spark Documentation
  - Databricks Tutorials
  - Online Courses (e.g., Coursera, Udemy)

- Hands-On:
  - Write simple Spark applications to read and process data from text files.
  - Create DataFrames from various data sources (e.g., CSV, JSON).
  - Run SQL queries on DataFrames.

4.2. Week 3-4: Advanced Spark SQL and Performance Tuning

- Objective: Deepen your understanding of Spark SQL and learn how to optimize Spark applications for performance.

- Topics:
  - Advanced Spark SQL Features (e.g., Window Functions, User-Defined Functions)
  - Spark Architecture: Understanding Spark’s Execution Model
  - Performance Tuning: Optimizing Spark Configurations and Code
  - Working with Parquet and ORC Files

- Resources:
  - Spark SQL Documentation
  - “Spark: The Definitive Guide” by Bill Chambers and Matei Zaharia
  - Blog Posts and Articles on Spark Performance Tuning

- Hands-On:
  - Implement complex SQL queries using Spark SQL.
  - Analyze the performance of Spark applications using the Spark UI.
  - Optimize Spark configurations to improve performance.

4.3. Week 5-6: Spark Streaming and Real-Time Data Processing

- Objective: Learn how to use Spark Streaming to process real-time data.

- Topics:
  - Introduction to Spark Streaming
  - DStreams: Creating and Transforming Streams
  - Integrating with Streaming Sources (e.g., Kafka)
  - Windowing and State Management

- Resources:
  - Spark Streaming Documentation
  - Tutorials on Integrating Spark Streaming with Kafka
  - Example Projects on GitHub

- Hands-On:
  - Build a Spark Streaming application to process data from a Kafka topic.
  - Implement windowing operations to calculate real-time statistics.
  - Use checkpointing to ensure fault tolerance.

4.4. Week 7-8: Machine Learning with MLlib and Spark ML

- Objective: Learn how to use Spark’s machine learning libraries to build and deploy machine learning models.

- Topics:
  - Introduction to MLlib and Spark ML
  - Classification Algorithms (e.g., Logistic Regression, Decision Trees)
  - Regression Algorithms (e.g., Linear Regression, Gradient-Boosted Trees)
  - Clustering Algorithms (e.g., K-Means)
  - Model Evaluation and Tuning

- Resources:
  - MLlib Documentation
  - Spark ML Documentation
  - Online Courses on Machine Learning with Spark

- Hands-On:
  - Build a classification model using Spark ML to predict customer churn.
  - Implement a regression model using MLlib to predict sales.
  - Use K-Means to cluster customers based on their purchasing behavior.

5. Leveraging LEARNS.EDU.VN for Your Spark Journey

LEARNS.EDU.VN offers a comprehensive platform to enhance your Apache Spark learning experience. Our resources are designed to provide you with the knowledge and skills you need to succeed.

5.1. Tailored Courses and Learning Paths

We provide tailored courses and learning paths specifically designed for Apache Spark. These resources help you master the core concepts and practical skills required for success in this field.

5.2. Expert Guidance and Support

LEARNS.EDU.VN connects you with seasoned Spark experts who can provide guidance, answer your questions, and offer personalized feedback on your projects. This support is invaluable as you navigate the complexities of Spark.

5.3. Hands-On Projects and Real-World Scenarios

Our platform offers hands-on projects and real-world scenarios that allow you to apply your knowledge and build a strong portfolio. These practical experiences are essential for demonstrating your skills to potential employers.

5.4. Community Engagement and Networking

Join our vibrant community of learners to collaborate, share insights, and network with fellow Spark enthusiasts. This community support fosters a collaborative learning environment and expands your professional connections.

6. Tips for Efficient Learning

To maximize your learning efficiency, consider these practical tips:

- Set Clear Goals: Define what you want to achieve with Spark and set specific, measurable goals.

- Focus on Practical Application: Prioritize hands-on coding and building projects over theoretical knowledge.

- Break Down Complex Topics: Divide complex topics into smaller, more manageable chunks.

- Practice Regularly: Consistent practice is key to reinforcing your understanding and skills.

- Seek Feedback: Ask for feedback from experienced Spark developers to identify areas for improvement.

- Stay Up-to-Date: Keep abreast of the latest developments and best practices in the Spark ecosystem.

7. Common Challenges and How to Overcome Them

Learning Apache Spark can present several challenges. Here’s how to overcome them:

- Complexity of Distributed Computing:
  - Challenge: Understanding the intricacies of distributed computing can be daunting.
  - Solution: Start with basic concepts like data partitioning and task distribution. Use visual aids and diagrams to understand the architecture.

- Performance Tuning:
  - Challenge: Optimizing Spark applications for performance can be tricky.
  - Solution: Learn about Spark’s execution model and use the Spark UI to identify bottlenecks. Experiment with different configurations and data formats.

- Debugging:
  - Challenge: Debugging distributed applications can be challenging.
  - Solution: Use logging and monitoring tools to track the execution of your code. Break down complex operations into smaller steps and test them individually.

- Integration with Other Technologies:
  - Challenge: Integrating Spark with other technologies like Kafka and Hadoop can be complex.
  - Solution: Follow tutorials and examples to learn how to configure and use these technologies together. Refer to the official documentation for guidance.

8. Real-World Applications of Apache Spark

Apache Spark is used in a wide range of industries and applications. Here are some notable examples:

- Data Analysis: Spark is used for analyzing large datasets to extract insights and trends.

- Machine Learning: Spark’s MLlib and Spark ML libraries are used for building and deploying machine learning models.

- Real-Time Streaming: Spark’s streaming APIs (Spark Streaming and Structured Streaming) are used for processing real-time data from sources such as Kafka.

- ETL (Extract, Transform, Load): Spark is used for transforming and loading data into data warehouses and data lakes.

- Graph Processing: Spark’s GraphX library is used for analyzing and processing graph-structured data.

9. The Future of Apache Spark

Apache Spark continues to evolve with new features and improvements. Some key trends include:

- Enhanced Performance: Ongoing efforts to optimize Spark’s execution engine and data processing capabilities.

- Integration with Cloud Platforms: Seamless integration with cloud platforms like AWS, Azure, and Google Cloud.

- Support for New Data Sources: Expanding support for new data sources and formats.

- Advanced Machine Learning Capabilities: Continued development of Spark’s machine learning libraries.

- Improved Usability: Efforts to simplify the Spark API and make it more accessible to developers.

10. Resources for Continued Learning

To continue your Apache Spark journey, explore these valuable resources:

- Official Apache Spark Documentation: The official documentation is a comprehensive resource for learning about Spark’s features and APIs.

- Online Courses: Platforms like Coursera, Udemy, and edX offer courses on Apache Spark.

- Books: “Spark: The Definitive Guide” by Bill Chambers and Matei Zaharia is a highly recommended book.

- Blog Posts and Articles: Numerous blogs and articles cover various aspects of Spark.

- Community Channels: The Apache Spark mailing lists and Stack Overflow (the apache-spark tag) are good places to ask questions and get help from other developers.

- GitHub: Explore open-source Spark projects on GitHub to learn from real-world code.

- Conferences and Meetups: Attend conferences and meetups to network with other Spark enthusiasts and learn about the latest trends.

FAQ Section:

Here are some frequently asked questions about learning Apache Spark:

- Is Apache Spark difficult to learn?
  - Apache Spark can be challenging initially, especially if you’re new to distributed computing. However, with a structured learning plan and consistent practice, it’s manageable.

- What programming languages can I use with Spark?
  - Spark supports Python, Scala, Java, and R. Python and Scala are the most commonly used languages.

- Do I need to know Hadoop to learn Spark?
  - While understanding Hadoop can be helpful, it’s not strictly necessary. Spark can run independently of Hadoop, for example in local mode on a single machine.

- What are the prerequisites for learning Spark?
  - Basic programming knowledge (preferably Python or Scala) and a basic understanding of data processing concepts are helpful.

- Can I learn Spark without a background in big data?
  - Yes, you can learn Spark without prior big data experience. Start with the basics and gradually build your knowledge.

- How much does it cost to learn Spark?
  - You can learn Spark for free using open-source resources and free online courses. Paid courses and certifications are also available.

- What are the job prospects for Spark developers?
  - Spark is widely used for data engineering, analytics, and machine learning, so Spark skills are relevant to many data roles across industries.

- What skills are essential for a Spark developer?
  - Essential skills include proficiency in Spark, knowledge of data processing techniques, and experience with big data technologies.

- How can I stay up-to-date with the latest Spark developments?
  - Follow the official Apache Spark blog, attend conferences, and participate in community forums.

- Is it better to learn PySpark or Scala for Spark development?
  - Both are good choices. PySpark is easier to learn if you already know Python. For DataFrame and SQL code, performance is similar in both languages because the same optimizer plans the query; Scala tends to be faster for custom logic such as UDFs and RDD code, and it also offers the typed Dataset API, which is not available in Python.

By following this comprehensive guide, you can embark on a successful journey to master Apache Spark. Remember, consistent effort and a well-structured learning plan are key to achieving your goals. Visit LEARNS.EDU.VN to explore our tailored courses, expert guidance, and hands-on projects that will accelerate your learning and prepare you for a rewarding career in the world of big data. Contact us at 123 Education Way, Learnville, CA 90210, United States, Whatsapp: +1 555-555-1212, or visit our website at learns.edu.vn to learn more.
