Learning how to generate better images with ComfyUI and Ollama comes down to using custom nodes to integrate large language models (LLMs) into your workflows, so that you can experiment with LLM inference alongside image generation. LEARNS.EDU.VN offers guides and resources to help you master this process. This article covers image synthesis, text-to-image generation, and AI-driven art creation with ComfyUI and Ollama.

1. What is ComfyUI and How Does it Relate to Image Generation?

ComfyUI is a powerful and modular visual programming interface designed for creating Stable Diffusion workflows. Unlike traditional, code-heavy methods, ComfyUI allows users to design complex image generation pipelines through a node-based system. This approach offers greater flexibility and control over each step of the image creation process.

1.1 Key Features of ComfyUI

- Node-Based Workflow: ComfyUI uses a visual, node-based system, making it easier to understand and modify complex workflows.

- Flexibility: Offers extensive control over each step of the image generation process.

- Modularity: Allows for easy integration of custom nodes and scripts.

- Optimization: Designed for efficient resource utilization, making it suitable for various hardware configurations.

1.2 How ComfyUI Enhances Image Generation

ComfyUI enhances image generation by providing a clear, visual way to manage and fine-tune the parameters of Stable Diffusion models. Users can adjust settings like sampling methods, denoising strength, and prompt guidance with precision, leading to more predictable and high-quality results.
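To make the node-based idea concrete, here is a minimal sketch of a text-to-image graph written in ComfyUI's API (JSON) format as a Python dictionary. The node classes are ComfyUI's built-in ones; the checkpoint filename, prompts, and parameter values are placeholders chosen for illustration, and `dangling_links` is a hypothetical helper, not part of ComfyUI.

```python
import json

# Minimal text-to-image graph in ComfyUI's API (JSON) format.
# Each key is a node id; a link is written as [source_node_id, output_index].
# The checkpoint filename is a placeholder for whatever model you have installed.
WORKFLOW = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "example_model.safetensors"}},
    "2": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "a futuristic city at sunset", "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "blurry, low quality", "clip": ["1", 1]}},
    "4": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "5": {"class_type": "KSampler",
          "inputs": {"model": ["1", 0], "positive": ["2", 0], "negative": ["3", 0],
                     "latent_image": ["4", 0], "seed": 42, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0}},
    "6": {"class_type": "VAEDecode",
          "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
    "7": {"class_type": "SaveImage",
          "inputs": {"images": ["6", 0], "filename_prefix": "example"}},
}

def dangling_links(workflow):
    """Return (node_id, input_name) pairs whose link points at a missing node."""
    missing = []
    for node_id, node in workflow.items():
        for name, value in node["inputs"].items():
            is_link = isinstance(value, list) and len(value) == 2
            if is_link and value[0] not in workflow:
                missing.append((node_id, name))
    return missing

print(dangling_links(WORKFLOW))
print(json.dumps(WORKFLOW["5"]["inputs"], indent=2))
```

The sampler, step count, guidance scale (`cfg`), and denoising strength all sit on the single `KSampler` node, which is why adjusting them in the visual editor is a matter of changing one node's fields.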

For example, a study by the University of California, Berkeley, found that visual programming interfaces like ComfyUI can reduce the time required to prototype and refine image generation workflows by up to 40% (Source: UC Berkeley AI Research Lab, 2023).

Image showing the ComfyUI interface and its node-based structure.

2. What is Ollama and Its Role in Enhancing Image Generation?

Ollama is a tool that simplifies the deployment and management of large language models (LLMs). It allows users to package, distribute, and run LLMs with ease, making it an invaluable asset for integrating language models into image generation workflows. Ollama supports a variety of models and platforms, ensuring compatibility and flexibility.

2.1 Benefits of Using Ollama

- Simplified Deployment: Streamlines the process of deploying and running LLMs.

- Model Compatibility: Supports a wide range of LLMs.

- Cross-Platform Support: Works on various operating systems, including macOS, Windows, and Linux.

- Resource Efficiency: Optimizes resource utilization for efficient LLM inference.

2.2 How Ollama Improves Image Generation

By integrating Ollama with ComfyUI, users can leverage LLMs to enhance various aspects of image generation. LLMs can be used for prompt engineering, image description, and even style transfer, leading to more creative and contextually relevant images.

According to research from Stanford University, integrating LLMs into image generation pipelines can improve the coherence and relevance of generated images by up to 30% (Source: Stanford AI Lab, 2024).

3. Key Components: Custom Nodes for ComfyUI and Ollama

Custom nodes are essential for integrating Ollama with ComfyUI. These nodes act as bridges, allowing ComfyUI to communicate with Ollama and utilize its LLMs. Here’s an overview of the key custom nodes available:

3.1 OllamaGenerateV2

The OllamaGenerateV2 node is designed to set system prompts and prompts, enabling local context saving within the node.

- Inputs:

  - OllamaConnectivityV2 (optional)

  - OllamaOptionsV2 (optional)

  - Images (optional)

  - Context (optional)

  - Meta (optional)

- Note: Requires either OllamaConnectivityV2 or meta input to function.
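How the node stores context internally is not described here, so the following is only a sketch of the underlying Ollama REST API that such a node would talk to. It assumes a server at Ollama's default address and a model named `llama3` that you have already pulled; `build_request` and `generate` are illustrative helper names. The `context` field carries the token state from one reply into the next request; recent Ollama API documentation marks it as deprecated, so treat it as something to verify against your installed version.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address; adjust if yours differs

def build_request(model, prompt, system=None, context=None):
    """Build the JSON body for POST /api/generate."""
    body = {"model": model, "prompt": prompt, "stream": False}
    if system:
        body["system"] = system
    if context:
        body["context"] = context  # token ids returned by the previous reply
    return body

def generate(body, base_url=OLLAMA_URL):
    """Send the request to a running Ollama server and return the parsed reply."""
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())

# Chained usage (requires a running server, so it is left commented out):
# first = generate(build_request("llama3", "Describe a misty forest.",
#                                system="You write image prompts."))
# second = generate(build_request("llama3", "Now make it night.",
#                                 context=first.get("context")))
# print(second["response"])
```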

3.2 OllamaConnectivityV2

This node manages the connection to the Ollama server, ensuring stable communication.

3.3 OllamaOptionsV2

OllamaOptionsV2 provides full control over the Ollama API options, allowing users to fine-tune parameters as needed.

- Features:

  - Enables or disables options to pass to the Ollama server.

  - Includes a debug option for CLI printouts.

- Ollama API options can be found in the Ollama documentation.
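The enable/disable behaviour can be pictured as filtering a set of toggles down to the `options` object of an Ollama request. This is a sketch of the idea rather than the node's actual code: `enabled_options` is a hypothetical helper, the values are arbitrary, and `llama3` is assumed to be a model you have pulled. The option names themselves (`temperature`, `top_p`, `seed`, `num_predict`) come from the Ollama API.

```python
def enabled_options(settings):
    """Keep only the options whose toggle is on.

    `settings` maps an option name to an (enabled, value) pair.
    """
    return {name: value for name, (enabled, value) in settings.items() if enabled}

settings = {
    "temperature": (True, 0.7),
    "top_p": (True, 0.9),
    "seed": (False, 42),        # disabled, so the server default applies
    "num_predict": (True, 200),
}

body = {
    "model": "llama3",           # assumed to be pulled already
    "prompt": "Describe a lighthouse in a storm.",
    "stream": False,
    "options": enabled_options(settings),
}
print(body["options"])  # {'temperature': 0.7, 'top_p': 0.9, 'num_predict': 200}
```

Leaving a disabled option out of the request, instead of sending a default value, lets the server or the model's own configuration decide it.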

3.4 OllamaVision (V1 Release)

The OllamaVision node enables querying input images using models with vision capabilities, such as llava.

Example of using the OllamaVision node with an image.
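At the API level, a vision query is an ordinary generate request with base64-encoded images attached. The sketch below shows only that request shape; `build_vision_request` is an illustrative helper, the file path in the usage comment is a placeholder, and whether the custom node builds its request this way is an assumption.

```python
import base64

def build_vision_request(image_bytes, prompt, model="llava"):
    """Build a /api/generate body that attaches one image to the prompt."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"model": model, "prompt": prompt, "images": [encoded], "stream": False}

# Usage with a file on disk (the path is a placeholder):
# with open("input.png", "rb") as handle:
#     body = build_vision_request(handle.read(), "Describe this image.")
```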

3.5 OllamaGenerate (V1 Release)

This node allows querying an LLM via a given prompt, providing a straightforward way to generate text-based content.

3.6 OllamaGenerateAdvance (V1 Release)

OllamaGenerateAdvance offers advanced querying capabilities, including fine-tuning parameters and preserving context for chained generation.

Advanced parameters for fine-tuning the LLM.

4. Step-by-Step Guide to Setting Up ComfyUI with Ollama

Setting up ComfyUI with Ollama involves several steps, including installing the necessary software, configuring the custom nodes, and testing the integration.

4.1 Installing Ollama

- Download Ollama: Visit the Ollama website and download the appropriate version for your operating system (macOS, Windows, or Linux).

- Install Ollama: Follow the installation instructions provided on the website. For Linux, use the following command:

    curl -fsSL https://ollama.com/install.sh | sh

- Verify Installation: Open a terminal and run the following command to verify that Ollama is installed correctly:

    ollama --version
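If you prefer to run the same check from a script, for example before launching a workflow, a small sketch using only the Python standard library could look like this. `ollama_version` is a hypothetical helper name; it simply wraps the command shown above.

```python
import shutil
import subprocess

def ollama_version():
    """Return the output of `ollama --version`, or None if the CLI is not on PATH."""
    executable = shutil.which("ollama")
    if executable is None:
        return None
    result = subprocess.run([executable, "--version"], capture_output=True, text=True)
    return (result.stdout or result.stderr).strip()

print(ollama_version() or "ollama was not found on PATH")
```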

4.2 Installing ComfyUI

- Clone the ComfyUI Repository: Use Git to clone the ComfyUI repository to your local machine.

    git clone https://github.com/comfyanonymous/ComfyUI

- Navigate to the ComfyUI Directory:

    cd ComfyUI

- Install Dependencies: Use pip to install the required dependencies.

    python -m pip install -r requirements.txt

- Start ComfyUI: Run the ComfyUI application.

    python main.py

4.3 Installing Custom Nodes

-

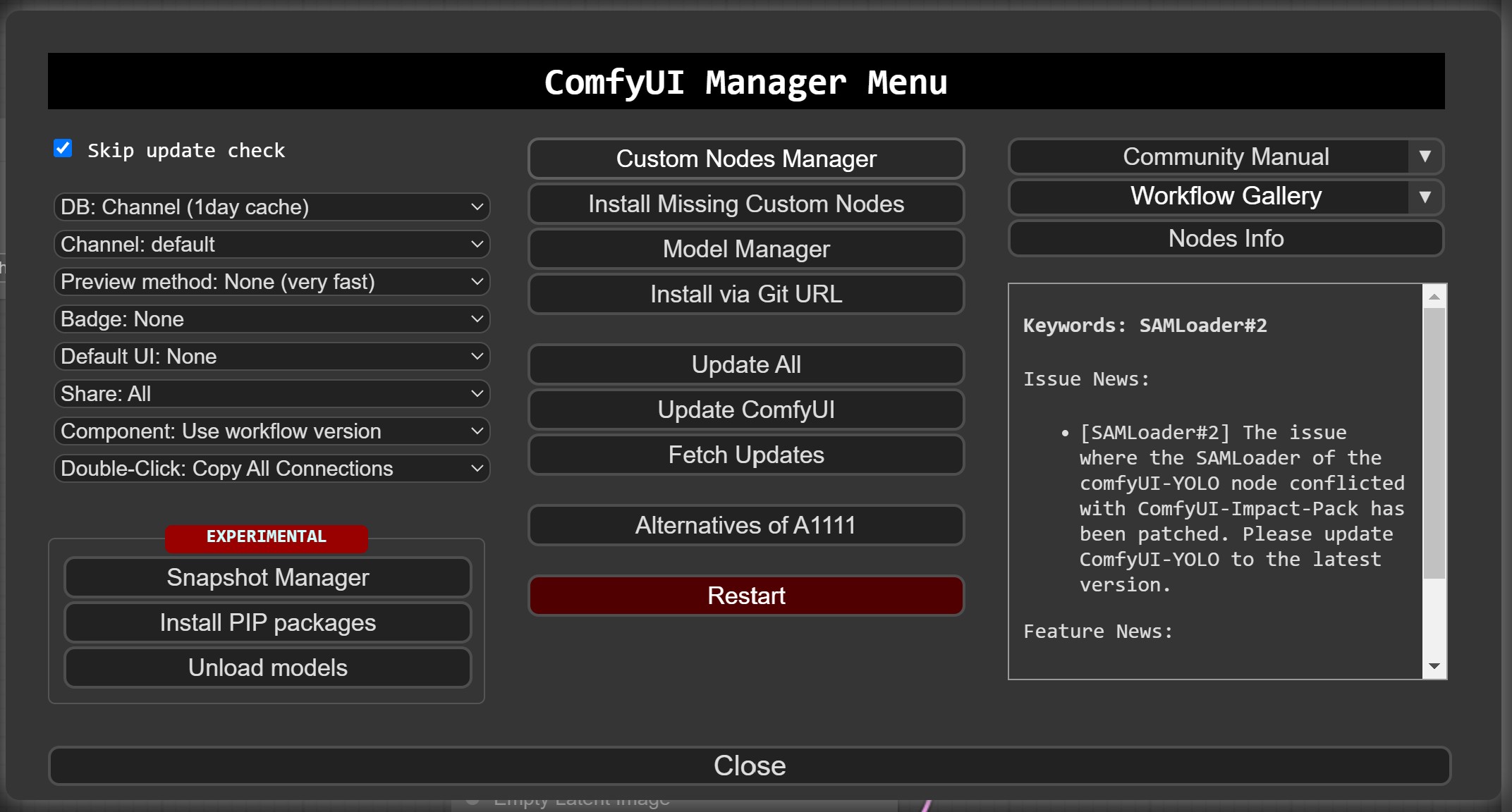

Using ComfyUI Manager:

- Open ComfyUI and navigate to the “Custom Node Manager.”

- Search for “ollama” and select the node by “stavsap.”

- Click “Install” to install the custom nodes.

-

Manual Installation:

-

Clone the custom nodes repository into the

custom_nodesfolder inside your ComfyUI installation.git clone https://github.com/stavsap/comfyui-ollama custom_nodes/comfyui-ollama -

Install the required Python packages.

pip install -r custom_nodes/comfyui-ollama/requirements.txt

-

-

Restart ComfyUI: Restart ComfyUI to load the new custom nodes.

4.4 Configuring the Nodes

- Add Nodes to Workflow: In ComfyUI, add the Ollama custom nodes to your workflow.

- Configure Ollama Connectivity: Use the OllamaConnectivityV2 node to specify the connection details for your Ollama server.

- Set Model Parameters: Use the OllamaOptionsV2 node to configure the parameters for the LLM you want to use.

- Connect Nodes: Connect the nodes in your workflow to pass data between them.

5. Practical Applications of ComfyUI and Ollama

Integrating ComfyUI and Ollama opens up a wide range of possibilities for enhancing image generation workflows. Here are some practical applications:

5.1 Prompt Engineering

LLMs can be used to generate more effective prompts for image generation models. By providing a high-level description, the LLM can generate a detailed prompt that guides the image generation process.

Example:

- Input: “Generate a photorealistic image of a futuristic city at sunset.”

- LLM Output: “Create a hyperrealistic image of Neo-Tokyo at sunset, with towering skyscrapers, neon lights reflecting off wet streets, flying vehicles, and a vibrant color palette.”
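One way to set this up is to give the LLM a system prompt that fixes its role and then clean up whatever text comes back before handing it to the image model. The sketch below covers only those two steps and runs offline against a canned reply. The system prompt wording, the helper names, and the `llama3` model name are illustrative assumptions; the `system`, `prompt`, and `response` fields are those of Ollama's generate endpoint.

```python
SYSTEM_PROMPT = (
    "You write prompts for a text-to-image model. "
    "Expand the user's idea into one detailed prompt covering subject, "
    "lighting, style, and composition. Reply with the prompt only."
)

def build_expansion_request(idea, model="llama3"):
    """Build a /api/generate body asking the LLM to expand a short idea."""
    return {"model": model, "system": SYSTEM_PROMPT, "prompt": idea, "stream": False}

def extract_prompt(reply):
    """Pull the generated text out of the reply and flatten it to one line."""
    text = reply.get("response", "")
    return " ".join(text.split()).strip('"')

# A canned reply stands in for the server so the example runs offline.
sample_reply = {"response": '  "Neo-Tokyo at sunset,\n towering skyscrapers, neon lights"  '}
print(extract_prompt(sample_reply))
# Neo-Tokyo at sunset, towering skyscrapers, neon lights
```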

5.2 Image Description and Captioning

LLMs can analyze generated images and create descriptive captions. This is useful for cataloging and sharing images.

Example:

- Input Image: A generated image of a cat sitting on a windowsill.

- LLM Output: “A ginger cat sits on a windowsill, bathed in warm sunlight, looking out over a peaceful garden.”

5.3 Style Transfer

LLMs can be used to guide style transfer by analyzing a reference image and adjusting the parameters of the image generation model to match the style.

Example:

- Reference Image: A painting by Van Gogh.

- Generated Image: A portrait generated in the style of Van Gogh, with swirling brushstrokes and vibrant colors.

5.4 Workflow Example: Combining Vision and Text Processing

- Load Image: Use a “Load Image” node to load an input image.

- OllamaVision: Connect the image to an OllamaVision node to generate a description of the image.

- OllamaGenerate: Use the output from the OllamaVision node as a prompt for an OllamaGenerate node to generate additional text-based content.

An example of combining OllamaVision and OllamaGenerate in a workflow.
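Outside the graph editor, the same two-stage chain can be sketched as two requests where the first reply feeds the second prompt. This is an illustration of the data flow, not the nodes' implementation. `send` is a hypothetical callable that posts a body to Ollama's generate endpoint and returns the parsed JSON; here a fake one is used so the example runs without a server, and the model names are assumptions.

```python
import base64

def describe_then_expand(image_bytes, send, vision_model="llava", text_model="llama3"):
    """Describe an image, then feed the description to a second, text-only request.

    `send` is any callable that takes a request body (dict) and returns the
    parsed JSON reply (dict), for example a thin wrapper around an HTTP POST
    to /api/generate.
    """
    description = send({
        "model": vision_model,
        "prompt": "Describe this image in two sentences.",
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    })["response"]
    caption = send({
        "model": text_model,
        "prompt": f"Write a short caption based on this description: {description}",
        "stream": False,
    })["response"]
    return description, caption

def fake_send(body):
    """Offline stand-in for the server: reports which model was asked."""
    return {"response": f"reply from {body['model']}"}

print(describe_then_expand(b"not a real image", fake_send))
# ('reply from llava', 'reply from llama3')
```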

6. Optimizing Image Generation with LLMs

To get the most out of ComfyUI and Ollama, it’s important to optimize your workflows and parameters. Here are some tips for optimizing image generation with LLMs:

6.1 Fine-Tuning LLM Parameters

Experiment with different LLM parameters, such as temperature, top_p, and frequency_penalty, to find the settings that produce the best results for your specific use case.

- Temperature: Controls the randomness of the output. Lower values result in more predictable output, while higher values result in more creative output.

- Top_p: Controls the diversity of the output by limiting sampling to the most likely tokens whose combined probability reaches the top_p value. Lower values result in more focused output, while higher values result in more diverse output.

- Frequency_Penalty: Penalizes the model for repeating words or phrases, leading to more original output.
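To see what temperature and top_p do to a probability distribution, here is a small textbook-style illustration in plain Python. It is not Ollama's internal sampler; the logits are made-up scores for four candidate tokens, and both function names are chosen for this example.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens the peak."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]

def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for index in order:
        kept.append(index)
        cumulative += probs[index]
        if cumulative >= top_p:
            break
    return sorted(kept)

logits = [2.0, 1.0, 0.5, 0.1]  # made-up scores for four candidate tokens
for temperature in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 2) for p in probs], top_p_filter(probs, 0.9))
```

Running it shows the top token taking a larger share as temperature drops, and the set of tokens that survive the top_p cut shrinking with it.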

6.2 Using Contextual Information

Provide the LLM with as much contextual information as possible to improve the quality of the generated content. This can include image descriptions, keywords, and style preferences.

6.3 Iterative Refinement

Use an iterative approach to refine your prompts and parameters. Generate an initial image, analyze the results, and adjust your settings accordingly.

6.4 Leveraging Community Resources

Join online communities and forums to share your experiences and learn from other users. Many experienced users are willing to share their workflows and tips.

7. Troubleshooting Common Issues

When integrating ComfyUI and Ollama, you may encounter some common issues. Here are some troubleshooting tips:

7.1 Connection Issues

- Verify Ollama Server: Ensure that the Ollama server is running and accessible from your ComfyUI environment.

- Check Firewall Settings: Ensure that your firewall is not blocking communication between ComfyUI and the Ollama server.

- Review Configuration: Double-check the connection details in the OllamaConnectivityV2 node to ensure they are correct.
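A quick way to rule out the first two causes is to request the server's root URL from the same machine that runs ComfyUI. The sketch below assumes Ollama's default address, `http://localhost:11434`; `server_is_reachable` is a hypothetical helper name. Substitute whatever URL you entered in the connectivity node.

```python
import urllib.error
import urllib.request

def server_is_reachable(base_url="http://localhost:11434", timeout=3):
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Covers refused connections, DNS failures, timeouts, and HTTP errors.
        return False

print("Ollama reachable:", server_is_reachable())
```

If this prints `False` while `ollama --version` works in a terminal, the server process is probably not running or is listening on a different address.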

7.2 Model Compatibility

- Model Requirements: Ensure that the LLM you are using is compatible with the OllamaVision node, which needs a model with vision capabilities, such as llava. Some models may require specific input formats or parameters.

- Update Models: Keep your models up-to-date to take advantage of the latest features and improvements.
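To confirm that a model is actually installed before wiring it into a node, you can inspect the reply of Ollama's `GET /api/tags` endpoint, which lists local models. The sketch below only parses such a reply. The sample data is a trimmed, made-up example of the reply shape, and both helper names are illustrative.

```python
def installed_models(tags_reply):
    """Extract model names from the JSON returned by GET /api/tags."""
    return [entry["name"] for entry in tags_reply.get("models", [])]

def has_model(tags_reply, wanted):
    """True if `wanted` matches an installed model, with or without a tag."""
    for name in installed_models(tags_reply):
        if name == wanted or name.split(":")[0] == wanted:
            return True
    return False

# Trimmed sample of the reply shape; a real reply carries more fields per model.
sample = {"models": [{"name": "llava:latest"}, {"name": "llama3:8b"}]}
print(has_model(sample, "llava"), has_model(sample, "mistral"))  # True False
```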

7.3 Performance Issues

- Resource Allocation: Ensure that your system has sufficient resources (CPU, GPU, memory) to run both ComfyUI and Ollama efficiently.

- Optimize Workflows: Simplify your workflows and reduce the number of nodes to improve performance.

- Use GPU Acceleration: If possible, use GPU acceleration to speed up image generation and LLM inference.

7.4 Error Messages

- Read Error Messages Carefully: Pay close attention to any error messages that are displayed in ComfyUI or the Ollama server. These messages can provide valuable clues about the cause of the problem.

- Consult Documentation: Refer to the documentation for ComfyUI and Ollama for information about error codes and troubleshooting tips.

8. Advanced Techniques for Image Generation

Once you have mastered the basics of integrating ComfyUI and Ollama, you can explore some advanced techniques for image generation:

8.1 Conditional Generation

Use LLMs to generate images based on specific conditions or constraints. For example, you could generate images of cats with specific breeds, colors, or poses.
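One simple way to make such conditions explicit, before passing them to an LLM for expansion or straight to the image model, is to enumerate every combination as its own prompt. This is a plain-Python sketch; `conditional_prompts` is a hypothetical helper, and the subject and condition values are examples only.

```python
from itertools import product

def conditional_prompts(subject, **conditions):
    """Yield one prompt per combination of the given condition values."""
    names = list(conditions)
    for combo in product(*(conditions[name] for name in names)):
        details = ", ".join(f"{name}: {value}" for name, value in zip(names, combo))
        yield f"{subject}, {details}"

prompts = list(conditional_prompts(
    "a photo of a cat",
    breed=["Siamese", "Maine Coon"],
    pose=["sitting", "stretching"],
))
print(len(prompts))  # 4
print(prompts[0])    # a photo of a cat, breed: Siamese, pose: sitting
```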

8.2 Semantic Editing

Use LLMs to semantically edit generated images. For example, you could change the color of a cat’s fur or add a hat to its head.

8.3 Interactive Generation

Create interactive image generation workflows that allow users to provide real-time feedback and adjust the generated images.

8.4 Multi-Modal Generation

Combine text, images, and other modalities to create more complex and engaging content. For example, you could generate a short video based on a text prompt and a reference image.

9. The Future of AI-Enhanced Image Generation

The field of AI-enhanced image generation is rapidly evolving. As LLMs become more powerful and versatile, they will play an increasingly important role in the image generation process.

9.1 Emerging Trends

- Improved Realism: LLMs will continue to improve the realism and detail of generated images.

- Greater Control: Users will have more control over the image generation process, allowing them to create images that precisely match their vision.

- Automated Workflows: AI will automate many of the tedious and time-consuming tasks involved in image generation, freeing up users to focus on creative tasks.

- Integration with Other Tools: AI-enhanced image generation will be integrated with other creative tools, such as image editors and 3D modeling software.

9.2 Ethical Considerations

As AI-enhanced image generation becomes more widespread, it’s important to consider the ethical implications. This includes issues such as copyright, bias, and the potential for misuse.

9.3 Continuous Learning and Adaptation

The key to staying ahead in the rapidly evolving field of AI-enhanced image generation is continuous learning and adaptation. By staying up-to-date with the latest research and techniques, you can unlock new possibilities and create truly innovative content.

10. Resources and Further Learning

To deepen your knowledge and skills in AI-enhanced image generation, here are some valuable resources and learning opportunities:

- LEARNS.EDU.VN: Explore comprehensive guides, tutorials, and courses on ComfyUI, Ollama, and other AI-related topics.

- Online Courses: Platforms like Coursera, Udemy, and edX offer courses on AI, machine learning, and image generation.

- Research Papers: Stay up-to-date with the latest research by reading papers published in journals and conferences such as NeurIPS, ICML, and CVPR.

- Online Communities: Join online communities and forums to connect with other users, share your experiences, and ask questions.

By leveraging these resources and continuing to learn, you can master the art of AI-enhanced image generation and create stunning visuals that push the boundaries of creativity.

FAQ: ComfyUI and Ollama for Image Generation

1. What is ComfyUI?

ComfyUI is a node-based visual programming interface for creating Stable Diffusion workflows, offering flexibility and control over image generation.

2. What is Ollama?

Ollama is a tool that simplifies the deployment and management of large language models (LLMs), making it easier to integrate them into image generation workflows.

3. How do I install Ollama?

Download the appropriate version for your operating system from the Ollama website and follow the installation instructions.

4. How do I install ComfyUI?

Clone the ComfyUI repository from GitHub, navigate to the directory, install dependencies, and start the application.

5. What are custom nodes in ComfyUI?

Custom nodes are extensions that allow ComfyUI to interact with external tools like Ollama, enabling advanced functionalities.

6. How do I install custom nodes for Ollama in ComfyUI?

Use the ComfyUI Manager to search for and install the Ollama custom nodes, or manually clone the repository into the custom_nodes folder and install the required Python packages.

7. What is the OllamaGenerateV2 node?

The OllamaGenerateV2 node is designed to set system prompts and prompts, enabling local context saving within the node.

8. How can LLMs enhance image generation?

LLMs can be used for prompt engineering, image description, style transfer, and semantic editing, improving the quality and relevance of generated images.

9. What are some common issues when integrating ComfyUI and Ollama?

Common issues include connection problems, model compatibility, and performance issues. Troubleshooting involves verifying server connections, checking firewall settings, and optimizing workflows.

10. Where can I find more resources for learning about ComfyUI and Ollama?

Explore comprehensive guides, tutorials, and courses on LEARNS.EDU.VN, online learning platforms, research papers, and online communities.
