Abstract

Spike-timing-dependent plasticity (STDP) is widely regarded as the neurophysiological basis of Hebbian learning. It is sensitive to both contingency and contiguity in the timing of pre- and postsynaptic neuron activity. In this article, we examine how applying this Hebbian learning principle within a network of interconnected neurons, particularly under direct or indirect re-afference (such as seeing or hearing one’s own actions), predicts the emergence of mirror neurons with predictive properties. Within this framework, we analyze mirror neurons as a dynamic system that actively makes inferences about the actions of others, thereby facilitating joint actions despite inherent sensorimotor delays. We explore how this system projects the self onto others, with egocentric biases that contribute to mind-reading. Finally, we argue that Hebbian learning extends beyond the motor system and predicts the existence of mirror-like neurons for sensations and emotions, and we review existing evidence for such vicarious activations outside the motor cortex.

Keywords: mirror neurons, Hebbian learning, active inference, vicarious activations, mind-reading, projection

1. Introduction

The groundbreaking discovery of mirror neurons offered compelling neuroscientific support for the phenomenon of vicarious activations: activations of our own action systems that are also triggered when we perceive actions performed by others, whether through sight [1–4] or sound [3,4]. Two decades after their initial identification, the precise function of mirror neurons remains a subject of vigorous debate [5–9]. Our focus here is not on resolving the functional questions surrounding mirror neurons. Instead, we aim to investigate their developmental origins, exploring the mechanisms that might lead to their emergence.

Notably, mirror neurons in monkeys respond not only to visually observed actions but also to the sounds of actions, such as the crinkling of a plastic bag [3,4]. Similarly, human premotor cortices respond to action-related sounds like the hiss of a Coca-Cola can opening [10]. Such specific selectivity is unlikely to be genetically predetermined. Therefore, we adopt a mechanistic perspective to explore how these mirror neurons could develop over time.

We begin by defining the contemporary neuroscience understanding of Hebbian learning, grounded in spike-timing-dependent plasticity (STDP). We then investigate how this nuanced understanding of Hebbian learning can illuminate the emergence of mirror neurons. Furthermore, we propose how mirror neurons evolve into a system for active predictive mind-reading. Finally, we extend our argument to suggest that vicarious activations are not limited to the motor system, but also occur in somatosensory and emotional cortices. We propose that the same Hebbian learning rules could account for the development of mirror-like neurons in these broader brain regions.

2. What is Meant by Hebbian Learning

(a). Historically

The concept of Hebbian learning originates from the seminal work of Donald Hebb [11], who proposed a neurophysiological mechanism for learning and memory based on a fundamental principle. Hebb articulated this principle as follows: “When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A’s efficiency, as one of the cells firing B, is increased” (p. 62).

A careful examination of Hebb’s principle underscores his appreciation for the crucial roles of causality and consistency in learning. He did not simply state that simultaneous firing of two neurons strengthens their connection. Instead, he emphasized that one neuron must repeatedly (consistency) take part in firing (causality) the other to enhance the connection’s efficiency. It was not Hebb but Carla Shatz who offered the memorable, rhyming paraphrase of this principle: “what fires together, wires together” [12, p. 64].

While this mnemonic is catchy, it risks obscuring the critical element of causation present in Hebb’s original formulation. If two neurons truly “fire together” in perfect simultaneity, neither can be said to cause the firing of the other. Causality, in this context, is indicated by temporal precedence [13], where one neuron’s firing “took part in firing the other.” Therefore, Shatz’s paraphrase should be interpreted with caution, as it simplifies a more nuanced concept.

(b). Neurophysiological Understanding

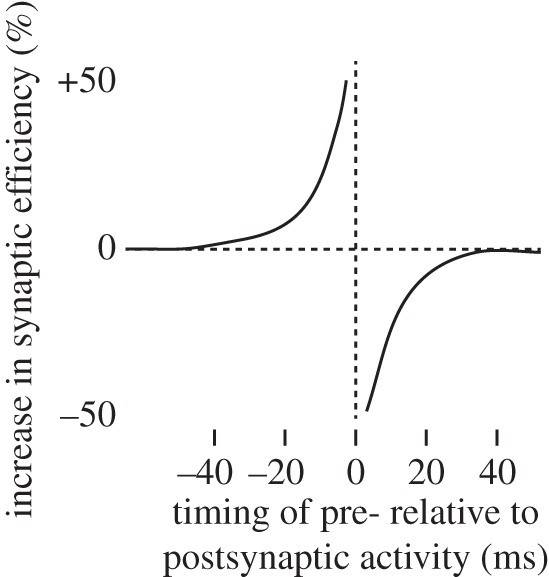

The 1990s witnessed significant advancements in neurophysiology that provided the foundation for our modern neurophysiological understanding of Hebbian learning, primarily through the discovery and characterization of spike-timing-dependent plasticity (STDP) [14–16]. Experiments involving stimulation of pairs of connected neurons with varying stimulus onset asynchronies revealed an asymmetric STDP window (see figure 1).

Figure 1. Temporal asymmetry of spike-timing-dependent plasticity.

In an excitatory synapse connecting to an excitatory neuron, if the presynaptic neuron is stimulated up to 40 ms before the postsynaptic neuron, the synapse undergoes potentiation, strengthening its connection. Conversely, if the presynaptic neuron is stimulated after the postsynaptic neuron, the synapse is depressed, weakening the connection. If neurons were simply firing at the same time, inherent temporal variability would lead to the presynaptic neuron firing sometimes before and sometimes after the postsynaptic neuron. In such a scenario, potentiation and depression effects would likely cancel each other out over time, resulting in minimal net STDP. As Hebb insightfully predicted, causality, reflected in temporal precedence, is thus a crucial determinant of synaptic plasticity.
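The cancellation argument above can be illustrated with a minimal sketch. It assumes an exponentially decaying STDP window with equal potentiation and depression amplitudes, a 15 ms decay constant, and Gaussian timing jitter; none of these values come from the experiments cited here, and only the 40 ms potentiation limit is taken from the text. The function names `stdp_dw` and `mean_dw` are hypothetical.

```python
import math
import random

# Illustrative parameters; all are assumptions except WINDOW_MS.
A_PLUS = 1.0      # peak potentiation (arbitrary units)
A_MINUS = 1.0     # peak depression (arbitrary units)
TAU_MS = 15.0     # assumed decay constant of the STDP window
WINDOW_MS = 40.0  # potentiation window mentioned in the text

def stdp_dw(dt_ms: float) -> float:
    """Weight change for a spike pair, with dt_ms = t_post - t_pre."""
    if 0 < dt_ms <= WINDOW_MS:
        # Presynaptic spike precedes postsynaptic spike: potentiation.
        return A_PLUS * math.exp(-dt_ms / TAU_MS)
    if -WINDOW_MS <= dt_ms < 0:
        # Presynaptic spike follows postsynaptic spike: depression.
        # (A symmetric 40 ms depression window is an assumption.)
        return -A_MINUS * math.exp(dt_ms / TAU_MS)
    return 0.0

def mean_dw(mean_lag_ms: float, jitter_ms: float, n: int = 10000, seed: int = 0) -> float:
    """Average weight change over n pairings with Gaussian timing jitter."""
    rng = random.Random(seed)
    total = sum(stdp_dw(rng.gauss(mean_lag_ms, jitter_ms)) for _ in range(n))
    return total / n

causal = mean_dw(mean_lag_ms=10.0, jitter_ms=3.0)      # pre reliably leads post
synchronous = mean_dw(mean_lag_ms=0.0, jitter_ms=3.0)  # "firing together"
print(f"pre leads post: {causal:+.3f}   simultaneous: {synchronous:+.3f}")
```

Under these assumptions, consistent temporal precedence yields clear net potentiation, whereas jittered simultaneous firing yields a net change close to zero, because potentiating and depressing pairings occur about equally often.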

Further experiments have refined our understanding of the consistency required for synaptic plasticity to occur. Bauer and colleagues [17] employed a standard STDP protocol, stimulating the presynaptic neuron 5–10 ms before the postsynaptic neuron. Applying 10 such paired stimulations resulted in significant synapse potentiation (see figure 2a). However, when they repeated the protocol, but interspersed unpaired stimulations where only the postsynaptic neuron was stimulated, the potentiation was negated, despite the same number of paired trials being applied (figure 2b). This highlights the critical role of contingency for STDP. In figure 2a, presynaptic activity is predictive of postsynaptic activity (p(post|pre) = 1, p(post|no pre) = 0), while in figure 2b, presynaptic firing is not informative (p(post|pre) = p(post|no pre) = 0.5). This elaborates on Hebb’s intuitive notion of “repeatedly and persistently takes part” and resonates with principles of associative learning [18].
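One common way to summarize such a contingency in the associative learning literature is the ΔP statistic. Using it here is an illustrative choice on our part, not an analysis reported by Bauer et al. Applied to the probabilities stated above:

```latex
\Delta P = p(\text{post} \mid \text{pre}) - p(\text{post} \mid \text{no pre})

\text{figure 2a: } \Delta P = 1 - 0 = 1
\qquad
\text{figure 2b: } \Delta P = 0.5 - 0.5 = 0
```

A ΔP of 1 means presynaptic activity is fully informative about postsynaptic activity, while a ΔP of 0 means it carries no information, which matches the presence and absence of potentiation in the two conditions.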

Figure 2. (a) Applying 10 paired pre- and postsynaptic stimulations leads to significant potentiation of the synapse. (b) Intermixing 10 unpaired stimulations (postsynaptic only) cancels the potentiation. (c) Applying 10 unpaired stimulations after the 10 paired stimulations also cancels the potentiation. (d) Delaying the unpaired stimulations by 15 or 50 min preserves the potentiation of the 10 paired trials. The presynaptic stimulation is shown as a curve to represent the excitatory postsynaptic potential that arrives in the postsynaptic neuron, the postsynaptic stimulation as a vertical bar to represent an action potential. Adapted from Bauer et al. [17]; epsp, excitatory postsynaptic potential.

Bauer et al. [17] then shifted the 10 unpaired stimulations to occur after the 10 paired stimulations and still observed no potentiation (figure 2c). However, when the unpaired events were delivered 15 or 50 minutes after the paired events, the STDP cancellation effect was no longer observed (figure 2d). This indicates that unpaired stimulations are integrated with paired stimulations if they fall within the approximately 7-minute window it took to deliver 10 paired and 10 unpaired trials, but not if they occur much later.

Thus, STDP is sensitive to both contiguity and contingency. It utilizes a narrow time window of about 40 ms to determine if a presynaptic neuron contributed to causing a postsynaptic action potential (contiguity). It also employs a much broader time window of roughly 10 minutes to assess whether presynaptic activity is informative about postsynaptic activity (contingency). It remains an open question whether these specific details of contingency integration are universal across all neurons or specific to regions like the lateral nucleus of the amygdala, where these experiments were conducted.

In contemporary neurophysiology, “Hebbian learning” has become synonymous with the rapidly evolving understanding of STDP [15,16]. This understanding, inspired by Hebb’s foundational work, emphasizes STDP’s sensitivity to precise temporal precedence (causality) and contingency over timescales of minutes. The continued use of the term “Hebbian learning” to refer to STDP honors Hebb’s prescient insights into how much of learning could be explained by this form of spike-timing-dependent plasticity. In this article, we adopt this contemporary usage of Hebbian learning. Computational neuroscience employs a similarly refined understanding of Hebbian learning, which likewise incorporates contingency (http://lcn.epfl.ch/~gerstner/SPNM/node70.html).

(c). Alternative Definitions

In contrast to the neurophysiological and computational understanding, some psychological literature still equates Hebbian learning with the simplified mnemonic “what fires together wires together.” We examine, as an example of this alternative definition, the work of Cooper et al. [19], particularly because their paper argues against Hebbian learning in the context of the mirror neuron system. Understanding the source of this divergence in definitions is crucial.

Cooper et al. write: “Hebb famously said that ‘Cells that fire together, wire together’ and, more formally, ‘any two cells or systems of cells that are repeatedly active at the same time will tend to become ‘associated,’ so that activity in one facilitates activity in the other.’ Thus, Keysers and Perrett’s Hebbian perspective implies that contiguity is sufficient for MNS development; that it does not also depend on contingency.”

Several misunderstandings are evident in this statement. First, Hebb himself never coined the phrase “Cells that fire together, wire together.” This mnemonic was introduced by Carla Shatz [12] in an article for Scientific American aimed at a general audience. Second, the quoted “formal postulate” attributed to Hebb (“any two cells …”) is not actually his postulate. Hebb used this sentence to summarize existing ideas, stating, “The general idea is an old one, that any two cells …” ([11], p. 70).

Both the misattributed mnemonic and Hebb’s summary of earlier concepts obscure the causal element present in Hebb’s actual postulate: “When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A’s efficiency, as one of the cells firing B, is increased” ([11], p. 62).

As a consequence of these misinterpretations, Cooper et al.’s notion of Hebbian learning diverges significantly from ours. Their definition essentially reduces to contiguity, while our understanding encompasses temporal precedence, causality, and contingency. While Hebb’s own preference between these definitions remains unknown, it is essential to acknowledge this divergence. Failure to do so can lead to misapplications, such as, in our view, what Cooper et al. have done: applying their contiguity-based definition to our theory of mirror neuron emergence, which is based on a different, more nuanced notion of Hebbian learning. This definitional mismatch can lead to misunderstandings of our theory and, in this case, unwarranted criticisms.

3. Hebbian Learning and Mirror Neurons: A Macro-Temporal Perspective

Mirror neurons have been identified in the monkey’s ventral premotor cortex (PM; area F5, [2–4,20]) and inferior posterior parietal cortex (area PF/PFG, [21]). These two regions are reciprocally interconnected [22]: PF/PFG projects to PM, and PM projects back to PF/PFG. Furthermore, PF/PFG neurons also have reciprocal connections with neurons in the superior temporal sulcus (STS [22,23]), a region known to respond to visual perception of body movements, faces, and the sounds of actions [24]. Mirror neurons exist in other brain regions as well [25–27], but to illustrate the principle of how a Hebbian learning account of mirror neuron emergence could function, a simplified system involving only two brain regions—STS and PM—with reciprocal connections, is sufficient.

In this section, we adopt a relatively coarse temporal resolution of approximately 1 second for an initial approximation of the Hebbian account of mirror neuron development. At this level of description, Hebbian learning makes predictions at the neural level that are analogous to those made by associative sequence learning—a cognitive model initially developed to describe the emergence of imitation [28]—at the functional level. Key publications outlining Hebbian learning at this temporal resolution include those by Keysers & Perrett and Del Giudice et al. [24,29], while associative sequence learning is described in works by Heyes, Brass & Heyes, and Cook et al. [28,30,31]. In §4, we will shift to a finer time-scale to explore how mirror neurons could organize into a dynamic system that generates active inferences.

(a). Re-afference as a Training Signal

Our knowledge of the selectivity of STS and PM neurons, and of their interconnections, in newborn human and monkey infants is limited. Therefore, we begin by assuming relatively random bidirectional connections between STS neurons (responding to visual and auditory aspects of different actions) and PM neurons (coding for the execution of similar actions). These connections are mediated by the posterior parietal lobe (particularly PF/PFG), but for simplicity, we do not explicitly address this intermediate stage here.

When an individual performs a novel hand action, they simultaneously see and hear themselves performing this action. This sensory input resulting from one’s own action is termed “re-afference.” The innate tendency of typically developing infants to gaze at their own hands ensures that re-afference occurs frequently as they explore new movements [32]. Consequently, activity in PM neurons initiating a specific action and activity in STS neurons responding to the visual and auditory feedback of that action would, to a first approximation (but see §4), consistently and repeatedly overlap in time. For example, an STS neuron responsive to grasping will exhibit firing patterns that temporally overlap with the activity of PM grasping neurons when the individual observes themselves grasping. Conversely, STS neurons for grasping will not systematically overlap in time with PM neurons for throwing, and vice versa.

Thus, re-afference creates a scenario where the firing of STS and PM neurons representing the same action will overlap more systematically than those representing different actions. This establishes a degree of contiguity (firing at roughly the same time) and contingency (e.g., p(sight of grasping|grasping execution) > p(sight of throwing|grasping execution)). At this macro-temporal scale, the synapses connecting STS and PM representations of the same action should be potentiated based on the principles of Hebbian learning outlined earlier, while connections representing different actions should be weakened.
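To make this macro-temporal argument concrete, the following toy simulation is a sketch under strong assumptions. It uses a rate-based covariance rule as a coarse stand-in for STDP, a hypothetical repertoire of three actions, and made-up probabilities for how often an infant watches its own action (`P_LOOK`) or sees an unrelated one (`P_INCIDENTAL`). It is not a model fitted to any data reviewed here.

```python
import random

ACTIONS = ["grasp", "throw", "wave"]  # hypothetical action repertoire
P_LOOK = 0.7         # assumed chance the infant watches its own action
P_INCIDENTAL = 0.2   # assumed chance of seeing some unrelated action
LEARNING_RATE = 0.01

def train(n_steps: int = 5000, seed: int = 1):
    """Return w[s][m], the weight from STS neuron s to PM neuron m."""
    rng = random.Random(seed)
    n = len(ACTIONS)
    w = [[0.0] * n for _ in range(n)]
    sts_mean = [0.0] * n
    pm_mean = [0.0] * n
    for step in range(1, n_steps + 1):
        executed = rng.randrange(n)
        # PM activity: only the neuron for the executed action is active.
        pm = [1.0 if m == executed else 0.0 for m in range(n)]
        # STS activity: re-afference plus occasional incidental input.
        sts = [0.0] * n
        if rng.random() < P_LOOK:
            sts[executed] = 1.0
        if rng.random() < P_INCIDENTAL:
            sts[rng.randrange(n)] = 1.0
        # Running averages of activity, used as baselines.
        for i in range(n):
            sts_mean[i] += (sts[i] - sts_mean[i]) / step
            pm_mean[i] += (pm[i] - pm_mean[i]) / step
        # Covariance rule: strengthen when both neurons are above baseline
        # together, weaken when one is above and the other below.
        for s in range(n):
            for m in range(n):
                w[s][m] += LEARNING_RATE * (sts[s] - sts_mean[s]) * (pm[m] - pm_mean[m])
    return w

weights = train()
for s, row in enumerate(weights):
    print(ACTIONS[s], [round(x, 2) for x in row])
```

With these assumed statistics, the diagonal (matching) weights grow positive and the off-diagonal (non-matching) weights become negative. The weights are unbounded in this sketch; a more realistic rule would include saturation or normalization.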

(b). Re-afference Should Favor Matching Connections

We propose that repeated re-afference and the resulting Hebbian learning will lead to a predominance of matching STS-PM connections (i.e., connections linking representations of similar actions). This hypothesis rests on the largely untested assumption that, throughout an individual’s life, the statistical relationship between their actions and sensory input is such that Hebbian learning criteria primarily foster matching synaptic connections. In cases of direct auditory or visual re-afference, this is straightforward, as the sounds and sights of our own actions inherently correspond to the actions themselves.

However, certain actions are perceptually opaque, such as facial expressions. One might argue that mirror neurons for facial expressions are innate, citing evidence that newborns are more likely to produce certain facial expressions when observing others do so, seemingly before learning could account for this phenomenon [33]. The extent of facial expression imitation in newborns is still debated. While robust evidence supports imitation of tongue protrusion [34,35], evidence for other facial expressions is less conclusive [31,36,37].

We [6,24,29] and others [31] have suggested that indirect re-afference could provide the necessary contiguous and contingent signals to establish matching connections between STS neurons (responding to observed facial expressions) and motor programs for producing them. Parents often imitate their babies’ facial expressions, providing numerous instances of imitation during face-to-face interactions [37]. A baby would frequently experience indirect re-afference by seeing and hearing their facial expressions being mirrored, fostering matching Hebbian associations.

In this view, our genetic makeup might facilitate the development of mirror neurons for facial expressions, not through pre-wired STS-PM connections at birth, but by predisposing babies to attend to their parents’ faces and parents to imitate their babies’ facial expressions [29]. Ultimately, the development of mirror neurons for facial expressions still relies on learning during development, but this learning is channeled by these inherent behavioral predispositions. The impact of being imitated on Hebbian learning would likely be less immediate than direct re-afference due to the greater variability in timing and visual properties of parental imitation. In modern society, physical mirrors could also contribute to re-afference for facial expressions, potentially enhancing Hebbian learning, although their role in typical development is uncertain.

Re-afference is not limited to vision. Babies also hear themselves cry and laugh, allowing for the robust development of auditory mirror neurons for these emotional sounds, even in the absence of visual parental imitation. During babbling, infants create contingencies between premotor neuron firing (triggering pseudo-speech) and temporal lobe neurons (responding to such speech). Once synaptic connections are shaped by babbling, hearing a parent speak could activate motor programs to replicate words [6]. This process is further supported by parents’ tendency to modify their speech tone to resemble that of the baby (motherese [38]). Here, the cross-cultural prevalence of motherese and infants’ inclination to babble would canalize the emergence of appropriate articulatory mirror neurons.

Conversely, many stimuli that do not align with our motor programs occur incidentally while we perform actions (e.g., a baby grasping in a daycare with other babies crawling and playing). However, these sensory inputs lack the same contingency or tight temporal precedence relative to specific motor program activations, and therefore, should average out like noise. Certain special cases could create close temporal precedence and non-matching contingencies. For instance, when someone gives an object to a baby, the sight of the placing hand will just precede the baby’s grasping action, potentially leading to some association between STS neurons for placing and PM neurons for grasping. Indeed, “logically related mirror neurons” appear to exist [2], and laboratory experiments suggest that repeated exposure to non-matching contingencies can temporarily link motor programs to non-matching action observations (see section 4 of [39] for a review). Additionally, an object graspable in a particular way will always be systematically present when a baby grasps it in that way, creating predictable Hebbian connections between shape neurons in the visual system and PM neurons coding the affordances of the object. Such connections are observed in “canonical neurons” [40].

Unfortunately, empirical research directly testing our assumption that statistical relationships (contingency and occurrence within the Hebbian learning temporal window) between our actions and sensory experiences (auditory and visual) predominantly favor matching relationships in the mirror neuron system is limited. A few studies analyzing videos of babies and parents have shown that parents frequently imitate infants’ vocalizations and facial expressions, and infants spend considerable time observing their own hands and the facial expressions (often imitative) of caregivers (see [32] and section 5 of [37] for reviews). A powerful approach to test our hypothesis would involve recording infants’ sensory input and their own actions over extended periods to analyze the statistical relationship between motor output and auditory-visual input. Currently, the manual effort required to analyze days of such recordings makes this project impractical. However, as head-mounted devices (e.g., Google Glass) and 3D motion tracking (e.g., Microsoft Kinect) become more accessible, systematic quantification of sensorimotor contingencies experienced by infants may soon be feasible. Until then, the remainder of our argument is based on the assumption that sensorimotor contingencies would favor a significant proportion of matching Hebbian connections between STS and PM.

(c). From Re-afference to Mirror Properties

If matching connections have been established through Hebbian learning, and an individual hears someone performing a similar action, the sound of the action, resembling re-afferent sounds associated with the listener’s past actions, would activate STS neurons. These activated STS neurons would, via potentiated synapses, trigger PM neurons responsible for executing actions that generate similar sounds. In this way, PM neurons become mirror neurons. The activity of PM neurons while listening to others’ actions essentially represents a reactivation of past procedural memories—memories of motor states that co-occurred with specific sensory events—but activated by an external social stimulus. This positions mirror neurons within a broader category of reactivation phenomena, encompassing memory and imagination.

The case of vision is more complex. We perceive our own actions from an egocentric perspective, while others’ actions are viewed from an allocentric perspective. How then can observing others’ actions trigger STS neurons that typically respond to viewing our own actions? First, some STS neurons are responsive to actions viewed from multiple perspectives [24]. The mechanisms underlying this property are not fully understood, but in monkeys, viewpoint invariance can emerge after experiencing different perspectives of the same 3D object [41]. Therefore, exposure to diverse perspectives of others’ actions may equip STS neurons with the ability to respond to actions across viewpoints and agents. Second, neurons might represent viewpoint-invariant action properties (e.g., rhythmicity, temporal frequency, etc.) that can be matched across actions with similar characteristics [42], potentially obviating the need for mental rotation. Third, instances of imitation or physical mirrors could provide humans with the contingencies necessary for Hebbian learning to operate between the third-person perspective of observing others’ actions and performing their own actions. Finally, for actions with characteristic sounds, individuals might initially learn contingencies between seeing and hearing others perform these actions (e.g., hearing speech while seeing lip movements). This could lead to the development of multimodal neurons in the STS [43]. The sight of an action could then trigger matching motor actions by activating the same audio-visual speech neurons that have been linked to the viewer’s motor program during auditory re-afference. The specific mechanisms, or combination of mechanisms, responsible for the emergence of visuo-motor mirror neurons capable of handling perspective differences remain to be investigated empirically.

(d). Alternative Accounts

The majority of research on the mirror neuron system does not directly address the developmental origins of mirror neurons. Instead, it often focuses on their potential role in social cognition and survival advantages [2,6,9,25,44–47]. Cecilia Heyes has interpreted such functional claims as implying a “genetic account” of mirror neurons, suggesting that “The standard view of MNs, which we will call the ‘genetic account’, alloys a claim about the origin of MNs with a claim about their function. It suggests that the mirrorness of MNs is due primarily to heritable genetic factors, and that the genetic predisposition to develop MNs evolved because MNs facilitate action understanding” [31].

We believe this to be an inaccurate interpretation of neuroscientific work on mirror neurons. For neuroscientists, proposing that mirror neurons contribute to social cognition and enhance fitness does not automatically imply that humans and monkeys are hard-wired with mirror neurons and that learning plays only a minimal role. Neuroscientists argue that disrupting mirror neuron function would likely impair social cognition, a claim supported by a growing body of evidence [48]. Such a claim is compatible with selective pressure on the genome to facilitate mirror neuron development, but it does not necessitate strong genetic encoding or pre-wiring at birth. As discussed earlier, the genome could canalize Hebbian learning of mirror neurons, rather than directly predisposing individuals to possess fully formed mirror neurons or dictating specific connections [29]. In short, the “standard view” may be criticized for neglecting the ontogeny of mirror neurons, but it does not necessarily claim that mirror neurons are entirely genetically encoded and impervious to learning.

Theories that do address the ontogenesis of mirror neurons generally emphasize the significant role of experience. This is true for our Hebbian learning model, associative sequence learning [28,30,31], the model by Casile et al. [34], the epigenetic model by Ferrari et al. [49], the Bayesian model by Kilner et al. [50], and most computational models of the mirror neuron system [51]. All these models also acknowledge a crucial role for genetic predisposition, at least in establishing synaptic connections between sensory and motor regions and implementing basic learning rules within the system.

The primary differences between these models likely lie in the level of description they prioritize. Our Hebbian learning model adopts a bottom-up neuroscientific approach, starting with fundamental components like spike-timing-dependent plasticity at synapses and anatomical connection details (see also §4). It investigates whether mirror neurons can emerge bottom-up from the interactions of these basic elements. Associative sequence learning, in contrast, is a cognitive model, not a neural one. It emphasizes system-level variables shown to be critical for associative learning in behavioral experiments, but it does not delve into the biological implementation of learning at the synaptic level [28,31]. Casile et al. [34] highlight the possibility that mirror neurons for different actions might develop through different mechanisms, suggesting that genetic pre-wiring might be more significant for facial expressions, while Hebbian learning might be more important for hand actions. The epigenetic model adds that experience can influence development not only through Hebbian learning but also by epigenetically modifying gene expression [49]. Computational models often focus on the overall system architecture in terms of information processing, frequently using error back-propagation algorithms without specific biological hypotheses about the implementation of these learning rules [51]. Finally, Kilner et al.’s predictive coding account [50] describes mirror neurons at the systems level using Bayesian statistics. The biological mechanisms for computing these statistics at the synaptic level are beyond the scope of Kilner et al.’s theory.

Therefore, there appears to be a fundamental consensus on the importance of learning in mirror neuron ontogenesis. Portraying the field as divided into two opposing camps—one claiming genetic determinism and the other conducting experiments to refute it—seems to be a misrepresentation. Instead, existing theories seem to be parallel efforts to explore how experience shapes a complex phenomenon, starting from different levels of analysis: synaptic level, brain region interactions, and cognitive entity associations. The major challenge in the coming decades will be to integrate these “local” perspectives into a unified model encompassing all levels. In the meantime, debating the superiority of one approach over another seems unproductive. Further development of each model will illuminate the levels that each approach most directly addresses. In this spirit, we now shift to explore a finer temporal dimension of Hebbian learning to demonstrate how bottom-up synaptic predictions can align with top-down predictions proposed by Kilner et al. [50].

4. The Micro-Temporal Hebbian Perspective and Predictions

A key feature of our contemporary understanding of Hebbian learning is its exquisite sensitivity to the precise temporal relationships between pre- and postsynaptic activity. We now examine the core concept of our Hebbian learning account—direct or indirect re-afference—at this millisecond timescale, expanding upon the brief account presented in Keysers and Keysers et al. [6,52].

(a). Predictive Forward Connections

Consider the action of reaching for a cookie, grasping it, and bringing it to the mouth. In the external world, each subcomponent of the action and its sensory consequences coincide in time (figure 3a). However, approximately 100 ms are required for premotor activity to initiate complex overt actions like reaching and grasping [53]. Another 100 ms are needed for the visual and auditory feedback of that action to activate STS neurons [54]. This results in a roughly 200 ms shift in the spiking of STS neurons (representing the visual and auditory aspects of an action) relative to the PM neurons that initiated the action (figure 3b).

Figure 3. (a) In the real world, the execution of an action and the sight and sound of each phase of an action occur at the same time, and one might therefore predict that corresponding phases in the sensory and motor domain would become associated. (b) Instead, latencies shift the responses in the STS relative to the premotor (PM) neurons, and Hebbian learning at a fine temporal scale predicts associations between subsequent phases, i.e. predictions. (c) Inhibitory feedback from PM to STS is also subjected to Hebbian learning and generates prediction errors in the emerging dynamic system (d).

Therefore, the macro-temporal notion of STS neuron activity for an action overlapping in time with PM neuron activity initiating the action is an oversimplification. This has implications for Hebbian learning. STS responses to the sight of reaching no longer occur just before PM neuron activity for reaching, as required by the 40 ms STDP window (figure 1). Instead, the firing of STS neurons responding to a particular action phase (e.g., reaching) precedes PM neural activity initiating the next phase (e.g., grasping). Hebbian learning should primarily reinforce connections between STS reaching and PM grasping neurons. The dominant learning outcome should be connections with predictive properties. Some Hebbian learning might still occur within a given action phase because early spikes of STS reaching neurons occur just before late spikes of PM reaching neurons.

How predictive would this system be? Given a 200 ms delay between PM neuron activity and STS neuron firing (representing re-afference), the sight of an action component at time t would trigger activity (via Hebbian synapses) in PM neurons representing the action component that typically occurs at t + 200 ms. Sensorimotor delays directly determine the predictive horizon of sensorimotor connectivity. Thus, Hebbian learning, due to STDP’s temporal asymmetry (figure 1) and known sensorimotor system latencies (figure 3b), would naturally train a predictive system.
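The timing argument above can be illustrated with a toy calculation. The sketch below is not a model from the literature: it assumes regular spike trains, a hypothetical phase duration of 300 ms, and a simplified rule that only counts potentiating pairings (an STS spike leading a PM spike by at most 40 ms) while ignoring depression. Only the 200 ms delay and the 40 ms window come from the text; all names and remaining parameters are illustrative.

```python
# Toy illustration: which STS -> PM pairings fall inside the STDP window?
# PHASE_MS and SPIKE_STEP_MS are assumptions, not values from the article.
PHASES = ["reach", "grasp", "bring_to_mouth"]
PHASE_MS = 300       # assumed duration of each action phase
DELAY_MS = 200       # PM -> STS re-afference delay described in the text
WINDOW_MS = 40       # STDP window described in the text
SPIKE_STEP_MS = 10   # assumed regular inter-spike interval


def spike_train(onset_ms):
    """Regular spike times covering one action phase that starts at onset_ms."""
    return range(onset_ms, onset_ms + PHASE_MS, SPIKE_STEP_MS)


def potentiating_pairs(pre_spikes, post_spikes):
    """Count pairs in which the pre (STS) spike leads the post (PM) spike
    by more than 0 ms and at most WINDOW_MS (depression is ignored)."""
    return sum(
        1
        for t_pre in pre_spikes
        for t_post in post_spikes
        if 0 < t_post - t_pre <= WINDOW_MS
    )


# PM neurons fire during their own phase; STS neurons fire DELAY_MS later.
pm = {p: spike_train(i * PHASE_MS) for i, p in enumerate(PHASES)}
sts = {p: spike_train(i * PHASE_MS + DELAY_MS) for i, p in enumerate(PHASES)}

drive = {s: {m: potentiating_pairs(sts[s], pm[m]) for m in PHASES} for s in PHASES}

for s in PHASES:
    print(f"STS {s:>14} ->", drive[s])
```

Under these assumptions, the STS reaching neuron accumulates most of its potentiating pairings with the PM grasping neuron, fewer with the PM reaching neuron, and none with the neuron for the final phase, which mirrors the qualitative pattern described in the text.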

In the real world, action components are organized into diverse sequences, like letters in words. Predictive STS → PM connections would likely reflect the transition probability distribution of our actions. If, in our past motor history, action A was never followed by action x1 (p(x1|A) = 0), sometimes by x2 (p(x2|A) = 0.2), and often by x3 (p(x3|A) = 0.8), Hebbian learning would result in an STS neuron responding to A having near-zero connection weight with PM neurons initiating x1, a 0.2 weight with x2 neurons, and a 0.8 weight with x3 neurons. Consequently, PM neurons for these three actions would exhibit activity states of 0, 0.2, and 0.8 following the STS representation of action A. The PM activity pattern represents a probability distribution of upcoming actions, reflecting the observer’s past motor contingencies, and can serve as a prior (in a Bayesian sense) for the most likely next action.
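As a rough illustration of how such weights could mirror transition probabilities, the sketch below estimates p(next | A) by counting transitions in a hypothetical motor history. The history, the function name `transition_weights`, and the identification of normalized counts with synaptic weights are all simplifying assumptions made for illustration; real Hebbian weights would not be exact probabilities.

```python
from collections import Counter, defaultdict

# Hypothetical motor history: (action, action that followed it).
# A was followed by x3 four times, by x2 once, and never by x1.
history = [("A", "x3")] * 4 + [("A", "x2")] * 1


def transition_weights(pairs):
    """Normalized transition counts, used here as stand-ins for
    STS -> PM connection weights (an illustrative assumption)."""
    counts = defaultdict(Counter)
    for current, following in pairs:
        counts[current][following] += 1
    weights = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        weights[current] = {nxt: n / total for nxt, n in followers.items()}
    return weights


weights = transition_weights(history)
# PM activity pattern after the STS representation of A: a prior over next actions.
prior_after_A = {x: weights["A"].get(x, 0.0) for x in ("x1", "x2", "x3")}
print(prior_after_A)  # {'x1': 0.0, 'x2': 0.2, 'x3': 0.8}
```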

(b). Inhibitory Backward Connections and Prediction Errors

An often-overlooked aspect of mirror neuron system anatomy is the presence of backward connections from PM to STS, which appear to exert a net inhibitory influence [55,56]. From a Hebbian perspective, the situation for these connections is slightly different. PM neurons fire before STS neurons, as Hebbian learning requires, albeit with a 200 ms interval instead of the optimal 40 ms. Inhibitory projections from PM neurons encoding a particular action phase should therefore be strengthened onto STS neurons representing the same action and the action immediately preceding it (figure 3c).

Considering both forward and backward information flow, the mirror neuron system appears to be more than a simple associative system where observing an action triggers its motor representation. Instead, it becomes a dynamic system (figure 3d). The sight and sound of an action activate STS neurons. This leads to predictive activation of PM neurons encoding the action expected 200 ms later, with activation levels reflecting the likelihood of occurrence based on past sensorimotor contingencies. However, the system’s dynamics do not stop there. This prediction in PM neurons is fed back as an inhibitory signal to STS neurons. Because this feedback targets the STS neurons representing the action currently encoded in PM and the action preceding it, it should have two consequences. First, it would terminate sensory representations of past actions, potentially contributing to visual backward masking [57]. Second, by canceling representations associated with x1, x2, and x3 according to their respective probabilities, it essentially inhibits STS neurons representing the expected sensory consequences of the predicted action in PM. Conceptually, it inhibits the hypotheses PM neurons hold about the next action to be perceived.

When the brain then perceives the actual next action, if the input matches the hypothesis, the sensory consequences of that action are optimally inhibited, and minimal information flows from STS → PM. Because PM neurons (and posterior parietal neurons [58]) are organized into action chains within the premotor cortex, the representation of action x3 would then trigger activations of actions that typically follow x3 during execution, actively generating a stream of action representations in PM neurons without further sensory drive. These further predictions would continue to inhibit future STS input. If action x2 follows action A, inhibition would be weaker, and more of the sensory representation of x2 would reach PM. This represents a “prediction error,” modifying PM activity to better align with the input, deviating from prior expectations. If action x1 follows action A, no cancellation occurs in STS, and the strongest activity flows from STS → PM, rerouting PM activity to an action stream typically following x1, rather than x3 as initially hypothesized.
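The three cases above can be written out as a small subtraction, offered here only as a sketch. It assumes that the STS response to the observed action has unit strength and that PM inhibition is simply subtracted in proportion to the prior; the function name `prediction_error` and both assumptions are illustrative and not taken from the article.

```python
# Prior over the next action after A, as in the example in the text.
PRIOR_AFTER_A = {"x1": 0.0, "x2": 0.2, "x3": 0.8}


def prediction_error(observed_action, prior=PRIOR_AFTER_A):
    """STS activity that survives PM inhibition and flows on to PM.

    Assumes a unit sensory response and subtractive inhibition equal
    to the prior probability of the observed action.
    """
    sensory_drive = 1.0               # assumed STS response to the observed action
    inhibition = prior[observed_action]
    return max(0.0, sensory_drive - inhibition)


for action in ("x3", "x2", "x1"):
    print(action, round(prediction_error(action), 2))
# x3 0.2  (expected action: little flows from STS to PM)
# x2 0.8  (less expected: larger prediction error)
# x1 1.0  (unexpected: no cancellation)
```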

At this temporal resolution, during action observation or listening, the activity pattern across PM nodes is not a simple mirror of STS activity but an actively predicted probability distribution for the observer’s perception of the observed individual’s next action. Through Hebbian learning, the entire STS-PM loop becomes a dynamic system performing predictive coding. When the observed action unfolds as expected, PM activity is generated using normal motor control sequences rather than visual input.

(c). Regulating Learning and Contact Points with Other Models

Feedback inhibition also has an important consequence for learning regulation. During re-afference, once the system is trained, action execution triggers inhibitory feedback to STS neurons, ensuring STS neurons limit their input to PM neurons responsible for the action, thus making Hebbian learning self-limiting. If contingencies change, e.g., learning a new skill like piano playing, PM neurons fail to predict auditory re-afference, and new learning occurs as new input from STS reaches PM neurons, allowing for further Hebbian learning.
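A minimal sketch of this self-limiting behaviour is given below. It assumes, purely for illustration, that the strength of the inhibitory feedback equals the learned forward weight and that each Hebbian step is proportional to the STS activity that escapes inhibition; the function name `train` and the learning rate are hypothetical.

```python
def train(n_trials, learning_rate=0.2, weight=0.0):
    """Hebbian growth gated by the STS activity that survives inhibition.

    Assumption: feedback inhibition has the same strength as the learned
    forward weight, and the re-afferent STS input has unit strength.
    """
    trajectory = []
    for _ in range(n_trials):
        # PM feedback cancels the already-predicted part of the STS input.
        sts_residual = max(0.0, 1.0 - weight)
        # Only the uncancelled STS activity can pair with PM activity.
        weight += learning_rate * sts_residual
        trajectory.append(weight)
    return trajectory


trajectory = train(30)
steps = [b - a for a, b in zip(trajectory, trajectory[1:])]
print(round(trajectory[0], 3), round(trajectory[-1], 3))
```

In this toy setting each weight increment is smaller than the previous one, so learning slows as prediction improves. A new, unpredicted re-afference would correspond to restarting with a low weight, where the residual and hence the learning step are large again.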

This prediction error computation within the Hebbian learned system establishes a crucial link to other mirror neuron system models. Kilner et al.’s predictive coding model [50] does not specify how the brain performs Bayesian predictions at synapses. Instead, it proposes that PM activity represents a Bayesian estimate of future actions, enabling the observer to infer the actor’s motor intentions. This model views STS → PM information flow as merely updating Bayesian probabilities of premotor states. Our model, from a bottom-up perspective, arrives at very similar interpretations. Our Hebbian learning model complements the Bayesian prediction model by providing a plausible biological bottom-up implementation. Conversely, the Bayesian prediction model helps interpret the information processing we describe within the framework of the Bayesian or predictive coding revolution in brain science.

Many areas of brain science now recognize perception as a hierarchical process where sensory information is not passively transmitted from lower to higher brain regions. Instead, perception is increasingly seen as an active process. The brain generates predictions based on past experience (Bayesian prior probabilities), which are sent from higher to lower regions and subtracted from actual sensory input. The sensory input sent from lower to higher regions after prediction subtraction is a prediction error, serving to update predictions rather than directly driving perception. This general framework has been successfully applied to understand neural activity in early visual cortex [59,60] and has recently been used to conceptualize the mirror neuron system [50] and even mentalizing [61].

Evidence for predictive coding in the mirror neuron system is still emerging. The predictive nature of the PM response is evident from the observation that images of reaching increase excitability in the muscles involved in the likely subsequent action phase, grasping [62]. The possibility that PM activity is driven by internal predictions, without explicit visual input, is supported by two findings: mirror neurons that respond during grasping execution also respond to observing reaching behind an opaque screen [20], and auditory mirror neurons that respond to the cracking sound of peanut shelling start firing before this phase when viewing hands grasping a peanut [3]. Evidence that PM → STS predictions cancel out predicted actions, silencing STS → PM information flow only when actions are predictable, comes from the observation that the dominant direction of information flow is PM → STS during the observation of predictable actions but STS → PM at the unpredictable beginning of an action [63].

(d). Hebbian Learning and Joint Actions

Humans can act together with remarkable temporal precision. Pianists in duets can synchronize actions within 30 ms of a leader [64]. Given the 200 ms sensorimotor delays mentioned earlier, how is this possible? Shouldn’t it take 200 ms for a musician to hear the leader’s playing and respond? One powerful implication of a fine-grained analysis of Hebbian learning is that synaptic connections trained by re-afference inherit the typical human sensorimotor delays. STS → PM connections are thereby trained to predict into the future with a time-shift that offsets the sensorimotor delays encountered in joint actions with individuals experiencing similar delays. This is because it takes approximately the same time (≈200 ms) for your motor program to activate your STS neurons as it takes for your motor program to activate my STS neurons while I am listening to or watching you. Thus, Hebbian learning through re-afference trains sensorimotor predictions that enable accurate joint actions despite long sensorimotor delays.
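The arithmetic behind this argument can be made explicit with a short sketch. It assumes that the predictive horizon of the STS → PM connections equals the observer’s own re-afference delay, and that the only other relevant quantity is the time a partner’s motor program takes to reach the observer’s STS; the function names and the 220 ms example value are hypothetical.

```python
SENSORIMOTOR_DELAY_MS = 200  # approximate delay described in the text


def reactive_lag_ms(delay_ms=SENSORIMOTOR_DELAY_MS):
    """Lag of a follower who only responds after perceiving the leader."""
    return delay_ms


def predictive_lag_ms(partner_to_my_sts_ms=SENSORIMOTOR_DELAY_MS,
                      own_reafference_ms=SENSORIMOTOR_DELAY_MS):
    """Lag when STS -> PM connections look ahead by the follower's own
    re-afference delay (the assumed predictive horizon)."""
    return partner_to_my_sts_ms - own_reafference_ms


print(reactive_lag_ms())            # 200
print(predictive_lag_ms())          # 0
print(predictive_lag_ms(220, 200))  # 20
```

On this reading, residual asynchrony reflects only the mismatch between the two delays rather than their absolute size.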

(e). Hebbian Learning and Projection

A significant consequence of wiring the mirror neuron system based on re-afference is that the brain associates internal states present during action production with the sound and sight of that action. Consequently, when observing others’ actions, the motor activity pattern predictively activated in the observer is not merely a reflection of the actor’s brain activity but a projection of the observer’s own brain activity when performing similar actions. Because humans share approximately 99% of their genes with other humans, and over 90% with macaques, it is reasonable to assume that the hidden motor states during our own actions are a useful model for those in another human or monkey brain. This constitutes an informative “prior” that can be updated by contradictory evidence. However, the greater the difference between observer and observed agent, the more evident the projective nature of this process becomes.

To test this prediction, we used fMRI to measure brain activity in three conditions [65]. Participants performed hand actions (e.g., swirling a wine glass), observed another human performing similar actions, and observed an industrial robot performing similar actions. Observing a human action (figure 4a) activated a network of somatosensory, premotor, and parietal brain regions (figure 4b) similar to that used by participants during action execution (figure 4c). Comparing observer and executor activity patterns (b–c) revealed significant similarity (r(b,c) = 0.5)—the brain effectively simulated the agent’s brain activity. However, when participants observed the robot performing the action (figure 4d), they generated a brain activity pattern (figure 4e) unlike the activity of the robot’s processor causing its movement (figure 4f). Instead, the pattern continued to resemble that used by the participant to perform the action (figure 4c). This illustrates the projective nature of mind-reading through the mirror neuron system.

Seeing a human perform an action (a) leads to activations in the mirror system (b) that resemble the activity during the execution of similar actions by a human (c). Seeing a robot perform similar actions (d) generates a pattern of activity in the mirror system (e) that is very different from the pattern of activity that caused the robot to act (f), but resembles that which the human viewer would use to perform a similar action (c). Panels (a–e) adapted from Gazzola et al. [65]. (Online version in colour.)

5. Beyond the Motor System

Mirror neurons were initially discovered in PM [1–4,20] and posterior parietal regions [21,58] involved in action control, emphasizing motor aspects of mind-reading. However, evidence now suggests vicarious activation of higher levels of primary somatosensory cortex when observing others’ actions and secondary somatosensory cortex when observing others being touched [45]. Furthermore, regions involved in experiencing emotions also show vicarious activation when witnessing others’ emotions [6,66], including the insula for disgust, pain, and pleasure [67–69], rostral cingulate for pain [68], and striatum for reward [70].

Direct single-cell evidence that the vicarious somatosensory and emotional activations seen in fMRI arise from individual cells responding both when we experience and when we observe a sensation or emotion is still lacking (but see [71]). From a Hebbian learning perspective, however, mirror-like neurons for somatosensation or emotions are unsurprising. When we are touched, we see our body touched and feel the somatosensory stimulation. Unlike in the sensorimotor system, where motor activity precedes visual/auditory re-afference, the tactile and visual/auditory signals produced by being touched have similar latencies relative to the external event. Spikes from visual/auditory and somatosensory neurons would naturally fall within the narrow temporal window of Hebbian learning, reinforcing connections between neurons encoding our inner sense of touch in S2 and those encoding the visual and auditory aspects of touch in STS-like regions. Observing or hearing others being touched could then trigger mirror-like activity in S2, projecting our own feeling of touch onto the observed person. Because anticipation in the STS → PM system arises from latency differences between these neurons, and such differences are small between STS and S2, STS-S2 connections are expected to show limited predictive coding. However, if tactile sensations result from actions predictable by the STS → PM system, such anticipations could be computed.

Similarly, when actively exploring objects with our hands, PM neuron activity controlling the action precedes not only STS neuron activity viewing and hearing the action but also BA2 neuron activity encoding haptic sensations during touch. We would therefore expect a dynamic system similar to that in figure 3d, including not only STS and PM but also BA2. In this system, Hebbian learning could also explain how people learn to suppress self-caused tactile sensations, generating haptic prediction errors central to motor control [72], and why self-tickling is impossible [73].

Finally, Hebbian learning would also connect many of the neurons involved in emotions. If we feel pain from being hit by a sibling’s toy, we see the toy hit us, feel pain, make a facial expression, cry, and our parents mirror that expression. The visual impact precedes pain, which precedes facial expression and cries, which precede parental facial mimicry. If our theory is correct, this could lead to a chain of Hebbian associations across neurons representing these states. Later, seeing or hearing someone wince in pain would trigger matching facial motor programs, activating our inner feelings [74]. Observing someone being hit would vicariously recruit our somatosensory and emotional cortices. All these vicarious activations would result from synaptic plasticity during our own experiences, associating observable events with our felt and acted states in those situations. Applying these associations to others projects our own states onto them, with the egocentric biases this predicts.

6. Overall Conclusion

Two decades ago, the initial reports of mirror neurons sparked a vision of motor systems playing a privileged role in mind-reading through embodied cognition [75]. We propose that our modern understanding of spike-timing-dependent synaptic plasticity refines our concept of Hebbian learning, providing a framework to explain not only mirror neuron emergence but also their predictive properties enabling quasi-synchronous joint actions. We show that this could create a system approximately solving the inverse problem of inferring others’ hidden internal states from observable changes, albeit with projection and egocentric biases. We further argue that mirror neurons are a specific case of vicarious activations which, according to Hebbian learning and fMRI data, extend to sharing others’ emotions and sensations.

Acknowledgements

We thank David Perrett and Rajat Thomas for fruitful discussions on Hebbian Learning.

Funding statement

V.G. was supported by VENI grant no. 451-09-006 of The Netherlands Organisation for Scientific Research (NWO). C.K. was supported by grant no. 312511 of the European Research Council, and grant nos. 056-13-013, 056-13-017 and 433-09-253 of NWO.

References

[1] Gallese V, Fadiga L, Fogassi L, Rizzolatti G. 1996 Action recognition in the premotor cortex. Brain 119(Pt 2):593–609. (PubMed)

[2] Rizzolatti G, Fadiga L, Gallese V, Fogassi L. 1996 Premotor cortex and the recognition of motor actions. Cogn. Brain Res. 3(2):131–141. (PubMed)

[3] Keysers C, Kohler E, Umiltà MA, Nanetti L, Fogassi L, Gallese V. 2003 Audiovisual mirror neurons and action recognition. Exp. Brain Res. 153(4):628–636. (PubMed)

[4] Kohler E, Keysers C, Umiltà MA, Fogassi L, Gallese V, Rizzolatti G. 2002 Hearing sounds, understanding actions: action representation in mirror neurons. Science 297(5582):846–848. (PubMed)

[5] Hickok G. 2009 Eight problems for the mirror neuron theory of action understanding in monkeys and humans. J. Cogn. Neurosci. 21(7):1229–1243. (PubMed)

[6] Keysers C, Gazzola V. 2010 Social neuroscience: understanding others’ minds from mirror neurons to empathy. J. Neurosci. 30(23):8035–8042. (PubMed)

[7] Dinstein I, Thomas C, Humphreys K, Miall RC, Lemon RN. 2007 Fractionating the mirror system: somatotopy for orofacial but not manual actions in human area 6. J. Neurosci. 27(16):4223–4229. (PubMed)

[8] Lingnau A, Gesierich B, Caramazza A. 2009 Asymmetric fMRI adaptation reveals no evidence for mirror neurons in human inferior parietal cortex. Proc. Natl Acad. Sci. USA 106(23):9925–9930. (PubMed)

[9] Csibra G. 2007 Action mirroring and action understanding: an alternative account. In Sensorimotor foundations of higher cognition, vol. 97 (eds Haggard P, Rossetti Y, Kawato M), pp. 435–459. Attention and performance. Oxford, UK: Oxford University Press.

[10] Gazzola V, Aziz-Zadeh L, Keysers C. 2006 Empathy and the somatotopic auditory mirror system in humans. Curr. Biol. 16(18):1824–1829. (PubMed)

[11] Hebb DO. 1949 The organization of behavior: a neuropsychological theory. New York, NY: Wiley.

[12] Shatz CJ. 1992 Divide and conquer: axon guidance and the formation of retinotopic maps. Neuron 8(2):173–181. (PubMed)

[13] Michotte A. 1946 La perception de la causalité. Louvain, Belgium: Publications Universitaires de Louvain.

[14] Markram H, Lübke J, Frotscher M, Sakmann B. 1997 Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275(5299):213–215. (PubMed)

[15] Dan Y, Poo MM. 2006 Spike timing-dependent plasticity of neural circuits and systems. Trends Neurosci. 29(1):23–30. (PubMed)

[16] Bi GQ, Poo MM. 2001 Synaptic modification by correlated activity: Hebb’s postulate revisited. Annu. Rev. Neurosci. 24:139–166. (PubMed)

[17] Bauer EP, LeDoux JE, Nader K. 2001 Consolidating fear memory—contingency and reconsolidation. Nat. Neurosci. 4(3):309–310. (PubMed)

[18] Dickinson N. 1980 Contemporary animal learning theory. Cambridge, UK: Cambridge University Press.

[19] Cooper RP, Cook R, Dickinson AR, Heyes CM. 2013 Associative learning explains mirror neurons. Biol. Lett. 9(5):20130118. (PMC free article) (PubMed)

[20] Umiltà MA, Kohler E, Gallese V, Fogassi L, Fadiga L, Keysers C, Rizzolatti G. 2001 I know what you are doing. A neurophysiological study. Neuron 32(1):91–101. (PubMed)

[21] Fogassi L, Gallese V, Fadiga L, Rizzolatti G, Umiltà MA. 1998 Parietal cortex: from sight to action. Science 280(5364):662–667. (PubMed)

[22] Luppino G, Murata A, Govoni P, Matelli M. 2001 Largely segregated parietofrontal connections linking rostral intraparietal cortex (area VIP) and the ventral premotor cortex (area F5). Exp. Brain Res. 137(1):83–87. (PubMed)

[23] Seltzer B, Pandya DN. 1994 Parietal, temporal, and occipital projections to cortex of the superior temporal sulcus in the rhesus monkey: a retrograde tracer study. J. Comp. Neurol. 343(3):445–463. (PubMed)

[24] Keysers C, Perrett DI. 2004 Demystifying social cognition: a critical review of social neuroscience. Trends Cogn. Sci. 8(11):501–507. (PubMed)

[25] Iacoboni M, Molnar-Szakacs I, Gallese V, Buccino G, Mazziotta JC, Rizzolatti G. 2005 Grasping the intentions of others with one’s own mirror neuron system. PLoS Biol. 3(3):e79. (PMC free article) (PubMed)

[26] Kaplan JT, Iacoboni M. 2006 The mirror system and human social cognition. In Neurobiology of human values (ed. Changeux JP), pp. 7–22. Berlin, Germany: Springer.

[27] Montgomery KJ, Seeherman KR, Haxby JV. 2009 Spatiotemporal dynamics of semantic and intentional components of action understanding. Brain Lang. 111(3):143–152. (PMC free article) (PubMed)

[28] Heyes CM. 2001 Causes and consequences of imitation. Trends Cogn. Sci. 5(6):253–261. (PubMed)

[29] Del Giudice M, Manera V, Keysers C. 2009 Needing a face: the ontogeny and functions of the human face processing system. Neurosci. Biobehav. Rev. 33(7):997–1013. (PubMed)

[30] Brass M, Heyes C. 2005 Imitation: is cognitive neuroscience solving the correspondence problem? Trends Cogn. Sci. 9(10):489–495. (PubMed)

[31] Cook R, Bird G, Catmur C, Press C, Heyes C. 2014 Mirror neurons: from origin to function. Behav. Brain Sci. 37(2):177–192. (PubMed)

[32] Rochat P. 2003 Five levels of self-awareness as they unfold early in life. Conscious. Cogn. 12(4):717–731. (PubMed)

[33] Meltzoff AN, Moore MK. 1977 Imitation of facial and manual gestures by human neonates. Science 198(4312):75–78. (PubMed)

[34] Casile A, Dipietro ML, Muratori LM, D’Amico LB, Mento G, Perucchini P. 2011 Neonatal imitative abilities: the differential effect of biological and artificial lips. Infant Behav. Dev. 34(1):102–110. (PubMed)

[35] Meltzoff AN, Moore MK. 1983 Newborn infants imitate adult facial gestures. Child Dev. 54(3):702–709. (PubMed)

[36] Ray ES, Heyes CM. 2011 Imitation in infancy: the wealth of the stimulus. Dev. Sci. 14(1):92–108. (PubMed)

[37] Ray ES, Catmur C. 2011 Resolving the paradox of infant imitation. Child Dev. Perspect. 5(3):147–154.

[38] Trehub SE, Trainor LJ, Unyk AM. 1993 Infants’ and adults’ sensitivity to pitch change in continuous melody flows. Percept. Psychophys. 53(6):629–636. (PubMed)

[39] Catmur C, Walsh V, Heyes C. 2007 Sensorimotor learning configures the human mirror system. Curr. Biol. 17(17):1527–1531. (PubMed)

[40] Rizzolatti G, Fadiga L. 1998 Grasping affordances. Cogn. Neuropsychol. 15(5):437–470.

[41] Logothetis NK, Pauls J, Bülthoff HH, Poggio T. 1994 View-dependent object recognition by monkeys. Curr. Biol. 4(5):401–414. (PubMed)

[42] Blake R, Shiffrar M. 2007 Perceptual decoupling of biological motion. Psychol. Sci. 18(9):747–753. (PubMed)

[43] Calvert GA, Campbell R, Brammer MJ. 2000 Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr. Biol. 10(11):649–657. (PubMed)

[44] Gallese V. 2007 Before and below ‘theory of mind’: embodied simulation and social cognition. Phil. Trans. R. Soc. B 362(1480):659–669. (PMC free article) (PubMed)

[45] Keysers C, Wicker B, Gazzola V, Antonazzi K, Fogassi L, Gallese V. 2004 Somatosensory activation in the human insula, anterior cingulate and somatosensory cortex during the observation and anticipation of touch. Neuron 42(2):335–346. (PubMed)

[46] Oberman LM, Ramachandran VS. 2007 The mirror neuron system in autism spectrum disorders. Soc. Neurosci. 2(2):138–155. (PubMed)

[47] Rizzolatti G, Craighero L. 2004 The mirror-neuron system. Annu. Rev. Neurosci. 27:169–192. (PubMed)

[48] Chakrabarti B, Baron-Cohen S. 2013 Empathy deficits in autism spectrum conditions. Dev. Psychopathol. 25(3):iii–iv, 431–443. (PubMed)

[49] Ferrari PF, [illegible] V, Paukner A. 2013 Epigenetic perspectives on social neuroscience. Front. Behav. Neurosci. 7:97. (PMC free article) (PubMed)

[50] Kilner JM, Friston KJ, Frith CD. 2007 Predictive coding: an account of the mirror-neuron system. Cogn. Neuropsychol. 24(8):875–896. (PubMed)

[51] Arbib MA. 2010 Mirror system activity for language and for tool use: is there a common origin? Prog. Brain Res. 181:261–277. (PubMed)

[52] Keysers C, Krüger H, Gazzola V. 2010 The human mirror neuron system for actions: physiological and functional properties. Cogn. Neurosci. 1(3):205–218. (PubMed)

[53] Grafton ST, Fagg AH, Woods RP, Arbib MA. 1996 Functional anatomy of pointing and grasping in humans. Cereb. Cortex 6(2):226–237. (PubMed)

[54] Beauchamp MS, Lee SS, Haxby JV, Martin A. 2003 fMRI responses to video and point-light displays of moving humans and manipulable objects. J. Cogn. Neurosci. 15(7):991–1001. (PubMed)

[55] Stefan M, Siebner HR, Wustenberg T, Baudewig J, Ikonomidou VN, Classen J. 2005 Transcranial magnetic stimulation over superior parietal lobule modulates corticocortical inhibition of the human motor cortex. J. Neurosci. 25(35):8016–8023. (PubMed)

[56] Chersi F, Thier P. 2001 Corticocortical connections of the macaque monkey parietal lobe as revealed by intracortical microstimulation combined with anatomical tracing techniques. Eur. J. Neurosci. 14(1):1–18. (PubMed)

[57] Breitmeyer BG, Öğmen H. 2006 Visual masking: time slices through conscious and unconscious vision. Oxford, UK: Oxford University Press.

[58] Rozzi S, Ferrari PF, Bonini L, Fogassi L. 2008 Action observation and execution matching system in monkey area PF: coding of goal-directed actions. J. Neurosci. 28(49):13371–13384. (PubMed)

[59] Rao RP, Ballard DH. 1999 Predictive coding in the visual cortex: a theoretical interpretation of extra-classical receptive field effects. Nat. Neurosci. 2(1):79–87. (PubMed)

[60] Lee TS, Mumford D. 2003 Hierarchical Bayesian inference in the visual cortex. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 20(7):1434–1448. (PubMed)

[61] Frith CD. 2007 The social brain? Phil. Trans. R. Soc. B 362(1480):671–678. (PMC free article) (PubMed)

[62] Gangitano M, Mottaghy FM, Pascual-Leone A. 2001 Phase-specific modulation of corticospinal excitability during observation of human movement. Neuron 29(2):453–462. (PubMed)

[63] Kilner JM, Vargas C, Duval C, Blakemore SJ, Sirigu A, Frith CD. 2004 Motor activation during observation of actions: dissociating mirror and predictive mechanisms. Nat. Neurosci. 7(5):522–527. (PubMed)

[64] Goebl W, Palmer C. 2009 Synchronization in piano duet performance. Exp. Brain Res. 192(4):649–661. (PubMed)

[65] Gazzola V, Rizzolatti G, Wicker B, Keysers C. 2007 The anthropomorphic brain: the responses to human and robotic actions mapped in the human brain. Cereb. Cortex 17(7):1720–1730. (PubMed)

[66] Singer T, Lamm C. 2009 The social neuroscience of empathy. Ann. N. Y. Acad. Sci. 1156:81–108. (PubMed)

[67] Wicker B, Keysers C, Plailly J, Royet JP, Gallese V, Rizzolatti G. 2003 Both of us disgusted in my insula: the common neural basis of seeing and feeling disgust. Neuron 40(3):655–664. (PubMed)

[68] Singer T, Seymour B, O’Doherty JP, Stephan KE, Dolan RJ, Frith CD. 2004 Empathy for pain involves the affective but not sensory components of pain. Science 303(5661):1157–1162. (PubMed)

[69] Jabbi M, Bastiaansen J, Keysers C. 2008 Empathy for positive and negative emotions in the gustatory cortex. Neuroimage 43(4):744–753. (PubMed)

[70] Moulier V, Pertzov Y, Keysers C, Bach DR. 2006 Dissociation between anticipation and experience of social reward in the human striatum. J. Neurosci. 26(37):9489–9493. (PubMed)

[71] Hutchison WD, Davis KD, Lozano AM, Tasker RR, Dostrovsky JO. 1999 Pain-related neurons in the human cingulate cortex. Nat. Neurosci. 2(5):403–405. (PubMed)

[72] Blakemore SJ, Wolpert DM, Frith CD. 1998 Central cancellation of self-produced tickle sensation. Nat. Neurosci. 1(7):635–640. (PubMed)

[73] Weiskrantz L, Elliott J, Darlington C. 1971 Tickling ourselves. Nature 230(5296):598–599. (PubMed)

[74] Goldman AI, Sripada CS. 2005 Simulationist models of face-based emotion recognition. Cognition 94(3):193–213. (PubMed)

[75] Gallese V, Goldman A. 1998 Mirror neurons and the simulation theory of mind-reading. Trends Cogn. Sci. 2(12):493–501.