Principal Component Analysis (PCA) in machine learning is a powerful technique for reducing the dimensionality of datasets while retaining the most important information; let LEARNS.EDU.VN be your guide! This article will explore PCA, its uses, advantages, disadvantages, and how to apply it in practice, ensuring you’re well-equipped to leverage this valuable tool. Get ready to dive into linear dimensionality reduction and feature extraction.

1. What is Principal Component Analysis (PCA) Used For?

Principal Component Analysis (PCA) is used for dimensionality reduction, feature extraction, and data visualization. PCA transforms high-dimensional data into a new set of variables, called principal components, which capture the most significant variance in the original data.

PCA is used across a wide range of applications:

- Data Visualization: Visualizing high-dimensional data in 2D or 3D plots for easier interpretation.

- Image Compression: Reducing the size of images while preserving essential features.

- Noise Reduction: Identifying and removing noise from data by focusing on the principal components.

- Feature Extraction: Creating a new set of features that are linear combinations of the original features, which can improve the performance of machine learning models.

PCA is a versatile tool that simplifies complex datasets and enhances the efficiency of machine learning tasks.

2. How Does PCA Work in Machine Learning?

PCA works by identifying the principal components of a dataset, which are orthogonal (uncorrelated) axes that capture the maximum variance in the data. Here’s a step-by-step breakdown:

- Standardization: Standardize the data to have a mean of 0 and a variance of 1.

- Covariance Matrix: Calculate the covariance matrix to understand the relationships between variables.

- Eigenvalue Decomposition: Perform eigenvalue decomposition on the covariance matrix to find eigenvectors and eigenvalues.

- Principal Components: Sort the eigenvectors by their corresponding eigenvalues in descending order; the eigenvector with the highest eigenvalue is the first principal component.

- Dimensionality Reduction: Select the top k principal components to reduce the dimensionality of the dataset.

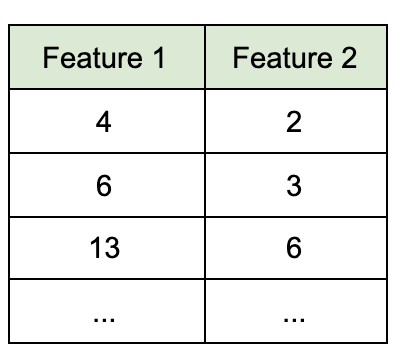

Here is an example of how it works:

Let’s assume you have a dataset with two features, height and weight, for a group of people. PCA will:

- Find the direction in which the data varies the most. This becomes the first principal component.

- Find a second direction, orthogonal to the first, that captures the remaining variance.

By projecting the original data onto these new axes, you reduce the dimensionality while retaining the most important information.
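
The steps above can be sketched end to end in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the height and weight values are synthetic, and names such as `scores` and `k` are chosen here for readability.

```python
import numpy as np

# Synthetic height (cm) and weight (kg) values, generated only for illustration.
rng = np.random.default_rng(0)
height = rng.normal(170, 10, size=200)
weight = 0.9 * (height - 170) + 70 + rng.normal(0, 5, size=200)
X = np.column_stack([height, weight])

# 1. Standardize each feature to mean 0 and variance 1.
Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

# 2. Covariance matrix of the standardized data (columns are features).
C = np.cov(Z, rowvar=False)

# 3. Eigendecomposition; eigh is meant for symmetric matrices
#    and returns eigenvalues in ascending order.
eigvals, eigvecs = np.linalg.eigh(C)

# 4. Sort components by eigenvalue, largest first.
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# 5. Keep the first k components and project the data onto them.
k = 1
scores = Z @ eigvecs[:, :k]

print("explained variance ratio:", eigvals / eigvals.sum())
print("reduced shape:", scores.shape)
```

Because height and weight are strongly correlated in this synthetic data, the first component should carry most of the variance, which is why a single axis is a reasonable summary here.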

Alt Text: Scatter plot of a 2-dimensional dataset illustrating the distribution of data points which PCA aims to simplify through dimensionality reduction.

3. What Are the Key Steps to Perform PCA?

Performing PCA involves several critical steps to ensure accurate and effective dimensionality reduction. Each step plays a vital role in transforming the data and extracting the most important components.

3.1. Data Standardization

Data standardization is crucial because PCA is sensitive to the scale of the features. Features with larger values can dominate the analysis, leading to biased results.

How to Standardize Data:

- Calculate the Mean: Compute the mean of each feature.

- Calculate the Standard Deviation: Compute the standard deviation of each feature.

- Standardize Each Value: For each value in the feature, subtract the mean and divide by the standard deviation.

  - Formula: \( z = \frac{x - \mu}{\sigma} \)

  - Where:

    - \( x \) is the original value.

    - \( \mu \) is the mean of the feature.

    - \( \sigma \) is the standard deviation of the feature.

    - \( z \) is the standardized value.

Example:

Suppose you have a feature with the following values: 2, 4, 6, 8, 10.

- Mean: (2 + 4 + 6 + 8 + 10) / 5 = 6

- Standard Deviation: Approximately 3.16 (the sample standard deviation, which divides by n - 1 = 4; dividing by n = 5 instead gives about 2.83)

- Standardized Values:

  - (2 - 6) / 3.16 ≈ -1.27

  - (4 - 6) / 3.16 ≈ -0.63

  - (6 - 6) / 3.16 = 0

  - (8 - 6) / 3.16 ≈ 0.63

  - (10 - 6) / 3.16 ≈ 1.27

Standardizing the data ensures that each feature contributes equally to the PCA process, regardless of its original scale.
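
The worked example can be reproduced with NumPy. This is a small sketch that assumes the sample standard deviation (`ddof=1`); because the hand calculation rounds the standard deviation to 3.16 before dividing, the last printed digit may differ slightly.

```python
import numpy as np

x = np.array([2.0, 4.0, 6.0, 8.0, 10.0])

mean = x.mean()          # 6.0
std = x.std(ddof=1)      # sample standard deviation, sqrt(10)

# Subtract the mean and divide by the standard deviation.
z = (x - mean) / std

print("mean:", mean)
print("std:", round(std, 2))
print("standardized:", np.round(z, 2))
```

Note that libraries differ on the divisor: scikit-learn's `StandardScaler`, for instance, uses the population standard deviation (`ddof=0`). For PCA this only rescales every feature by the same constant factor, so the principal directions are unaffected.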

3.2. Covariance Matrix Computation

The covariance matrix provides insights into the relationships between different features in the dataset. It quantifies how much each pair of features varies together.

How to Compute the Covariance Matrix:

- Center the Data: Ensure that each feature has a mean of zero.

- Calculate Covariance: For each pair of features, calculate the covariance using the formula:

  - Formula: \( \text{Cov}(X, Y) = \frac{\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{n-1} \)

  - Where:

    - \( X \) and \( Y \) are the two features.

    - \( X_i \) and \( Y_i \) are the individual values of the features.

    - \( \bar{X} \) and \( \bar{Y} \) are the means of the features.

    - \( n \) is the number of data points.

Example:

Suppose you have two features, \( X \) and \( Y \), with the following values:

- \( X \): 1, 2, 3, 4, 5

- \( Y \): 2, 4, 6, 8, 10

- Means:

  - \( \bar{X} \) = (1 + 2 + 3 + 4 + 5) / 5 = 3

  - \( \bar{Y} \) = (2 + 4 + 6 + 8 + 10) / 5 = 6

- Centered Data:

  - \( X - \bar{X} \): -2, -1, 0, 1, 2

  - \( Y - \bar{Y} \): -4, -2, 0, 2, 4

- Covariance:

  - \( \text{Cov}(X, Y) = \frac{(-2)(-4) + (-1)(-2) + (0)(0) + (1)(2) + (2)(4)}{5-1} = \frac{8 + 2 + 0 + 2 + 8}{4} = \frac{20}{4} = 5 \)

The covariance matrix is a square matrix where each element represents the covariance between two features. For a dataset with three features (A, B, C), the covariance matrix would look like this:

\[
\begin{bmatrix}
\text{Cov}(A, A) & \text{Cov}(A, B) & \text{Cov}(A, C) \\
\text{Cov}(B, A) & \text{Cov}(B, B) & \text{Cov}(B, C) \\
\text{Cov}(C, A) & \text{Cov}(C, B) & \text{Cov}(C, C)
\end{bmatrix}
\]
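
As a quick check of the covariance example for \( X \) and \( Y \), the sketch below computes the covariance by hand and then with `np.cov`, which also uses the \( n - 1 \) divisor by default. The variable names are chosen here for illustration.

```python
import numpy as np

X = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
Y = np.array([2.0, 4.0, 6.0, 8.0, 10.0])

# Covariance from the formula: sum of products of deviations over n - 1.
cov_xy = np.sum((X - X.mean()) * (Y - Y.mean())) / (len(X) - 1)

# Full covariance matrix; each row passed to np.cov is one feature.
C = np.cov(np.vstack([X, Y]))

print("Cov(X, Y):", cov_xy)
print("covariance matrix:\n", C)
```

The diagonal of `C` holds the variances of `X` and `Y`, and the off-diagonal entries hold their covariance, so the matrix is symmetric.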

3.3. Eigenvalue Decomposition

Eigenvalue decomposition is a crucial step in PCA that involves finding the eigenvectors and eigenvalues of the covariance matrix. These values provide insights into the principal components of the data.

How to Perform Eigenvalue Decomposition:

- Compute Eigenvectors and Eigenvalues: Apply eigenvalue decomposition to the covariance matrix using linear algebra techniques.

  - Equation: \( \text{Covariance Matrix} \cdot v = \lambda \cdot v \)

  - Where:

    - \( v \) is the eigenvector.

    - \( \lambda \) is the eigenvalue.

- Eigenvectors: The eigenvectors represent the directions or axes along which the data varies the most.

- Eigenvalues: The eigenvalues represent the magnitude of the variance along each eigenvector.

Example:

Assume you have a covariance matrix:

\[
\begin{bmatrix}
2 & 1 \\
1 & 3
\end{bmatrix}
\]

- Compute Eigenvalues: Solve for \( \lambda \) in the equation \( \det(\text{Covariance Matrix} - \lambda I) = 0 \)

  - Where \( I \) is the identity matrix.

  - \( \det \begin{bmatrix} 2-\lambda & 1 \\ 1 & 3-\lambda \end{bmatrix} = (2-\lambda)(3-\lambda) - 1 = 0 \)

  - \( \lambda^2 - 5\lambda + 5 = 0 \)

  - Solving the quadratic equation gives \( \lambda = \frac{5 \pm \sqrt{5}}{2} \), so \( \lambda_1 \approx 3.62 \) and \( \lambda_2 \approx 1.38 \)

- Compute Eigenvectors: For each eigenvalue, solve for \( v \) in the equation \( (\text{Covariance Matrix} - \lambda I) \cdot v = 0 \)

  - For \( \lambda_1 \approx 3.62 \):

    - \( \begin{bmatrix} 2-3.62 & 1 \\ 1 & 3-3.62 \end{bmatrix} \cdot v = 0 \)

    - \( \begin{bmatrix} -1.62 & 1 \\ 1 & -0.62 \end{bmatrix} \cdot \begin{bmatrix} x \\ y \end{bmatrix} = 0 \)

    - Solving this system gives \( y \approx 1.62x \); normalizing to unit length gives the eigenvector \( v_1 \approx \begin{bmatrix} 0.53 \\ 0.85 \end{bmatrix} \)

  - For \( \lambda_2 \approx 1.38 \):

    - \( \begin{bmatrix} 2-1.38 & 1 \\ 1 & 3-1.38 \end{bmatrix} \cdot v = 0 \)

    - \( \begin{bmatrix} 0.62 & 1 \\ 1 & 1.62 \end{bmatrix} \cdot \begin{bmatrix} x \\ y \end{bmatrix} = 0 \)

    - Solving this system gives \( y \approx -0.62x \); normalizing to unit length gives the eigenvector \( v_2 \approx \begin{bmatrix} -0.85 \\ 0.53 \end{bmatrix} \)

The eigenvectors \( v_1 \) and \( v_2 \) give the directions of the principal components, and the eigenvalues \( \lambda_1 \) and \( \lambda_2 \) give the amount of variance along each of those directions.
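
The decomposition of the 2×2 example matrix can be checked numerically. The sketch below uses `np.linalg.eigh`, which is intended for symmetric matrices such as a covariance matrix; it returns eigenvalues in ascending order, so they are reordered here. The sign of each eigenvector is arbitrary, so a result may be the negative of a hand-computed vector.

```python
import numpy as np

C = np.array([[2.0, 1.0],
              [1.0, 3.0]])

# Eigenvalues come back in ascending order; columns of eigvecs are eigenvectors.
eigvals, eigvecs = np.linalg.eigh(C)

# Reorder so the largest eigenvalue (first principal component) comes first.
order = np.argsort(eigvals)[::-1]
eigvals = eigvals[order]
eigvecs = eigvecs[:, order]

# Each pair should satisfy C v = lambda v.
for lam, v in zip(eigvals, eigvecs.T):
    print("lambda:", round(lam, 4), "v:", np.round(v, 4),
          "C v == lambda v:", np.allclose(C @ v, lam * v))
```

The eigenvalues printed are the two roots of \( \lambda^2 - 5\lambda + 5 = 0 \), that is \( (5 \pm \sqrt{5})/2 \), and they sum to the trace of the matrix, 5.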

3.4. Principal Components Selection

Selecting the number of principal components is a crucial step in PCA to balance dimensionality reduction and information retention. It involves evaluating the explained variance ratio for each component to determine how many components to keep.

How to Select Principal Components:

- Sort Eigenvalues: Sort the eigenvalues in descending order.

- Calculate Explained Variance Ratio: Compute the explained variance ratio for each eigenvalue using the formula:

  - Formula: \( \text{Explained Variance Ratio}_i = \frac{\lambda_i}{\sum_{j=1}^{n} \lambda_j} \)

  - Where:

    - \( \lambda_i \) is the eigenvalue for the \( i \)-th principal component.

    - \( n \) is the total number of principal components.

- Cumulative Explained Variance: Calculate the cumulative explained variance by summing the explained variance ratios for each component.

- Choose Number of Components: Select the number of components that retain a sufficiently high percentage of the total variance (e.g., 95% or 99%).

Example:

Suppose you have eigenvalues: 3.62, 1.38.

- Sorted Eigenvalues: 3.62, 1.38

- Explained Variance Ratio:

  - For \( \lambda_1 = 3.62 \): \( \frac{3.62}{3.62 + 1.38} = \frac{3.62}{5} \approx 0.72 \) or 72%

  - For \( \lambda_2 = 1.38 \): \( \frac{1.38}{3.62 + 1.38} = \frac{1.38}{5} \approx 0.28 \) or 28%

- Cumulative Explained Variance:

  - 1 component: 72%

  - 2 components: 72% + 28% = 100%

- Choose Number of Components:

  - If you want to retain at least 70% of the variance, you can choose 1 component. A stricter target such as 95% would require keeping both components.
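
The selection rule generalizes to any number of components. The helper below is a sketch: `choose_n_components` is a name introduced here, and the five eigenvalues are hypothetical values picked so the arithmetic is easy to follow.

```python
import numpy as np

def choose_n_components(eigvals, threshold=0.95):
    """Return the smallest k whose cumulative explained variance reaches threshold."""
    eigvals = np.sort(np.asarray(eigvals, dtype=float))[::-1]  # descending
    ratios = eigvals / eigvals.sum()
    cumulative = np.cumsum(ratios)
    # First index where the cumulative ratio reaches the threshold, as a count.
    k = int(np.searchsorted(cumulative, threshold) + 1)
    # Guard against floating-point round-off when threshold is 1.0.
    k = min(k, len(eigvals))
    return k, ratios, cumulative

# Hypothetical eigenvalues for a five-feature dataset.
example_eigvals = [4.0, 2.5, 1.0, 0.3, 0.2]
k, ratios, cumulative = choose_n_components(example_eigvals, threshold=0.95)

print("ratios:", ratios)
print("cumulative:", cumulative)
print("components kept:", k)
```

With these values the cumulative ratios are 0.5, 0.8125, 0.9375, 0.975 and 1.0, so a 95% target keeps four components while an 80% target would keep only two.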

3.5. Data Transformation

Data transformation is the final step in PCA, where the original data is projected onto the selected principal components to obtain a reduced-dimensional representation.

How to Transform Data:

- Select Principal Components: Choose the top \( k \) eigenvectors corresponding to the selected principal components.

- Create Transformation Matrix: Form a transformation matrix \( W \) by stacking the selected eigenvectors as columns.

- Transform Data: Multiply the standardized data \( X \) by the transformation matrix \( W \) to obtain the reduced-dimensional data \( X_{\text{reduced}} \).

  - Formula: \( X_{\text{reduced}} = X \cdot W \)

Example:

Suppose you have the following data matrix (small illustrative values, not actually standardized, chosen to keep the arithmetic easy to follow):

\[
X = \begin{bmatrix}
1 & 2 \\
3 & 4 \\
5 & 6
\end{bmatrix}
\]

And you have selected one principal component with eigenvector:

\[
W = \begin{bmatrix}
0.53 \\
0.85
\end{bmatrix}
\]

- Transform Data:

  - \( X_{\text{reduced}} = \begin{bmatrix} 1 & 2 \\ 3 & 4 \\ 5 & 6 \end{bmatrix} \cdot \begin{bmatrix} 0.53 \\ 0.85 \end{bmatrix} \)

  - \( X_{\text{reduced}} = \begin{bmatrix} 1 \cdot 0.53 + 2 \cdot 0.85 \\ 3 \cdot 0.53 + 4 \cdot 0.85 \\ 5 \cdot 0.53 + 6 \cdot 0.85 \end{bmatrix} \)

  - \( X_{\text{reduced}} = \begin{bmatrix} 2.23 \\ 4.99 \\ 7.75 \end{bmatrix} \)

Now, the original data \( X \), with two columns, has been transformed into a one-column reduced-dimensional representation \( X_{\text{reduced}} \) using PCA.
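
The same projection works for any number of features and components. The sketch below uses a small hypothetical dataset with three features and keeps two components; it is meant to show the shapes involved rather than to reproduce the numbers in the hand-worked example.

```python
import numpy as np

# Hypothetical data: 5 samples, 3 features.
X = np.array([[2.0, 0.5, 1.0],
              [1.0, 1.5, 0.0],
              [3.0, 2.5, 2.0],
              [4.0, 3.0, 1.5],
              [5.0, 4.5, 3.0]])

# Standardize, then decompose the covariance matrix.
Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
eigvals, eigvecs = np.linalg.eigh(np.cov(Z, rowvar=False))
order = np.argsort(eigvals)[::-1]   # largest eigenvalue first

# Transformation matrix W: top-k eigenvectors stacked as columns.
k = 2
W = eigvecs[:, order[:k]]           # shape (3, 2)

# Project the standardized data onto the selected components.
X_reduced = Z @ W                   # shape (5, 2)

print("W shape:", W.shape)
print("X_reduced shape:", X_reduced.shape)
```

Each column of `X_reduced` has a sample variance equal to the corresponding eigenvalue, which is a convenient sanity check when implementing the projection by hand.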

4. What Are the Advantages of PCA?

PCA offers several advantages that make it a valuable tool in machine learning and data analysis. These advantages include dimensionality reduction, noise reduction, feature extraction, and improved model performance.

- Dimensionality Reduction: PCA reduces the number of features in a dataset while retaining the most important information. This simplifies the data and makes it easier to visualize and analyze.

- Noise Reduction: By focusing on the principal components that capture the most variance, PCA can effectively filter out noise and irrelevant information in the data.

- Feature Extraction: PCA creates a new set of features that are linear combinations of the original features. These new features can be more informative and improve the performance of machine learning models.

- Improved Model Performance: Reducing the dimensionality of the data can help prevent overfitting and improve the generalization ability of machine learning models.

PCA’s ability to simplify complex datasets while preserving essential information makes it an invaluable tool for various applications.
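
The noise-reduction effect can be illustrated with synthetic data. In the sketch below, a 2-dimensional signal is embedded in 20 features and Gaussian noise is added; reconstructing from the top two components should bring the data closer to the clean signal. All sizes and the noise level are assumptions made for this demonstration, and the components are obtained from an SVD of the centered data, which yields the same directions as the eigendecomposition of the covariance matrix.

```python
import numpy as np

rng = np.random.default_rng(42)
n, d, k = 500, 20, 2

# Clean signal lives in a k-dimensional subspace of the d features.
latent = rng.normal(size=(n, k))
mixing = rng.normal(size=(k, d))
clean = latent @ mixing
noisy = clean + 0.5 * rng.normal(size=(n, d))

# Center the data; rows of Vt are the principal directions.
mean = noisy.mean(axis=0)
centered = noisy - mean
U, S, Vt = np.linalg.svd(centered, full_matrices=False)
W = Vt[:k].T                                  # shape (d, k)

# Project onto the top-k components and map back to the original space.
denoised = centered @ W @ W.T + mean

err_noisy = np.mean((noisy - clean) ** 2)
err_denoised = np.mean((denoised - clean) ** 2)
print("MSE before:", err_noisy)
print("MSE after: ", err_denoised)
```

The features here share one scale, so the sketch only centers the data. The improvement depends on the signal really being low-dimensional; if the discarded components carry signal as well as noise, the reconstruction loses information too.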

5. What Are the Disadvantages of PCA?

Despite its advantages, PCA has certain limitations that must be considered when applying it to a dataset. These disadvantages include information loss, interpretability issues, and sensitivity to data scaling.

- Information Loss: Dimensionality reduction inevitably leads to some loss of information. While PCA retains the most important variance, some details may be lost in the process.

- Interpretability Issues: The principal components are linear combinations of the original features, which can make them difficult to interpret. It may not be clear which original features contribute most to each principal component.

- Sensitivity to Data Scaling: PCA is sensitive to the scaling of the features. If the features are not standardized, those with larger values can dominate the analysis, leading to biased results.

- Linearity Assumption: PCA assumes that the relationships between features are linear. If the relationships are non-linear, PCA may not be effective in capturing the underlying structure of the data.

Understanding these limitations is crucial for applying PCA effectively and interpreting the results correctly.
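
The scaling sensitivity is easy to demonstrate. In this sketch, two correlated synthetic features are generated and one of them is expressed in much larger units (the factor of 1000 is an arbitrary assumption). Without standardization the first component points almost entirely along the large-unit feature; after standardization both features contribute equally.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 300
a = rng.normal(size=n)
b = 0.6 * a + 0.8 * rng.normal(size=n)

# Second feature expressed in much larger units than the first.
X = np.column_stack([a, 1000.0 * b])

def first_component(data):
    """Eigenvector of the covariance matrix with the largest eigenvalue."""
    eigvals, eigvecs = np.linalg.eigh(np.cov(data, rowvar=False))
    return eigvecs[:, -1]   # eigh sorts ascending, so the last column is the largest

pc1_raw = first_component(X - X.mean(axis=0))
Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
pc1_std = first_component(Z)

print("PC1 without scaling:", np.round(pc1_raw, 4))
print("PC1 with scaling:   ", np.round(pc1_std, 4))
```

For two standardized features the first component always has loadings of equal magnitude, about 0.707 each, so neither feature dominates simply because of its units.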

6. What Are the Assumptions of PCA?

PCA relies on several key assumptions about the data. Understanding these assumptions is essential to ensure that PCA is applied appropriately and that the results are meaningful.

- Linearity: PCA assumes that the relationships between the original variables are linear. In other words, it seeks to find principal components that are linear combinations of the original features.

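- Gaussian Distribution: PCA does not strictly require normally distributed data, but it relies only on means and covariances, so it describes the data most completely when the data is approximately Gaussian. If the data deviates significantly from a normal distribution, structure beyond second-order statistics is ignored and the performance of PCA may be suboptimal.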

- Variance: PCA assumes that the variables with higher variance are more important. It seeks to capture the maximum variance in the data with the fewest components.

- Orthogonality: PCA constrains the principal components to be orthogonal to each other, which makes the data projected onto them uncorrelated. This ensures that each component captures a unique aspect of the data.

Violating these assumptions can lead to suboptimal results and misinterpretations of the principal components.
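
The orthogonality property can be verified directly. The sketch below builds a hypothetical correlated dataset, computes all components, and checks that the component vectors are orthonormal and that the projected data has a diagonal covariance matrix.

```python
import numpy as np

rng = np.random.default_rng(7)

# Hypothetical correlated data: independent sources mixed by a fixed matrix.
mixing = np.array([[1.0, 0.5, 0.2],
                   [0.0, 1.0, 0.7],
                   [0.0, 0.0, 1.0]])
X = rng.normal(size=(400, 3)) @ mixing

Xc = X - X.mean(axis=0)
eigvals, W = np.linalg.eigh(np.cov(Xc, rowvar=False))

# Project onto all components.
scores = Xc @ W

gram = W.T @ W                          # identity if components are orthonormal
score_cov = np.cov(scores, rowvar=False)  # diagonal if scores are uncorrelated

print(np.round(gram, 6))
print(np.round(score_cov, 6))
```

The diagonal of `score_cov` reproduces the eigenvalues, while the off-diagonal entries are zero up to floating-point error. Uncorrelated does not mean independent, which is one reason non-Gaussian data may call for other methods.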

7. What Are Some Real-World Applications of PCA in Machine Learning?

PCA is applied in numerous real-world scenarios across various industries. Its ability to reduce dimensionality, extract features, and improve model performance makes it a valuable tool for diverse applications.

- Image Compression: PCA is used to compress images by storing only the leading principal components instead of every pixel value, which retains the most important visual features. This is useful for storing and transmitting images more efficiently.

- Face Recognition: PCA is applied to reduce the dimensionality of facial images, making it easier to identify and classify faces. This is used in security systems, social media platforms, and other applications.

- Bioinformatics: PCA is used to analyze gene expression data, identify patterns, and reduce the dimensionality of complex biological datasets. This helps in understanding disease mechanisms and developing new treatments.

- Finance: PCA is used to analyze financial data, identify trends, and reduce the dimensionality of portfolios. This helps in risk management, asset allocation, and investment strategies.

- Environmental Science: PCA is applied to analyze environmental data, identify pollution sources, and reduce the dimensionality of complex environmental datasets. This helps in monitoring and managing environmental quality.

These are just a few examples of the many real-world applications of PCA in machine learning and data analysis.

Alt Text: Visual representation of principal components overlaid on a scatterplot, demonstrating how PCA identifies the directions of maximum variance in the data.

8. How Can PCA Be Used for Feature Extraction?

PCA can be effectively used for feature extraction by creating a new set of features that are linear combinations of the original features. These new features, called principal components, capture the most important information in the data.

Steps to Use PCA for Feature Extraction:

- Apply PCA: Apply PCA to the dataset to obtain the principal components.

- Select Components: Select the top k principal components that capture a sufficiently high percentage of the total variance.

- Transform Data: Transform the original data by projecting it onto the selected principal components.

- Use New Features: Use the transformed data as the new set of features for machine learning models.
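
With scikit-learn, these steps can be combined into a single pipeline. This is a sketch under a few assumptions: the Iris dataset bundled with scikit-learn stands in for your data, a 95% variance target is used, and `svd_solver="full"` is set explicitly because a fractional `n_components` is documented for that solver.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Example data: 150 samples, 4 features.
X, y = load_iris(return_X_y=True)

# Standardize, then keep enough components to explain 95% of the variance.
pipeline = make_pipeline(
    StandardScaler(),
    PCA(n_components=0.95, svd_solver="full"),
)
X_features = pipeline.fit_transform(X)

# make_pipeline names each step after its lowercased class name.
pca = pipeline.named_steps["pca"]
print("new feature matrix shape:", X_features.shape)
print("cumulative explained variance:", pca.explained_variance_ratio_.cumsum())
```

`X_features` can then be passed to any downstream model in place of the original columns. When evaluating a model, fit the pipeline on the training split only and apply `transform` to the test split so that no information leaks from test data into the components.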

Benefits of Using PCA for Feature Extraction:

- Dimensionality Reduction: Reduces the number of features, simplifying the data and making it easier to analyze.

- Noise Reduction: Filters out noise and irrelevant information, improving the signal-to-noise ratio.

- Improved Model Performance: Prevents overfitting and improves the generalization ability of machine learning models.

- Feature Importance: Provides insights into the importance of the original features by examining their contributions to the principal components.

By extracting the most important information from the original features, PCA can improve the performance of machine learning models, although the resulting components are often harder to interpret than the original features.

9. What Are the Alternatives to PCA?

While PCA is a powerful technique for dimensionality reduction, it is not always the best choice for every dataset. There are several alternatives to PCA that may be more appropriate in certain situations.

- Linear Discriminant Analysis (LDA): LDA is a supervised dimensionality reduction technique that aims to find the best linear combination of features to separate different classes. It is useful when the goal is to maximize the separation between classes.

- t-Distributed Stochastic Neighbor Embedding (t-SNE): t-SNE is a non-linear dimensionality reduction technique that is particularly well-suited for visualizing high-dimensional data in low-dimensional space. It focuses on preserving the local structure of the data.

- Autoencoders: Autoencoders are neural networks that can be trained to reconstruct the original data from a reduced-dimensional representation. They can capture non-linear relationships in the data and are useful for both dimensionality reduction and feature extraction.

- Independent Component Analysis (ICA): ICA is a technique that aims to separate a multivariate signal into additive subcomponents that are statistically independent. It is useful when the goal is to identify independent sources in the data.

Each of these alternatives has its own strengths and weaknesses, and the best choice depends on the specific characteristics of the dataset and the goals of the analysis.

10. FAQ about PCA in Machine Learning

Here are some frequently asked questions about Principal Component Analysis (PCA) in machine learning:

- What is PCA?

  - PCA is a dimensionality reduction technique that transforms high-dimensional data into a new set of variables, called principal components, which capture the most significant variance in the original data.

- Why use PCA?

  - PCA is used for dimensionality reduction, noise reduction, feature extraction, and improving the performance of machine learning models.

- How does PCA work?

  - PCA works by identifying the principal components of a dataset, which are orthogonal axes that capture the maximum variance in the data.

- What are the key steps to perform PCA?

  - The key steps include data standardization, covariance matrix computation, eigenvalue decomposition, principal components selection, and data transformation.

- What are the advantages of PCA?

  - The advantages include dimensionality reduction, noise reduction, feature extraction, and improved model performance.

- What are the disadvantages of PCA?

  - The disadvantages include information loss, interpretability issues, and sensitivity to data scaling.

- What are the assumptions of PCA?

  - The assumptions include linearity, Gaussian distribution, variance, and orthogonality.

- What are some real-world applications of PCA?

  - Real-world applications include image compression, face recognition, bioinformatics, finance, and environmental science.

- How can PCA be used for feature extraction?

  - PCA can be used for feature extraction by creating a new set of features that are linear combinations of the original features.

- What are the alternatives to PCA?

  - Alternatives include Linear Discriminant Analysis (LDA), t-Distributed Stochastic Neighbor Embedding (t-SNE), Autoencoders, and Independent Component Analysis (ICA).
