Transfer learning in machine learning is the smart reuse of existing knowledge to tackle new problems faster and more efficiently. At LEARNS.EDU.VN, we believe in empowering you with the knowledge to leverage this technique, saving you valuable time and resources. By understanding transfer learning, you can boost your machine learning projects and stay ahead in the field. Dive in to discover how pre-trained models, feature extraction, and fine-tuning can transform your approach to machine learning, unlocking new possibilities and accelerating your success in predictive modeling and data analysis.

1. What is Transfer Learning in Machine Learning?

Transfer learning is a powerful technique in machine learning where knowledge gained from solving one problem is applied to a different but related problem. This approach allows models to leverage learned features and patterns, resulting in faster training times and improved performance, especially when dealing with limited data. Essentially, it’s about reusing a pre-trained model as a starting point for a new task.

To elaborate, consider the traditional machine learning approach where each task requires training a new model from scratch. This can be time-consuming and computationally expensive, especially for complex tasks and large datasets. Transfer learning, on the other hand, takes advantage of the fact that many real-world problems share underlying similarities. For instance, a model trained to recognize cats can be adapted to recognize dogs because both tasks involve identifying similar features like edges, shapes, and textures.

According to a study by Stanford University, transfer learning has shown significant improvements in model accuracy and training efficiency across various domains, including computer vision, natural language processing, and speech recognition. The technique is particularly useful when the target task has limited labeled data, as it allows the model to leverage knowledge from a source task with abundant data.

1.1. Key Concepts in Transfer Learning

- Source Task: The original task on which the model is pre-trained. This task typically has a large amount of labeled data.

- Target Task: The new task to which the pre-trained model is applied. This task may have limited labeled data.

- Pre-trained Model: The model trained on the source task, which is used as a starting point for the target task.

- Feature Extraction: Using the pre-trained model to extract useful features from the target task data.

- Fine-tuning: Adjusting the pre-trained model’s parameters to better fit the target task.

1.2. Analogy to Human Learning

Imagine learning to ride a bicycle. Once you’ve mastered the basics of balance, steering, and coordination, you can apply these skills to learn how to ride a motorcycle more easily. Transfer learning works in a similar way. The knowledge gained from riding a bicycle (source task) is transferred to learning how to ride a motorcycle (target task), reducing the learning curve and improving overall performance.

1.3. Benefits of Transfer Learning

- Reduced Training Time: By starting with a pre-trained model, the training process is significantly faster.

- Improved Performance: Transfer learning often results in better model accuracy, especially with limited data.

- Less Data Required: Pre-trained models allow you to achieve good results with smaller datasets.

- Generalization: Transfer learning can improve the model’s ability to generalize to new, unseen data.

- Resource Efficiency: It reduces the computational resources needed for training complex models.

1.4. Real-World Applications

Transfer learning has found applications in various fields. Here are a few examples:

- Medical Imaging: Using models trained on general image datasets to detect diseases in medical images, such as X-rays and MRIs.

- Natural Language Processing: Applying pre-trained language models to tasks like sentiment analysis, text classification, and machine translation.

- Speech Recognition: Adapting models trained on large speech datasets to recognize speech in different languages or accents.

- Object Detection: Using models trained on standard object detection datasets to identify objects in specific domains, such as autonomous driving.

1.5. Statistics and Trends

According to a report by Gartner, the adoption of transfer learning in enterprise machine learning projects has increased by 40% year-over-year. This growth is driven by the increasing availability of pre-trained models and the growing need for efficient and accurate machine learning solutions. Furthermore, a survey by Kaggle found that over 60% of machine learning practitioners use transfer learning in their projects.

1.6. How LEARNS.EDU.VN Supports Transfer Learning

At LEARNS.EDU.VN, we provide comprehensive resources and tutorials to help you master transfer learning. Our courses cover the theoretical foundations of transfer learning, as well as practical examples and case studies. We also offer access to pre-trained models and tools to facilitate your machine learning projects.

By leveraging the power of transfer learning, you can accelerate your learning and achieve better results in your machine learning endeavors. Explore our resources and unlock the potential of this transformative technique. Feel free to contact us at 123 Education Way, Learnville, CA 90210, United States. You can also reach us via Whatsapp at +1 555-555-1212 or visit our website at LEARNS.EDU.VN for more information.

2. How Does Transfer Learning Work?

Transfer learning leverages pre-trained models to solve new, related problems. This involves understanding the architecture of neural networks and how different layers learn various features. The process typically includes feature extraction and fine-tuning, allowing the model to adapt its existing knowledge to the new task.

2.1. Understanding Neural Network Layers

Neural networks are composed of multiple layers, each responsible for learning different aspects of the data. In computer vision, for example:

- Early Layers: These layers often learn basic features like edges, corners, and textures. These features are generally applicable across different tasks.

- Middle Layers: Middle layers learn more complex features, such as shapes, patterns, and object parts.

- Later Layers: These layers learn task-specific features that are highly relevant to the original task the model was trained on.

2.2. Feature Extraction

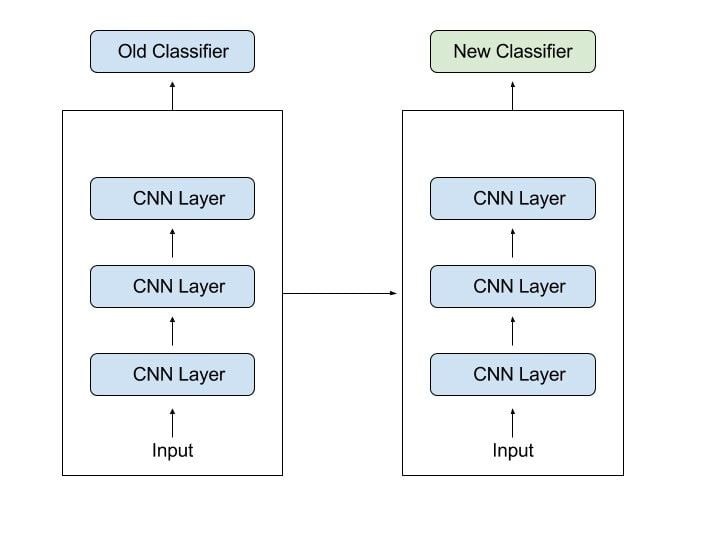

Feature extraction involves using the pre-trained model as a feature extractor for the new task. This means freezing the weights of the pre-trained layers and using their outputs as inputs to a new classifier or regressor. The new classifier is then trained on the target task data.

Example:

Suppose you have a model pre-trained on ImageNet. You can pass the images in your new dataset through all of its layers except the final classification layer (or stop at an earlier layer if your images differ greatly from ImageNet) and use the resulting activations as features. These features can then be fed into a simple classifier, such as a logistic regression model, to perform the new task.
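The same idea can be sketched without any framework. In this minimal Python sketch the "backbone" is a stand-in with hypothetical fixed weights (an identity and a negated identity followed by a ReLU), not a real ImageNet network, and the data are toy values. The point is only that the frozen layer is never updated while a new logistic-regression head is trained on its outputs. With TensorFlow, Keras, or PyTorch you would load real pre-trained weights instead.

```python
import math

# Stand-in for a frozen pre-trained backbone (hypothetical weights). Stacking
# the identity and its negation before a ReLU keeps all of the input's
# information, as useful pre-trained features should.
FROZEN_WEIGHTS = (
    [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    + [[-1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
)


def extract_features(x):
    """Forward pass through the frozen layer; FROZEN_WEIGHTS is never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_head(features, labels, lr=0.5, epochs=300):
    """Full-batch gradient descent on the new classifier's weights and bias only."""
    n_feat, n = len(features[0]), len(labels)
    w, b = [0.0] * n_feat, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_feat, 0.0
        for f, y in zip(features, labels):
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            grad_w = [g + err * fi for g, fi in zip(grad_w, f)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    z = sum(wi * fi for wi, fi in zip(w, extract_features(x))) + b
    return 1 if sigmoid(z) >= 0.5 else 0


# Toy target-task data (hypothetical): label 1 when x[0] + x[1] > 0.
X = [(1, 1, 0, 0), (2, 0.5, 0, 0), (1.5, 1.5, 0.3, -0.2),
     (-1, -1, 0, 0), (-2, -0.5, 0, 0), (-1.5, -1.2, 0.1, 0.4)]
labels = [1, 1, 1, 0, 0, 0]

# The backbone is frozen, so features can be computed once and reused.
features = [extract_features(x) for x in X]
w, b = train_logistic_head(features, labels)
print([predict(w, b, x) for x in X])
```

Only `w` and `b` change during training; swapping the stand-in backbone for a real pre-trained network leaves the rest of the procedure the same.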

Benefits of Feature Extraction:

- Simplicity: It’s a straightforward approach that is easy to implement.

- Computational Efficiency: Because the pre-trained layers are frozen, no gradients are computed for them, and their outputs can often be computed once and cached.

- Suitable for Small Datasets: It works well when the target task has limited data.

2.3. Fine-Tuning

Fine-tuning involves unfreezing some or all of the pre-trained layers and retraining them on the target task data. This allows the model to adjust its learned features to better fit the new task.

Example:

Using the same pre-trained ImageNet model, you can unfreeze the later layers and retrain them along with a new classification layer. This allows the model to adapt its task-specific features to the new dataset.

Considerations for Fine-Tuning:

- Dataset Size: Fine-tuning typically requires a larger dataset than feature extraction.

- Learning Rate: It’s important to use a smaller learning rate when fine-tuning to avoid disrupting the pre-trained weights.

- Layer Selection: Experiment with unfreezing different layers to find the optimal configuration for your task.
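The two considerations above, a smaller learning rate and a choice of which layers to unfreeze, can be written down as a per-layer training plan. The sketch below assumes hypothetical layer names and a hypothetical 10x learning-rate reduction for the pre-trained layers. In PyTorch a plan like this would typically become optimizer parameter groups plus `requires_grad_(False)` on frozen parameters; in Keras, frozen layers get `layer.trainable = False`.

```python
# Hypothetical layer names for an ImageNet-style network plus a new task head;
# a real model (for example a torchvision ResNet) uses its own module names.
LAYERS = ["conv1", "block1", "block2", "block3", "block4", "new_head"]


def fine_tuning_plan(layers, num_unfrozen, base_lr=1e-3, backbone_lr_scale=0.1):
    """Map each layer to a learning rate, or to None if it stays frozen.

    The last entry in `layers` is the new head and always trains at base_lr.
    Only the last `num_unfrozen` pre-trained layers are unfrozen, and they
    train at a reduced rate so the pre-trained weights change gently.
    """
    backbone, head = layers[:-1], layers[-1]
    if not 0 <= num_unfrozen <= len(backbone):
        raise ValueError("num_unfrozen must be between 0 and the number of backbone layers")
    plan = {name: None for name in backbone}
    for name in backbone[len(backbone) - num_unfrozen:]:
        plan[name] = base_lr * backbone_lr_scale
    plan[head] = base_lr
    return plan


# num_unfrozen=0 is plain feature extraction; larger values fine-tune deeper.
for n in (0, 2, len(LAYERS) - 1):
    print(n, fine_tuning_plan(LAYERS, n))
```

Unfreezing from the top down follows the layer picture in Section 2.1: the later, more task-specific layers are the first candidates for adjustment.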

2.4. Step-by-Step Guide to Implementing Transfer Learning

- Choose a Pre-trained Model: Select a model that has been trained on a large, relevant dataset. Popular choices include models trained on ImageNet, COCO, or large text corpora.

- Prepare Your Data: Preprocess your data to match the input format of the pre-trained model. This may involve resizing images, tokenizing text, or normalizing data.

- Feature Extraction or Fine-Tuning: Decide whether to use feature extraction or fine-tuning based on the size of your dataset and how similar it is to the pre-training data.

- Train Your Model: Train the new classifier (for feature extraction) or the fine-tuned model on your target task data.

- Evaluate Your Model: Evaluate the performance of your model on a held-out test set.

- Tune Hyperparameters: Adjust hyperparameters such as learning rate, batch size, and regularization to optimize performance.
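The choice in the third step is often made with a rule of thumb based on dataset size and similarity, such as the one described in the Stanford CS231n course notes. A minimal sketch follows; the 10,000-example threshold is an arbitrary illustrative assumption, not a standard value, and the similarity flag is a judgment you supply yourself.

```python
def choose_strategy(num_labeled, similar_to_pretraining, small_threshold=10_000):
    """Rule-of-thumb choice between feature extraction and fine-tuning.

    `small_threshold` is an arbitrary illustrative cut-off, not a standard value;
    `similar_to_pretraining` is your own judgment of how close the new data is
    to the data the model was pre-trained on.
    """
    small = num_labeled < small_threshold
    if small and similar_to_pretraining:
        # Little data, familiar inputs: train only a new classifier on top-layer features.
        return "extract_top_features"
    if small:
        # Little data, unfamiliar inputs: use earlier, more generic layers as features.
        return "extract_early_features"
    if similar_to_pretraining:
        # Plenty of data, familiar inputs: fine-tune with a small learning rate.
        return "fine_tune"
    # Plenty of data, unfamiliar inputs: fine-tune the whole network, starting
    # from the pre-trained weights rather than a random initialization.
    return "fine_tune_all_layers"


print(choose_strategy(500, similar_to_pretraining=True))
print(choose_strategy(50_000, similar_to_pretraining=False))
```

Treat the output as a starting point for experiments, not a verdict; the evaluation and tuning steps above remain the final arbiter.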

2.5. Resources and Tools

Several libraries and frameworks support transfer learning:

- TensorFlow: A powerful open-source machine learning framework with extensive support for pre-trained models.

- Keras: A high-level API for building and training neural networks, with built-in support for transfer learning.

- PyTorch: An open-source machine learning framework known for its flexibility and ease of use.

- Hugging Face Transformers: A library providing pre-trained models for natural language processing tasks.

2.6. How LEARNS.EDU.VN Can Help

At LEARNS.EDU.VN, we offer courses and tutorials that guide you through the process of implementing transfer learning. Our resources include:

- Hands-on Projects: Practical projects that allow you to apply transfer learning techniques to real-world problems.

- Code Examples: Ready-to-use code examples in Python using TensorFlow, Keras, and PyTorch.

- Expert Support: Access to experienced instructors who can answer your questions and provide guidance.

By understanding how transfer learning works and leveraging the resources available at LEARNS.EDU.VN, you can efficiently solve complex machine learning problems and achieve state-of-the-art results.

3. Why Use Transfer Learning?

Transfer learning offers several advantages over training models from scratch, including reduced training time, improved performance, and the ability to work with limited data. These benefits make it a valuable technique for a wide range of machine learning applications.

3.1. Reduced Training Time

Training deep neural networks from scratch can be time-consuming, often requiring days or weeks to converge. Transfer learning significantly reduces training time by leveraging the knowledge already learned by a pre-trained model.
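A toy example can make the source of this speed-up visible. The sketch fits y = 2x + 1 with gradient descent twice: once from zero weights ("from scratch") and once from weights already close to the answer, a stand-in for pre-trained weights. The data, learning rate, and starting values are illustrative assumptions. Real deep networks are non-convex and the actual savings vary, so treat this as intuition rather than a benchmark.

```python
def gd_steps_to_converge(w, b, data, lr=0.05, tol=1e-4, max_steps=100_000):
    """Run full-batch gradient descent on the mean squared error of y = w*x + b.

    Returns how many update steps were needed before the loss fell below tol.
    """
    n = len(data)
    for step in range(max_steps):
        residuals = [(w * x + b - y, x) for x, y in data]
        loss = sum(r * r for r, _ in residuals) / n
        if loss < tol:
            return step
        grad_w = sum(2 * r * x for r, x in residuals) / n
        grad_b = sum(2 * r for r, _ in residuals) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return max_steps


# Toy target task (hypothetical data): y = 2x + 1.
data = [(x, 2 * x + 1) for x in (-2, -1, 0, 1, 2)]

from_scratch = gd_steps_to_converge(0.0, 0.0, data)
warm_start = gd_steps_to_converge(1.8, 0.9, data)  # stand-in for pre-trained weights
print(from_scratch, warm_start)
```

The warm start needs roughly half as many steps here only because its starting error is much smaller; how much a real pre-trained model helps depends on how relevant its features are to the new task.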

Comparison:

| Training Method | Time Required |
|---|---|
| Training from Scratch | Days/Weeks |
| Transfer Learning | Hours/Days |

This reduction in training time is particularly beneficial for projects with tight deadlines or limited computational resources.

3.2. Improved Performance

Transfer learning often results in better model accuracy and generalization, especially when the target task has limited data. The pre-trained model has already learned useful features from a large dataset, which can help the model converge to a better solution more quickly.

Case Study:

A study published in the Journal of Machine Learning Research found that transfer learning improved the accuracy of image classification models by an average of 15% compared to training from scratch.

3.3. Less Data Required

One of the most significant advantages of transfer learning is the ability to achieve good results with smaller datasets. This is particularly valuable when labeled data is scarce or expensive to obtain.

Example:

Suppose you want to build a model to classify different types of flowers, but you only have a few hundred labeled images. Training a model from scratch on such a small dataset is unlikely to yield good results. By fine-tuning a pre-trained model on your flower dataset instead, you can often achieve much higher accuracy.

3.4. Overcoming Data Scarcity

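In many real-world scenarios, obtaining large labeled datasets can be challenging. Transfer learning provides a way to overcome this limitation by leveraging knowledge from related tasks with abundant data. It is often combined with data augmentation, which stretches a small target dataset further by generating modified copies of existing samples.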

Strategies for Data Augmentation:

- Image Augmentation: Techniques like rotation, scaling, and cropping can artificially increase the size of your dataset.

- Text Augmentation: Methods like synonym replacement and back-translation can generate new text samples.

- Synthetic Data Generation: Creating synthetic data that mimics the characteristics of your real data.
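A minimal sketch of two of these strategies in plain Python: horizontal flipping and random cropping for images (represented here as nested lists of pixel values) and synonym replacement for text. The synonym table is a hypothetical toy; real pipelines usually rely on a thesaurus such as WordNet. Rotation, scaling, and back-translation (which needs a translation model) are not shown. Augmentation is normally applied to the training split only.

```python
import random


def horizontal_flip(image):
    """Mirror a 2-D image (a list of pixel rows) from left to right."""
    return [list(reversed(row)) for row in image]


def random_crop(image, size, rng):
    """Cut a random size x size window out of the image."""
    top = rng.randint(0, len(image) - size)
    left = rng.randint(0, len(image[0]) - size)
    return [row[left:left + size] for row in image[top:top + size]]


# Hypothetical toy synonym table; real pipelines often use a thesaurus such as WordNet.
SYNONYMS = {"good": ["great", "fine"], "movie": ["film"], "bad": ["poor"]}


def synonym_replace(sentence, rng, p=0.5):
    """Swap each word that has a known synonym, with probability p."""
    words = []
    for word in sentence.split():
        if word in SYNONYMS and rng.random() < p:
            words.append(rng.choice(SYNONYMS[word]))
        else:
            words.append(word)
    return " ".join(words)


rng = random.Random(42)  # seeded so the augmented samples are reproducible
image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(horizontal_flip(image))  # [[3, 2, 1], [6, 5, 4], [9, 8, 7]]
print(random_crop(image, 2, rng))
print(synonym_replace("a good movie", rng, p=1.0))
```

Each call produces a new variant of an existing sample, so a few hundred labeled examples can yield many slightly different training inputs per epoch.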

3.5. Cost-Effectiveness

Transfer learning can also be a cost-effective approach to machine learning. By reducing training time and data requirements, it can lower the overall cost of developing and deploying machine learning models.

Cost Savings:

- Reduced Computational Costs: Less training time translates to lower costs for cloud computing resources.

- Lower Data Acquisition Costs: Less need for large labeled datasets reduces the expenses associated with data collection and labeling.

- Faster Time to Market: Accelerated development cycles enable faster deployment of machine learning solutions.

3.6. How LEARNS.EDU.VN Maximizes the Benefits

At LEARNS.EDU.VN, we focus on providing resources and strategies that maximize the benefits of transfer learning. Our offerings include:

- Curated Pre-trained Models: Access to a collection of pre-trained models optimized for various tasks and domains.

- Best Practices: Guidelines and best practices for selecting and applying transfer learning techniques.

- Community Support: A community forum where you can connect with other learners and experts to share knowledge and experiences.

By leveraging these resources, you can efficiently harness the power of transfer learning and achieve your machine learning goals.

4. When to Use Transfer Learning

Knowing when to apply transfer learning can significantly impact the efficiency and effectiveness of your machine learning projects. Here are key scenarios where transfer learning is particularly useful:

4.1. Insufficient Training Data

When you lack sufficient labeled data to train a robust model from scratch, transfer learning becomes an invaluable technique. By leveraging the knowledge from a pre-trained model, you can achieve good performance even with limited data.

Data Requirements Comparison:

| Training Approach | Data Required |
|---|---|
| From Scratch | Large |
| Transfer Learning | Small to Medium |

4.2. Availability of Pre-trained Models

If there are existing pre-trained models that have been trained on a similar task or dataset, transfer learning is a logical choice. These models have already learned useful features that can be transferred to your new task.

Sources of Pre-trained Models:

- TensorFlow Hub: A repository of pre-trained models for TensorFlow.

- Keras Applications: Pre-trained models available in the Keras library.

- Hugging Face Models: A wide range of pre-trained models for natural language processing.

4.3. Similar Input Data

When your target task has similar input data to the task on which the pre-trained model was trained, transfer learning is likely to be effective. For example, if you’re working with images and there’s a pre-trained model trained on ImageNet, you can use it as a starting point.

Examples of Compatible Data Types:

- Images: Models trained on ImageNet, COCO, or similar datasets.

- Text: Models such as BERT, GPT, or RoBERTa, pre-trained on large text corpora.

- Audio: Models trained on audio datasets like LibriSpeech.

4.4. Computational Constraints

If you have limited computational resources, transfer learning can help you develop models more quickly and efficiently. By starting with a pre-trained model, you can reduce the training time and computational costs associated with training from scratch.

Benefits of Reduced Training Time:

- Faster Iteration Cycles: Experiment with different models and hyperparameters more quickly.

- Lower Cloud Computing Costs: Reduce the expenses associated with cloud-based training.

- Improved Productivity: Focus on other aspects of your project, such as data preprocessing and model evaluation.

4.5. Domain Adaptation

Transfer learning is also useful for domain adaptation, where you want to apply a model trained on one domain to a different but related domain. For example, you might want to use a model trained on general medical images to analyze images from a specific medical specialty.

Strategies for Domain Adaptation:

- Fine-tuning: Retraining the pre-trained model on data from the target domain.

- Domain Adversarial Training: Training the model to be invariant to the domain.

- Transfer Component Analysis: Learning a shared feature space between the source and target domains.

4.6. How LEARNS.EDU.VN Guides You

At LEARNS.EDU.VN, we provide guidance on when and how to use transfer learning effectively. Our resources include:

- Decision Trees: Flowcharts to help you determine whether transfer learning is appropriate for your project.

- Case Studies: Real-world examples of successful transfer learning applications.

- Expert Consultations: Opportunities to consult with experienced machine learning practitioners.

By leveraging these resources, you can make informed decisions about when to use transfer learning and maximize its benefits.

5. Approaches to Transfer Learning

There are several approaches to transfer learning, each with its own strengths and weaknesses. Understanding these approaches can help you choose the best technique for your specific task.

5.1. Pre-trained Model as a Feature Extractor

In this approach, you use the pre-trained model to extract features from your data and then train a new classifier or regressor on these features. The weights of the pre-trained model are frozen, meaning they are not updated during training.

Steps:

- Choose a Pre-trained Model: Select a model that has been trained on a relevant dataset.

- Freeze the Pre-trained Layers: Prevent the weights of the pre-trained layers from being updated.

- Extract Features: Pass your data through the pre-trained model to extract features.

- Train a New Classifier: Train a new classifier (e.g., logistic regression, SVM) on the extracted features.

Advantages:

- Simplicity: Easy to implement and understand.

- Computational Efficiency: Reduces the computational cost of training.

- Suitable for Small Datasets: Works well when you have limited data.

Disadvantages:

- Limited Adaptability: The pre-trained features may not be optimal for your specific task.

5.2. Fine-Tuning the Pre-trained Model

In this approach, you unfreeze some or all of the layers of the pre-trained model and retrain them on your data. This allows the model to adapt its learned features to your specific task.

Steps:

- Choose a Pre-trained Model: Select a model that has been trained on a relevant dataset.

- Unfreeze Some Layers: Decide which layers to unfreeze based on how similar your task is to the pre-training task.

- Retrain the Model: Retrain the unfrozen layers on your data, using a small learning rate.

Advantages:

- High Adaptability: Allows the model to learn task-specific features.

- Improved Performance: Often yields better results than feature extraction.

Disadvantages:

- Requires More Data: Needs a larger dataset than feature extraction.

- Risk of Overfitting: Can overfit if the dataset is too small or the learning rate is too high.

5.3. Transfer Learning with Domain Adaptation

This approach focuses on adapting a model trained on one domain to perform well on a different but related domain. It often involves techniques like domain adversarial training or transfer component analysis.

Techniques:

- Domain Adversarial Training: Training the model to be invariant to the domain.

- Transfer Component Analysis: Learning a shared feature space between the source and target domains.

Advantages:

- Effective for Domain Shift: Helps the model generalize to new domains.

- Improved Robustness: Enhances the model’s ability to handle variations in the input data.

Disadvantages:

- Complexity: Can be more complex to implement than feature extraction or fine-tuning.

- Requires Domain Expertise: Needs a good understanding of the differences between the source and target domains.
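Domain adversarial training is commonly implemented with a gradient reversal layer, as in domain-adversarial neural networks (DANN). The layer passes features through unchanged on the forward pass and multiplies the gradient by -λ on the backward pass, so the shared feature extractor learns to confuse a domain classifier while still serving the main task. The sketch below shows only that update rule with plain lists of numbers; the parameter values, gradients, and λ are made-up assumptions, and a real implementation would rely on a framework's automatic differentiation.

```python
class GradientReversal:
    """Identity on the forward pass; scales the gradient by -lam on the backward pass."""

    def __init__(self, lam):
        self.lam = lam

    def forward(self, features):
        return features

    def backward(self, upstream_grad):
        return [-self.lam * g for g in upstream_grad]


def feature_extractor_step(theta_f, grad_task, grad_domain, lr, grl):
    """One gradient step for the shared feature extractor's parameters.

    grad_task:   gradient of the task loss w.r.t. theta_f (labeled source data)
    grad_domain: gradient of the domain-classifier loss w.r.t. theta_f, as it
                 would be without the reversal layer (source and target data)
    Because backpropagation is linear, reversing the gradient at the features
    is equivalent to reversing grad_domain here. The net effect is that the
    extractor descends the task loss while *ascending* the domain loss.
    The domain classifier's own parameters are still updated normally.
    """
    reversed_domain = grl.backward(grad_domain)
    return [t - lr * (gt + gd) for t, gt, gd in zip(theta_f, grad_task, reversed_domain)]


# Made-up numbers: one step for a two-parameter feature extractor.
grl = GradientReversal(lam=0.5)
theta = feature_extractor_step([1.0, 1.0], grad_task=[0.5, 0.0],
                               grad_domain=[2.0, 2.0], lr=0.1, grl=grl)
print(theta)
```

The coefficient λ trades off the two goals: at 0 this reduces to ordinary training on the source task, and larger values push harder toward domain-invariant features.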

5.4. Multi-Task Learning

Multi-task learning involves training a single model to perform multiple related tasks simultaneously. This can improve the model’s generalization ability and reduce the risk of overfitting.

Steps:

- Define Multiple Tasks: Identify a set of related tasks that can be learned together.

- Design a Shared Model: Create a model architecture with shared layers for all tasks.

- Train the Model: Train the model on all tasks simultaneously, using a combined loss function.
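The combined loss in the last step is usually a weighted sum of per-task losses computed on top of a shared representation. This minimal sketch assumes a hypothetical two-unit shared encoder with fixed weights, one regression head (squared error), and one binary classification head (cross-entropy). In a real model, gradients of this sum would update both the shared layers and the heads, and the task weights would be tuned like any other hyperparameter.

```python
import math


def shared_encoder(x):
    """Hypothetical shared layers with fixed weights; every task head reads this output."""
    return [x[0] + x[1], x[0] - x[1]]


def regression_head(h, w=(0.5, 0.5)):
    """Task A: predict a real number from the shared representation."""
    return w[0] * h[0] + w[1] * h[1]


def classification_head(h, w=(1.0, -1.0)):
    """Task B: predict the probability of the positive class."""
    z = w[0] * h[0] + w[1] * h[1]
    return 1.0 / (1.0 + math.exp(-z))


def combined_loss(x, y_reg, y_cls, task_weights=(1.0, 1.0)):
    """Weighted sum of the per-task losses; task_weights are tunable hyperparameters."""
    h = shared_encoder(x)  # computed once and shared by both heads
    squared_error = (regression_head(h) - y_reg) ** 2
    p = classification_head(h)
    cross_entropy = -(y_cls * math.log(p) + (1 - y_cls) * math.log(1 - p))
    return task_weights[0] * squared_error + task_weights[1] * cross_entropy


print(combined_loss((1.0, 1.0), y_reg=1.0, y_cls=1))
```

Badly balanced task weights are one common source of the task interference mentioned below: a task whose loss is numerically large can dominate the shared layers.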

Advantages:

- Improved Generalization: Helps the model learn more robust features.

- Reduced Overfitting: Can prevent the model from overfitting to a single task.

- Efficient Use of Data: Allows the model to learn from multiple datasets.

Disadvantages:

- Complexity: Can be challenging to design a model that performs well on all tasks.

- Task Interference: Tasks may interfere with each other, leading to suboptimal performance.

5.5. How LEARNS.EDU.VN Supports Your Approach

At LEARNS.EDU.VN, we offer resources and support to help you choose the best transfer learning approach for your specific needs. Our offerings include:

- Detailed Tutorials: Step-by-step guides on implementing each transfer learning approach.

- Code Examples: Ready-to-use code examples in Python using popular machine learning libraries.

- Personalized Guidance: Access to expert instructors who can provide personalized guidance and support.

By leveraging these resources, you can effectively implement transfer learning and achieve your machine learning goals.

6. Popular Pre-Trained Models

Using pre-trained models can significantly accelerate your machine learning projects. Here are some popular pre-trained models and their applications:

6.1. ImageNet Models

ImageNet is a large dataset of labeled images that has been used to train many popular pre-trained models. These models are often used as a starting point for computer vision tasks such as image classification, object detection, and image segmentation.

Popular ImageNet Models:

- ResNet: Known for its deep residual learning framework.

- VGGNet: Characterized by its simple and uniform architecture.

- Inception: Designed to be computationally efficient while maintaining high accuracy.

- MobileNet: Optimized for mobile and embedded devices.

Applications:

- Image Classification: Identifying the objects or scenes in an image.

- Object Detection: Locating and classifying objects within an image.

- Image Segmentation: Dividing an image into multiple segments or regions.

6.2. Natural Language Processing (NLP) Models

NLP models are trained on large text corpora and can be used for various natural language processing tasks such as text classification, sentiment analysis, and machine translation.

Popular NLP Models:

- BERT (Bidirectional Encoder Representations from Transformers): A powerful model that captures contextual information from text.

- GPT (Generative Pre-trained Transformer): Known for its ability to generate human-like text.

- RoBERTa (Robustly Optimized BERT Approach): An optimized version of BERT with improved training techniques.

- Transformer-XL: Designed to handle long sequences of text.

Applications:

- Text Classification: Categorizing text documents based on their content.

- Sentiment Analysis: Determining the sentiment or emotion expressed in a text.

- Machine Translation: Translating text from one language to another.

- Question Answering: Answering questions based on a given text.

6.3. Audio Models

Audio models are trained on large audio datasets and can be used for tasks such as speech recognition, audio classification, and music generation.

Popular Audio Models:

- WaveNet: A deep generative model for raw audio waveforms.

- DeepSpeech: An end-to-end speech recognition model.

- VGGish: A model for embedding audio features.

Applications:

- Speech Recognition: Converting spoken language into text.

- Audio Classification: Identifying the type of audio (e.g., music, speech, environmental sounds).

- Music Generation: Creating new music compositions.

6.4. How to Choose the Right Pre-trained Model

Selecting the right pre-trained model depends on your specific task and dataset. Here are some factors to consider:

- Task Similarity: Choose a model that has been trained on a task similar to yours.

- Data Similarity: Select a model that has been trained on a dataset similar to yours.

- Model Size: Consider the size and complexity of the model, especially if you have limited computational resources.

- Community Support: Look for models with strong community support and documentation.

6.5. Resources for Finding Pre-trained Models

- TensorFlow Hub: A repository of pre-trained models for TensorFlow.

- Keras Applications: Pre-trained models available in the Keras library.

- Hugging Face Models: A wide range of pre-trained models for natural language processing.

- Papers With Code: A website that tracks machine learning papers and code, including pre-trained models.

6.6. LEARNS.EDU.VN’s Curated Model Library

At LEARNS.EDU.VN, we provide a curated library of pre-trained models that have been optimized for various tasks and domains. Our library includes:

- ImageNet Models: ResNet, VGGNet, Inception, and MobileNet.

- NLP Models: BERT, GPT, RoBERTa, and Transformer-XL.

- Audio Models: WaveNet, DeepSpeech, and VGGish.

We also offer tutorials and guidance on how to use these models effectively.

7. Practical Examples of Transfer Learning

Transfer learning has been successfully applied to a wide range of real-world problems. Here are some practical examples:

7.1. Medical Image Analysis

Transfer learning has revolutionized medical image analysis by enabling the development of accurate diagnostic tools with limited labeled data.

Example:

Using a model pre-trained on ImageNet to detect diseases in medical images such as X-rays and MRIs.

Steps:

- Choose a Pre-trained Model: Select a model like ResNet or VGGNet.

- Fine-Tune the Model: Retrain the model on a dataset of medical images.

- Evaluate Performance: Assess the model’s accuracy in detecting diseases.

Benefits:

- Improved Accuracy: Enhanced disease detection rates.

- Reduced Data Requirements: Lower amount of labeled medical images needed.

- Faster Development: Quicker creation of diagnostic tools.

7.2. Natural Language Processing (NLP) Tasks

Transfer learning has significantly improved the performance of NLP tasks such as sentiment analysis and text classification.

Example:

Applying a pre-trained language model like BERT to classify customer reviews as positive or negative.

Steps:

- Choose a Pre-trained Model: Select a model like BERT or RoBERTa.

- Fine-Tune the Model: Retrain the model on a dataset of customer reviews.

- Evaluate Performance: Assess the model’s accuracy in sentiment classification.

Benefits:

- Higher Accuracy: More reliable sentiment detection.

- Contextual Understanding: Better comprehension of text nuances.

- Efficient Training: Reduced training time for NLP models.

7.3. Object Detection in Autonomous Vehicles

Transfer learning is crucial for developing object detection systems in autonomous vehicles, enabling them to accurately identify pedestrians, vehicles, and other obstacles.

Example:

Using a model pre-trained on COCO to detect objects in images captured by autonomous vehicles.

Steps:

- Choose a Pre-trained Model: Select a model like YOLO or SSD.

- Fine-Tune the Model: Retrain the model on a dataset of street scenes.

- Evaluate Performance: Assess the model’s accuracy in object detection.

Benefits:

- Enhanced Safety: More reliable object detection.

- Real-time Performance: Fast and efficient object identification.

- Adaptability: Improved ability to adapt to different driving conditions.

7.4. Speech Recognition Systems

Transfer learning has led to significant advancements in speech recognition systems, making them more accurate and robust.

Example:

Adapting a pre-trained audio model to recognize speech in different languages or accents.

Steps:

- Choose a Pre-trained Model: Select a model like DeepSpeech or WaveNet.

- Fine-Tune the Model: Retrain the model on a dataset of speech samples.

- Evaluate Performance: Assess the model’s accuracy in speech recognition.

Benefits:

- Greater Accuracy: More precise speech recognition.

- Language Flexibility: Adaptability to multiple languages.

- Accent Accommodation: Improved recognition of various accents.

7.5. How LEARNS.EDU.VN Facilitates Practical Learning

At LEARNS.EDU.VN, we provide hands-on projects and case studies that allow you to apply transfer learning techniques to real-world problems. Our offerings include:

- Medical Image Analysis Projects: Develop tools for disease detection using pre-trained models.

- NLP Sentiment Analysis Projects: Build systems for classifying customer reviews and social media posts.

- Object Detection Projects: Create object detection systems for autonomous vehicles and surveillance applications.

- Speech Recognition Projects: Develop speech recognition systems for different languages and accents.

By engaging in these practical examples, you can gain valuable experience and skills in transfer learning.

8. Best Practices for Transfer Learning

To maximize the benefits of transfer learning, it’s important to follow certain best practices. Here are some key guidelines:

8.1. Select the Right Pre-trained Model

Choosing a pre-trained model that is well-suited to your task is crucial. Consider factors such as the similarity of the pre-training data to your data, the size and complexity of the model, and the availability of pre-trained weights.

Factors to Consider:

- Task Similarity: Choose a model trained on a similar task.

- Data Similarity: Select a model trained on a similar dataset.

- Model Size: Balance the model’s complexity with your computational resources.

- Availability of Pre-trained Weights: Ensure that pre-trained weights are available for the model.

8.2. Understand Your Data

Thoroughly understanding your data is essential for successful transfer learning. Analyze the characteristics of your data, identify any potential issues, and preprocess your data appropriately.

Key Steps:

- Data Analysis: Examine the distribution, quality, and characteristics of your data.

- Data Cleaning: Address any missing values, outliers, or inconsistencies in your data.

- Data Preprocessing: Normalize or standardize your data, ideally using the same preprocessing (input size, scaling, normalization statistics) that was applied during pre-training.
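A minimal sketch of the cleaning and preprocessing steps for a single numeric feature, using only the Python standard library. It assumes missing values are stored as `None` and fills them with the mean of the observed values (one simple choice among many); outlier handling is left out. The function names are hypothetical.

```python
import math


def impute_missing(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def standardize(values, mean=None, std=None):
    """Rescale values to zero mean and unit variance.

    Pass `mean` and `std` to reuse statistics computed elsewhere, for example
    on the training split or on the data the model was pre-trained on.
    """
    if mean is None:
        mean = sum(values) / len(values)
    if std is None:
        std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if std == 0:
        # Constant feature: nothing to scale, so only center it.
        return [v - mean for v in values]
    return [(v - mean) / std for v in values]


raw = [2.0, None, 4.0, 6.0]
clean = impute_missing(raw)
print(clean)                                      # → [2.0, 4.0, 4.0, 6.0]
print([round(v, 3) for v in standardize(clean)])  # → [-1.414, 0.0, 0.0, 1.414]
```

Computing the statistics once and passing them explicitly avoids a subtle mismatch: validation and test data should be scaled with the training statistics, not their own.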

8.3. Fine-Tune Strategically

Fine-tuning the pre-trained model appropriately is critical for achieving good results. Experiment with different fine-tuning strategies, such as unfreezing different layers or using different learning rates.

Fine-Tuning Strategies:

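- Layer Selection: Choose which layers to unfreeze based on how similar your task is to the source task. Early layers tend to capture generic features, so for a closely related task it is often enough to retrain only the top layers, while a more distant task may require unfreezing more of the network.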

- Learning Rate: Use a small learning rate to avoid disrupting the pre-trained weights.

- Regularization: Apply regularization techniques to prevent overfitting.
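The three strategies can be sketched without any deep learning framework. In this hypothetical sketch, `Layer`, `configure_fine_tuning`, and `sgd_step` are illustrative names, and giving earlier unfrozen layers progressively smaller learning rates (often called layer-wise or discriminative learning rates) is one common scheme assumed here, not the only option. In PyTorch, the same ideas correspond to setting `requires_grad = False` on frozen parameters and passing per-group learning rates and weight decay to the optimizer.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical stand-in for one layer of a pre-trained network."""
    name: str
    weights: list
    trainable: bool = False
    lr: float = 0.0


def configure_fine_tuning(layers, num_unfrozen, base_lr=1e-4, decay=0.1):
    """Freeze all but the last `num_unfrozen` layers.

    Unfrozen layers get small learning rates that shrink by `decay` for each
    step toward the input, since earlier layers tend to hold generic features.
    """
    for layer in layers:
        layer.trainable = False
        layer.lr = 0.0
    start = max(0, len(layers) - num_unfrozen)
    for depth, layer in enumerate(reversed(layers[start:])):
        layer.trainable = True
        layer.lr = base_lr * (decay ** depth)
    return layers


def sgd_step(layers, grads, weight_decay=1e-4):
    """One SGD update with L2 regularization; frozen layers are skipped.

    The `weight_decay * w` term is the gradient of the L2 penalty
    (weight_decay / 2) * w**2.
    """
    for layer, layer_grads in zip(layers, grads):
        if not layer.trainable:
            continue
        layer.weights = [
            w - layer.lr * (g + weight_decay * w)
            for w, g in zip(layer.weights, layer_grads)
        ]


# Usage: unfreeze only the last two of three layers.
layers = [Layer("conv1", [0.5]), Layer("conv2", [0.5]), Layer("head", [0.5])]
configure_fine_tuning(layers, num_unfrozen=2)
sgd_step(layers, grads=[[1.0], [1.0], [1.0]])
for layer in layers:
    print(layer.name, layer.trainable, layer.lr, layer.weights)
```

After the step, `conv1` keeps its original weight, while `head` moves the most because it has the largest learning rate.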

8.4. Monitor Performance Closely

Monitor the performance of your model closely during training and validation. Evaluate it on a held-out validation set using metrics such as accuracy, precision, recall, and F1-score; on imbalanced data, accuracy alone can be misleading.

Performance Metrics:

- Accuracy: The overall correctness of the model’s predictions.

- Precision: The proportion of true positives among the predicted positives.

- Recall: The proportion of true positives among the actual positives.

- F1-Score: The harmonic mean of precision and recall.
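The four definitions above can be computed directly from counts of true positives, false positives, and false negatives. This minimal sketch assumes binary labels with `1` as the positive class and a hypothetical function name; libraries such as scikit-learn provide equivalent functions (`accuracy_score`, `precision_score`, `recall_score`, `f1_score`).

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when there are no predicted/actual positives.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
print(classification_metrics(y_true, y_pred))
# → {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```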

8.5. Address Overfitting

Overfitting is a common issue in transfer learning, especially when fine-tuning a large pre-trained model on a small dataset. Use regularization techniques, data augmentation, and early stopping to prevent it.

Techniques to Prevent Overfitting:

- Regularization: Apply L1 or L2 regularization to the model’s weights.

- Data Augmentation: Increase the effective size of your dataset by applying label-preserving transformations to your data (for example, flips, crops, or small rotations for images).

- Early Stopping: Monitor the model’s performance on a validation set and stop training when the performance starts to degrade.
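Two of these techniques are sketched below in plain Python. `horizontal_flip` is a hypothetical augmentation helper that treats an image as a list of pixel rows; mirroring preserves the label for many image tasks, but not for all (text recognition, for example). `EarlyStopping` is a hypothetical class that stops once validation loss has not improved for `patience` epochs; the loss values are made up for illustration.

```python
def horizontal_flip(image):
    """Mirror a 2-D image (a list of pixel rows) from left to right."""
    return [list(reversed(row)) for row in image]


class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as improvement
        self.best_loss = float("inf")
        self.best_epoch = None
        self.epochs_without_improvement = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


print(horizontal_flip([[1, 2, 3], [4, 5, 6]]))  # → [[3, 2, 1], [6, 5, 4]]

# Hypothetical validation losses: improvement stalls after epoch 3.
val_losses = [0.90, 0.70, 0.60, 0.55, 0.56, 0.58, 0.57, 0.60]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        print(f"Stopping at epoch {epoch}; best epoch was {stopper.best_epoch}")
        break
# prints: Stopping at epoch 6; best epoch was 3
```

In a real training loop you would also save the model weights at `best_epoch` and restore them after stopping.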

8.6. How LEARNS.EDU.VN Helps You Succeed

At LEARNS.EDU.VN, we provide resources and support to help you follow these best practices and succeed with transfer learning. Our offerings include:

- Comprehensive Guides: Detailed guides on selecting pre-trained models, understanding your data, and fine-tuning strategically.

- Code Templates: Ready-to-use code templates that incorporate best practices for transfer learning.

- Personalized Feedback: Opportunities to receive personalized feedback on your transfer learning projects from experienced instructors.

By leveraging these resources, you can effectively implement transfer learning and achieve your machine learning goals.

9. Challenges and Limitations of Transfer Learning

While transfer learning offers numerous benefits, it also has some challenges and limitations that you should be aware of.

9.1. Negative Transfer

Negative transfer occurs when transferring knowledge from a source task to a target task actually decreases performance. This can happen if the source and target tasks are too dissimilar, or if the pre-trained model has learned irrelevant features.

Causes of Negative Transfer:

- Task Dissimilarity: The source and target tasks are too different.

- Irrelevant Features: The pre-trained model has learned features that are not useful for the target task.

- Domain Shift: The source and target domains have different statistical properties.
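One practical way to detect negative transfer is to compare the transferred model against a baseline trained from scratch on the same target data, and to check input features for domain shift. The helper names, tolerance, and scores below are hypothetical, and the shift measure (difference in means divided by the standard deviation of the combined sample) is a rough heuristic assumed for illustration, not a formal statistical test.

```python
import statistics


def is_negative_transfer(transfer_score, scratch_score, tolerance=0.0):
    """True if the transferred model underperforms a from-scratch baseline.

    Both scores must come from the same validation set and use a
    higher-is-better metric such as accuracy or F1.
    """
    return transfer_score < scratch_score - tolerance


def feature_shift(source_values, target_values):
    """Rough domain-shift heuristic for a single feature.

    Absolute difference of the two means, divided by the standard deviation
    of the combined sample. Larger values suggest the domains differ more.
    """
    combined_sd = statistics.pstdev(source_values + target_values)
    if combined_sd == 0:
        return 0.0
    diff = statistics.mean(source_values) - statistics.mean(target_values)
    return abs(diff) / combined_sd


# Hypothetical validation accuracies on the same target task.
print(is_negative_transfer(transfer_score=0.71, scratch_score=0.78))  # → True
print(round(feature_shift([0.1, 0.2, 0.3], [0.7, 0.8, 0.9]), 2))
```

If negative transfer shows up, options include choosing a source model closer to the target task, unfreezing more layers, or training from scratch.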

9.2. Data Compatibility

Ensuring that your data is compatible with the pre-trained model can be challenging. The data may need to be preprocessed or transformed to match the input format expected by the model.

Data Compatibility Issues:

- Input Size: The input data may need to be resized or padded to match the model’s input size.

- Data Format: The data may need to be converted to a specific format (e.g., images may need to be converted to tensors).

- Data Distribution: The data may need to be normalized or standardized to match the distribution of the pre-training data.
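A minimal sketch of two of these adjustments in plain Python: padding or truncating token sequences to a fixed input length, and normalizing pixels with the statistics used during pre-training. The mean and standard deviation values are the per-channel ImageNet statistics commonly used with torchvision's ImageNet-pretrained models; if your model was pre-trained on other data, use the values documented for it. The function names and `pad_id=0` are assumptions; real pipelines typically use library transforms (such as `torchvision.transforms.Normalize`) and then convert the result to tensors.

```python
# Per-channel statistics commonly used with torchvision's ImageNet-pretrained models.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def pad_or_truncate(tokens, length, pad_id=0):
    """Force a token-ID sequence to the fixed input length a model expects."""
    return tokens[:length] + [pad_id] * max(0, length - len(tokens))


def normalize_pixel(rgb, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Scale 0-255 RGB values to [0, 1], then standardize each channel."""
    return tuple((c / 255.0 - m) / s for c, m, s in zip(rgb, mean, std))


print(pad_or_truncate([101, 2023, 102], length=5))        # → [101, 2023, 102, 0, 0]
print(pad_or_truncate([101, 2023, 2003, 102], length=2))  # → [101, 2023]
print(normalize_pixel((255, 128, 0)))
```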

9.3. Model Complexity

Pre-trained models can be complex and computationally expensive to fine-tune. This can be a limitation if you have limited computational resources or time.

Complexity Issues: