Natural Language Processing (NLP) underpins much of the recent progress in artificial intelligence (AI), from text generators that write coherent narratives to chatbots that hold seemingly human-like conversations. Text-to-image programs, which turn a written description into a photorealistic picture, are another example. Recent advances have greatly improved computers’ ability to process not only human languages but also programming languages and biological sequences such as DNA and protein structures, all of which share language-like characteristics. Current AI models can extract meaning from text input and generate meaningful, expressive output, which opens up applications across many industries. For readers who want to understand and apply this technology, resources such as those offered by machine learning by deeplearning.ai provide expert-led education in this complex domain.

Understanding Natural Language Processing (NLP)

Natural language processing (NLP) is the field dedicated to building machines that can process and interact with human language, or data that resembles human language, in its written, spoken, and structural forms. It evolved from computational linguistics, which uses computer science to study the principles of language, but NLP is an engineering discipline focused on building practical technologies rather than theoretical frameworks. Its primary goal is to create tools that accomplish useful tasks by understanding and manipulating language. NLP is broadly divided into two overlapping areas: natural language understanding (NLU), which centers on semantic analysis to determine the intended meaning of text, and natural language generation (NLG), which focuses on enabling machines to produce text. NLP is distinct from speech processing, although the two are often used together: speech recognition converts spoken language into text, and speech synthesis converts text back into speech, bridging audio and written language. For those seeking to delve deeper into NLP and related machine learning techniques, machine learning by deeplearning.ai offers comprehensive educational programs to build expertise in this area.

The Growing Importance of Natural Language Processing (NLP)

NLP’s significance in our daily lives is undeniable and continues to expand as language technology permeates diverse sectors. From enhancing customer service with intelligent chatbots in retail to revolutionizing medicine through the analysis of electronic health records, NLP is becoming indispensable. Conversational agents like Amazon’s Alexa and Apple’s Siri leverage NLP to interpret user requests and deliver relevant information. Advanced agents, such as GPT-3, now available for commercial applications, can generate sophisticated, human-quality text on a wide array of subjects and power chatbots capable of engaging in coherent and nuanced conversations. Google employs NLP to refine its search engine results, while social media platforms like Facebook utilize it to detect and filter hate speech.

While NLP has achieved remarkable sophistication, ongoing development is crucial. Current systems are not without flaws, sometimes exhibiting biases, incoherence, or unpredictable behavior. Despite these challenges, machine learning professionals have abundant opportunities to apply NLP in increasingly vital ways to support and enhance societal functions. For individuals looking to contribute to this exciting field, machine learning by deeplearning.ai provides the necessary educational foundation to tackle these challenges and drive innovation in NLP.

Applications of Natural Language Processing (NLP)

NLP is applied to a vast range of language-centric tasks, including answering questions, categorizing text, and facilitating human-computer dialogue.

Here are five key applications of NLP:

- Spam Detection: This is a common binary classification problem in NLP, aiming to categorize emails as either spam or not spam. Spam detection systems analyze email text, subject lines, and sender information to calculate the probability of an email being unsolicited. Email services like Gmail use these models to enhance user experience by filtering out unwanted emails and directing them to spam folders. Mastering classification techniques like those used in spam detection is a core skill taught in machine learning by deeplearning.ai courses.

- Grammatical Error Correction: These models are designed to identify and correct grammatical errors in text. This task is often approached as a sequence-to-sequence problem, where the model learns to transform ungrammatical input sentences into grammatically correct output. Online grammar tools like Grammarly and word processors such as Microsoft Word integrate these systems to improve writing quality. Educational institutions also utilize them for grading student essays. Understanding sequence-to-sequence models is crucial in NLP, and machine learning by deeplearning.ai provides in-depth learning paths to master these techniques.

- Topic Modeling: This unsupervised text mining technique explores a collection of documents to uncover underlying themes or topics. Input is a set of documents, and output is a list of topics, each defined by a set of words and their prevalence within documents. Latent Dirichlet Allocation (LDA), a widely used topic modeling method, conceptualizes documents as mixtures of topics and topics as distributions of words. Topic modeling has practical applications, such as assisting lawyers in evidence discovery in legal documents. Unsupervised learning methods like topic modeling are covered extensively in machine learning by deeplearning.ai programs.

- Text Generation: More formally known as natural language generation (NLG), this involves creating text that resembles human writing. These models can be adapted to generate various text formats and genres, including tweets, blog posts, and even computer code. Text generation techniques range from Markov processes and LSTMs to advanced models like BERT, GPT-2, and LaMDA. It’s particularly valuable for features like autocomplete and sophisticated chatbots. The deep learning models underpinning text generation are a key focus in machine learning by deeplearning.ai specializations.

- Information Retrieval: This task focuses on finding documents most relevant to a specific query, a core function in search and recommendation systems. The aim is not to directly answer the query but to efficiently retrieve a subset of highly relevant documents from a potentially vast collection. Modern document retrieval systems typically involve two stages: indexing and matching. Many systems use vector space models, such as Two-Tower Networks, for indexing, and similarity or distance metrics for matching. Google has recently integrated a multimodal information retrieval model into its search engine that handles text, image, and video data. Understanding information retrieval systems is crucial for building effective search technologies, and machine learning by deeplearning.ai provides the knowledge base to work in this area.
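The two retrieval stages described above, indexing and matching, can be sketched in a few lines. This is only an illustration under simplifying assumptions: plain word counts stand in for the learned vector representations a production system would use, cosine similarity is the matching metric, and the function names (`embed`, `build_index`, `retrieve`) and the sample documents are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Map text to a sparse term-count vector (a stand-in for a learned encoder)."""
    return Counter(text.lower().split())


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(u[t] * v[t] for t in u if t in v)
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def build_index(documents):
    """Indexing stage: embed every document once, ahead of query time."""
    return [(doc, embed(doc)) for doc in documents]


def retrieve(query, index, k=2):
    """Matching stage: rank indexed documents by similarity to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


documents = [
    "how to train a neural network",
    "best pasta recipes for dinner",
    "neural network training tips and tricks",
]
index = build_index(documents)
top = retrieve("neural network training", index, k=2)
print(top)
```

Note that the result is a ranked subset of documents, not an answer to the query, which matches the goal of retrieval described above.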

How Natural Language Processing (NLP) Operates

NLP models function by identifying patterns and relationships within the components of language, such as letters, words, and sentences in a text dataset. NLP architectures employ diverse methodologies for data preparation, feature extraction, and model building. Key processes include:

- Feature Extraction: Traditional machine learning methods often rely on features, which are numerical representations of documents based on their content. Features can be generated by techniques like Bag-of-Words and TF-IDF, or engineered by hand from properties such as document length, word polarity, and metadata. More recent techniques include Word2Vec, GloVe, and learning features directly during neural network training. Machine learning by deeplearning.ai courses cover these feature extraction techniques in detail, equipping learners with practical skills.

- Bag-of-Words: This method counts the frequency of each word or n-gram (sequence of n words) in a document. A Bag-of-Words model therefore represents each document in a text dataset as a vector of counts, with one entry for each word in a predefined word index.

- **TF-IDF:** Unlike Bag-of-Words, TF-IDF (Term Frequency-Inverse Document Frequency) weights words based on their importance within a document and across a corpus. It considers two factors to evaluate a word's significance:

- **Term Frequency (TF):** Measures how important a word is within a specific document.

*TF(word in a document) = (Number of occurrences of that word in document) / (Total number of words in document)*

- **Inverse Document Frequency (IDF):** Measures how unique or rare a word is across the entire corpus.

*IDF(word in a corpus) = log(Total number of documents in the corpus / Number of documents that contain the word)*

A word is deemed important if it appears frequently in a document. However, common words like "a" and "the" also appear frequently, leading to high TF scores without reflecting actual importance. IDF addresses this by assigning higher weights to rare words and lower weights to common words. The [TF-IDF](https://deeplearning.ai/the-batch/bug-squasher/) score is calculated as the product of TF and IDF.

- **Word2Vec:** [Introduced in 2013](https://deeplearning.ai/the-batch/how-to-keep-up-in-a-changing-field/), Word2Vec utilizes a shallow neural network to learn high-dimensional word embeddings from raw text. It comes in two main forms: Skip-Gram, which predicts surrounding words given a target word, and Continuous Bag-of-Words (CBOW), which predicts a target word from its context words. After training, and discarding the output layer, these models transform words into vector embeddings that capture contextual meaning, making them valuable inputs for various NLP tasks. Words appearing in similar contexts will have similar embeddings.

- **GloVe:** Similar to Word2Vec, GloVe (Global Vectors for Word Representation) also learns word embeddings, but it does so by applying matrix factorization techniques to word co-occurrence statistics across a corpus rather than by training a predictive neural network. It builds a matrix of global word-to-word co-occurrence counts and derives the word embeddings from it.
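The Bag-of-Words counts and the TF and IDF formulas given earlier in this list can be computed directly. The sketch below is a minimal illustration, assuming whitespace tokenization, the natural logarithm for IDF, and a tiny made-up corpus. The function names are hypothetical, and libraries such as scikit-learn use smoothed variants of these formulas.

```python
import math
from collections import Counter


def bag_of_words(tokens, vocabulary):
    """Count how often each vocabulary word occurs in one tokenized document."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


def term_frequency(word, tokens):
    """TF = occurrences of the word / total words in the document."""
    return tokens.count(word) / len(tokens)


def inverse_document_frequency(word, corpus):
    """IDF = log(total documents / documents containing the word)."""
    containing = sum(1 for tokens in corpus if word in tokens)
    # Assumes the word occurs in at least one document (no smoothing).
    return math.log(len(corpus) / containing)


def tf_idf(word, tokens, corpus):
    """TF-IDF is the product of the two scores."""
    return term_frequency(word, tokens) * inverse_document_frequency(word, corpus)


corpus = [
    "the cat sat on the mat".split(),
    "the dog chased the cat".split(),
    "the bird sang".split(),
]
vocabulary = sorted({word for tokens in corpus for word in tokens})

print(vocabulary)
print(bag_of_words(corpus[0], vocabulary))
print(tf_idf("the", corpus[0], corpus))  # 0.0, because "the" is in every document
print(tf_idf("mat", corpus[0], corpus))  # positive, because "mat" is rare
```

The word "the" has the highest term frequency in the first document, yet its TF-IDF score is zero because it appears in every document, which is the correction IDF is meant to provide.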

- Modeling: After preprocessing and feature extraction, the data is fed into an NLP architecture to perform specific tasks. The choice of model depends on the task at hand.

- Numerical features from methods like TF-IDF can be used with traditional models such as logistic regression, Naive Bayes, decision trees, or gradient boosting for classification tasks. For named entity recognition, hidden Markov models combined with n-grams can be effective.

- Deep neural networks often operate directly on raw text or word embeddings, minimizing the need for explicit feature engineering, although TF-IDF or Bag-of-Words features can still be used as input.

- Language Models: In essence, a language model’s objective is to predict the next word in a sequence, given preceding words. Probabilistic models using Markov assumptions are examples:

P(Wn | W1, …, Wn-1) ≈ P(Wn | Wn-1)

Deep learning is also extensively used to build language models. Deep learning models take word embeddings as input and, at each step, output a probability distribution for the next word from the vocabulary. Pre-trained language models learn language structure from large text corpora like Wikipedia and can be fine-tuned for specific tasks. For example, BERT has been adapted for tasks ranging from fact-checking to headline generation. Machine learning by deeplearning.ai courses provide a comprehensive understanding of language models, from foundational concepts to advanced implementations.
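As a concrete illustration of the Markov-style language model mentioned above, the following sketch estimates the probability of the next word from counts of adjacent word pairs (a bigram model). It assumes whitespace tokenization, no smoothing, and a tiny invented training set. The `<s>` and `</s>` boundary markers and the function names are illustrative choices, not part of any particular library.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Estimate P(next | previous) from counts of adjacent word pairs."""
    pair_counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for previous, current in zip(tokens, tokens[1:]):
            pair_counts[previous][current] += 1
    model = {}
    for previous, counts in pair_counts.items():
        total = sum(counts.values())
        model[previous] = {word: n / total for word, n in counts.items()}
    return model


def predict_next(model, previous):
    """Return the most probable next word given only the previous word."""
    candidates = model.get(previous, {})
    return max(candidates, key=candidates.get) if candidates else None


sentences = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram_model(sentences)
print(model["the"])                # distribution over words that followed "the"
print(predict_next(model, "the"))  # cat
```

A neural language model plays the same role as `model` here: it maps a context to a probability distribution over the vocabulary, but it conditions on much more than the single preceding word.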

Key Natural Language Processing (NLP) Techniques

Many NLP tasks can be addressed using a core set of general techniques, broadly categorized into traditional machine learning and deep learning methods.

Traditional Machine Learning NLP Techniques:

- Naive Bayes: This probabilistic classifier applies Bayes’ theorem:

P(label | text) = [P(label) P(text | label)] / P(text)

It predicts the label with the highest posterior probability. The “naive” assumption is that word features are conditionally independent given the label:

P(text | label) = P(word_1 | label) P(word_2 | label) … P(word_n | label)

In NLP, Naive Bayes is used for tasks like spam detection and bug finding in software code. Machine learning by deeplearning.ai offers courses that cover the theoretical foundations and practical applications of Naive Bayes and other traditional machine learning techniques.

- Decision Trees: These supervised classification models partition datasets based on features to maximize information gain at each split.

- Latent Dirichlet Allocation (LDA): Used for topic modeling, LDA views documents as mixtures of topics and topics as distributions of words. It is a statistical approach based on the intuition that topics can be described by a small set of representative words.

- Hidden Markov Models (HMMs): Markov models predict the next state based on the current state. In NLP, this can be used to predict the next word based on the preceding word. HMMs extend Markov models by introducing hidden states, which are unobserved properties of the data. In part-of-speech (POS) tagging, words are observed states, and POS tags are hidden states. HMMs use emission probabilities (probability of an observed word given a hidden POS tag) and transition probabilities (probability of transitioning between POS tags). The Viterbi algorithm is commonly used to infer the most likely sequence of hidden states (POS tags) for a given sentence.
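The Viterbi procedure described for HMMs can be sketched as follows. This is a toy illustration: the two-tag tagset and all start, transition, and emission probabilities are invented for the example rather than estimated from data, and a practical implementation would typically work with log probabilities to avoid numerical underflow on long sentences.

```python
def viterbi(words, tags, start_p, trans_p, emit_p):
    """Return the most likely tag sequence for `words` under a toy HMM."""
    # best[i][tag] = (probability of the best path ending in `tag` at step i,
    #                 tag chosen at step i - 1 on that path)
    best = [{tag: (start_p[tag] * emit_p[tag].get(words[0], 0.0), None) for tag in tags}]
    for word in words[1:]:
        column = {}
        for tag in tags:
            prob, prev = max(
                (best[-1][p][0] * trans_p[p][tag] * emit_p[tag].get(word, 0.0), p)
                for p in tags
            )
            column[tag] = (prob, prev)
        best.append(column)
    # Backtrack from the most probable final tag.
    tag = max(tags, key=lambda t: best[-1][t][0])
    path = [tag]
    for column in reversed(best[1:]):
        tag = column[tag][1]
        path.append(tag)
    return path[::-1]


# Hypothetical parameters: hidden states are POS tags, observations are words.
tags = ["NOUN", "VERB"]
start_p = {"NOUN": 0.7, "VERB": 0.3}
trans_p = {
    "NOUN": {"NOUN": 0.3, "VERB": 0.7},
    "VERB": {"NOUN": 0.6, "VERB": 0.4},
}
emit_p = {
    "NOUN": {"dogs": 0.5, "cats": 0.4, "bark": 0.1},
    "VERB": {"bark": 0.7, "chase": 0.3},
}

print(viterbi(["dogs", "bark"], tags, start_p, trans_p, emit_p))
print(viterbi(["dogs", "chase", "cats"], tags, start_p, trans_p, emit_p))
```

Although "bark" can be emitted by either tag in this toy model, the transition from NOUN to VERB makes the verb reading the more probable one after "dogs".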

Deep Learning NLP Techniques:

- Transformers: Introduced in the groundbreaking 2017 paper “Attention Is All You Need” (Vaswani et al.), transformers revolutionized NLP by replacing recurrence with self-attention mechanisms to capture global dependencies between input and output. This parallel processing of all words significantly accelerates training and inference compared to RNNs. The transformer architecture has driven advancements in NLP, leading to models like BLOOM, Jurassic-X, and Turing-NLG. Transformers have also found success in computer vision tasks, including 3D image generation. Machine learning by deeplearning.ai offers specialized courses that delve into the intricacies of transformer networks and their applications.
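To make the self-attention idea more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is a deliberately reduced illustration: it uses a single head, reuses the token vectors directly as queries, keys, and values instead of applying learned projections, and omits masking and positional encodings. The example vectors are arbitrary.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, row by row."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this query against every key in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Three token vectors. In a real transformer, Q, K, and V come from learned
# projections of the token embeddings; here they are reused unchanged.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each query's computation depends only on the full set of keys and values, not on the result for the previous position, which is why all positions can be processed in parallel rather than one step at a time as in an RNN.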

Six Influential Natural Language Processing (NLP) Models

Over time, numerous NLP models have had a significant impact on the AI community, and some have even gained mainstream attention. Chatbots and language models are among the most prominent. Here are some notable examples:

- Eliza: Developed in the mid-1960s by Joseph Weizenbaum, Eliza imitated a human conversation partner and is often cited in discussions of the Turing Test because some users attributed human-like understanding to it. It relied on pattern matching and rule-based responses, with no understanding of language context.

- Tay: Launched by Microsoft in 2016, Tay was a chatbot intended to mimic a teenager’s online communication style and to learn from interactions on Twitter. It quickly adopted offensive language from users and was deactivated shortly after launch, an early illustration of the risks of biased training data that the “Stochastic Parrots” paper later discussed.

- BERT and Muppet-Inspired Models: Many advanced NLP models are named after Muppet characters, including ELMo, BERT, Big Bird, ERNIE, Kermit, Grover, RoBERTa, and Rosita. These models excel at providing contextual embeddings and enhancing knowledge representation, crucial for advanced NLP tasks taught in machine learning by deeplearning.ai courses.

- Generative Pre-trained Transformer 3 (GPT-3): A 175-billion-parameter model based on the transformer architecture, GPT-3 can generate original, remarkably fluent prose comparable to human writing in response to prompts. Its predecessor, GPT-2, is open source. Microsoft has an exclusive license to GPT-3 from OpenAI, but others can access the model through an API. Open-source alternatives are being developed by groups like EleutherAI and Meta.

- Language Model for Dialogue Applications (LaMDA): Google’s conversational chatbot, LaMDA, is a transformer-based model trained on dialogue data rather than standard web text. It aims to provide contextually relevant and sensible conversational responses. Google engineer Blake Lemoine controversially claimed LaMDA was sentient after extended conversations about rights and personhood, even suggesting it changed his view on Asimov’s laws of robotics. This claim was widely debated and dismissed by many experts. Lemoine was later dismissed by Google.

- Mixture of Experts (MoE): Unlike most models that use a uniform set of parameters, MoE models dynamically select different parameter sets for different inputs using efficient routing algorithms to achieve enhanced performance. Switch Transformer is an MoE example focused on reducing computational and communication costs in large models.
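The routing idea behind MoE models can be illustrated with a rough sketch of top-1 routing, in which a small router scores every expert and only the best-scoring expert processes the input. Everything here is a simplifying assumption: the experts are plain functions standing in for feed-forward sub-networks, the router weights are fixed by hand rather than learned, and load balancing across experts is ignored.

```python
import math


def softmax(scores):
    """Turn router logits into gate probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top1(token, router_weights, experts):
    """Send `token` to the single expert with the highest router probability."""
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    gates = softmax(logits)
    chosen = max(range(len(experts)), key=lambda i: gates[i])
    # Only the chosen expert runs; its output is scaled by the gate value.
    output = [gates[chosen] * y for y in experts[chosen](token)]
    return chosen, output


# Hypothetical experts: simple functions standing in for feed-forward sub-networks.
experts = [
    lambda x: [2.0 * v for v in x],
    lambda x: [v + 1.0 for v in x],
]
router_weights = [[1.0, 0.0], [0.0, 1.0]]  # one row of router weights per expert

print(route_top1([3.0, 0.0], router_weights, experts)[0])  # 0
print(route_top1([0.0, 3.0], router_weights, experts)[0])  # 1
```

Because only one expert runs per input, the amount of computation per token stays roughly constant even as more experts, and therefore more parameters, are added.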

Programming Languages, Libraries, and Frameworks for Natural Language Processing (NLP)

Numerous languages and libraries support NLP development. Here are some of the most valuable.

- Python: Python is the dominant language for NLP tasks, with most deep learning libraries and frameworks designed for it. Useful Python libraries for NLP include:

- NLTK

- spaCy

- Gensim

- Scikit-learn

- Transformers (Hugging Face)

- TensorFlow

- PyTorch

Learning these tools is essential for NLP practitioners, and machine learning by deeplearning.ai courses often utilize Python and these libraries in their practical exercises.

- R: While Python is now more prevalent, many early NLP models were developed in R, and it remains popular in data science and statistics. R libraries for NLP include TidyText, Weka, Word2Vec, SpaCyR, TensorFlow, and PyTorch.

- Other languages like JavaScript, Java, and Julia also have libraries for NLP.

Controversies Surrounding Natural Language Processing (NLP)

NLP has been at the center of several controversies, ranging from model behavior to broader ethical and societal impacts.

“Nonsense on stilts”: Gary Marcus has critiqued deep learning-based NLP for generating seemingly sophisticated language that can mislead users into believing these algorithms possess genuine understanding and reasoning capabilities beyond their actual abilities. This highlights the importance of critical evaluation and understanding the limitations of current NLP technologies, a perspective encouraged by machine learning by deeplearning.ai in its educational materials.

Getting Started with Natural Language Processing (NLP)

For those beginning their NLP journey, numerous excellent educational resources are available.

- Machine Learning Specialization: A foundational set of three courses that introduces beginners to the fundamentals of learning algorithms. Prerequisites include high-school math and basic programming skills.

- Deep Learning Specialization: An intermediate set of five courses that gives learners hands-on experience building and deploying neural networks, the technology at the heart of today’s most advanced NLP and other AI models.

- Natural Language Processing Specialization: An intermediate set of four courses that covers the theory and application behind the most relevant and widely used NLP models.

To deepen your NLP knowledge, reading research papers is highly recommended. Start with papers introducing the models and techniques discussed here, many of which are available on arxiv.org. Additional helpful resources include online tutorials, blogs, and open-source projects.

A strong starting point is to implement basic algorithms (linear and logistic regression, Naive Bayes, decision trees, and basic neural networks) in Python. The next step is to adapt open-source implementations to new datasets or tasks, gaining practical experience. Machine learning by deeplearning.ai provides structured learning paths that guide you through these foundational steps and beyond, offering specializations in both machine learning and NLP.

Conclusion

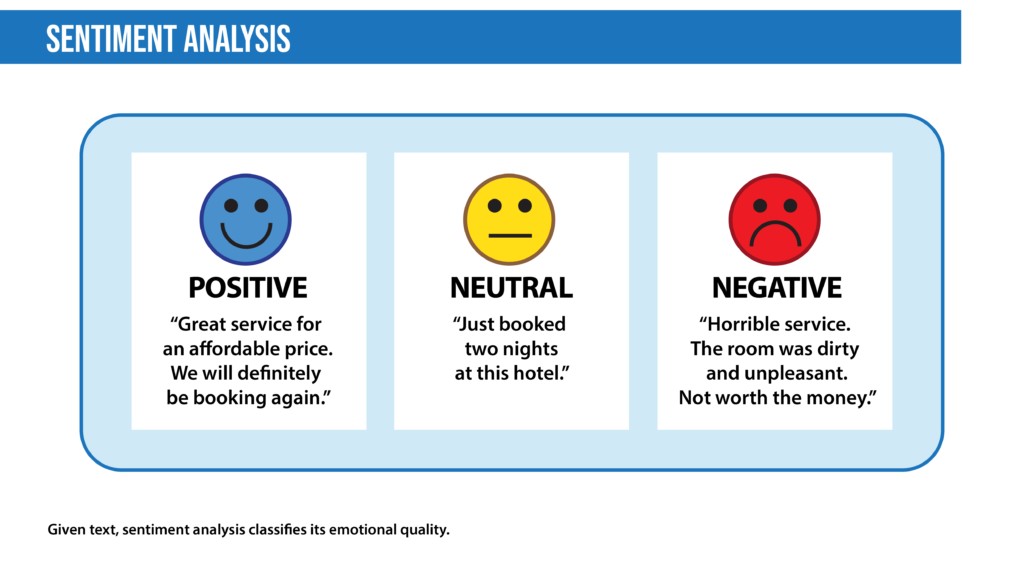

NLP is a rapidly advancing and vital domain within AI, with applications spanning translation, summarization, text generation, and sentiment analysis. Businesses are increasingly leveraging NLP for internal applications like detecting insurance fraud, assessing customer sentiment, optimizing aircraft maintenance, and for customer-facing solutions like Google Translate.

Aspiring NLP practitioners should begin by building foundational AI skills in mathematics, Python programming, and core algorithms like decision trees, Naive Bayes, and logistic regression. Online courses, especially those offered through machine learning by deeplearning.ai, are invaluable for building this foundation and progressing to specialized NLP topics. Specialization requires knowledge of neural networks, frameworks like PyTorch and TensorFlow, and data preprocessing techniques. The transformer architecture, a field-changer since 2017, is particularly important.

NLP is an exciting and impactful field with the potential for significant positive contributions. However, it is also associated with controversies related to bias and misuse. Responsible practitioners must understand these issues, recognizing that models can reflect biases from training data, and sophisticated language models can be used for disinformation. Furthermore, the environmental impact of training large models, such as greenhouse gas emissions, is a growing concern.

This overview provides an introduction to NLP. For deeper exploration, machine learning by deeplearning.ai offers courses for all levels, from AI beginners to those seeking NLP specialization. Regardless of your current expertise or goals, continuous learning is key in this dynamic field.