In the realm of machine learning, evaluating the performance of classification models is crucial. Accuracy, precision, and recall stand out as essential metrics for gauging the quality of these models. Each metric offers a distinct perspective on model performance, making the choice between them dependent on the specific application and its priorities. This guide delves into the nuances of accuracy, precision, and recall, exploring their strengths and limitations.

We will also illustrate the practical application of these metrics using the open-source Evidently Python library, a powerful tool for ML model evaluation and monitoring.

Understanding Key Classification Metrics: Accuracy, Precision, and Recall

- Accuracy: Measures the overall correctness of a classification model, indicating how frequently it makes correct predictions across all classes.

- Precision: Focuses on the accuracy of positive predictions. It answers the question: “Out of all instances predicted as positive, how many are actually positive?”.

- Recall: Highlights the model’s ability to identify all actual positive instances. It answers the question: “Out of all actual positive instances, how many did the model correctly identify?”.

- Choosing the Right Metric: The selection of the most appropriate metric hinges on factors like class balance within your dataset and the relative costs associated with different types of errors.

Start free ⟶Or try open source ⟶

Alt: Evidently Classification Performance Report showcasing accuracy, precision, and recall metrics.

Start with AI observability

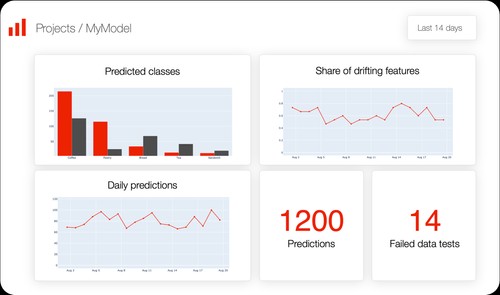

Want to keep tabs on your classification models? Automate the quality checks with Evidently Cloud. Powered by the leading open-source Evidently library with 20m+ downloads.

Start free ⟶Or try open source ⟶

A classification model’s core function is to assign predefined labels to data points. Imagine building a model to predict customer churn for a software product. This model would categorize users into “churner” or “non-churner.” To evaluate the effectiveness of such a model, we need to compare its predictions with the actual outcomes – whether users actually churned or not. This is where metrics like accuracy, precision, and recall become indispensable.

Let’s delve deeper into each of these metrics to gain a comprehensive understanding.

Accuracy: Measuring Overall Correctness

Accuracy is perhaps the most intuitive metric. It quantifies how often your machine learning model makes correct predictions overall. The calculation is straightforward: divide the number of correct predictions by the total number of predictions made.

Simply put, accuracy tells you: “How often is the model right?”.

Alt: Accuracy formula: (Number of Correct Predictions) / (Total Number of Predictions).

Accuracy is expressed as a value between 0 and 1, or as a percentage. A higher accuracy score signifies better model performance, with 1.0 (or 100%) representing perfect accuracy – every prediction is correct.

Its simplicity is a major advantage. Accuracy is easily understood and communicated, providing a general sense of how well a model classifies data points.

Accuracy in Action: A Spam Detection Example

Consider a spam detection model. For each email, the model predicts either “spam” or “not spam.” Let’s visualize the model’s predicted labels:

Alt: Visual representation of predicted labels (spam/not spam) for emails in a dataset.

Now, we compare these predictions with the actual labels (whether each email is truly spam or not). We can then visually mark correct and incorrect predictions.

Alt: Visual representation of email predictions with correct predictions marked with green ticks and incorrect predictions marked with red crosses.

By counting the correctly classified emails (green ticks) and dividing by the total number of emails, we calculate the accuracy. In this example, 52 out of 60 predictions are correct, resulting in an accuracy of approximately 87%.

Alt: Accuracy calculation for the spam detection example: 52 correct predictions / 60 total predictions = 87% accuracy.

While accuracy appears to be a straightforward measure of model quality, it can be deceptive in certain scenarios, leading to what’s known as the accuracy paradox.

The Accuracy Paradox: When High Accuracy Misleads

The accuracy paradox arises because accuracy treats all classes equally and focuses solely on overall correctness. This can be problematic when dealing with imbalanced datasets.

In the previous spam detection example, we implicitly assumed a relatively balanced dataset with a reasonable proportion of both spam and non-spam emails. In such cases, accuracy can be a meaningful metric. This is often true for scenarios like image classification (e.g., “cat or dog”) where classes are generally balanced.

However, many real-world classification problems exhibit class imbalance, where one class significantly outweighs the other. Examples include fraud detection, predicting equipment failures, customer churn prediction, and identifying rare diseases in medical imaging. In these situations, the events we are most interested in predicting are often rare, occurring in only a small percentage of cases (e.g., 1-10% or even less).

Let’s revisit the spam detection scenario, but this time with a highly imbalanced dataset. Imagine that only 3 out of 60 emails (5%) are actually spam.

Alt: Example of an imbalanced dataset in spam detection: only 3 spam emails out of 60.

In this imbalanced scenario, it’s easy to achieve high accuracy simply by predicting the majority class – “not spam” – for every email.

Alt: Illustration of always predicting “not spam” in an imbalanced dataset.

If we always predict “not spam,” we’d be correct for the 57 non-spam emails, achieving an accuracy of 95% (57/60).

While the accuracy is high, this model is completely useless for spam detection. It fails to identify any spam emails and defeats the purpose of the classification task. This is the essence of the accuracy paradox: high accuracy can mask poor performance, particularly on the minority class of interest.

Advantages and Disadvantages of Accuracy

Let’s summarize the pros and cons of using accuracy as a metric.

Pros:

- Simplicity and Interpretability: Accuracy is easy to calculate, understand, and explain.

- Effectiveness with Balanced Classes: It’s a useful metric when classes are balanced and the overall correctness of the model is the primary concern.

Cons:

- Misleading with Imbalanced Classes: Accuracy can be highly misleading in imbalanced datasets, providing an inflated view of performance while failing to capture performance on the minority class.

- Ignores Class-Specific Performance: It doesn’t differentiate between the model’s ability to predict different classes, treating all errors equally.

When dealing with imbalanced datasets, or when the focus is on accurately predicting a specific class (like spam in our example), accuracy alone is insufficient. We need metrics that are sensitive to the performance on the class of interest. This is where precision and recall come into play. They provide a more granular view of model performance by focusing on specific types of correct and incorrect predictions.

Before diving into precision and recall, let’s briefly introduce the confusion matrix, a fundamental tool for understanding classification model performance and the basis for calculating these metrics.

Accuracy, precision, and recall for multi-class classification: While this article focuses on binary classification, these metrics can be extended to multi-class problems. Further information on multi-class classification metrics is available in a separate article.

Confusion Matrix: Decomposing Classification Outcomes

In binary classification, we have two classes, often labeled as “positive” (1) and “negative” (0). In our spam example, “spam” is the positive class, and “not spam” is the negative class.

When evaluating a classification model, we can categorize predictions into four outcomes based on whether the prediction and the actual label are positive or negative. These outcomes are neatly summarized in a confusion matrix.

Correct Predictions:

- True Positive (TP): The model correctly predicts the positive class. In spam detection, this is correctly classifying a spam email as “spam.”

- True Negative (TN): The model correctly predicts the negative class. In spam detection, this is correctly classifying a non-spam email as “not spam.”

Incorrect Predictions (Errors):

- False Positive (FP): The model incorrectly predicts the positive class when the actual class is negative. In spam detection, this is incorrectly classifying a non-spam email as “spam” (a “false alarm”).

- False Negative (FN): The model incorrectly predicts the negative class when the actual class is positive. In spam detection, this is incorrectly classifying a spam email as “not spam” (a “missed spam”).

The confusion matrix is a table that visually organizes these four outcomes:

Alt: Example confusion matrix showing True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN).

For a more in-depth exploration of the confusion matrix, refer to our detailed guide on Confusion Matrix.

Using the confusion matrix, we can refine our understanding of accuracy. Accuracy is calculated as the sum of correct predictions (TP + TN) divided by the total number of predictions (TP + TN + FP + FN).

Alt: Accuracy formula based on confusion matrix components: (TP + TN) / (TP + TN + FP + FN).

As we observed with the naive “always predict not spam” model, high accuracy can be achieved by maximizing True Negatives (TN) in imbalanced datasets, while completely missing True Positives (TP). Accuracy, therefore, overlooks the specific types of errors a model makes, focusing only on overall correctness.

To specifically evaluate a model’s ability to correctly identify positive instances, we turn to precision and recall.

Precision: The Accuracy of Positive Predictions

Precision measures the accuracy of positive predictions made by the model. It answers the question: “When the model predicts the positive class, how often is it correct?”. Precision is calculated by dividing the number of true positives (TP) by the total number of positive predictions (TP + FP).

In essence, precision answers: “Of all predicted positives, how many are actually positive?”.

Alt: Precision formula: (True Positives) / (True Positives + False Positives).

Precision, like accuracy, ranges from 0 to 1 (or 0% to 100%). Higher precision indicates a model that is more reliable when it predicts the positive class, minimizing false positive errors. A perfect precision of 1.0 means that every positive prediction made by the model is actually a true positive.

Precision Illustrated: Spam Detection Revisited

Let’s revisit our imbalanced spam detection scenario, where only 5% of emails are spam.

Here’s a reminder of the actual distribution of spam and non-spam emails:

Alt: Visual representation of the imbalanced spam dataset (5% spam).

Let’s again consider the naive model that always predicts “not spam.”

Alt: Illustration of the “always predict not spam” model.

We know that accuracy is a misleadingly high 95%. However, let’s calculate precision. To do this, we divide the number of correctly predicted spam emails (true positives) by the total number of emails predicted as spam (true positives + false positives). Since our naive model never predicts “spam,” both true positives and false positives are zero.

In this case, precision is undefined (division by zero). However, we can conceptually consider it to be 0, as there are no positive predictions and hence no correct positive predictions. This starkly contrasts with the high accuracy and highlights precision’s ability to expose the inadequacy of this naive model in identifying spam.

Now, let’s consider a more realistic, trained ML model that actually identifies some emails as spam. Comparing its predictions to the true labels, we get the following results:

Alt: Visual representation of a trained model’s predictions in the spam detection example, showing true positives, false positives, true negatives, and false negatives.

Let’s re-evaluate the model’s quality. Accuracy remains at 95%, identical to the naive model. This reinforces that accuracy is not a discriminatory metric in this imbalanced scenario.

Calculating precision, we divide the number of correctly predicted spam emails (3 – true positives) by the total number of emails predicted as spam (6 – true positives + false positives).

Alt: Precision calculation for the trained spam detection model: 3 true positives / (3 true positives + 3 false positives) = 50% precision.

The precision is 50%. This means that when the model flags an email as spam, it is correct only half the time. The other half are false alarms (false positives). Precision provides a more realistic assessment of the model’s performance in identifying spam compared to accuracy.

Pros and Cons of Precision

When is precision the right metric to prioritize?

Advantages of Precision:

- Effective for Imbalanced Datasets: Precision is robust in imbalanced datasets, accurately reflecting the model’s performance in predicting the positive class.

- High Cost of False Positives: Precision is crucial when the cost of a false positive is high. In such scenarios, minimizing false positives is paramount, even if it means missing some positive instances.

Consider the spam detection example. If the primary concern is to avoid incorrectly classifying legitimate emails as spam (false positives), then precision becomes a critical metric. False positives can lead to users missing important emails, a potentially high-cost error. Prioritizing precision aims to minimize these errors, ensuring that when an email is classified as spam, there’s a high likelihood it actually is.

However, precision has limitations.

Disadvantage of Precision:

- Ignores False Negatives: Precision only considers false positives and completely disregards false negatives – cases where the model fails to identify actual positive instances.

In the spam detection context, a high-precision model might minimize false positives but could also miss many spam emails (false negatives). To address this limitation, we need another metric that complements precision by focusing on the detection of all actual positive instances: recall.

Recall: Capturing All Positive Instances

Recall, also known as sensitivity or the true positive rate, measures the model’s ability to identify all actual positive instances within the dataset. It answers the question: “Out of all actual positive instances, how many did the model correctly identify?”. Recall is calculated by dividing the number of true positives (TP) by the total number of actual positive instances (TP + FN).

In simpler terms, recall answers: “Can the model find all instances of the positive class?”.

Alt: Recall formula: (True Positives) / (True Positives + False Negatives).

Recall is also expressed between 0 and 1 (or 0% and 100%). Higher recall indicates a model that is better at identifying all positive instances, minimizing false negative errors. A perfect recall of 1.0 signifies that the model correctly identifies every single positive instance in the dataset.

The term “sensitivity” is often used in medical contexts, referring to a test’s ability to correctly identify individuals with a disease (true positives). In machine learning, “recall” is the more prevalent term, but the concept is identical.

Recall in Practice: Spam Detection Once More

Let’s continue with our spam detection example. We’ve already calculated accuracy (95%) and precision (50%) for our trained model. Now, let’s calculate recall.

Using the same prediction results:

Alt: Visual representation of model predictions for recall calculation.

To calculate recall, we divide the number of correctly identified spam emails (true positives – 3) by the total number of actual spam emails in the dataset (true positives + false negatives – 3 + 0 = 3).

Alt: Recall calculation for the trained spam detection model: 3 true positives / (3 true positives + 0 false negatives) = 100% recall.

The recall is 100%. This means the model successfully identified all spam emails present in the dataset. There are no false negatives – no spam emails were missed.

Therefore, our example model has 95% accuracy, 50% precision, and 100% recall. The interpretation of these metrics depends heavily on the specific goals of the spam detection system.

Advantages and Disadvantages of Recall

When is recall the primary metric to optimize for?

Advantages of Recall:

- Effective for Imbalanced Datasets: Like precision, recall is valuable for imbalanced datasets, focusing on the model’s ability to find instances of the minority class.

- High Cost of False Negatives: Recall is crucial when the cost of false negatives is high. In these scenarios, it’s critical to identify as many positive instances as possible, even if it leads to some false positives.

Consider medical diagnosis, security screening, or fraud detection. In these applications, failing to identify a positive case (false negative) can have severe consequences – missing a disease, overlooking a threat, or failing to detect fraudulent activity. Prioritizing recall minimizes these high-cost false negatives, even if it increases false positives.

However, recall also has a downside.

Disadvantage of Recall:

- Ignores False Positives: Recall, in isolation, doesn’t consider the number of false positives. A model can achieve perfect recall by simply predicting the positive class for every instance, but this would likely result in a high number of false positives and low precision, rendering the model practically useless.

Imagine a spam filter that flags every email as spam. It would achieve 100% recall (no spam emails would be missed), but the overwhelming number of false positives would make it unusable.

Therefore, neither precision nor recall alone provides a complete picture of model performance. Often, a balance between precision and recall is desired, and the optimal balance depends on the specific application and the relative costs of false positives and false negatives.

Want a more detailed example of evaluating classification models? Explore our code tutorial that compares two classification models with seemingly similar accuracy but different precision and recall characteristics: What is your model hiding?.

Practical Considerations: Choosing Between Precision and Recall

Accuracy, precision, and recall are all vital metrics for evaluating classification models. Since no single metric is universally “best,” it’s crucial to consider them together or select the most relevant metric based on the specific context.

Here are key practical considerations for choosing between precision and recall:

1. Class Balance: Always be mindful of class distribution. In imbalanced datasets, accuracy can be misleading. Precision and recall offer a more nuanced view of performance, particularly for the minority class. Visualizing class distribution, as shown in the Evidently library example below, helps in understanding the context of metric evaluation.

Alt: Class representation chart from Evidently Python library showing class imbalance.

Example class representation plot generated using Evidently Python library.

2. Cost of Errors: The most critical factor is understanding the costs associated with false positives and false negatives in your specific application.

Prioritize Precision when: The cost of false positives is high. You want to be highly confident when predicting the positive class, even if you miss some positive instances. Examples: spam detection (avoiding misclassifying important emails), medical diagnosis (avoiding unnecessary treatments).

Prioritize Recall when: The cost of false negatives is high. You need to identify as many positive instances as possible, even if it means accepting more false positives. Examples: fraud detection (catching all fraudulent transactions), disease detection (identifying all cases of a disease), security screening (detecting all threats).

Quantifying or estimating the costs of these errors is essential for making informed decisions about metric prioritization. For example, in churn prediction, a false negative (missing a potential churner) costs lost revenue, while a false positive (incorrectly identifying a non-churner as a churner) costs marketing incentives.

3. F1-Score: If you want to balance precision and recall equally, the F1-score is a useful metric. The F1-score is the harmonic mean of precision and recall, providing a single metric that considers both false positives and false negatives.

4. Decision Threshold: For probabilistic classification models, you can adjust the decision threshold to fine-tune the balance between precision and recall.

Alt: Visual representation of decision threshold in classification.

Increasing the decision threshold generally increases precision (fewer false positives) and decreases recall (more false negatives). Conversely, decreasing the threshold increases recall (fewer false negatives) and decreases precision (more false positives). Choosing the appropriate decision threshold allows you to tailor the model’s behavior to your specific needs.

Learn more about setting optimal decision thresholds in our dedicated guide.

Ultimately, the choice between prioritizing precision or recall, or finding a balance between them, depends on a careful consideration of the application’s specific requirements and the trade-offs between different types of errors.

Want a real-world example with data and code? Explore our tutorial on employee churn prediction: “What is your model hiding?”. This tutorial demonstrates how to evaluate and compare different classification models based on accuracy, precision, and recall.

Monitoring Accuracy, Precision, and Recall in Production

Alt: Example metrics summary from Evidently Python library showing accuracy, precision, and recall for model monitoring.

Example metrics summary generated using Evidently Python library.

Accuracy, precision, and recall are not only useful for initial model evaluation but also for monitoring model performance in production. As numerical metrics, they can be tracked over time to detect performance degradation. However, production monitoring requires access to true labels, which may not always be immediately available.

In some applications, like e-commerce personalization, feedback is rapid. User interactions (clicks, purchases) provide near real-time labels, allowing for quick calculation of metrics. In other cases, obtaining true labels can be delayed (days, weeks, or months). In such scenarios, retrospective metric calculation is possible, and proxy metrics like data drift can be used to proactively detect potential performance issues.

Furthermore, calculating metrics for specific segments of data is often beneficial. For example, in churn prediction, segmenting customers by demographics or usage patterns and monitoring precision and recall within each segment can reveal performance disparities and highlight areas for model improvement. Focusing solely on overall metrics can mask critical performance issues within important segments.

Calculating Accuracy, Precision, and Recall in Python with Evidently

The Evidently Python library provides a user-friendly way to calculate and visualize accuracy, precision, recall, and other classification metrics. To use Evidently, you need a dataset containing model predictions and true labels. Evidently generates interactive reports with confusion matrices, metric summaries, ROC curves, and other visualizations, facilitating comprehensive model evaluation. These checks can also be integrated into production pipelines for automated monitoring.

Alt: Accuracy, precision, and recall calculation in Python using Evidently library.

Evidently offers a wide range of Reports and Test Suites for data and model quality assessment. Explore Evidently on GitHub and get started with the Getting Started Tutorial.

[fs-toc-omit]Get started with AI observability

Explore our open-source library with over 25 million downloads, or sign up for Evidently Cloud to leverage no-code checks and foster team collaboration on AI quality.Sign up free ⟶Or try open source ⟶