In regression analysis, Mean Squared Error (MSE) is a fundamental metric for quantifying the discrepancy between predicted and actual values. When working with scikit-learn, understanding not only MSE but also its gradient helps you reason about how models are optimized and how their predictions should be judged. This article covers the gradient of the mean squared error in scikit-learn: its significance, its calculation, and where it appears within the scikit-learn ecosystem.

I. Understanding Mean Squared Error: The Foundation

Before we explore the gradient, let’s solidify our understanding of Mean Squared Error itself. As the scikit-learn documentation notes, MSE is a strictly consistent scoring function for the ‘mean’ functional in regression tasks. In other words, the expected MSE is minimized by predicting the (conditional) mean of the target, so MSE is the natural score when the mean is the quantity your model is meant to estimate.

Mathematically, for a set of \(n_\text{samples}\) samples, MSE is calculated as:

\[\text{MSE}(y, \hat{y}) = \frac{1}{n_\text{samples}} \sum_{i=0}^{n_\text{samples} - 1} (y_i - \hat{y}_i)^2\]

Where:

- \(y_i\) represents the true value of the i-th sample.

- \(\hat{y}_i\) is the predicted value for the i-th sample.

MSE provides a single numerical value representing the average squared difference between predictions and actual values. A lower MSE indicates a better model fit, as it signifies smaller prediction errors. Scikit-learn offers the mean_squared_error function to readily compute this metric, as demonstrated in the original documentation:

```python
from sklearn.metrics import mean_squared_error

y_true = [3, -0.5, 2, 7]
y_pred = [2.5, 0.0, 2, 8]

mse = mean_squared_error(y_true, y_pred)
print(f"Mean Squared Error: {mse}")  # Output: Mean Squared Error: 0.375
```
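To connect the formula to the function call, the same number can be reproduced by hand. This is a minimal sketch using NumPy; the variable names `squared_errors` and `mse_manual` are illustrative only.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

y_true = np.array([3, -0.5, 2, 7])
y_pred = np.array([2.5, 0.0, 2, 8])

# Apply the definition directly: average of the squared residuals.
squared_errors = (y_true - y_pred) ** 2
mse_manual = squared_errors.sum() / len(y_true)

# Compare against scikit-learn's implementation.
mse_sklearn = mean_squared_error(y_true, y_pred)
print(mse_manual, mse_sklearn)
```

Both values should agree up to floating-point rounding.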

II. The Gradient of Mean Squared Error: Guiding Model Optimization

While MSE quantifies the error, its gradient is the compass that guides optimization algorithms towards minimizing this error. In the context of machine learning models, particularly those trained using gradient-based methods, the gradient of the loss function (in this case, MSE) is paramount.

The gradient of MSE with respect to the model’s parameters (let’s denote them as \(\theta\)) indicates the direction of the steepest increase in MSE. Conversely, the negative gradient points in the direction of the steepest decrease in MSE. Optimization algorithms like Gradient Descent leverage this negative gradient to iteratively adjust model parameters, aiming to find the parameters that yield the minimum MSE.

Let’s consider a simple linear regression model. The prediction \(\hat{y}_i\) can be expressed as \(\hat{y}_i = \theta_0 + \theta_1 x_i\), where \(\theta_0\) and \(\theta_1\) are the model parameters (intercept and coefficient, respectively) and \(x_i\) is the feature value for the i-th sample.

To find the gradient of MSE with respect to \(\theta_0\) and \(\theta_1\), we need to compute the partial derivatives:

- Partial derivative with respect to \(\theta_0\) (intercept):

\[\frac{\partial \text{MSE}}{\partial \theta_0} = \frac{1}{n_\text{samples}} \sum_{i=0}^{n_\text{samples} - 1} 2(y_i - \hat{y}_i)(-1) = -\frac{2}{n_\text{samples}} \sum_{i=0}^{n_\text{samples} - 1} (y_i - \hat{y}_i)\]

- Partial derivative with respect to \(\theta_1\) (coefficient):

\[\frac{\partial \text{MSE}}{\partial \theta_1} = \frac{1}{n_\text{samples}} \sum_{i=0}^{n_\text{samples} - 1} 2(y_i - \hat{y}_i)(-x_i) = -\frac{2}{n_\text{samples}} \sum_{i=0}^{n_\text{samples} - 1} x_i(y_i - \hat{y}_i)\]
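The two partial derivatives translate almost line for line into NumPy. The sketch below is illustrative rather than anything scikit-learn exposes: the helper names `mse` and `mse_gradient` and the synthetic data are assumptions. It checks the analytic gradient against a central finite-difference approximation.

```python
import numpy as np

def mse(theta0, theta1, x, y):
    """MSE of the linear model y_hat = theta0 + theta1 * x."""
    y_hat = theta0 + theta1 * x
    return np.mean((y - y_hat) ** 2)

def mse_gradient(theta0, theta1, x, y):
    """Analytic gradient [dMSE/dtheta0, dMSE/dtheta1] from the formulas above."""
    residual = y - (theta0 + theta1 * x)
    d_theta0 = -2.0 * np.mean(residual)
    d_theta1 = -2.0 * np.mean(x * residual)
    return np.array([d_theta0, d_theta1])

# Synthetic data (assumed for illustration only).
rng = np.random.default_rng(0)
x = rng.normal(size=50)
y = 1.5 + 3.0 * x + rng.normal(scale=0.5, size=50)

theta0, theta1 = 0.2, -0.7
analytic = mse_gradient(theta0, theta1, x, y)

# Central finite differences as an independent check.
eps = 1e-6
numeric = np.array([
    (mse(theta0 + eps, theta1, x, y) - mse(theta0 - eps, theta1, x, y)) / (2 * eps),
    (mse(theta0, theta1 + eps, x, y) - mse(theta0, theta1 - eps, x, y)) / (2 * eps),
])
print(analytic, numeric)
```

A finite-difference check like this is a cheap way to catch sign or indexing mistakes when deriving gradients by hand.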

These partial derivatives form the gradient vector of the MSE function. Gradient Descent algorithms utilize these gradients in an iterative process:

1. Initialize parameters \(\theta\) randomly.

2. Compute the gradients \(\frac{\partial \text{MSE}}{\partial \theta}\) using the current parameters and training data.

3. Update parameters in the opposite direction of the gradient (to minimize MSE):

\[\theta_{new} = \theta_{old} - \eta \frac{\partial \text{MSE}}{\partial \theta}\]

where \(\eta\) is the learning rate, controlling the step size in the gradient direction.

4. Repeat steps 2 and 3 until convergence (MSE reaches a minimum or changes minimally).
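The iterative procedure above can be sketched in a few lines of NumPy. This is a plain batch gradient descent written for illustration, not scikit-learn's internal implementation. The learning rate `eta`, the tolerance `tol`, and the synthetic data are assumptions chosen so the loop converges quickly.

```python
import numpy as np

# Synthetic data (assumed): true intercept 4.0, true slope 2.5.
rng = np.random.default_rng(42)
x = rng.normal(size=200)
y = 4.0 + 2.5 * x + rng.normal(scale=0.3, size=200)

theta = rng.normal(size=2)  # Step 1: random initialization of [theta_0, theta_1]
eta = 0.1                   # learning rate (assumed value)
tol = 1e-10                 # stop when MSE changes by less than this
prev_mse = np.inf

for iteration in range(10_000):
    residual = y - (theta[0] + theta[1] * x)
    current_mse = np.mean(residual ** 2)
    if abs(prev_mse - current_mse) < tol:  # Step 4: convergence check
        break
    prev_mse = current_mse
    # Step 2: gradient of MSE with respect to theta_0 and theta_1.
    grad = np.array([-2.0 * np.mean(residual), -2.0 * np.mean(x * residual)])
    # Step 3: move against the gradient.
    theta = theta - eta * grad

# Reference solution from a least-squares fit, reordered to [intercept, slope].
closed_form = np.polyfit(x, y, deg=1)[::-1]
print(theta, closed_form)
```

The gradient descent estimate should land very close to the least-squares reference. A learning rate that is too large would make the loop diverge instead.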

III. Gradient Descent and Scikit-learn: Implicit Optimization

Several scikit-learn estimators minimize a loss with gradient-based methods under the hood: SGDRegressor in linear_model uses Stochastic Gradient Descent (SGD), MLPRegressor in neural_network is trained with gradient-based solvers, and the gradient boosting estimators in ensemble fit each new tree using gradients of the loss. While you might not explicitly see the gradient calculations in your scikit-learn code, they are happening behind the scenes.

Not every estimator works this way. LinearRegression uses Ordinary Least Squares (OLS), which has a closed-form solution, although conceptually OLS minimizes the sum of squared errors, which is the same objective as MSE up to a constant factor. Ridge also has a closed-form solution (scikit-learn additionally offers iterative solvers for it), while Lasso has none and is fitted iteratively by coordinate descent. For models without a closed-form solution, iterative optimization becomes essential, and scikit-learn handles it for you.
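The link between OLS and the MSE gradient can be checked numerically: at the parameters that LinearRegression returns, both partial derivatives of MSE should be zero up to rounding. The sketch below assumes the single-feature linear model from Section II; `grad_intercept` and `grad_coef` are illustrative names.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression

X, y = make_regression(n_samples=100, n_features=1, noise=20, random_state=42)
model = LinearRegression().fit(X, y)

# Evaluate the MSE gradient formulas at the fitted parameters.
residual = y - model.predict(X)
grad_intercept = -2.0 * np.mean(residual)
grad_coef = -2.0 * np.mean(X[:, 0] * residual)
print(grad_intercept, grad_coef)
```

A vanishing gradient is exactly the condition gradient descent searches for iteratively. OLS reaches it in one step by solving the equations directly.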

Let’s illustrate with SGDRegressor, which explicitly uses Stochastic Gradient Descent:

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import SGDRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=100, n_features=1, noise=20, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

sgd_reg = SGDRegressor(
    loss='squared_error',  # Loss function is MSE
    random_state=42,
    max_iter=1000,
    learning_rate='invscaling',
    eta0=0.01,  # Initial learning rate
)
sgd_reg.fit(X_train, y_train)

y_pred = sgd_reg.predict(X_test)
mse = mean_squared_error(y_test, y_pred)
print(f"SGD Regressor MSE: {mse}")
```

In this example, we explicitly set loss='squared_error' in SGDRegressor, indicating that MSE is the loss function to be minimized. Scikit-learn then internally calculates the gradient of MSE and uses SGD to update the model’s coefficients to reduce this loss.

Similarly, models like GradientBoostingRegressor also rely on gradients, although in a more complex boosting framework. They iteratively build trees, and each tree aims to correct the errors of the previous trees by fitting to the negative gradient of the loss function with respect to the current predictions (which, when using ‘squared_error’ loss, is proportional to the residuals).
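A single boosting step can be written by hand to make the role of the gradient concrete. The sketch below is a simplified illustration, not GradientBoostingRegressor's actual code. It assumes the per-sample loss \(\frac{1}{2}(y - F)^2\), whose negative gradient with respect to the prediction \(F\) is the residual \(y - F\). The tree depth and `learning_rate` are arbitrary choices.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.metrics import mean_squared_error
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=200, n_features=1, noise=20, random_state=0)

# Start from a constant model: the mean minimizes squared error among constants.
prediction = np.full_like(y, y.mean())
mse_before = mean_squared_error(y, prediction)

# Negative gradient of 0.5 * (y - F)^2 with respect to F is the residual.
negative_gradient = y - prediction

# Fit a small tree to the negative gradient and take a damped step.
tree = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, negative_gradient)
learning_rate = 0.1
prediction = prediction + learning_rate * tree.predict(X)
mse_after = mean_squared_error(y, prediction)

print(mse_before, mse_after)
```

Repeating this step many times, each time recomputing the residuals, is the core idea behind gradient boosting with squared-error loss.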

IV. Scikit-learn’s mean_squared_error Metric for Evaluation vs. Optimization

It’s important to distinguish between using mean_squared_error as:

-

A Loss Function (during model training): As demonstrated with

SGDRegressorand conceptually with other gradient-based models, MSE can be the function that the optimization algorithm strives to minimize. The gradient of MSE is the key to this optimization process. -

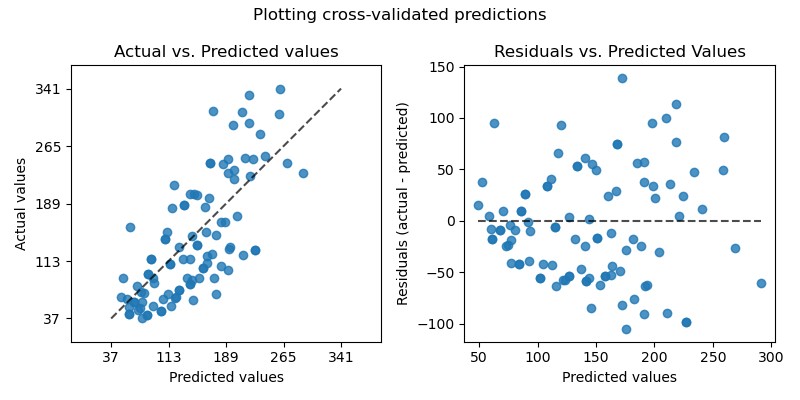

An Evaluation Metric (after model training): As shown in the initial example and throughout the original documentation,

mean_squared_errorfromsklearn.metricsis used to evaluate the performance of a trained model on a separate dataset (like a test set or during cross-validation). In this context, we are simply calculating the MSE to understand how well the model generalizes to unseen data. We are not using its gradient here.

The mean_squared_error function in sklearn.metrics is purely for evaluation. It takes true and predicted values as input and returns the MSE score. It does not calculate or utilize the gradient.
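For the evaluation side, cross-validation is a common setting. The sketch below uses `cross_val_score` with the `"neg_mean_squared_error"` scorer. Scikit-learn scorers follow a higher-is-better convention, so the returned values are negated MSEs and need their sign flipped. The dataset and the choice of LinearRegression are assumptions for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score

X, y = make_regression(n_samples=100, n_features=1, noise=20, random_state=42)

# Each fold returns the negated MSE on its held-out part.
scores = cross_val_score(
    LinearRegression(), X, y, cv=5, scoring="neg_mean_squared_error"
)
mse_per_fold = -scores  # flip the sign to recover ordinary MSE values

print(mse_per_fold)
print(f"Mean cross-validated MSE: {mse_per_fold.mean():.2f}")
```

No gradient is involved at this stage: the scorer only compares predictions with held-out targets.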

V. Advantages and Considerations of Mean Squared Error

Advantages:

- Differentiable: The MSE function is differentiable, which is essential for gradient-based optimization algorithms. The existence of a gradient allows for efficient iterative minimization.

- Convex (for linear models): For linear regression models, the MSE loss function is convex, so every local minimum is a global minimum. Gradient descent with a suitably small learning rate therefore converges to optimal parameters instead of getting stuck in a poor local minimum.

- Widely Understood and Interpreted: MSE is a commonly used and well-understood metric in statistics and machine learning, making it easy to interpret and compare model performance.

- Penalizes Large Errors Quadratically: Squaring the errors gives more weight to larger errors. This can be desirable in scenarios where large errors are significantly more costly than small errors.
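The convexity claim can be made concrete for the linear model from Section II. Its MSE is a quadratic function of \((\theta_0, \theta_1)\) with constant Hessian \(\frac{2}{n_\text{samples}} A^\top A\), where \(A\) is the design matrix with a column of ones. The sketch below, on assumed synthetic data, checks that this Hessian has no negative eigenvalues. It also derives the standard step-size bound for gradient descent on a quadratic, \(\eta < 2 / \lambda_{\max}\).

```python
import numpy as np

# Synthetic feature values (assumed for illustration).
rng = np.random.default_rng(1)
x = rng.normal(size=100)

# Design matrix: a column of ones for theta_0 and the feature for theta_1.
A = np.column_stack([np.ones_like(x), x])

# Hessian of MSE with respect to (theta_0, theta_1); it does not depend on theta or y.
hessian = 2.0 / len(x) * A.T @ A
eigenvalues = np.linalg.eigvalsh(hessian)
print("Hessian eigenvalues:", eigenvalues)

# For a quadratic objective, a constant learning rate below 2 / lambda_max keeps
# plain gradient descent from diverging.
max_stable_eta = 2.0 / eigenvalues.max()
print("Largest stable learning rate (approx.):", max_stable_eta)
```

Non-negative eigenvalues confirm convexity. The bound explains why an overly large learning rate makes MSE grow instead of shrink.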

Considerations and Limitations:

- Sensitivity to Outliers: Due to the squared term, MSE is highly sensitive to outliers. A few extreme outliers can disproportionately inflate the MSE value, potentially skewing model optimization and evaluation. Metrics like Mean Absolute Error (MAE) are more robust to outliers.

- Assumes Homoscedasticity: MSE weights every sample’s squared error equally, which implicitly corresponds to homoscedasticity, meaning the variance of errors is constant across the range of predictions. If heteroscedasticity is present (error variance changes with predictions), MSE might not be the most appropriate loss function, and models optimized with MSE might perform sub-optimally in certain prediction ranges.

- Not on Original Scale: MSE is in squared units of the target variable, which can make it less interpretable on the original scale. Root Mean Squared Error (RMSE) addresses this by taking the square root of MSE, bringing the error metric back to the original unit scale.
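The outlier and unit-scale points are easy to see on the small example from Section I. The sketch below replaces one prediction with a badly wrong value (an assumption for illustration) and compares how MSE, MAE, and RMSE react. RMSE is computed as the square root of MSE with NumPy.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error

y_true = np.array([3.0, -0.5, 2.0, 7.0])
y_pred = np.array([2.5, 0.0, 2.0, 8.0])

# Same predictions, except the last one is now off by 20 instead of 1.
y_pred_outlier = y_pred.copy()
y_pred_outlier[-1] = 27.0

mse_clean = mean_squared_error(y_true, y_pred)
mae_clean = mean_absolute_error(y_true, y_pred)
mse_outlier = mean_squared_error(y_true, y_pred_outlier)
mae_outlier = mean_absolute_error(y_true, y_pred_outlier)

# RMSE brings the error back to the units of the target.
rmse_clean = np.sqrt(mse_clean)
rmse_outlier = np.sqrt(mse_outlier)

print(f"clean:        MSE={mse_clean:.3f}  MAE={mae_clean:.3f}  RMSE={rmse_clean:.3f}")
print(f"with outlier: MSE={mse_outlier:.3f}  MAE={mae_outlier:.3f}  RMSE={rmse_outlier:.3f}")
```

A single large error multiplies MSE far more than it multiplies MAE, which is the quadratic penalty at work.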

VI. Conclusion

Understanding the gradient of the mean squared error in scikit-learn involves grasping both the MSE metric itself and its crucial role in model optimization. Scikit-learn uses the gradient of MSE (and gradients of other loss functions) within several of its algorithms to train regression models effectively. While you use functions like mean_squared_error for evaluating model performance, the underlying gradient calculations drive the learning process. By appreciating this interplay, you can better select appropriate models, tune hyperparameters, and interpret evaluation metrics to build robust and accurate regression models with scikit-learn.

This article serves as a starting point. Further exploration of gradient descent variants (SGD, Adam, etc.), different loss functions available in scikit-learn, and the nuances of model evaluation will deepen your understanding of regression modeling and the power of scikit-learn.