Introduction to Supervised Learning

Machine learning is a field of computer science in which computers learn from data rather than from explicitly programmed rules. Two of its primary branches are supervised learning and unsupervised learning. This article covers supervised learning, a fundamental technique behind many of the applications we encounter daily.

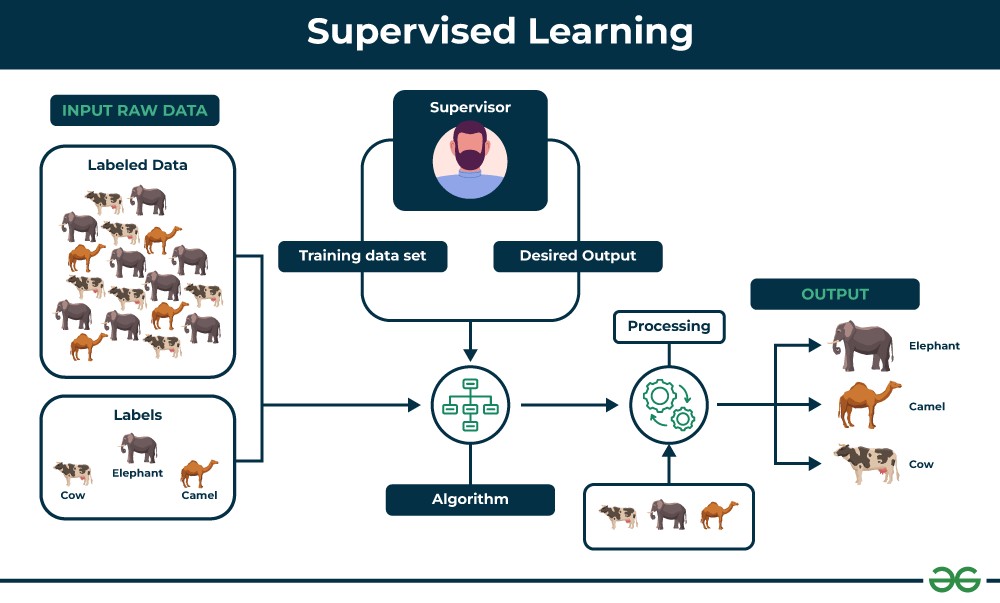

Supervised learning is a type of machine learning where algorithms learn from a labeled dataset. Imagine teaching a child to identify different types of fruits. You would show them examples of apples, bananas, and oranges, clearly labeling each one. Supervised learning operates on the same principle. It’s about training a model using data that is already tagged with the correct answers. This allows the model to learn the relationships between input features and output labels, enabling it to predict outcomes for new, unseen data.

Supervised Learning Process

What is Supervised Learning?

At its core, supervised learning is defined by the presence of a “supervisor,” much like a teacher guiding a student. This “supervisor” is the labeled data itself. In supervised machine learning, we feed the algorithm a dataset where each data point is paired with a corresponding correct output or label. The algorithm’s task is to learn a mapping function that can accurately predict the output for new, unlabeled data based on the patterns it discerns from the training data.

Think of it as learning by example. You provide the machine with examples of inputs and their desired outputs, and the machine learns to generalize from these examples. This learning process allows the algorithm to make informed predictions or classifications when presented with new, similar data.

Key Characteristics of Supervised Learning

- Labeled Data is Essential: The defining characteristic of supervised learning is its reliance on labeled data. Without pre-existing labels, the algorithm cannot learn the desired mappings.

- Goal-Oriented Learning: Supervised learning is goal-oriented. The objective is clearly defined – to predict a specific output or classify data into predefined categories.

- Training Phase: Supervised learning involves a distinct training phase where the algorithm learns from the labeled data. This phase is crucial for model development and performance.

- Prediction on New Data: Once trained, the model can be used to make predictions or classifications on new, unseen data points.

How Supervised Learning Works: A Step-by-Step Explanation

- Data Collection and Labeling: The process begins with gathering a relevant dataset and labeling it. This involves identifying the input features and assigning the correct output label for each data point. For example, in image classification, this would involve collecting images of different objects and labeling each image with the object it contains (e.g., “cat,” “dog,” “car”).

- Dataset Splitting: The labeled dataset is typically divided into two sets: a training set and a testing set. The training set is used to train the supervised learning model, while the testing set is used to evaluate its performance on unseen data.

- Algorithm Selection: Based on the nature of the problem (regression or classification) and the data characteristics, an appropriate supervised learning algorithm is chosen. Examples include linear regression, logistic regression, decision trees, and support vector machines.

- Model Training: The chosen algorithm is trained using the training dataset. During training, the algorithm iteratively adjusts its internal parameters to minimize the difference between its predictions and the actual labels in the training data. This process aims to learn the underlying patterns and relationships within the data.

- Model Evaluation: After training, the model’s performance is evaluated using the testing dataset. Various evaluation metrics are used to assess how well the model generalizes to unseen data. For regression problems, metrics like Mean Squared Error (MSE) and R-squared are used. For classification problems, metrics like accuracy, precision, recall, and F1-score are employed.

- Prediction and Deployment: If the model performs satisfactorily on the testing set, it can be deployed to make predictions on new, real-world data. For example, a trained spam filter can be used to classify incoming emails as spam or not spam.
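The steps above can be sketched end to end in plain Python. This is a minimal illustration under stated assumptions, not a production pipeline: the dataset is synthetic, the feature meanings (hours studied, hours slept) and the 80/20 split are made up for the example, and a nearest-centroid classifier stands in for the algorithms named above only because it fits in a few lines.

```python
import random

# Step 1: a hypothetical labeled dataset.
# Each row is ((hours_studied, hours_slept), label); the values are synthetic.
random.seed(0)
data = []
for _ in range(100):
    label = random.choice(["pass", "fail"])
    center = (8.0, 7.0) if label == "pass" else (3.0, 5.0)
    features = (random.gauss(center[0], 1.0), random.gauss(center[1], 1.0))
    data.append((features, label))

# Step 2: shuffle, then split into training (80%) and testing (20%) sets.
random.shuffle(data)
split = int(0.8 * len(data))
train, test = data[:split], data[split:]


# Steps 3-4: "training" here means computing the mean point of each class.
def fit_centroids(rows):
    sums, counts = {}, {}
    for (x1, x2), label in rows:
        total = sums.setdefault(label, [0.0, 0.0])
        total[0] += x1
        total[1] += x2
        counts[label] = counts.get(label, 0) + 1
    return {
        label: (total[0] / counts[label], total[1] / counts[label])
        for label, total in sums.items()
    }


def predict(centroids, features):
    # Assign the label whose centroid is closest (squared Euclidean distance).
    def sq_dist(c):
        return (features[0] - c[0]) ** 2 + (features[1] - c[1]) ** 2

    return min(centroids, key=lambda label: sq_dist(centroids[label]))


centroids = fit_centroids(train)

# Step 5: evaluate on data the model has not seen during training.
correct = sum(predict(centroids, x) == y for x, y in test)
accuracy = correct / len(test)
print(f"test accuracy: {accuracy:.2f}")

# Step 6: use the trained model on a new, unlabeled data point.
new_point = (7.5, 7.2)
print("prediction for new point:", predict(centroids, new_point))
```

The key design point is that the test rows are never passed to `fit_centroids`, so the accuracy reflects performance on unseen data rather than on memorized examples.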

Real-world Examples of Supervised Learning

To solidify understanding, consider these everyday examples of supervised learning in action:

- Email Spam Filtering: Supervised learning algorithms are trained on emails labeled as “spam” or “not spam.” They learn to identify patterns in email content, sender information, and other features to classify new emails, effectively filtering out unwanted messages.

- Image Recognition: From facial recognition on smartphones to automated image tagging on social media, supervised learning powers image recognition systems. These systems are trained on vast datasets of labeled images to identify objects, scenes, and even people within images.

- Medical Diagnosis: In healthcare, supervised learning assists in diagnosis by analyzing patient data like medical images, test results, and medical history. Trained models can detect patterns indicative of diseases, aiding doctors in making accurate and timely diagnoses.

- Credit Risk Assessment: Banks and financial institutions use supervised learning to assess credit risk. By training models on historical loan data (labeled with loan outcomes – repaid or defaulted), they can predict the likelihood of a new loan applicant defaulting, helping them make informed lending decisions.

- Sentiment Analysis: Understanding customer sentiment from text data (like reviews or social media posts) is crucial for businesses. Supervised learning models can be trained to classify text as positive, negative, or neutral, providing valuable insights into customer opinions and preferences.

Types of Supervised Learning Algorithms

Supervised learning algorithms broadly fall into two main categories, based on the type of output they predict:

Regression: Predicting Continuous Values

Regression algorithms are employed when the goal is to predict a continuous numerical value. Examples include predicting house prices, stock prices, temperature, or customer spending. In regression, the output variable is a real number.

Common Regression Algorithms

- Linear Regression: A fundamental algorithm that models the relationship between variables by fitting a linear equation to the observed data. It’s simple yet effective when the relationship is approximately linear.

- Polynomial Regression: An extension of linear regression that can model non-linear relationships by fitting a polynomial equation to the data.

- Support Vector Regression (SVR): Applies the support vector machine approach to regression tasks. It fits a function that keeps as many data points as possible within a tolerance margin around its predictions, penalizing only the errors that fall outside that margin.

- Decision Tree Regression: Utilizes a tree-like structure to make decisions and predict continuous values. Decision trees are interpretable and can handle both linear and non-linear relationships.

- Random Forest Regression: An ensemble method that combines multiple decision trees to improve prediction accuracy and robustness. Random forests are less prone to overfitting than single decision trees.
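To make the simplest of these concrete, here is a hedged sketch of linear regression with a single feature, fitted with the closed-form ordinary least squares solution. The helper name `fit_line` and the house-size data are invented for illustration; real projects would normally rely on a library implementation.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    # The fitted line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data: house size in square meters -> price in thousands.
sizes = [50, 60, 80, 100, 120]
prices = [152, 178, 243, 297, 362]

slope, intercept = fit_line(sizes, prices)

# Predict a continuous value for a house size not seen during training.
predicted = slope * 90 + intercept
print(f"slope={slope:.3f}, intercept={intercept:.3f}, prediction={predicted:.1f}")
```

The output is a real number rather than a category, which is what distinguishes regression from classification.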

Classification: Predicting Categorical Values

Classification algorithms are used when the goal is to predict a categorical value, assigning data points to predefined categories or classes. Examples include classifying emails as spam or not spam, identifying the type of object in an image (cat, dog, bird), or predicting customer churn (churn or no churn).

Common Classification Algorithms

- Logistic Regression: Despite its name, logistic regression is a classification algorithm. It models the probability of a data point belonging to a particular class. It’s widely used for binary classification problems (two classes).

- Support Vector Machines (SVM): Powerful and versatile algorithms that can perform both linear and non-linear classification. SVMs aim to find the optimal hyperplane that separates different classes with the maximum margin.

- Decision Trees: As with regression, decision trees can also be used for classification. They create a tree-like structure to classify data based on a series of decisions or rules.

- Random Forests: Ensemble learning methods that combine multiple decision trees to enhance classification accuracy and stability.

- Naive Bayes: A probabilistic classifier based on Bayes’ theorem. It assumes that features are conditionally independent given the class, which simplifies computation. Although this assumption rarely holds exactly, the classifier is often surprisingly effective for text classification and other applications.
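As one illustration from this list, the sketch below trains a one-feature logistic regression with batch gradient descent on the log loss. It is a simplified teaching example: the function names, the learning rate, the number of epochs, and the study-hours data are all assumptions chosen for the example, not recommended settings.

```python
import math


def sigmoid(z):
    # Maps any real number to a probability between 0 and 1.
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, epochs=2000):
    """Batch gradient descent on the average log loss for one feature."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus label
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Made-up labeled data: hours studied -> 1 (passed) or 0 (failed).
hours = [1, 2, 3, 4, 5, 6, 7, 8]
passed = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = fit_logistic(hours, passed)


def predict_proba(x):
    # Estimated probability that a student who studied x hours passes.
    return sigmoid(w * x + b)


def predict_class(x, threshold=0.5):
    # Convert the probability into a categorical prediction.
    return 1 if predict_proba(x) >= threshold else 0


print(predict_class(1), predict_class(8))
```

The model outputs a probability, and the class label comes from comparing that probability to a threshold. The 0.5 threshold used here is a common default, not a requirement.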

Evaluating Supervised Learning Models

Evaluating the performance of supervised learning models is crucial to ensure they are accurate, reliable, and generalize well to new data. Different evaluation metrics are used for regression and classification tasks.

Evaluation Metrics for Regression

- Mean Squared Error (MSE): MSE calculates the average of the squared differences between the predicted values and the actual values. A lower MSE indicates better model performance, as it signifies smaller prediction errors.

- Root Mean Squared Error (RMSE): RMSE is simply the square root of the MSE. It provides a more interpretable measure of prediction error, as it is in the same units as the target variable. Like MSE, lower RMSE values are better.

- Mean Absolute Error (MAE): MAE measures the average of the absolute differences between predicted and actual values. MAE is less sensitive to outliers compared to MSE and RMSE, making it a robust metric when dealing with datasets containing extreme values.

- R-squared (Coefficient of Determination): R-squared measures the proportion of the variance in the target variable that is explained by the model. Higher values indicate a better fit, and an R-squared of 1 means the model perfectly explains all the variance in the target variable. It typically falls between 0 and 1, but it can be negative on held-out data when the model predicts worse than simply using the mean of the target.
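The four regression metrics above follow directly from their definitions. The sketch below computes them in plain Python; the function name `regression_metrics` and the sample values are illustrative assumptions.

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MSE, RMSE, MAE, and R-squared from actual and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mse = sum(e * e for e in errors) / n      # average squared error
    rmse = math.sqrt(mse)                     # same units as the target
    mae = sum(abs(e) for e in errors) / n     # average absolute error

    # R-squared compares the model's squared error with that of a baseline
    # that always predicts the mean of the actual values.
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1 - ss_res / ss_tot

    return {"mse": mse, "rmse": rmse, "mae": mae, "r2": r2}


# Made-up actual and predicted values.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 8.0, 9.5]

metrics = regression_metrics(y_true, y_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Note that `ss_tot` is zero when every actual value is identical, in which case R-squared is undefined; this sketch does not guard against that case.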

Evaluation Metrics for Classification

- Accuracy: Accuracy is the most straightforward metric, representing the percentage of correctly classified instances out of the total instances. While easy to understand, accuracy can be misleading in cases of imbalanced datasets (where one class is much more frequent than others).

- Precision: Precision focuses on the accuracy of positive predictions. It measures the proportion of correctly predicted positive instances out of all instances predicted as positive. High precision means the model is good at avoiding false positives.

- Recall: Recall, also known as sensitivity or true positive rate, measures the proportion of correctly predicted positive instances out of all actual positive instances. High recall means the model is good at identifying most of the positive instances and avoiding false negatives.

- F1 Score: The F1 score is the harmonic mean of precision and recall. It provides a balanced measure of a model’s performance, especially useful when dealing with imbalanced datasets. A higher F1 score indicates a better balance between precision and recall.

- Confusion Matrix: A confusion matrix is a table that visualizes the performance of a classification model by showing the counts of true positives, true negatives, false positives, and false negatives. It provides a detailed breakdown of the model’s predictions and helps identify areas where it may be struggling.
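All of these classification metrics can be derived from the four confusion matrix counts. The following sketch shows one way to do that for a binary problem; the function names and the small imbalanced dataset are assumptions made for the example.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary problem."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1  # predicted positive, actually negative
        elif actual == positive:
            fn += 1  # predicted negative, actually positive
        else:
            tn += 1
    return tp, fp, tn, fn


def classification_metrics(tp, fp, tn, fn):
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up imbalanced labels: only 2 of the 10 instances are positive.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
scores = classification_metrics(tp, fp, tn, fn)
print(tp, fp, tn, fn)
print(scores)
```

On this data the accuracy is 0.8, while precision, recall, and F1 are all 0.5, which illustrates why accuracy alone can look better than the model really is on imbalanced datasets.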

Applications of Supervised Learning

The versatility of supervised learning makes it applicable across a wide spectrum of industries and domains. Here are some prominent applications:

- Spam Filtering: As mentioned earlier, supervised learning is the backbone of effective spam filters, protecting users from unwanted and potentially harmful emails.

- Image Classification: From automatic photo tagging to medical image analysis, supervised learning empowers machines to understand and categorize visual information, revolutionizing fields like computer vision and healthcare.

- Medical Diagnosis: Supervised learning aids medical professionals in diagnosing diseases, predicting patient outcomes, and personalizing treatment plans, which can improve patient care.

- Fraud Detection: Financial institutions leverage supervised learning to detect fraudulent transactions in real time, safeguarding customer accounts and preventing financial losses.

- Natural Language Processing (NLP): Supervised learning is fundamental to many NLP tasks, including sentiment analysis, machine translation, chatbot development, and text summarization, enabling machines to understand, interpret, and generate human language.

Advantages of Supervised Learning

- Leverages Labeled Data: When labeled data is available, supervised learning uses it directly to learn patterns and make predictions.

- Optimizes Performance: With clear objectives and evaluation metrics, supervised learning allows for iterative model optimization to achieve desired performance levels.

- Solves Real-World Problems: Its ability to address both regression and classification problems makes supervised learning a powerful tool for tackling diverse real-world challenges.

- Versatile Applications: As highlighted in the applications section, supervised learning has broad applicability across numerous industries and domains.

- Controlled Output: In classification tasks, supervised learning allows for defining and controlling the specific classes or categories the model will predict.

Disadvantages of Supervised Learning

- Requires Labeled Data: The need for labeled data is both a strength and a weakness. Obtaining large, high-quality labeled datasets can be expensive, time-consuming, and sometimes impractical.

- Computational Demands: Training complex supervised learning models, especially on large datasets, can be computationally intensive and time-consuming, requiring significant computing resources.

- Not Suitable for All Tasks: Supervised learning is not ideal for tasks where labeled data is scarce or unavailable, or when the goal is to discover hidden patterns in unlabeled data.

- Complexity with Big Data: Although supervised learning is powerful, processing and classifying extremely large datasets can pose challenges for its algorithms.

- Training Process Overhead: The training process itself requires careful data preparation, algorithm selection, hyperparameter tuning, and evaluation, which can be complex and require expertise.

Supervised Learning vs. Unsupervised Learning: Key Differences

While supervised learning thrives on labeled data to predict outputs, unsupervised learning explores unlabeled data to discover hidden patterns and structures. Here’s a table summarizing the key differences:

| Parameters | Supervised Machine Learning | Unsupervised Machine Learning |
|---|---|---|
| Input Data | Labeled data | Unlabeled data |
| Computational Complexity | Often simpler | Often more computationally complex |
| Accuracy | Typically more accurate on the target task | Typically less accurate, and harder to measure |
| Number of Classes | Known number of classes | Unknown number of classes |
| Data Analysis | Typically offline analysis | Can be applied to real-time analysis |
| Algorithms Used | Linear Regression, Logistic Regression, SVM, Decision Trees, Random Forests, Neural Networks | K-Means Clustering, Hierarchical Clustering, Association Rule Learning |
| Output | Desired output is provided | Desired output is not explicitly provided |
| Training Data | Uses labeled training data to infer a model | Uses unlabeled data; no labeled training set |
| Model Complexity | May struggle with very large, complex models | Can handle larger and more complex models |
| Model Testing | Model can be tested and evaluated | Evaluation is more challenging without ground truth |
| Common Terminology | Classification (often used) | Clustering (often used) |
| Example Application | Optical character recognition | Customer segmentation |
| Supervision | Requires supervision (labeled data) for training | No supervision required for training |

Conclusion: The Power of Supervised Learning

Supervised learning stands as a cornerstone of modern machine learning, providing powerful techniques for prediction and classification. Its ability to learn from labeled data and generalize to new instances has led to transformative applications across diverse fields. While it has its limitations, particularly the reliance on labeled data, the strengths and versatility of supervised learning solidify its crucial role in shaping the future of artificial intelligence and data-driven solutions.

Frequently Asked Questions (FAQs)

1. What is the core difference between supervised and unsupervised learning?

The fundamental difference lies in the type of data used for training. Supervised learning uses labeled data, where each data point has a known output or category. The algorithm learns to map inputs to outputs. Unsupervised learning, on the other hand, uses unlabeled data and aims to discover hidden patterns, structures, or groupings within the data itself, without explicit output guidance.

2. Can you explain supervised learning in simple terms?

Imagine teaching a child with flashcards. You show a picture (input) and tell them the correct name for it (labeled output). Supervised learning is similar. You “show” the machine examples of inputs and their correct outputs (labeled data), and the machine learns to predict the correct output for new inputs based on what it has learned from those examples.

3. What are some popular algorithms used in supervised learning?

Common supervised learning algorithms include:

- Classification: Logistic Regression, Support Vector Machines (SVM), Decision Trees, Random Forests, Naive Bayes.

- Regression: Linear Regression, Polynomial Regression, Support Vector Regression (SVR), Decision Tree Regression, Random Forest Regression.

4. What are some popular algorithms used in unsupervised learning?

Common unsupervised learning algorithms include:

- Clustering: K-Means Clustering, Hierarchical Clustering, DBSCAN.

- Dimensionality Reduction: Principal Component Analysis (PCA), t-SNE.

- Association Rule Learning: Apriori Algorithm, Eclat Algorithm.

5. What is unsupervised learning and when is it used?

Unsupervised learning is a type of machine learning where the algorithm is trained on unlabeled data. It aims to find patterns, structures, and relationships in the data without explicit guidance or labeled outputs. It’s used when you want to:

- Discover hidden groupings in data (clustering).

- Reduce the dimensionality of data while preserving important information.

- Detect anomalies or outliers in data.

- Learn associations between different items in a dataset.

6. How do I choose between supervised and unsupervised learning?

The choice depends primarily on the nature of your data and your goal:

- Choose supervised learning if you have labeled data and want to make predictions or classifications for new data based on that labeled data. Your goal is to predict a specific output.

- Choose unsupervised learning if you have unlabeled data and want to explore the data to find patterns, structures, or groupings. Your goal is to understand the data better and extract meaningful insights without predefined outputs.
