At LEARNS.EDU.VN, we believe understanding the intricacies of machine learning is key to unlocking its vast potential. So, what are the features in machine learning? This guide explains feature types, their importance, and how they affect model performance. Discover insights into feature engineering, data preprocessing, and feature selection, all aimed at enhancing your machine learning journey.

1. Introduction to Features in Machine Learning

In machine learning, features are the measurable properties or characteristics of data used by algorithms to make predictions or decisions. These features act as inputs, influencing the model’s learning process and subsequent performance. Imagine features as the ingredients in a recipe; the quality and type of ingredients (features) directly impact the final dish (model’s output).

1.1. Defining Features and Their Role

Features are individual, quantifiable properties of the data. They can be numerical, categorical, or even more complex representations like images or text. The role of features is to provide the machine learning model with the necessary information to identify patterns and make accurate predictions. Without relevant and well-prepared features, even the most advanced algorithms will struggle to perform effectively.

1.2. Why Feature Understanding is Crucial

Understanding features is crucial for several reasons:

- Improved Model Accuracy: Relevant and well-engineered features can significantly improve the accuracy of machine learning models.

- Enhanced Model Interpretability: Knowing which features are most influential helps in understanding how the model makes decisions.

- Reduced Overfitting: Selecting the right features can prevent the model from learning noise in the data, leading to better generalization.

- Efficient Training: Using only the most important features reduces the computational cost and time required for training the model.

1.3. The Importance of Feature Selection

Feature selection is a critical step in machine learning, involving choosing the most relevant subset of features from your dataset to train your model. This process not only simplifies the model, making it easier to interpret, but also improves its performance by reducing overfitting and enhancing generalization. Selecting the right features can lead to more accurate predictions, faster training times, and a better understanding of the underlying patterns in your data.

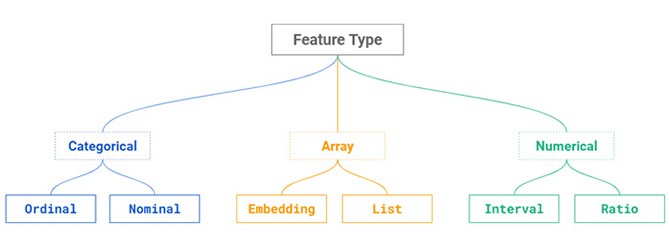

2. Types of Features in Machine Learning

Machine learning features can be broadly classified into several types, each requiring different preprocessing and handling techniques. Understanding these types is essential for effective feature engineering and model building.

2.1. Numerical Features

Numerical features represent quantitative data and can be further divided into two categories:

- Discrete Features: These are integer-based features that represent countable items. Examples include the number of rooms in a house or the number of customer transactions in a month.

- Continuous Features: These features represent measurable quantities and can take on any value within a range. Examples include temperature, height, or stock prices.

Numerical features often require scaling or normalization to ensure that no single feature dominates the model due to its magnitude.

2.2. Categorical Features

Categorical features represent qualitative data and can be divided into:

- Nominal Features: These features represent categories without any inherent order. Examples include colors (red, blue, green) or types of fruit (apple, banana, orange).

- Ordinal Features: These features represent categories with a meaningful order or ranking. Examples include education levels (high school, bachelor’s, master’s) or customer satisfaction ratings (low, medium, high).

Categorical features need to be encoded into numerical format before being used in most machine learning algorithms. Common encoding techniques include one-hot encoding and label encoding.
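A minimal plain-Python sketch of the two cases above: the nominal colors get one-hot vectors, which imply no order, while the ordinal education levels get integers that follow their rank. The helper names and category lists are illustrative assumptions; in practice, scikit-learn's `OneHotEncoder` and `OrdinalEncoder` do the same job.

```python
# Illustrative category lists (hypothetical, not from a real dataset).
NOMINAL_COLORS = ["red", "blue", "green"]
ORDINAL_EDUCATION = ["high school", "bachelor's", "master's"]  # order matters


def one_hot(value, categories):
    """Return a 0/1 vector with a single 1 at the position of `value`."""
    return [1 if value == c else 0 for c in categories]


def ordinal_encode(value, ordered_categories):
    """Map a category to its rank, preserving the meaningful order."""
    return ordered_categories.index(value)


print(one_hot("blue", NOMINAL_COLORS))                # [0, 1, 0]
print(ordinal_encode("master's", ORDINAL_EDUCATION))  # 2
```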

2.3. Text Features

Text features consist of textual data, such as product reviews, news articles, or social media posts. These features require special preprocessing techniques to convert them into numerical representations that machine learning models can understand.

- Bag of Words (BoW): A simple technique that counts the frequency of each word in a document.

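- Term Frequency-Inverse Document Frequency (TF-IDF): A refinement of BoW that weights each word’s count in a document by how rare the word is across the whole corpus, so common words that appear almost everywhere contribute little.

- Word Embeddings (Word2Vec, GloVe, BERT): These techniques represent words as dense vectors that capture semantic relationships between words. Word2Vec and GloVe assign one fixed vector per word, while BERT produces contextual vectors that depend on the surrounding text.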
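Bag of Words and TF-IDF can be sketched in a few lines of plain Python. This uses the unsmoothed textbook form idf = log(N / df), where N is the number of documents and df the number of documents containing the term; the toy corpus is hypothetical. scikit-learn's `TfidfVectorizer` uses a smoothed idf and L2-normalizes rows by default, so its numbers will differ.

```python
import math
from collections import Counter

# Toy corpus (illustrative only).
docs = [
    "the product works great",
    "the delivery was slow",
    "great product great price",
]
tokenized = [d.split() for d in docs]

# Bag of Words: raw term counts per document (word order is ignored).
bow = [Counter(tokens) for tokens in tokenized]

# Document frequency: in how many documents each term appears.
df = Counter(term for tokens in tokenized for term in set(tokens))
n_docs = len(docs)


def tf_idf(doc_counts):
    """Weight each term count by log(N / df); a term found in every document gets 0."""
    return {term: count * math.log(n_docs / df[term]) for term, count in doc_counts.items()}


weights = tf_idf(bow[2])
print(bow[2]["great"])  # 2
print(weights)          # "price" (in one document) outweighs "product" (in two)
```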

2.4. Image Features

Image features represent visual data and can be extracted in several ways:

- Pixel Values: Raw pixel values of an image.

- Edge Detection: Identifying edges and boundaries in an image.

- Texture Analysis: Analyzing the texture patterns in an image.

- Convolutional Neural Networks (CNNs): Deep learning models that automatically learn hierarchical features from images.
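Edge detection can be sketched with a Sobel filter, one classic choice that the list above does not name: slide a 3x3 kernel over a grayscale image and record how sharply intensity changes. The pure-Python version below assumes a small image given as a list of lists and skips border pixels; real pipelines would typically use a library such as OpenCV or scikit-image.

```python
# Sobel kernel that responds to left-to-right intensity changes (vertical edges).
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def sobel_x(image):
    """Apply SOBEL_X to every interior pixel of a 2D grayscale image.

    This is cross-correlation (no kernel flip), which is what most CNN
    libraries also compute under the name "convolution".
    """
    h, w = len(image), len(image[0])
    out = [[0] * (w - 2) for _ in range(h - 2)]
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            total = 0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    total += SOBEL_X[di + 1][dj + 1] * image[i + di][j + dj]
            out[i - 1][j - 1] = total
    return out


# 4x4 image: dark left half (0), bright right half (10).
img = [[0, 0, 10, 10] for _ in range(4)]
print(sobel_x(img))  # [[40, 40], [40, 40]]: strong response at the edge
```

A flat region produces zeros, so large absolute values mark edges.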

2.5. Time Series Features

Time series features represent data points indexed in time order. These features require specific techniques to capture temporal dependencies and patterns.

- Lag Features: Past values of the time series used as predictors.

- Rolling Statistics: Statistical measures calculated over a rolling window of time (e.g., moving average, standard deviation).

- Seasonal Decomposition: Decomposing the time series into trend, seasonal, and residual components.
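Lag features and rolling statistics can be sketched in plain Python. The helper names and the toy `sales` series are hypothetical; pandas offers `shift` and `rolling` for the same purpose.

```python
def lag_features(series, lags):
    """For each time step t, collect series[t - k] for every k in `lags` (None if unavailable)."""
    return [[series[t - k] if t - k >= 0 else None for k in lags] for t in range(len(series))]


def rolling_mean(series, window):
    """Mean of the `window` most recent values up to and including t (None until the window fills)."""
    out = []
    for t in range(len(series)):
        if t + 1 < window:
            out.append(None)
        else:
            out.append(sum(series[t + 1 - window : t + 1]) / window)
    return out


sales = [10, 12, 14, 13, 15]
print(lag_features(sales, lags=[1, 2]))  # [[None, None], [10, None], [12, 10], [14, 12], [13, 14]]
print(rolling_mean(sales, window=3))     # [None, None, 12.0, 13.0, 14.0]
```

Here the rolling window includes time t itself. If the model predicts the value at t, shift such statistics back one step so that only past values are used; otherwise the feature leaks the target.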

2.6. Derived Features

Derived features are new features created from existing ones through mathematical operations, combinations, or transformations. This process, known as feature engineering, aims to enhance the model’s ability to capture complex relationships in the data. For instance, you might create a derived feature by calculating the body mass index (BMI) from height and weight or by combining multiple transaction features to generate a total spending feature.
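Both derived features mentioned above are simple arithmetic on existing columns. A minimal sketch, assuming weight in kilograms, height in metres, and transactions stored as dictionaries with a hypothetical `amount` key:

```python
def bmi(weight_kg, height_m):
    """Body mass index: weight in kilograms divided by height in metres squared."""
    return weight_kg / height_m ** 2


def total_spending(transactions):
    """Combine individual transaction amounts into one aggregate feature."""
    return sum(t["amount"] for t in transactions)


print(round(bmi(70, 1.75), 1))                               # 22.9
print(total_spending([{"amount": 20.0}, {"amount": 5.5}]))  # 25.5
```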

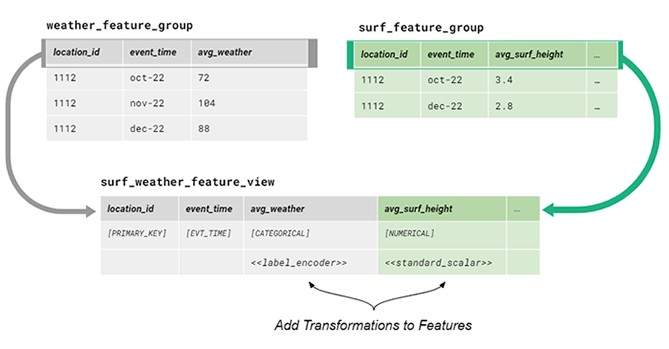

3. Feature Engineering: Crafting Effective Features

Feature engineering involves transforming raw data into features that better represent the underlying problem to the machine learning model, resulting in improved accuracy and performance.

3.1. Importance of Feature Engineering

Feature engineering is often considered more important than algorithm selection. A well-engineered feature set can enable even a simple model to achieve excellent results, while a poorly engineered feature set can hinder the performance of the most sophisticated algorithms.

3.2. Techniques for Numerical Features

- Scaling: Scaling numerical features ensures that they have a similar range of values, preventing features with larger values from dominating the model. Common scaling techniques include Min-Max scaling and standardization.

- Transformation: Transforming numerical features can make skewed data closer to normally distributed, which can improve the performance of some models. Common transformations include logarithmic, square-root, and power transformations (for example, Box-Cox or Yeo-Johnson).

- Binning: Binning involves grouping continuous values into discrete bins, which can help to simplify the data and make it easier for the model to learn.
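A plain-Python sketch of two of these techniques: a log(1 + x) transform that compresses a right-skewed column, and equal-width binning. The income values and helper names are hypothetical; scaling is sketched separately under the scaling techniques.

```python
import math


def log_transform(values):
    """Apply log(1 + x), which compresses large values; assumes every x >= 0."""
    return [math.log1p(v) for v in values]


def equal_width_bins(values, n_bins):
    """Assign each value to one of n_bins equal-width intervals spanning [min, max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)  # all values identical: a single bin
    width = (hi - lo) / n_bins
    # min(...) keeps the maximum value in the last bin instead of opening a new one.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


incomes = [20_000, 35_000, 50_000, 1_000_000]  # hypothetical, right-skewed
print([round(v, 2) for v in log_transform(incomes)])
print(equal_width_bins([0, 2, 5, 9, 10], n_bins=2))  # [0, 0, 1, 1, 1]
```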

3.3. Techniques for Categorical Features

- One-Hot Encoding: One-hot encoding creates a binary column for each category, indicating the presence or absence of that category.

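- Label Encoding: Label encoding assigns a unique integer to each category. Because the integers imply an order, it suits ordinal features and tree-based models better than nominal features in linear models.

- Target Encoding: Target encoding replaces each category with the mean value of the target variable for that category. Computing these means on the same rows used for training can leak target information, so they are usually computed on training folds only.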
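A plain-Python sketch of label encoding and mean target encoding. The `cities`/`churned` data and helper names are hypothetical; unseen categories fall back to the global target mean, which is one common convention rather than the only one.

```python
from collections import defaultdict


def label_encode(values):
    """Give each distinct category an integer, in order of first appearance."""
    mapping = {}
    for v in values:
        mapping.setdefault(v, len(mapping))
    return [mapping[v] for v in values], mapping


def fit_target_encoding(categories, targets):
    """Mean of the target for each category, computed on training data only."""
    sums, counts = defaultdict(float), defaultdict(int)
    for c, y in zip(categories, targets):
        sums[c] += y
        counts[c] += 1
    global_mean = sum(targets) / len(targets)
    return {c: sums[c] / counts[c] for c in sums}, global_mean


def apply_target_encoding(categories, encoding, global_mean):
    """Replace each category with its mean; unseen categories get the global mean."""
    return [encoding.get(c, global_mean) for c in categories]


cities = ["paris", "rome", "paris", "oslo"]
churned = [1, 0, 0, 1]
codes, mapping = label_encode(cities)
enc, prior = fit_target_encoding(cities, churned)
print(codes)                                                 # [0, 1, 0, 2]
print(apply_target_encoding(["rome", "lima"], enc, prior))  # [0.0, 0.5]
```

To limit leakage, fit the encoding inside each training fold (out-of-fold) rather than on the full training set at once.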

3.4. Techniques for Text Features

- Tokenization: Breaking down text into individual words or tokens.

- Stop Word Removal: Removing common words that do not carry much meaning (e.g., “the”, “a”, “is”).

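- Stemming and Lemmatization: Reducing words to a base form. Stemming strips suffixes with heuristic rules (e.g., “running” to “run”), while lemmatization uses a vocabulary and morphological analysis to return the dictionary form (e.g., “better” to “good”).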
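The three steps can be chained in plain Python. The stop-word list is a tiny illustrative assumption, and `toy_stem` is a deliberately crude suffix stripper, not the Porter algorithm; libraries such as NLTK provide real stemmers (e.g. `PorterStemmer`) and lemmatizers (e.g. `WordNetLemmatizer`).

```python
import re

# Tiny illustrative stop-word list; real lists are much longer.
STOP_WORDS = {"the", "a", "is", "and"}


def tokenize(text):
    """Lowercase, then split on anything that is not a letter or digit."""
    return re.findall(r"[a-z0-9]+", text.lower())


def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]


def toy_stem(token):
    """Crude suffix stripping for illustration only; NOT the Porter algorithm."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            stem = token[: -len(suffix)]
            # Collapse a doubled final consonant: "runn" -> "run".
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeiou":
                stem = stem[:-1]
            return stem
    return token


tokens = remove_stop_words(tokenize("The dog is running and jumped!"))
print([toy_stem(t) for t in tokens])  # ['dog', 'run', 'jump']
```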

3.5. Creating Interaction Features

Interaction features are created by combining two or more existing features. These features can capture complex relationships between variables that individual features might miss. For example, an interaction feature might be the product of two numerical features or the combination of two categorical features.
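Both kinds of interaction mentioned above can be sketched directly. The column names and values below are hypothetical.

```python
def numeric_interaction(a, b):
    """Product of two numerical features, e.g. price x quantity."""
    return [x * y for x, y in zip(a, b)]


def categorical_interaction(a, b, sep="_"):
    """Combine two categorical features into one joint category."""
    return [f"{x}{sep}{y}" for x, y in zip(a, b)]


price = [2.0, 3.5, 1.0]
quantity = [3, 2, 10]
device = ["mobile", "desktop", "mobile"]
country = ["US", "FR", "FR"]

print(numeric_interaction(price, quantity))      # [6.0, 7.0, 10.0]
print(categorical_interaction(device, country))  # ['mobile_US', 'desktop_FR', 'mobile_FR']
```

The joint category can then be one-hot encoded like any other categorical feature.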

3.6. Handling Missing Values

Missing values in your dataset can significantly impact the performance of machine learning models. Addressing this issue involves several strategies, including imputation, where missing values are replaced with estimated values based on statistical measures or model predictions, and removal, where rows or columns with missing values are deleted from the dataset. The choice of method depends on the amount of missing data and its potential impact on the model’s accuracy.
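A minimal sketch of both strategies, assuming missing values are represented as `None`; the data and helper names are hypothetical. In a real pipeline the fill value should be computed on the training data only and reused for validation and test data.

```python
import statistics


def impute(column, strategy="median"):
    """Replace None with the median (default) or mean of the observed values."""
    observed = [v for v in column if v is not None]
    fill = statistics.median(observed) if strategy == "median" else statistics.mean(observed)
    return [fill if v is None else v for v in column]


def drop_incomplete_rows(rows):
    """Keep only rows that contain no missing (None) values."""
    return [r for r in rows if all(v is not None for v in r)]


ages = [25, None, 40, 31, None]
print(impute(ages))                                       # [25, 31, 40, 31, 31]
print(drop_incomplete_rows([[1, 2], [None, 3], [4, 5]]))  # [[1, 2], [4, 5]]
```

The median is less sensitive to outliers than the mean, which is why it is used as the default here.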

4. Feature Selection: Choosing the Right Features

Feature selection involves selecting a subset of the most relevant features from the original feature set. This process helps to reduce overfitting, improve model interpretability, and reduce computational cost.

4.1. Benefits of Feature Selection

- Improved Accuracy: By removing irrelevant or redundant features, feature selection can improve the accuracy of machine learning models.

- Reduced Overfitting: Feature selection reduces the complexity of the model, making it less likely to overfit the training data.

- Enhanced Interpretability: A simpler model with fewer features is easier to understand and interpret.

- Faster Training: Reducing the number of features reduces the computational cost and time required for training the model.

4.2. Feature Selection Methods

- Filter Methods: Filter methods evaluate the relevance of features based on statistical measures such as correlation, chi-squared test, and information gain.

- Wrapper Methods: Wrapper methods evaluate subsets of features by training and testing a machine learning model on each subset. Common wrapper methods include forward selection, backward elimination, and recursive feature elimination.

- Embedded Methods: Embedded methods perform feature selection as part of the model training process. Examples include LASSO regression and decision tree-based methods.
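A filter method can be as simple as ranking features by the absolute Pearson correlation with the target and keeping the top k. The feature names and values are hypothetical. Correlation only detects linear relationships, which is one reason measures such as mutual information are also used.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def filter_select(features, target, k):
    """Rank features by |correlation with target| and return the top k names."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


features = {
    "rooms": [2, 3, 4, 5, 6],
    "noise": [7, 1, 8, 2, 5],
    "area": [50, 70, 80, 110, 130],
}
price = [100, 150, 190, 240, 300]
print(filter_select(features, price, k=2))  # ['rooms', 'area']
```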

4.3. Dimensionality Reduction Techniques

Dimensionality reduction techniques transform high-dimensional data into a lower-dimensional space while preserving the most important information. Common techniques include:

- Principal Component Analysis (PCA): A linear dimensionality reduction technique that identifies the principal components of the data.

- t-Distributed Stochastic Neighbor Embedding (t-SNE): A non-linear dimensionality reduction technique that is particularly useful for visualizing high-dimensional data in a lower-dimensional space.
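The idea behind PCA can be sketched without libraries: center the data, build the covariance matrix, and find its top eigenvector (the first principal component) by power iteration. This is an illustrative substitute; libraries such as scikit-learn's `PCA` compute components via singular value decomposition instead. The data points are hypothetical.

```python
import math


def center_and_covariance(rows):
    """Return the mean-centered rows and their sample covariance matrix."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)] for i in range(d)]
    return centered, cov


def first_principal_component(rows, iterations=200):
    """Approximate the top covariance eigenvector by power iteration,
    then project each centered row onto it (a 1-D representation)."""
    centered, cov = center_and_covariance(rows)
    d = len(cov)
    v = [1.0] * d  # starting vector; must not be orthogonal to the top eigenvector
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    projections = [sum(c[j] * v[j] for j in range(d)) for c in centered]
    return v, projections


data = [[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8]]  # roughly y = 2x
component, scores = first_principal_component(data)
print(component)  # roughly [0.46, 0.89]
```

Projecting onto the first component reduces these two correlated features to a single feature that keeps most of their variance.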

4.4. Feature Importance

Determining feature importance involves evaluating which features have the most significant impact on the predictive performance of a machine learning model. Techniques such as comparing coefficient magnitudes in linear models (meaningful only when features are on comparable scales, e.g., after standardization) or using the importance scores of tree-based methods like Random Forest can provide insights into feature importance. Understanding which features are most influential allows for better model interpretability, feature selection, and overall model optimization.
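One model-agnostic alternative, not named above, is permutation importance: shuffle one feature column and measure how much the model's error increases. The sketch below uses a hypothetical model and data; scikit-learn provides a full implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def mean_squared_error(y_true, y_pred):
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Average increase in MSE when each feature column is shuffled.

    `predict` is any function mapping a list of rows to a list of predictions.
    """
    rng = random.Random(seed)
    baseline = mean_squared_error(y, predict(X))
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1 :] for row, v in zip(X, column)]
            increases.append(mean_squared_error(y, predict(X_perm)) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def model(rows):
    """Hypothetical 'trained' model that only looks at the first feature."""
    return [3 * r[0] for r in rows]


X = [[1, 5], [2, 3], [3, 9], [4, 1]]
y = [3, 6, 9, 12]
print(permutation_importance(model, X, y))  # first value > 0, second exactly 0.0
```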

5. Feature Scaling and Normalization

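Feature scaling and normalization are preprocessing steps that put features on comparable scales, so that features with larger numeric ranges do not dominate the learning process. They matter most for distance-based and gradient-based methods such as k-nearest neighbors, support vector machines, and linear models trained with gradient descent; tree-based models are largely insensitive to feature scale.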

5.1. Why Scaling and Normalization are Important

- Comparable Scales: Scaling and normalization give features similar ranges of values, preventing features with larger values from dominating distance calculations or gradient updates.

- Improved Convergence: Gradient-based optimizers often converge faster when features are scaled or normalized.

- Better Performance: Scaling and normalization can improve the accuracy and stability of machine learning models.

5.2. Scaling Techniques

- Min-Max Scaling: Scales features to a range between 0 and 1.

- Standard Scaling: Scales features to have a mean of 0 and a standard deviation of 1.

- Robust Scaling: Scales features using the median and interquartile range, making it robust to outliers.
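The three scaling techniques can be sketched with Python's `statistics` module. Population standard deviation is used to match scikit-learn's `StandardScaler`; quartiles use the `"inclusive"` method, which matches NumPy's default linear percentiles. The sample data with one outlier is hypothetical.

```python
import statistics


def min_max_scale(values):
    """Map values linearly onto [0, 1]; assumes max > min."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def standard_scale(values):
    """Subtract the mean and divide by the population standard deviation."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    return [(v - mu) / sigma for v in values]


def robust_scale(values):
    """Subtract the median and divide by the interquartile range (Q3 - Q1)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    median = statistics.median(values)
    return [(v - median) / (q3 - q1) for v in values]


x = [1.0, 2.0, 3.0, 4.0, 100.0]  # 100 is an outlier
print(min_max_scale(x))  # the outlier squeezes the other values close to 0
print(robust_scale(x))   # [-1.0, -0.5, 0.0, 0.5, 48.5]
```

With min-max scaling, the single outlier compresses the first four values into a narrow band near 0, while robust scaling keeps them evenly spread.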

5.3. Normalization Techniques

- L1 Normalization: Scales each sample (row vector) so that the sum of the absolute values of its features equals 1.

- L2 Normalization: Scales each sample so that the sum of the squares of its features equals 1 (unit Euclidean length).
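Both normalizations divide a vector by its norm. A minimal sketch applied to one hypothetical sample vector; all-zero vectors are returned unchanged to avoid division by zero, mirroring how scikit-learn's `Normalizer` leaves zero rows alone.

```python
import math


def l1_normalize(row):
    """Divide by the sum of absolute values so they add up to 1."""
    total = sum(abs(v) for v in row)
    return list(row) if total == 0 else [v / total for v in row]


def l2_normalize(row):
    """Divide by the Euclidean norm so the squared values add up to 1."""
    norm = math.sqrt(sum(v * v for v in row))
    return list(row) if norm == 0 else [v / norm for v in row]


sample = [3.0, -4.0]
print(l1_normalize(sample))  # absolute values now sum to 1
print(l2_normalize(sample))  # [0.6, -0.8]
```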

5.4. Considerations for Time Series Data

When dealing with time series data, it’s crucial to preserve the temporal order of the data during preprocessing. Techniques like differencing, where you subtract consecutive observations, can help stabilize the mean of the time series. Additionally, consider seasonal decomposition to separate the time series into trend, seasonal, and residual components, which can then be used as features in your model.
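Differencing is a one-line transformation. The sketch below uses a hypothetical series with a linear trend, which first-order differencing turns into a constant. A `lag` equal to the season length (for example, 12 for monthly data) gives seasonal differencing.

```python
def difference(series, lag=1):
    """y'[t] = y[t] - y[t - lag]; the first `lag` points have no predecessor and are dropped."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


trend = [100, 104, 108, 112, 116]
print(difference(trend))  # [4, 4, 4, 4]
```

To preserve temporal order, split training and test data chronologically and fit any scalers on the training period only.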

6. Working with Embeddings

Embeddings are dense vector representations of categorical or textual data, capturing semantic relationships and improving model performance.

6.1. Understanding Embeddings

An embedding is a mapping from a discrete set (such as words or category values) to a vector space, called the embedding space. Embeddings are lower-dimensional representations of input features, computed by an embedding algorithm or learned as part of model training. They can be used for similarity search and as compact inputs when training a model on a sparse categorical variable.
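An embedding table is a lookup from each discrete item to a vector, and similarity search compares those vectors, commonly with cosine similarity. The 3-dimensional vectors below are made up for illustration; real embeddings are learned and have many more dimensions.

```python
import math

# Hypothetical embeddings; real ones are learned, not hand-written.
EMBEDDINGS = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.2, 0.1],
    "car": [0.0, 0.1, 0.9],
}


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def most_similar(word):
    """Brute-force similarity search over the embedding table."""
    query = EMBEDDINGS[word]
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine_similarity(query, EMBEDDINGS[w]))


print(most_similar("cat"))  # dog
```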

6.2. Types of Embeddings

- Word Embeddings (Word2Vec, GloVe, FastText): These embeddings represent words as dense vectors, typically with tens to a few hundred dimensions (far fewer than the vocabulary size), capturing semantic relationships between words.

- Entity Embeddings: These embeddings represent categorical variables as dense vectors, capturing relationships between categories.

6.3. Using Embeddings in Machine Learning

Embeddings can be used as input features in machine learning models, improving their ability to capture complex relationships between variables. They are particularly useful for high-cardinality categorical data (variables with many distinct values, where one-hot encoding would produce very wide, sparse inputs) and for text data.

7. Advanced Feature Engineering Techniques

Exploring advanced feature engineering techniques can further enhance the predictive power of your models.

7.1. Polynomial Features

Polynomial features involve creating new features by raising existing features to a power or combining multiple features using polynomial functions. These features can capture non-linear relationships between variables.
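A minimal sketch of degree-2 polynomial expansion for one row of features. The column order (bias, original features, then products) is chosen to match scikit-learn's `PolynomialFeatures` for two inputs; the helper name is hypothetical.

```python
from itertools import combinations_with_replacement
from math import prod


def polynomial_features(row, degree=2):
    """All products of the input values up to `degree`, after a leading bias term of 1."""
    out = [1]
    for d in range(1, degree + 1):
        for combo in combinations_with_replacement(range(len(row)), d):
            out.append(prod(row[i] for i in combo))
    return out


print(polynomial_features([2, 3]))  # [1, 2, 3, 4, 6, 9] = [1, a, b, a^2, ab, b^2]
```

The number of columns grows quickly with the degree and the number of inputs, so high degrees are usually paired with regularization or feature selection.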

7.2. Spline Features

Spline features expand a numerical feature into a set of piecewise polynomial basis functions joined at chosen points called knots, allowing a model fitted on these columns to follow flexible non-linear relationships.
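The simplest case is a degree-1 (piecewise-linear) spline basis built from hinge functions. The knot positions below are hypothetical; scikit-learn's `SplineTransformer` generates smoother B-spline bases of higher degree.

```python
def linear_spline_basis(x, knots):
    """Degree-1 spline basis: [x, max(0, x - k1), max(0, x - k2), ...].

    A linear model on these columns can change slope at every knot while
    staying continuous, i.e. it fits a piecewise-linear curve.
    """
    return [x] + [max(0.0, x - k) for k in knots]


print(linear_spline_basis(7.0, knots=[3.0, 5.0, 10.0]))  # [7.0, 4.0, 2.0, 0.0]
```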

7.3. Feature Crossing

Feature crossing involves creating new features by combining two or more existing features. This technique can capture interaction effects between variables and improve model accuracy.
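When the crossed features have many categories, the number of combinations explodes. One common remedy, an assumption here rather than something described above, is to hash each crossed pair into a fixed number of buckets; collisions are possible by design. The helper name and bucket count are hypothetical.

```python
import zlib


def hashed_cross(a, b, n_buckets):
    """Cross two categorical values and hash the pair into one of n_buckets ids.

    zlib.crc32 is used instead of the built-in hash(), which is randomized
    per process for strings and would give different buckets on each run.
    """
    key = f"{a}|{b}".encode("utf-8")
    return zlib.crc32(key) % n_buckets


bucket = hashed_cross("mobile", "US", n_buckets=1000)
print(0 <= bucket < 1000)  # True
```

The resulting bucket id can then be one-hot encoded or fed to an embedding layer.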

7.4. Automated Feature Engineering

Automated feature engineering utilizes algorithms and techniques to automatically generate new features from existing ones, often revealing hidden patterns and improving model performance. Tools like Featuretools and auto-sklearn can automate this process, saving time and effort while potentially discovering valuable features that may not be immediately apparent.

8. Practical Examples of Feature Engineering

Let’s look at a few practical examples of feature engineering across different domains.

8.1. Example 1: E-Commerce

In e-commerce, features can be engineered to predict customer churn, enhance personalized recommendations, and detect fraudulent transactions.

| Feature | Description | Engineering Technique |
|---|---|---|
| Purchase History | Number of past purchases made by the customer | Calculate frequency and recency of purchases; create segments based on purchase behavior |
| Product Category Preferences | Categories of products frequently purchased by the customer | Use one-hot encoding to represent category preferences; create embeddings to capture relationships between categories |
| Website Activity | Time spent on the website, pages visited, products viewed | Calculate session duration, page views per session; create interaction features between time spent and pages visited |
| Customer Reviews | Text reviews written by the customer | Perform sentiment analysis to extract sentiment scores; use TF-IDF or word embeddings to represent the text |
| Shipping Address | Location of the customer | Extract geographical features such as latitude and longitude; calculate distance to nearest distribution center |
| Payment Method | Type of payment method used by the customer | Use one-hot encoding to represent payment methods; identify patterns of fraudulent payment methods |
| Time of Day | Time when the purchase happened | Create cyclical features using sine and cosine transformation to encode the time of day |
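The cyclical encoding in the last row maps the hour onto a circle, so that 23:00 and 00:00 end up close together instead of 23 units apart. A minimal sketch, assuming whole hours from 0 to 23:

```python
import math


def encode_hour(hour):
    """Map hour-of-day (0-23) to (sin, cos) coordinates on the unit circle."""
    angle = 2 * math.pi * hour / 24
    return math.sin(angle), math.cos(angle)


print(round(math.dist(encode_hour(23), encode_hour(0)), 3))  # 0.261 (adjacent hours)
print(round(math.dist(encode_hour(12), encode_hour(0)), 3))  # 2.0 (opposite hours)
```

Both columns are needed: the sine alone maps, for example, 03:00 and 09:00 to the same value.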

8.2. Example 2: Healthcare

In healthcare, features can be engineered to predict disease risk, optimize treatment plans, and improve patient outcomes.

| Feature | Description | Engineering Technique |
|---|---|---|
| Medical History | Past medical conditions, procedures, and medications | Use one-hot encoding to represent medical conditions; create interaction features between conditions and medications |
| Lab Results | Blood tests, urine tests, and other diagnostic tests | Normalize lab results; calculate ratios between different lab values; identify abnormal values |
| Vital Signs | Heart rate, blood pressure, temperature | Calculate mean, min, max, and standard deviation of vital signs; identify trends and patterns |
| Lifestyle Factors | Diet, exercise, smoking habits | Use one-hot encoding to represent lifestyle factors; create interaction features between lifestyle factors and medical conditions |
| Demographic Data | Age, gender, race | Use one-hot encoding to represent demographic data; create interaction features between demographic data and medical conditions |
| Genetic Information | Presence of specific genes or genetic mutations | Use one-hot encoding to represent genetic information; identify genetic markers associated with specific diseases |
| Time Since Last Doctor Visit | Elapsed time since the patient last visited the doctor | Scale or normalize so its range is comparable to other features and does not dominate the model |

8.3. Example 3: Financial Services

In financial services, features can be engineered to detect fraud, assess credit risk, and predict market trends.

| Feature | Description | Engineering Technique |
|---|---|---|
| Transaction History | Past transactions made by the customer | Calculate frequency, recency, and monetary value of transactions; create segments based on transaction behavior |
| Account Information | Account balance, credit limit, interest rate | Normalize account information; calculate ratios between different account values; identify unusual patterns |
| Demographic Data | Age, income, occupation | Use one-hot encoding to represent demographic data; create interaction features between demographic data and transaction behavior |
| Credit Score | Creditworthiness of the customer | Normalize credit score; create interaction features between credit score and transaction behavior |
| Market Data | Stock prices, interest rates, economic indicators | Calculate moving averages, volatility, and correlations between different market variables; identify trends and patterns |
| Social Media Activity | Publicly available information about the customer on social media | Perform sentiment analysis to extract sentiment scores; identify connections to known fraudsters |
| IP Address Location | The geographical location of where a user is making the transaction from | Use one-hot encoding to represent geographical data. |

9. Tools and Libraries for Feature Engineering

Several tools and libraries are available to help streamline the feature engineering process.

9.1. Python Libraries

- Pandas: A powerful library for data manipulation and analysis, providing data structures such as DataFrames and Series.

- NumPy: A library for numerical computing, providing support for arrays, matrices, and mathematical functions.

- Scikit-learn: A comprehensive library for machine learning, providing tools for feature engineering, model selection, and evaluation.

- Featuretools: A library for automated feature engineering, automatically generating new features from relational data.

9.2. Other Tools

- Alteryx: A data analytics platform that provides a visual interface for data preparation, blending, and analysis.

- RapidMiner: A data science platform that provides a graphical user interface for building and deploying machine learning models.

- DataRobot: An automated machine learning platform that automates much of the machine learning pipeline, including feature engineering, model selection, and deployment.

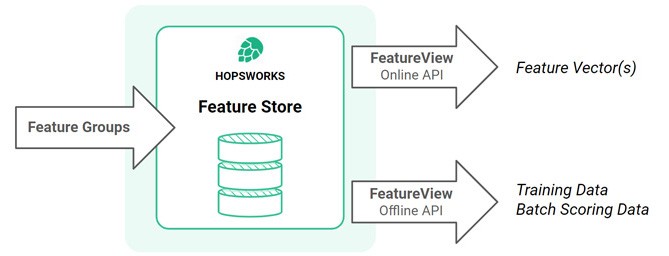

9.3. Cloud-Based Feature Stores

Cloud-based feature stores, such as AWS SageMaker Feature Store, Google Cloud Vertex AI Feature Store, and Azure Feature Store, provide centralized repositories for storing, managing, and serving features for machine learning models. These platforms enable teams to collaborate on feature engineering, ensure consistency between training and serving, and simplify the deployment of machine learning models at scale.

10. Best Practices for Feature Engineering

Following best practices can help to ensure the success of your feature engineering efforts.

10.1. Understand the Data

Before starting feature engineering, take the time to thoroughly understand the data. This includes understanding the meaning of each feature, the relationships between features, and the potential biases or limitations of the data.

10.2. Start with Simple Features

Start with simple, intuitive features before moving on to more complex or advanced features. This will help you to establish a baseline performance and identify the most important features.

10.3. Validate Features

Validate features by testing them on a holdout dataset or using cross-validation. This will help you to ensure that the features generalize well to new data and do not lead to overfitting.
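Cross-validation can be sketched with plain index splitting. Any feature engineering that learns from data (scalers, imputers, target encoders) should be fitted inside each training fold and only applied to the matching validation fold. This unshuffled version is a sketch; scikit-learn's `KFold` uses the same fold sizes when `shuffle=False`.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample lands in exactly one validation fold."""
    # The first n_samples % k folds get one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size


for train, val in k_fold_indices(5, k=2):
    print(train, val)
# [3, 4] [0, 1, 2]
# [0, 1, 2] [3, 4]
```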

10.4. Document Features

Document features by providing a clear description of their meaning, how they were engineered, and any assumptions or limitations. This will help to ensure that the features can be understood and used by others.

10.5. Iterate and Refine

Feature engineering is an iterative process. Continuously iterate and refine your features based on feedback from the model and new insights from the data.

11. The Future of Feature Engineering

The field of feature engineering is constantly evolving, with new techniques and tools being developed all the time.

11.1. Automated Feature Engineering

Automated feature engineering is becoming increasingly popular, with tools and libraries that automatically generate new features from relational data. This can help to streamline the feature engineering process and discover new, valuable features.

11.2. Deep Learning for Feature Engineering

Deep learning models can automatically learn features from raw data, reducing the need for manual feature engineering. This is particularly useful for complex data types such as images, text, and audio.

11.3. Feature Stores

Feature stores are becoming increasingly important for managing and sharing features across different machine learning projects. These centralized repositories enable teams to collaborate on feature engineering, ensure consistency between training and serving, and simplify the deployment of machine learning models at scale.

12. Conclusion: Mastering Feature Engineering

Mastering feature engineering is essential for building high-performance machine learning models. By understanding the different types of features, applying appropriate engineering techniques, and following best practices, you can create features that unlock the full potential of your data.

At LEARNS.EDU.VN, we are dedicated to providing you with the knowledge and resources you need to excel in machine learning. Whether you’re looking to learn new skills, deepen your understanding of key concepts, or find effective learning strategies, we’re here to support you on your journey.

Struggling to find reliable and easy-to-understand learning resources? Lacking motivation and direction in your studies? Visit LEARNS.EDU.VN today and discover a wealth of expertly crafted articles, tutorials, and courses designed to help you master machine learning and other essential skills. Connect with our education experts for personalized guidance and unlock your full potential. Contact us at 123 Education Way, Learnville, CA 90210, United States. Whatsapp: +1 555-555-1212. Website: learns.edu.vn.

Frequently Asked Questions (FAQ)

Here are some frequently asked questions related to features in machine learning:

- What is a feature in machine learning?

  A feature is a measurable property or characteristic of data used by machine learning algorithms to make predictions or decisions.

- Why is feature engineering important?

  Feature engineering is important because it involves transforming raw data into features that better represent the underlying problem to the machine learning model, resulting in improved accuracy and performance.

- What are the different types of features in machine learning?

  The different types of features include numerical features, categorical features, text features, image features, and time series features.

- What is feature selection?

  Feature selection involves selecting a subset of the most relevant features from the original feature set to reduce overfitting, improve model interpretability, and reduce computational cost.

- What are the different feature selection methods?

  The different feature selection methods include filter methods, wrapper methods, and embedded methods.

- What is feature scaling and normalization?

  Feature scaling and normalization are preprocessing steps that put features on comparable scales, preventing features with larger numeric ranges from dominating the learning process.

- What are embeddings?

  Embeddings are dense vector representations of categorical or textual data, capturing semantic relationships and improving model performance.

- What is automated feature engineering?

  Automated feature engineering utilizes algorithms and techniques to automatically generate new features from existing ones, often revealing hidden patterns and improving model performance.

- What are feature stores?

  Feature stores are centralized repositories for storing, managing, and serving features for machine learning models, enabling teams to collaborate on feature engineering, ensure consistency between training and serving, and simplify the deployment of machine learning models at scale.

- How can I learn more about feature engineering?

  You can learn more about feature engineering by reading books, taking online courses, attending conferences, and experimenting with different techniques on real-world datasets.