In the realm of machine learning, particularly when training deep learning models, understanding the concept of an epoch is fundamental. An epoch signifies a complete traversal through the entire training dataset. It’s a crucial part of the training process, ensuring that every data sample contributes to updating the model’s parameters, ultimately aiming for optimized performance over successive epochs.

This article will delve deep into the definition of epochs, elucidate their importance in training robust deep learning models, and explore their impact on overall model performance. We will also differentiate epochs from related concepts like iterations and batches to provide a clear understanding of the training dynamics.

Understanding Epochs in Machine Learning

At its core, an epoch in machine learning refers to one complete cycle of training in which every sample in the training dataset is used exactly once to update the weights of the neural network. Think of it as one full ‘lesson’ for your model using all available learning material (the training data). During each epoch, the model learns patterns from the data and encodes them by adjusting its internal parameters (weights and biases).

In practical deep learning scenarios, datasets are often too large to process in one go due to memory limitations. To handle this, the dataset is divided into smaller, manageable chunks called batches or mini-batches. The model processes these batches sequentially within an epoch. After processing each batch, the model’s parameters are updated using optimization algorithms like Stochastic Gradient Descent (SGD).

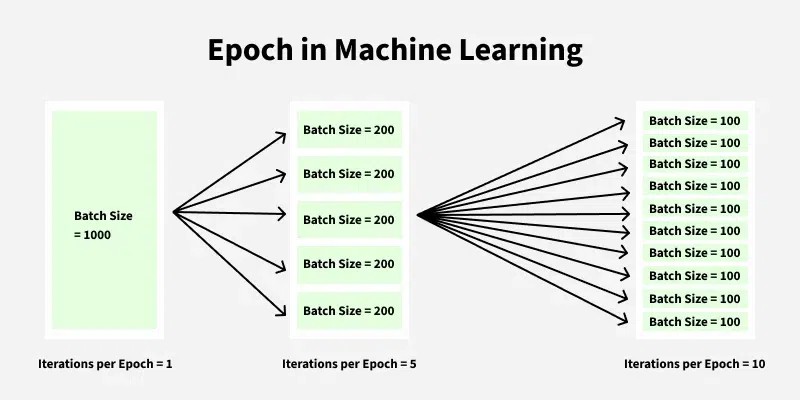

The batch size, a hyperparameter set by the user, is the number of samples in each batch. The number of batches in a single epoch is the total number of training samples divided by the batch size, rounded up when the dataset size is not an exact multiple of the batch size (the final batch is then smaller). Once the model has processed all the batches, and therefore the entire dataset, one epoch is complete.
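The batch-count rule can be written as a small helper. This is a minimal sketch; the function name and its `drop_last` parameter are hypothetical, modeled on the option some data loaders offer (PyTorch's `DataLoader` has a `drop_last` flag, for instance) to discard an incomplete final batch instead of keeping it.

```python
import math

def batches_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of batches (iterations) needed to cover the dataset once."""
    if drop_last:
        # Discard the incomplete final batch, if there is one
        return num_samples // batch_size
    # Keep the smaller final batch, so round up
    return math.ceil(num_samples / batch_size)

print(batches_per_epoch(1000, 100))                  # 10
print(batches_per_epoch(1050, 100))                  # 11 (last batch has 50 samples)
print(batches_per_epoch(1050, 100, drop_last=True))  # 10
```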

Following each epoch, it’s vital to evaluate the model’s performance, typically using a separate validation dataset. This evaluation helps track the learning progress and identify potential issues like overfitting.

The number of epochs is a critical hyperparameter that significantly influences training. Generally, increasing the number of epochs allows the model to learn more complex patterns and potentially improve performance. However, this isn’t always beneficial. Training for too many epochs can lead to overfitting, a scenario where the model becomes overly specialized to the training data and performs poorly on new, unseen data. Monitoring performance on the validation set is crucial to determine the optimal number of epochs and prevent overfitting, often employing techniques like early stopping.
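To make the loop structure concrete, here is a minimal sketch in plain Python. It assumes a toy linear-regression task (learning y = 2x + 1 from noisy samples) instead of a neural network, and the helper names `make_data`, `mse`, and `train` are hypothetical. The outer loop runs the epochs, the inner loop walks the mini-batches with one SGD update per batch, and the model is evaluated on a separate validation set at the end of each epoch.

```python
import random

def make_data(n, seed):
    # Hypothetical synthetic data: points on y = 2x + 1 with small Gaussian noise
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.05)) for x in xs]

def mse(w, b, data):
    # Mean squared error of the linear model w*x + b
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(train_data, val_data, epochs=20, batch_size=100, lr=0.1, seed=0):
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    history = []  # one validation loss per epoch
    data = list(train_data)
    for epoch in range(epochs):
        rng.shuffle(data)  # new sample order every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradients of the batch MSE with respect to w and b
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            # One iteration = one parameter update (plain SGD)
            w -= lr * grad_w
            b -= lr * grad_b
        # End of epoch: every training sample has been used once
        history.append(mse(w, b, val_data))
    return w, b, history

train_set = make_data(1000, seed=1)
val_set = make_data(200, seed=2)
w, b, val_losses = train(train_set, val_set)
print(f"w={w:.2f}, b={b:.2f}, final validation MSE={val_losses[-1]:.4f}")
```

Recording one validation loss per epoch is what produces the learning curve used to spot convergence or overfitting.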

Epoch Example Explained

Let’s illustrate the concept of epochs with a concrete example:

Imagine you have a training dataset containing 1000 images of cats and dogs.

- Scenario 1: Training without batches – If you were to train in a way that processes the entire dataset at once (which is often impractical for large datasets), one epoch would consist of feeding all 1000 images to the model and updating the weights once after processing all of them.

- Scenario 2: Training with batches – More realistically, you decide to use a batch size of 100. This means your dataset of 1000 images is divided into 10 batches (1000 samples / 100 batch size = 10 batches). In this case, one epoch would consist of 10 iterations. In each iteration, the model processes one batch of 100 images and updates its parameters. After 10 iterations, the model has processed all 10 batches, completing one epoch.


In practice, when training models, it’s common to set a generous maximum number of epochs (e.g., 100 or more) and employ an early stopping mechanism. Early stopping monitors the model’s performance on a validation set and halts training once the validation loss has stopped improving for a set number of consecutive epochs (often called the patience), even if the maximum number of epochs has not been reached. This helps prevent overfitting and saves computation time.
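The patience logic can be sketched in a few lines of Python. This is a simplified, hypothetical helper (`early_stopping_epoch` is not a library function) that replays a list of per-epoch validation losses; real callbacks such as Keras’s `EarlyStopping` (with its `monitor`, `patience`, `min_delta`, and `restore_best_weights` arguments) follow the same idea while training runs.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 1-based.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            # Improvement: remember this epoch and reset the counter
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                # Stop here; the weights from best_epoch would be restored
                return epoch, best_epoch
    # Maximum number of epochs reached without triggering early stopping
    return len(val_losses), best_epoch

# Validation loss falls, then rises as the model starts to overfit
losses = [0.90, 0.60, 0.45, 0.40, 0.41, 0.43, 0.47, 0.52, 0.58, 0.65]
print(early_stopping_epoch(losses, patience=3))  # (7, 4)
```

Here training halts after epoch 7, three epochs past the best validation loss at epoch 4, instead of running all 10 epochs.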

Iteration: A Step within an Epoch

To further clarify, let’s define iteration. An iteration is a single step in the training process where a batch of data is passed through the model, the loss is calculated, and the model’s parameters are updated. Within each epoch, the number of iterations is equal to the number of batches.

For instance, if you’re training for 4 epochs and each epoch consists of 10 iterations (due to batching), then the total number of iterations during the entire training process will be 40.

Example: Consider a training dataset with 1000 samples and a chosen batch size of 100.

- Total training samples: 1000

- Batch size: 100

- Iterations per epoch: 1000 / 100 = 10 iterations

- Epochs: Let’s say 5 epochs

Therefore, the total number of iterations for 5 epochs would be 10 iterations/epoch * 5 epochs = 50 iterations.
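The same arithmetic falls out of the nested loop structure used in training. In this minimal sketch (the function name is hypothetical), each pass of the inner loop stands in for one iteration, so a global step counter ends at iterations per epoch multiplied by the number of epochs.

```python
def count_iterations(num_samples, batch_size, epochs):
    """Walk the epoch and batch loops and count parameter updates."""
    global_step = 0
    for _epoch in range(epochs):
        for _start in range(0, num_samples, batch_size):
            # One iteration: a batch is processed and the parameters are updated once
            global_step += 1
    return global_step

print(count_iterations(1000, 100, 5))  # 50
print(count_iterations(1000, 100, 4))  # 40
```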

Batch: Dividing the Dataset

A batch in machine learning is simply a subset of the training dataset. During each iteration, the model processes one batch of data. Using batches is crucial for:

- Memory Efficiency: Processing data in batches reduces the amount of data loaded into memory at once, allowing training of models on larger datasets that wouldn’t fit into memory otherwise.

- Computational Efficiency: Batch processing can leverage vectorized operations and parallel processing capabilities of GPUs, leading to faster training times compared to processing data sample by sample.

- Smoother Gradient Updates: Gradients calculated on batches are often less noisy than gradients calculated on individual samples, leading to more stable and efficient convergence during training.

Example: If you have a dataset of 1000 samples and set a batch size of 50, you will have 20 batches (1000 / 50 = 20). In each epoch, the model’s weights will be updated 20 times, once after processing each batch of 50 samples.
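A batch generator for this example might look like the following sketch. The name `iter_batches` is hypothetical, and it assumes the common practice of shuffling the sample order at the start of each epoch; calling it once corresponds to one epoch.

```python
import random

def iter_batches(samples, batch_size, rng):
    """Yield successive batches that cover `samples` once, in shuffled order."""
    order = list(range(len(samples)))
    rng.shuffle(order)  # a fresh permutation on every call, i.e. every epoch
    for start in range(0, len(order), batch_size):
        yield [samples[i] for i in order[start:start + batch_size]]

dataset = list(range(1000))  # stand-in for 1000 training samples
rng = random.Random(42)
batches = list(iter_batches(dataset, batch_size=50, rng=rng))
print(len(batches))                                           # 20
print({len(batch) for batch in batches})                      # {50}
print(sorted(x for batch in batches for x in batch) == dataset)  # True
```

The last check confirms that one epoch touches every sample exactly once, just in a different order each time.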

Epoch vs. Batch: Key Differences

| Feature | Epoch | Batch |
|---|---|---|
| Definition | One complete pass through the entire training dataset | A subset of the training data processed in one iteration |
| Scope | Encompasses all batches in the training data | A smaller division within an epoch |
| Typical value | Number of epochs: from 1 to many | Batch size: usually greater than 1 and smaller than the dataset |
| Hyperparameter | Set by the user to control training length | Set by the user to manage memory and efficiency |

Why Multiple Epochs are Necessary

Training a machine learning model effectively almost always requires using more than one epoch. Here’s why:

- Parameter Optimization: Machine learning models, especially neural networks, learn by adjusting their internal parameters (weights and biases). A single epoch is often insufficient for the model to converge to a good set of parameters. Multiple epochs allow the model to iteratively refine these parameters, gradually improving its performance.

- Learning Complex Patterns: Real-world datasets are often complex and contain intricate patterns. Exposing the model to the dataset multiple times through epochs enables it to capture these complex relationships more effectively. The model needs repeated exposure to the data to generalize well.

- Convergence Monitoring: Training over multiple epochs allows us to monitor the model’s learning curve. By observing metrics like training and validation loss across epochs, we can assess whether the model is learning, converging, or overfitting. This monitoring is crucial for making informed decisions about the training process.

- Early Stopping Application: As mentioned earlier, early stopping is a vital technique to prevent overfitting. It relies on monitoring validation performance over multiple epochs. Without multiple epochs, there wouldn’t be a progression to monitor and no opportunity to implement early stopping.

Advantages of Using Multiple Epochs

Utilizing multiple epochs in machine learning training provides several key advantages:

- Improved Model Performance: The primary benefit is the potential for significantly improved model accuracy and generalization. By iteratively processing the training data, the model refines its understanding and ability to make accurate predictions.

- Progress Tracking and Insights: Multiple epochs enable detailed progress monitoring. Tracking metrics like loss and accuracy across epochs provides insights into the learning process, allowing data scientists to diagnose issues and make adjustments.

- Memory Efficiency via Mini-Batches: Epoch-based training is usually carried out with mini-batches, which is what makes it possible to train on datasets that exceed memory capacity. Mini-batches break the data into manageable chunks, so the model never has to hold the full dataset in memory at once, as a single full-dataset batch would require.

- Overfitting Control with Early Stopping: Epochs facilitate the use of early stopping, a powerful regularization technique. By stopping training when validation performance plateaus or degrades, early stopping limits overfitting and helps the model generalize to unseen data.

- Optimized Training Process: The iterative nature of epoch-based training allows for a more controlled and optimized learning process. We can fine-tune hyperparameters, adjust learning rates, and implement various strategies based on the observed performance trends over multiple epochs.

Disadvantages of Epochs and Overcoming Them

While epochs are essential, training for too many epochs can lead to disadvantages, primarily overfitting.

- Overfitting Risk: The most significant drawback is the risk of overfitting. If a model is trained for an excessive number of epochs, it might start to memorize the training data, including noise and irrelevant details. This results in excellent performance on the training set but poor generalization to new, unseen data.

- Computational Cost: Training for more epochs directly translates to increased computational time and resources. For very large datasets or complex models, excessive epochs can become computationally expensive and time-consuming.

- Finding the Optimal Number of Epochs: Determining the ideal number of epochs is not always straightforward. It depends on the dataset, model complexity, and other hyperparameters. Training for too few epochs might lead to underfitting (the model doesn’t learn enough), while too many leads to overfitting.

Mitigation Strategies:

- Early Stopping: As discussed, early stopping is the primary method to combat overfitting. By monitoring validation performance and stopping training at the optimal point, we can find a balance between learning and generalization.

- Regularization Techniques: Techniques like dropout, weight decay, and L1/L2 regularization can help prevent overfitting and allow for training over more epochs without memorizing the training data.

- Cross-validation: Using cross-validation techniques helps in more robustly evaluating model performance and selecting the appropriate number of epochs or early stopping criteria.

- Experimentation and Monitoring: Careful experimentation and continuous monitoring of training and validation metrics are essential to identify the signs of overfitting and determine the appropriate number of epochs for a given task.
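As an illustration of the weight decay mentioned among the regularization techniques, here is a minimal sketch of a single SGD update with an L2 penalty of (weight_decay / 2) · Σw² added to the loss; the function name and values are hypothetical. The penalty adds `weight_decay * w` to each gradient, pulling the weights toward zero on every iteration. For plain SGD, an L2 penalty and weight decay produce the same update; with adaptive optimizers such as Adam they differ.

```python
def sgd_step_with_weight_decay(weights, grads, lr=0.1, weight_decay=0.01):
    """One SGD update where the L2 penalty contributes weight_decay * w to each gradient."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

weights = [1.0, -2.0]
# With a zero data gradient, only the decay term acts and both weights shrink toward zero
print(sgd_step_with_weight_decay(weights, [0.0, 0.0], lr=0.1, weight_decay=0.5))
```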

Conclusion

In summary, epochs are a cornerstone concept in machine learning, representing complete passes through the training dataset. They are indispensable for enabling models to learn complex patterns, optimize their parameters, and achieve high performance. Understanding the relationship between epochs, iterations, and batches, along with the advantages and potential disadvantages of using multiple epochs, is crucial for anyone working with machine learning models. By carefully controlling the number of epochs and employing techniques like early stopping, we can harness the power of epochs to train effective and generalizable machine learning models.

Frequently Asked Questions: Epoch in Machine Learning

What is Epoch?

In machine learning, an epoch is defined as one complete forward and backward pass of all training examples. It represents a full cycle of training where the model sees the entire dataset once and updates its internal parameters based on the learned patterns. Using multiple epochs is standard practice to achieve optimal model performance by allowing for iterative learning and refinement.

What is the difference between epoch and iteration?

An epoch is the overarching cycle of training encompassing the entire dataset. An iteration is a single parameter update step within an epoch, typically performed on a batch of data. The number of iterations in an epoch depends on the batch size. In essence, an epoch is made up of multiple iterations.

Why are epochs used in machine learning?

Epochs are used in machine learning because they provide a structured way for models to learn from data iteratively. Each epoch allows the model to refine its understanding of the data, adjust its parameters, and improve its predictive capabilities. Multiple epochs are generally needed for models to converge to a satisfactory level of performance, especially with complex datasets.

What is an Epoch in a Neural Network?

In the context of neural networks, an epoch involves feeding the entire training dataset through the network once in the forward pass and then propagating the error back through the network in the backward pass to update the weights. This process is repeated for a specified number of epochs to train the neural network to learn the underlying patterns in the data.

What is an Epoch in TensorFlow?

In TensorFlow, a popular machine learning framework, epochs are a fundamental part of the training process. When using the `model.fit()` method in TensorFlow/Keras, the `epochs` argument specifies the number of epochs the model should be trained for, and the `batch_size` argument sets the number of samples per batch. TensorFlow handles the iterations and batching within each epoch automatically, making it easy to control the training duration in terms of epochs.
