At learns.edu.vn, we understand the importance of evaluating machine-learning models effectively. Precision in machine learning measures the accuracy of positive predictions: of all the instances a model predicts as positive, it is the fraction that are actually positive. This article explores precision, its calculation, its importance, and how it compares to other key metrics such as accuracy and recall, so that you can assess and improve your models.

1. Understanding Precision in Machine Learning

Precision is a metric that assesses the accuracy of a model’s positive predictions. It answers a fundamental question: out of all the instances the model predicted as positive, how many were actually positive? Precision matters most in scenarios where false positives (incorrectly identifying something as positive) have significant consequences, because it tells data scientists and machine learning engineers how far a positive prediction can be trusted.

1.1. Defining Precision

Precision is calculated as the ratio of true positives (TP) to the sum of true positives and false positives (FP). Mathematically, it’s expressed as:

Precision = TP / (TP + FP)

- True Positives (TP): The number of instances correctly identified as positive by the model.

- False Positives (FP): The number of instances incorrectly identified as positive by the model.

This calculation gives a clear indication of how well the model avoids false alarms.

1.2. Importance of Precision

Precision is paramount in scenarios where the cost of a false positive is high. Consider these real-world examples:

- Medical Diagnosis: In identifying a disease, a false positive could lead to unnecessary treatment, causing stress and potential harm to the patient.

- Spam Detection: A false positive means a legitimate email is marked as spam, potentially causing the user to miss important information.

- Fraud Detection: A false positive could lead to blocking a legitimate transaction, causing inconvenience to the customer and potential loss of business.

- Quality Control: In manufacturing, incorrectly identifying a good product as defective (false positive) leads to unnecessary rejection and waste.

- Network Intrusion Detection: False positives can trigger unnecessary alerts, overwhelming security teams and potentially masking real threats.

- Predictive Maintenance: A false positive can result in unnecessary maintenance, leading to wasted resources and downtime.

In each of these cases, minimizing false positives is crucial, making precision a key metric to optimize.

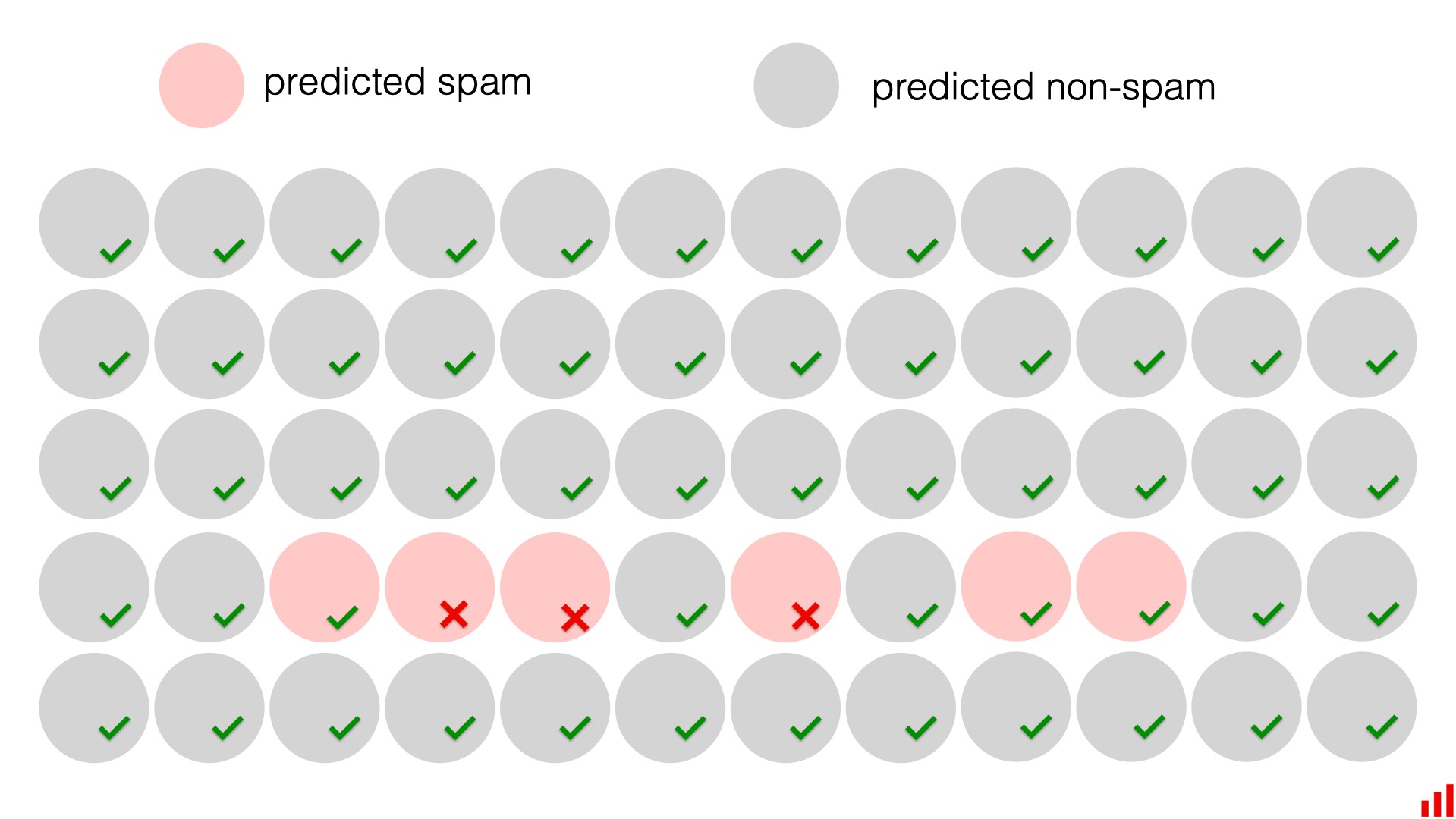

1.3. Visualizing Precision

Consider a scenario where a machine learning model is used to detect defective products on a manufacturing line.

Defective product detection scenario

In this example:

- True Positives (TP): 80 products were correctly identified as defective.

- False Positives (FP): 20 good products were incorrectly identified as defective.

Precision = TP / (TP + FP) = 80 / (80 + 20) = 80 / 100 = 0.8

This means the model has a precision of 0.8 or 80%, indicating that when the model identifies a product as defective, it is correct 80% of the time.
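The arithmetic above can be checked with a few lines of Python. This is a minimal sketch: the function name `precision` and the choice to raise an error when no positive predictions exist are assumptions made for illustration, not part of any particular library.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of positive predictions that are correct: TP / (TP + FP)."""
    predicted_positive = tp + fp
    if predicted_positive == 0:
        # No positive predictions were made, so the ratio is undefined.
        raise ValueError("precision is undefined when TP + FP == 0")
    return tp / predicted_positive


# Counts taken from the defective-product example above.
defect_precision = precision(tp=80, fp=20)
print(f"Precision: {defect_precision:.2f}")  # Precision: 0.80
```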

2. Calculating Precision: A Step-by-Step Guide

Calculating precision is a straightforward process that provides valuable insights into the performance of a classification model. By following these steps, you can accurately determine the precision of your model and understand how well it avoids false positives. This calculation is essential for evaluating the reliability of your model’s positive predictions and making informed decisions about its deployment and optimization.

2.1. Understanding the Data

Before calculating precision, it is essential to understand the data and the specific problem you are trying to solve. Key aspects to consider include:

- Data Balance: Is the dataset balanced, with an equal number of positive and negative instances, or is it imbalanced? Precision is particularly useful in imbalanced datasets where the focus is on accurately identifying the minority class.

- Business Context: What are the implications of false positives and false negatives in the specific application? Understanding the business context helps in determining whether precision or recall is more important.

- Model Objective: What is the primary goal of the model? Is it to minimize false positives, minimize false negatives, or achieve a balance between both?

2.2. Creating a Confusion Matrix

A confusion matrix is a table that summarizes the performance of a classification model by showing the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). Creating a confusion matrix is the first step in calculating precision.

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | True Positive (TP) | False Negative (FN) |
| Actual Negative | False Positive (FP) | True Negative (TN) |

2.3. Identifying True Positives (TP) and False Positives (FP)

To calculate precision, you need to identify the true positives (TP) and false positives (FP) from the confusion matrix.

- True Positives (TP): These are the instances that were correctly predicted as positive. In the confusion matrix, they are located in the top-left cell.

- False Positives (FP): These are the instances that were incorrectly predicted as positive. In the confusion matrix, they are located in the bottom-left cell.
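As a rough sketch of how these counts might be tallied in practice, the snippet below walks through paired actual and predicted labels and fills the four confusion-matrix cells. The helper name `confusion_counts` and the sample labels are hypothetical, chosen only to illustrate the idea.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive=1):
    """Return a Counter with TP, FP, FN and TN for a binary problem."""
    counts = Counter()
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            # Predicted positive: correct if the actual label is positive too.
            counts["TP" if actual == positive else "FP"] += 1
        else:
            # Predicted negative: a miss if the actual label is positive.
            counts["FN" if actual == positive else "TN"] += 1
    return counts


# Hypothetical labels: 1 = positive, 0 = negative.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 1, 0, 0, 0, 1]

counts = confusion_counts(y_true, y_pred)
precision_value = counts["TP"] / (counts["TP"] + counts["FP"])
print(dict(counts), precision_value)
```

Only the TP and FP cells feed into precision; the FN and TN cells are needed for recall and accuracy.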

2.4. Applying the Precision Formula

Once you have identified the true positives (TP) and false positives (FP), you can calculate precision using the formula:

Precision = TP / (TP + FP)

The result is a value between 0 and 1, where 1 indicates perfect precision (no false positives) and 0 indicates that none of the model’s positive predictions are correct. If the model makes no positive predictions at all (TP + FP = 0), precision is undefined.

2.5. Interpreting the Result

The precision value provides insights into the model’s ability to avoid false positives. A high precision value indicates that when the model predicts an instance as positive, it is likely to be correct. However, it is important to consider precision in conjunction with other metrics like recall and accuracy to get a complete picture of the model’s performance.

3. Accuracy vs. Precision: Key Differences

While both accuracy and precision are important metrics for evaluating machine learning models, they measure different aspects of model performance. Understanding the key differences between these metrics is crucial for selecting the right metric to optimize and interpret model results effectively. Accuracy provides an overall measure of correctness, while precision focuses specifically on the accuracy of positive predictions. By considering both metrics, you can gain a comprehensive understanding of your model’s strengths and weaknesses.

3.1. Definition and Calculation

- Accuracy:
  - Definition: Accuracy measures the overall correctness of the model by calculating the ratio of correctly predicted instances (both positive and negative) to the total number of instances.
  - Formula: Accuracy = (TP + TN) / (TP + TN + FP + FN)

- Precision:
  - Definition: Precision measures the accuracy of positive predictions by calculating the ratio of true positives to the total number of instances predicted as positive.
  - Formula: Precision = TP / (TP + FP)

3.2. Focus and Interpretation

- Accuracy:
  - Focus: Accuracy focuses on the overall performance of the model, considering both positive and negative instances.
  - Interpretation: A high accuracy score indicates that the model is generally correct in its predictions, but it does not provide specific insights into the model’s performance on positive or negative instances.

- Precision:
  - Focus: Precision focuses specifically on the accuracy of positive predictions, indicating how well the model avoids false positives.
  - Interpretation: A high precision score indicates that when the model predicts an instance as positive, it is likely to be correct. This is particularly important in scenarios where false positives are costly.

3.3. Use Cases

- Accuracy:
  - Suitable When:
    - The dataset is balanced, with an equal number of positive and negative instances.
    - The cost of false positives and false negatives is similar.
    - The overall correctness of the model is the primary concern.

- Precision:
  - Suitable When:
    - The dataset is imbalanced, with a disproportionate number of positive and negative instances.
    - The cost of false positives is high.
    - The accuracy of positive predictions is the primary concern.

3.4. Example Scenario

Consider a machine learning model used to detect fraudulent transactions.

- Accuracy: If the model has an accuracy of 95%, it means that it correctly classifies 95% of all transactions as either fraudulent or legitimate.

- Precision: If the model has a precision of 90%, it means that when it identifies a transaction as fraudulent, it is correct 90% of the time.

In this scenario, precision is particularly important because a false positive (incorrectly identifying a legitimate transaction as fraudulent) can lead to customer dissatisfaction and loss of business.

3.5. Benefits of Using Accuracy

- Simplicity: Accuracy is simple to calculate and easy to understand.

- Overall Performance: It provides a general measure of how well the model is performing across all classes.

- Balanced Datasets: Accuracy is most reliable when the dataset has a relatively equal distribution of positive and negative instances.

- Equal Error Costs: When the cost of making a false positive and a false negative error is similar, accuracy is a useful metric.

3.6. Limitations of Using Accuracy

- Misleading with Imbalanced Data: Accuracy can be misleading when dealing with imbalanced datasets. A model can achieve high accuracy by simply predicting the majority class, without effectively identifying the minority class.

- Ignores Error Types: Accuracy does not differentiate between different types of errors (false positives and false negatives), which can be critical in certain applications.

- Overly Optimistic: In imbalanced datasets, a high accuracy can give a false sense of security, masking poor performance on the minority class.
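The first limitation can be made concrete with a small sketch. The dataset below is hypothetical (990 legitimate and 10 fraudulent transactions), and the "model" simply predicts the majority class every time.

```python
# Hypothetical imbalanced dataset: 0 = legitimate, 1 = fraudulent.
y_true = [0] * 990 + [1] * 10
# A trivial "model" that always predicts the majority class.
y_pred = [0] * len(y_true)

tp = sum(1 for a, p in zip(y_true, y_pred) if a == 1 and p == 1)
tn = sum(1 for a, p in zip(y_true, y_pred) if a == 0 and p == 0)
fp = sum(1 for a, p in zip(y_true, y_pred) if a == 0 and p == 1)
fn = sum(1 for a, p in zip(y_true, y_pred) if a == 1 and p == 0)

accuracy = (tp + tn) / len(y_true)
recall = tp / (tp + fn)
# With no positive predictions, TP + FP is zero and precision is undefined.
precision = tp / (tp + fp) if (tp + fp) > 0 else None

print(f"accuracy={accuracy:.2f}, recall={recall:.2f}, precision={precision}")
```

The accuracy looks excellent even though not a single fraudulent transaction is caught, which is why accuracy alone is a poor guide on skewed data.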

3.7. Benefits of Using Precision

- Focus on Positive Predictions: Precision specifically evaluates the accuracy of positive predictions, making it useful in scenarios where the reliability of positive predictions is crucial.

- Imbalanced Data: It is particularly effective in imbalanced datasets, where the goal is to accurately identify the minority class.

- Cost-Sensitive Applications: When the cost of false positives is high, precision helps in minimizing these costly errors.

- Trust in Positive Outcomes: High precision ensures that when the model predicts a positive outcome, users can have greater confidence in its correctness.

3.8. Limitations of Using Precision

- Ignores False Negatives: Precision does not take into account false negatives, which can be a significant concern in some applications.

- Overemphasis on Positive Class: It focuses solely on the positive class, potentially overlooking the importance of correctly identifying negative instances.

- Not a Complete Picture: Precision alone does not provide a complete picture of the model’s performance and should be used in conjunction with other metrics like recall and F1-score.

3.9. Accuracy & Precision: Table Comparison

| Feature | Accuracy | Precision |
|---|---|---|
| Definition | Overall correctness of the model | Accuracy of positive predictions |
| Formula | (TP + TN) / (TP + TN + FP + FN) | TP / (TP + FP) |
| Focus | Overall performance | Positive predictions |
| Interpretation | Percentage of correct predictions | Percentage of positive predictions that are correct |
| Suitable When | Balanced dataset, equal cost of errors | Imbalanced dataset, high cost of false positives |
| Limitations | Misleading with imbalanced data, ignores error types | Ignores false negatives, overemphasis on positive class |

3.10. Use Cases and Examples

- Accuracy: In a balanced dataset where the goal is to classify images as either “cat” or “dog,” accuracy can be used to measure the overall correctness of the model.

- Precision: In spam email detection, precision is crucial because a false positive (marking a legitimate email as spam) can lead to important information being missed.

- Scenario 1: Medical Diagnosis:
  - High Accuracy: The model correctly diagnoses 98% of patients.
  - High Precision: When the model diagnoses a patient with a disease, it is correct 95% of the time.

- Scenario 2: Fraud Detection:
  - High Accuracy: The model correctly classifies 99% of transactions.
  - High Precision: When the model flags a transaction as fraudulent, it is correct 92% of the time.

4. Precision vs. Recall: Finding the Right Balance

Precision and recall are two critical metrics in machine learning that provide different perspectives on the performance of a classification model. While precision focuses on the accuracy of positive predictions, recall emphasizes the ability of the model to identify all positive instances. Understanding the trade-off between precision and recall is essential for optimizing the model to meet specific business needs.

4.1. Understanding Recall

Recall, also known as sensitivity or true positive rate, measures the ability of the model to identify all relevant instances. It is calculated as the ratio of true positives (TP) to the sum of true positives and false negatives (FN).

Recall = TP / (TP + FN)

- True Positives (TP): The number of instances correctly identified as positive by the model.

- False Negatives (FN): The number of instances incorrectly identified as negative by the model.
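A minimal sketch of the recall calculation follows. The TP count reuses the defective-product example from earlier in the article; the FN count of 40 is an assumption added purely for illustration, since the article does not state how many defective products were missed.

```python
def recall(tp: int, fn: int) -> float:
    """Fraction of actual positives that the model found: TP / (TP + FN)."""
    actual_positive = tp + fn
    if actual_positive == 0:
        # There were no positive instances to find, so the ratio is undefined.
        raise ValueError("recall is undefined when TP + FN == 0")
    return tp / actual_positive


# TP = 80 is from the defective-product example; FN = 40 is an assumed
# number of defective products the model failed to flag.
defect_recall = recall(tp=80, fn=40)
print(f"Recall: {defect_recall:.3f}")  # Recall: 0.667
```

Under that assumption the model would be right 80% of the time when it flags a product, yet would find only about two thirds of the defective products.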

4.2. The Precision-Recall Trade-Off

There is often an inverse relationship between precision and recall. Improving precision can reduce recall, and vice versa. This trade-off occurs because increasing the threshold for making positive predictions can reduce false positives (increasing precision) but may also increase false negatives (decreasing recall).

- High Precision, Low Recall: The model is highly accurate when it predicts a positive instance, but it may miss many positive instances.

- Low Precision, High Recall: The model identifies most of the positive instances, but it may also include many false positives.

4.3. When to Prioritize Precision

Prioritize precision when the cost of false positives is high. In these scenarios, it is more important to avoid incorrectly identifying negative instances as positive, even if it means missing some positive instances.

- Medical Diagnosis: A false positive can lead to unnecessary treatment and stress for the patient.

- Spam Detection: A false positive can cause important emails to be marked as spam, leading to missed opportunities.

4.4. When to Prioritize Recall

Prioritize recall when the cost of false negatives is high. In these scenarios, it is more important to identify all positive instances, even if it means including some false positives.

- Fraud Detection: A false negative can result in a fraudulent transaction going undetected, leading to financial losses.

- Disease Detection: A false negative can result in a disease going undetected, delaying treatment and potentially leading to severe health consequences.

- Predictive Maintenance: Failing to predict equipment failure (false negative) can lead to costly downtime and repairs.

4.5. Balancing Precision and Recall

In many applications, it is important to strike a balance between precision and recall. This can be achieved by using metrics like the F1-score, which combines precision and recall into a single metric.

4.6. F1-Score

The F1-score is the harmonic mean of precision and recall, providing a balanced measure of the model’s performance. It is calculated as:

F1-Score = 2 × (Precision × Recall) / (Precision + Recall)

The F1-score ranges from 0 to 1, with 1 indicating perfect precision and recall.
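The sketch below computes the F1-score for a hypothetical model with precision 0.8 and recall 0.5. Returning 0.0 when both inputs are zero is a common convention adopted here as an assumption.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        # Convention assumed here: F1 is 0 when both inputs are 0.
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical values chosen for illustration.
p, r = 0.8, 0.5
f1 = f1_score(p, r)
arithmetic_mean = (p + r) / 2
print(f"F1: {f1:.3f}, arithmetic mean: {arithmetic_mean:.3f}")
```

Because the harmonic mean is pulled toward the smaller value, the F1-score here (about 0.615) is lower than the arithmetic mean (0.65), so a model cannot score well by excelling at only one of the two metrics.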

4.7. Adjusting the Decision Threshold

Another way to balance precision and recall is by adjusting the decision threshold of the model. By increasing the threshold, you can increase precision at the expense of recall. Conversely, by decreasing the threshold, you can increase recall at the expense of precision.
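To illustrate the effect, the following sketch applies three thresholds to a small set of hypothetical model scores. The data, the helper name `precision_recall_at`, and the threshold values are all assumptions for demonstration.

```python
def precision_recall_at(threshold, y_true, scores):
    """Classify as positive when score >= threshold, then score the result."""
    tp = fp = fn = 0
    for actual, score in zip(y_true, scores):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and actual == 1:
            tp += 1
        elif predicted == 1 and actual == 0:
            fp += 1
        elif predicted == 0 and actual == 1:
            fn += 1
    precision = tp / (tp + fp) if (tp + fp) else None
    recall = tp / (tp + fn) if (tp + fn) else None
    return precision, recall


# Hypothetical labels and predicted probabilities.
y_true = [0, 0, 1, 0, 1, 1, 0, 1, 1, 1]
scores = [0.05, 0.20, 0.30, 0.40, 0.45, 0.60, 0.65, 0.80, 0.90, 0.95]

results = {t: precision_recall_at(t, y_true, scores) for t in (0.25, 0.5, 0.75)}
for threshold, (precision, recall) in results.items():
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

On this toy data, precision rises from 0.75 to 1.00 as the threshold increases while recall falls from 1.00 to 0.50. On real data the curve is usually less smooth, and precision is not guaranteed to rise at every step.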

4.8. Example Scenario: Precision vs. Recall

Consider a machine learning model used to detect potential terrorists at an airport.

- High Precision: The model is highly accurate when it identifies someone as a potential terrorist, but it may miss many potential terrorists.

- High Recall: The model identifies most of the potential terrorists, but it may also include many false positives (incorrectly identifying innocent travelers as potential terrorists).

In this scenario, the decision of whether to prioritize precision or recall depends on the specific goals and constraints.

- Prioritize Precision: If the goal is to minimize the number of innocent travelers who are wrongly detained, prioritize precision.

- Prioritize Recall: If the goal is to ensure that no potential terrorists are missed, prioritize recall.

4.9. Additional Metrics

- Area Under the Precision-Recall Curve (AUC-PR): AUC-PR summarizes the trade-off between precision and recall across different probability thresholds. It is particularly useful for imbalanced datasets where the class distribution is skewed. A higher AUC-PR indicates better performance.

- Average Precision (AP): AP is a single-value metric that represents the average of precision scores at each threshold, weighted by the improvement in recall from the previous threshold. It is commonly used in information retrieval and object detection tasks.

- Matthews Correlation Coefficient (MCC): MCC measures the correlation between the predicted and actual classifications, taking into account true and false positives and negatives. It provides a balanced measure of performance, especially for imbalanced datasets.

- Cost-Benefit Analysis: In some cases, it’s helpful to perform a cost-benefit analysis to determine the optimal balance between precision and recall based on the specific costs associated with false positives and false negatives.
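Two of these metrics are simple enough to sketch directly. The functions below are illustrative implementations under stated assumptions: `mcc` returns 0.0 when its denominator is zero (a common convention), and `average_precision` assumes all scores are distinct, so ties are not handled.

```python
import math


def mcc(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews correlation coefficient from confusion-matrix counts."""
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denominator == 0:
        return 0.0  # assumed convention when any row or column is empty
    return (tp * tn - fp * fn) / denominator


def average_precision(y_true, scores):
    """Sum of precision at each positive hit, weighted by the recall gain."""
    total_positives = sum(y_true)
    if total_positives == 0:
        raise ValueError("average precision needs at least one positive")
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    tp = 0
    ap = 0.0
    for rank, (_, actual) in enumerate(ranked, start=1):
        if actual == 1:
            tp += 1
            # Recall increases by 1 / total_positives at this rank;
            # precision at this rank is tp / rank.
            ap += (tp / rank) / total_positives
    return ap


# Hypothetical counts and scores for illustration.
mcc_value = mcc(tp=3, tn=3, fp=1, fn=1)
ap_value = average_precision([1, 0, 1, 1, 0], [0.9, 0.8, 0.7, 0.6, 0.2])
print(f"MCC: {mcc_value:.2f}, AP: {ap_value:.3f}")
```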

4.10. Precision & Recall: Table Comparison

| Feature | Precision | Recall |
|---|---|---|
| Definition | Accuracy of positive predictions | Ability to identify all positive instances |
| Formula | TP / (TP + FP) | TP / (TP + FN) |
| Focus | Minimizing false positives | Minimizing false negatives |
| Suitable When | High cost of false positives | High cost of false negatives |
| Example Use Cases | Medical diagnosis, spam detection | Fraud detection, disease detection |
| Trade-Off | Increasing precision can decrease recall, and vice versa | Increasing recall can decrease precision, and vice versa |
| Balancing Metric | F1-score | F1-score |

5. Factors Affecting Precision in Machine Learning

Several factors can impact the precision of a machine learning model. Understanding these factors is essential for optimizing the model and improving its performance. These factors range from the quality and balance of the data to the specific algorithms used and the parameters configured. By addressing these aspects, data scientists and machine learning engineers can build more reliable and accurate models.

5.1. Data Quality

The quality of the data used to train the model is a critical factor affecting precision. High-quality data is accurate, complete, and relevant to the problem being solved.

- Accurate Data: Inaccurate data can lead to the model learning incorrect patterns, resulting in false positives and reduced precision.

- Complete Data: Missing data can also lead to biased results and reduced precision.

- Relevant Data: Irrelevant data can introduce noise and reduce the model’s ability to accurately identify positive instances.

5.2. Data Imbalance

Data imbalance occurs when the number of instances in one class is significantly different from the number of instances in other classes. This can be a common problem in many real-world applications, such as fraud detection and disease detection.

- Impact on Precision: In imbalanced datasets, the model may be biased towards the majority class, resulting in low precision for the minority class.

- Mitigation Techniques: Techniques such as oversampling the minority class, undersampling the majority class, and using cost-sensitive learning algorithms can help mitigate the impact of data imbalance on precision.

5.3. Feature Selection

Feature selection involves selecting the most relevant features from the dataset to train the model. Irrelevant or redundant features can introduce noise and reduce the model’s ability to accurately identify positive instances.

- Impact on Precision: Poor feature selection can lead to reduced precision.

- Techniques: Techniques such as univariate feature selection, recursive feature elimination, and feature importance ranking can help identify the most relevant features.

5.4. Algorithm Selection

The choice of algorithm can also affect precision. Different algorithms have different strengths and weaknesses, and some algorithms may be better suited for certain types of problems than others.

- Impact on Precision: Using an inappropriate algorithm can lead to reduced precision.

- Considerations: Factors to consider when selecting an algorithm include the type of data, the complexity of the problem, and the desired level of interpretability.

5.5. Hyperparameter Tuning

Hyperparameters are parameters that are set before training the model and can significantly impact its performance. Tuning hyperparameters involves finding the optimal values for these parameters to achieve the best possible performance.

- Impact on Precision: Suboptimal hyperparameter values can lead to reduced precision.

- Techniques: Techniques such as grid search, random search, and Bayesian optimization can help find the optimal hyperparameter values.

5.6. Model Complexity

The complexity of the model can also affect precision. A model that is too simple may not be able to capture the underlying patterns in the data, resulting in low precision. On the other hand, a model that is too complex may overfit the training data, resulting in poor generalization and reduced precision on new data.

- Impact on Precision: Both underfitting and overfitting can lead to reduced precision.

- Regularization Techniques: Regularization techniques such as L1 regularization, L2 regularization, and dropout can help prevent overfitting.

5.7. Class Overlap

Class overlap occurs when the instances of different classes are not well-separated in the feature space. This can make it difficult for the model to accurately distinguish between the classes, resulting in reduced precision.

- Impact on Precision: Class overlap can lead to reduced precision, especially when the decision boundary between the classes is not clear.

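- Non-Linear Models: Non-linear models such as decision trees, random forests, or neural networks can improve precision when the classes are separated by a non-linear boundary. Where the classes genuinely overlap in the feature space, no model can separate them perfectly, and more informative features may be needed.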

5.8. Data Preprocessing

The way data is preprocessed can significantly impact the performance of a machine learning model. Data preprocessing involves cleaning, transforming, and scaling the data to make it more suitable for training the model.

- Impact on Precision: Inadequate data preprocessing can lead to reduced precision.

- Normalization and Scaling: Normalizing or scaling the data can help prevent features with larger values from dominating the model.

- Encoding Categorical Variables: Encoding categorical variables properly can also improve the model’s performance.

5.9. Noise in Data

Noise in the data refers to irrelevant or meaningless information that can interfere with the learning process. Noise can come from various sources, such as measurement errors, data entry mistakes, or random variations.

- Impact on Precision: Noise can obscure the true patterns in the data, leading to reduced precision.

- Techniques for Reducing Noise: Techniques like outlier detection, data smoothing, and filtering can help reduce the impact of noise on the model’s performance.

5.10. Evaluation Metrics

The choice of evaluation metrics can also impact the way precision is interpreted and optimized.

- Impact on Precision: Using inappropriate evaluation metrics can lead to a focus on optimizing the wrong aspects of the model.

- Balanced Evaluation: It’s important to consider multiple metrics such as precision, recall, F1-score, and AUC-PR to get a comprehensive understanding of the model’s performance.

6. Improving Precision: Practical Strategies

Improving precision is a key objective in many machine learning applications, particularly when the cost of false positives is high. By implementing the following strategies, you can enhance the precision of your models and ensure more reliable positive predictions. These strategies cover a range of techniques, from data preprocessing and feature engineering to algorithm selection and hyperparameter tuning.

6.1. Data Preprocessing Techniques

Effective data preprocessing is essential for improving the quality of the data used to train the model.

- Handling Missing Values: Impute missing values using appropriate techniques such as mean imputation, median imputation, or model-based imputation.

- Removing Outliers: Identify and remove outliers that can skew the model’s learning process.

- Correcting Inconsistencies: Correct any inconsistencies or errors in the data to ensure accuracy.

6.2. Feature Engineering

Feature engineering involves creating new features from existing ones to improve the model’s ability to identify positive instances.

- Creating Interaction Terms: Combine multiple features to create interaction terms that capture non-linear relationships.

- Transforming Features: Transform features using techniques such as polynomial transformation or logarithmic transformation to improve their distribution.

- Creating Domain-Specific Features: Create features that are specific to the problem domain and capture relevant information.

6.3. Feature Selection

Selecting the most relevant features can improve precision by reducing noise and focusing on the most informative variables.

- Univariate Feature Selection: Select features based on statistical tests such as chi-squared test or ANOVA.

- Recursive Feature Elimination: Recursively remove features and evaluate the model’s performance to identify the most important features.

- Feature Importance Ranking: Use tree-based models or other techniques to rank features based on their importance.

6.4. Algorithm Selection

The choice of algorithm can significantly impact precision. Consider algorithms that are well-suited for the specific problem and data.

- Logistic Regression: Logistic regression is a simple and interpretable algorithm that can provide good precision.

- Support Vector Machines (SVM): SVMs find the maximum-margin hyperplane that separates the classes, which can yield high precision when the classes are well separated.

- Random Forests: Random forests can handle non-linear relationships and provide good precision.

6.5. Hyperparameter Tuning

Tuning hyperparameters can optimize the model for precision.

- Grid Search: Systematically search through a predefined grid of hyperparameter values.

- Random Search: Randomly sample hyperparameter values from a predefined distribution.

- Bayesian Optimization: Use Bayesian optimization to efficiently explore the hyperparameter space.
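Grid search, the simplest of the three, can be sketched in plain Python. Everything model-specific here is a hypothetical stand-in: the rule-based "classifier", its parameters `min_x` and `min_y`, and the validation rows exist only to give the search something to score. With a real model, `evaluate` would train and validate the model for each parameter combination.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Try every combination in param_grid; return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:  # ties keep the first combination found
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical validation rows: (feature x, feature y, actual label).
VALIDATION = [
    (0.90, 3, 1), (0.80, 1, 0), (0.70, 4, 1), (0.60, 2, 0),
    (0.40, 5, 1), (0.30, 1, 0), (0.20, 4, 0), (0.85, 5, 1),
]


def validation_precision(params):
    """Precision of a toy rule: positive when x >= min_x and y >= min_y."""
    tp = fp = 0
    for x, y, label in VALIDATION:
        if x >= params["min_x"] and y >= params["min_y"]:
            if label == 1:
                tp += 1
            else:
                fp += 1
    return tp / (tp + fp) if (tp + fp) else 0.0


param_grid = {"min_x": [0.25, 0.5, 0.75], "min_y": [1, 3]}
best_params, best_score = grid_search(param_grid, validation_precision)
print(best_params, best_score)
```

Several combinations reach a precision of 1.0 on this toy data, which is a reminder that optimizing precision alone leaves the choice underdetermined; in practice recall or F1 is usually tracked alongside it.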

6.6. Adjusting Decision Threshold

Adjusting the decision threshold can directly impact precision. Increasing the threshold can reduce false positives and improve precision, but it may also decrease recall.

- Precision-Recall Curve: Plot the precision-recall curve to visualize the trade-off between precision and recall and select the optimal threshold.

- Cost-Benefit Analysis: Perform a cost-benefit analysis to determine the optimal threshold based on the costs associated with false positives and false negatives.
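A cost-based threshold choice might look like the sketch below. The scores, candidate thresholds, and per-error costs are hypothetical; in a real project the costs would come from the business context.

```python
def total_cost(threshold, y_true, scores, cost_fp, cost_fn):
    """Total cost of the errors made when classifying at this threshold."""
    fp = sum(1 for a, s in zip(y_true, scores) if s >= threshold and a == 0)
    fn = sum(1 for a, s in zip(y_true, scores) if s < threshold and a == 1)
    return cost_fp * fp + cost_fn * fn


def cheapest_threshold(candidates, y_true, scores, cost_fp, cost_fn):
    """Return the candidate threshold with the lowest total error cost."""
    return min(
        candidates,
        key=lambda t: total_cost(t, y_true, scores, cost_fp, cost_fn),
    )


# Hypothetical labels and predicted probabilities.
y_true = [0, 0, 1, 0, 1, 1, 0, 1, 1, 1]
scores = [0.05, 0.20, 0.30, 0.40, 0.45, 0.60, 0.65, 0.80, 0.90, 0.95]
candidates = (0.25, 0.5, 0.75)

# False positives five times as costly as false negatives.
fp_heavy = cheapest_threshold(candidates, y_true, scores, cost_fp=5, cost_fn=1)
# False negatives five times as costly as false positives.
fn_heavy = cheapest_threshold(candidates, y_true, scores, cost_fp=1, cost_fn=5)
print(fp_heavy, fn_heavy)  # 0.75 0.25
```

When false positives dominate the cost, the highest threshold wins (favoring precision); when false negatives dominate, the lowest threshold wins (favoring recall).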

6.7. Ensemble Methods

Ensemble methods combine multiple models to improve overall performance and precision.

- Boosting: Boosting algorithms such as AdaBoost and Gradient Boosting can improve precision by focusing on instances that are difficult to classify.

- Stacking: Stacking involves training multiple models and combining their predictions using a meta-learner.

6.8. Addressing Data Imbalance

Data imbalance can significantly impact precision, especially for the minority class.

- Oversampling: Oversample the minority class by duplicating instances or generating synthetic instances using techniques such as SMOTE.

- Undersampling: Undersample the majority class by randomly removing instances.

- Cost-Sensitive Learning: Use cost-sensitive learning algorithms that assign higher costs to misclassifying instances of the minority class.
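Random oversampling by duplication, the simplest of these techniques, can be sketched as follows. The function name `random_oversample` and the toy data are assumptions; synthetic approaches such as SMOTE are more involved and are not shown here.

```python
import random


def random_oversample(rows, labels, minority_label, seed=0):
    """Duplicate random minority rows until both classes are the same size."""
    rng = random.Random(seed)
    minority = [(r, l) for r, l in zip(rows, labels) if l == minority_label]
    majority = [(r, l) for r, l in zip(rows, labels) if l != minority_label]
    if not minority:
        raise ValueError("no rows with the minority label")
    # Draw duplicates with replacement to close the gap between the classes.
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    combined = majority + minority + extra
    rng.shuffle(combined)
    new_rows = [row for row, _ in combined]
    new_labels = [label for _, label in combined]
    return new_rows, new_labels


# Hypothetical training data: 8 majority rows (0) and 2 minority rows (1).
rows = [[i] for i in range(10)]
labels = [0] * 8 + [1] * 2

balanced_rows, balanced_labels = random_oversample(rows, labels, minority_label=1)
print(balanced_labels.count(0), balanced_labels.count(1))  # 8 8
```

Resampling should be applied only to the training split; evaluating precision on a resampled test set would misrepresent how the model behaves on the real class distribution.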

6.9. Using More Data

Increasing the amount of training data can improve the model’s ability to generalize and accurately identify positive instances.

- Data Augmentation: Generate additional training data by applying transformations to existing data, such as rotation, scaling, or cropping.

- Collecting More Data: Collect more data from real-world sources to increase the diversity and representativeness of the training data.

6.10. Regularization Techniques

Regularization techniques can prevent overfitting and improve the model’s ability to generalize to new data.

- L1 Regularization: L1 regularization adds a penalty term to the loss function that encourages the model to select a subset of the most important features.

- L2 Regularization: L2 regularization adds a penalty term to the loss function that discourages the model from assigning large weights to any one feature.

- Dropout: In neural networks, dropout randomly deactivates neurons during training, which can help prevent overfitting and improve generalization.

7. Real-World Applications of Precision

Precision plays a crucial role in various real-world applications where the accuracy of positive predictions is paramount. Understanding these applications can help you appreciate the importance of precision and how it can be effectively utilized. These examples span diverse industries, illustrating the broad applicability of precision as a key metric.

7.1. Medical Diagnosis

In medical diagnosis, precision is critical to minimize false positives, which can lead to unnecessary treatments and patient anxiety.

- Cancer Detection: Precision ensures that when a test indicates the presence of cancer, it is highly likely to be accurate, reducing the risk of unnecessary biopsies and treatments.

- Disease Screening: Precision in disease screening helps avoid false alarms, ensuring that healthy individuals are not subjected to unnecessary follow-up tests.

- Pathology: High precision in pathology reduces the chances of misdiagnosing a benign condition as malignant, preventing unnecessary surgeries and treatments.

7.2. Fraud Detection

In fraud detection, precision is essential to minimize false positives, which can lead to blocking legitimate transactions and inconveniencing customers.

- Credit Card Fraud: Precision ensures that when a transaction is flagged as fraudulent, it is highly likely to be accurate, reducing the risk of blocking legitimate purchases.

- Insurance Fraud: Precision helps avoid false accusations of insurance fraud, protecting innocent policyholders from unwarranted investigations.

- Online Banking: High precision in online banking fraud detection minimizes disruptions to legitimate users while still effectively identifying fraudulent activities.
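In practice, a fraud model typically outputs a score, and the decision threshold controls the balance between precision and recall. The following sketch uses a small set of made-up scores and labels (1 = fraud) to show how raising the threshold can increase precision at the cost of missing more fraud. The function name and data are illustrative only.

```python
def precision_recall_at(scores, labels, threshold):
    """Flag every transaction whose score is at or above the threshold,
    then compute precision and recall for the flagged set."""
    flagged = [y for s, y in zip(scores, labels) if s >= threshold]
    tp = sum(flagged)             # flagged and truly fraudulent
    fp = len(flagged) - tp        # flagged but legitimate
    total_fraud = sum(labels)
    precision = tp / (tp + fp) if flagged else 0.0
    recall = tp / total_fraud if total_fraud else 0.0
    return precision, recall

# Hypothetical model scores and ground-truth labels.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30]
labels = [1, 1, 0, 1, 0, 0, 1]

loose = precision_recall_at(scores, labels, threshold=0.5)
strict = precision_recall_at(scores, labels, threshold=0.85)

print("threshold 0.50 -> precision, recall:", loose)
print("threshold 0.85 -> precision, recall:", strict)
```

With this data, the looser threshold blocks two legitimate transactions out of five flagged, while the stricter one blocks none but catches only half of the fraud. Which trade-off is acceptable depends on the relative cost of an inconvenienced customer versus an undetected fraud.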

7.3. Spam Detection

In spam detection, precision is crucial to minimize false positives, which can cause important emails to be marked as spam and missed by the user.

- Email Filtering: Precision ensures that legitimate emails are not mistakenly classified as spam, preventing users from missing important communications.

- Social Media Filtering: Precision helps avoid false accusations of spamming, protecting legitimate users from unwarranted account suspensions.

- Content Moderation: High precision in content moderation minimizes the removal of legitimate content, ensuring that users can freely express themselves.

7.4. Quality Control

In quality control, precision is essential to minimize false positives, which can lead to rejecting good products and increasing production costs.

- Manufacturing: Precision ensures that when a product is identified as defective, it is highly likely to be accurate, reducing the risk of rejecting good products.

- Food Processing: High precision reduces false contamination alarms, sparing producers from discarding safe batches and from issuing unnecessary product recalls.

- Pharmaceuticals: High precision in pharmaceutical quality control minimizes the rejection of safe and effective drugs, ensuring that patients have access to the medications they need.

7.5. Search and Information Retrieval

In search and information retrieval, precision is important to ensure that the results returned are relevant to the user’s query.

- Web Search: Precision ensures that the top search results are highly relevant to the user’s query, improving the user experience.

- Document Retrieval: Precision helps avoid returning irrelevant documents, ensuring that users can quickly find the information they need.

- Recommendation Systems: High precision in recommendation systems minimizes the recommendation of irrelevant items, increasing user satisfaction and engagement.
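For ranked results, precision is often reported over only the top k items, a measure commonly called precision at k. The sketch below assumes a hypothetical list of relevance judgments (1 = relevant, 0 = not relevant) ordered by rank, and it divides by k even when fewer than k results are returned, which is one common convention.

```python
def precision_at_k(relevance, k):
    """Fraction of the top-k ranked results that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(relevance[:k]) / k

# Hypothetical relevance judgments for the first five results of a query.
ranked_relevance = [1, 0, 1, 1, 0]

p_at_3 = precision_at_k(ranked_relevance, 3)  # 2 relevant in the top 3
p_at_5 = precision_at_k(ranked_relevance, 5)  # 3 relevant in the top 5

print("P@3:", p_at_3)
print("P@5:", p_at_5)
```

Because users rarely look past the first few results or recommendations, a small k often reflects perceived quality better than precision over the full result list.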

7.6. Ad Targeting

In ad targeting, precision is crucial to ensure that ads are shown to users who are likely to be interested in them.

- Online Advertising: Precision ensures that ads are shown to users who are likely to click on them, increasing the effectiveness of the advertising campaign.

- Personalized Marketing: Precision helps avoid showing irrelevant ads, reducing user annoyance and improving brand perception.

- Email Marketing: High precision in email marketing minimizes the sending of irrelevant emails, increasing open rates and reducing unsubscribe rates.

7.7. Network Intrusion Detection

In network intrusion detection, precision is essential to minimize false positives, which can lead to unnecessary alerts and overwhelm security teams.

- Security Monitoring: Precision ensures that when an intrusion is detected, it is highly likely to be accurate, allowing security teams to focus on real threats.

- Threat Intelligence: High precision means fewer benign hosts and indicators are flagged as threats, so legitimate systems are not investigated or blocked without cause.

- Cybersecurity: High precision in cybersecurity minimizes disruptions to legitimate network traffic while still effectively identifying malicious activities.

7.8. Predictive Maintenance

In predictive maintenance, precision is crucial to minimize false positives, which can lead to unnecessary maintenance and downtime.

- Equipment Monitoring: Precision ensures that when a maintenance need is predicted, it is highly likely to be accurate, reducing the risk of unnecessary maintenance.

- Infrastructure Management: Precision helps avoid false alarms, protecting critical infrastructure from unwarranted interventions.

- Manufacturing: High precision in manufacturing predictive maintenance minimizes disruptions to production while still effectively preventing equipment failures.

7.9. Loan Approval

In loan approval, if the positive prediction is taken to be "approve" (the applicant is expected to repay), a false positive is an approved loan that later defaults. Precision then measures the share of approved loans that are actually repaid.

- Credit Scoring: High precision means that when a loan is approved, the applicant is highly likely to repay it, reducing the risk of financial losses.

- Risk Management: Fewer false positives mean fewer approved loans that default, which directly limits credit losses for the institution.

- Financial Services: High precision in loan approval minimizes the approval of risky loans while still effectively providing credit to deserving individuals.

7.10. Resource Allocation

Precision also matters when a model decides where limited resources go. It measures the share of cases flagged for an intervention that genuinely needed it, so higher precision means less of the budget is spent on false positives.

- Public Health: Precision ensures that resources are directed to the most effective interventions, improving public health outcomes.

- Environmental Conservation: Precision helps avoid misallocation of conservation efforts, protecting ecosystems and biodiversity effectively.

- Disaster Relief: High precision in disaster relief minimizes the misdirection of aid, ensuring that resources reach those who need them most.

8. Tools and Libraries for Calculating Precision

Several tools and libraries are available for calculating precision in machine learning. These tools provide convenient functions and methods for evaluating model performance and calculating precision along with other relevant metrics. Using these tools can streamline the evaluation process and provide valuable insights into the model’s effectiveness.
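As a point of reference for what these libraries compute, precision can be calculated by hand in a few lines. This is a minimal sketch for binary labels, not a replacement for a library implementation. The function name, the example labels, and the choice to return 0.0 when the model predicts no positives are all assumptions made here.

```python
def precision(true_labels, predicted_labels, positive=1):
    """Precision = TP / (TP + FP) for a single positive class."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if p == positive and t == positive)
    fp = sum(1 for t, p in pairs if p == positive and t != positive)
    if tp + fp == 0:
        # Assumption: report 0.0 when nothing was predicted positive.
        return 0.0
    return tp / (tp + fp)

# Hypothetical labels: the model makes two positive predictions, both correct.
true_labels = [0, 1, 1, 0, 1]
predicted_labels = [0, 1, 0, 0, 1]

print("Precision:", precision(true_labels, predicted_labels))
```

Here the model misses one positive case, which lowers recall, but every positive prediction it makes is correct, so precision is 1.0.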

8.1. Scikit-Learn

Scikit-learn is a popular Python library for machine learning that provides a wide range of tools for model evaluation, including functions for calculating precision.

- precision_score: This function calculates the precision score from true labels and predicted labels.

    from sklearn.metrics import precision_score

    true_labels = [0, 1, 1, 0, 1]
    predicted_labels = [0, 1, 0, 0, 1]
    precision = precision_score(true_labels, predicted_labels)
    print("Precision:", precision)

- classification_report: This function generates a comprehensive report that includes precision, recall, F1-score, and support for each class.

    from sklearn.metrics import classification_report

    true_labels = [0, 1, 1, 0, 1]
    predicted_labels = [0, 1, 0, 0, 1]
    report = classification_report(true_labels, predicted_labels)
    print(report)

- Confusion Matrix: Scikit-learn also provides a function to compute the