Recall is a critical concept in machine learning, particularly when evaluating the performance of classification models. But what exactly is recall in machine learning, and why is it so important? This comprehensive guide, brought to you by LEARNS.EDU.VN, explores the definition of recall, how to calculate it, its practical applications, and its relationship to other key metrics such as precision and accuracy. Understanding the nuances of recall helps you build more effective and reliable machine learning models for real-world scenarios. Along the way, we cover false negatives and false positives, as well as the other names recall goes by: sensitivity and true positive rate.

1. Understanding Recall: The Foundation of Model Evaluation

Recall, also known as sensitivity or the true positive rate, measures a machine learning model’s ability to identify all relevant instances within a dataset. It focuses specifically on the positive class and assesses how many of the actual positive cases the model captures. This makes it especially valuable when the cost of missing a positive instance is high.

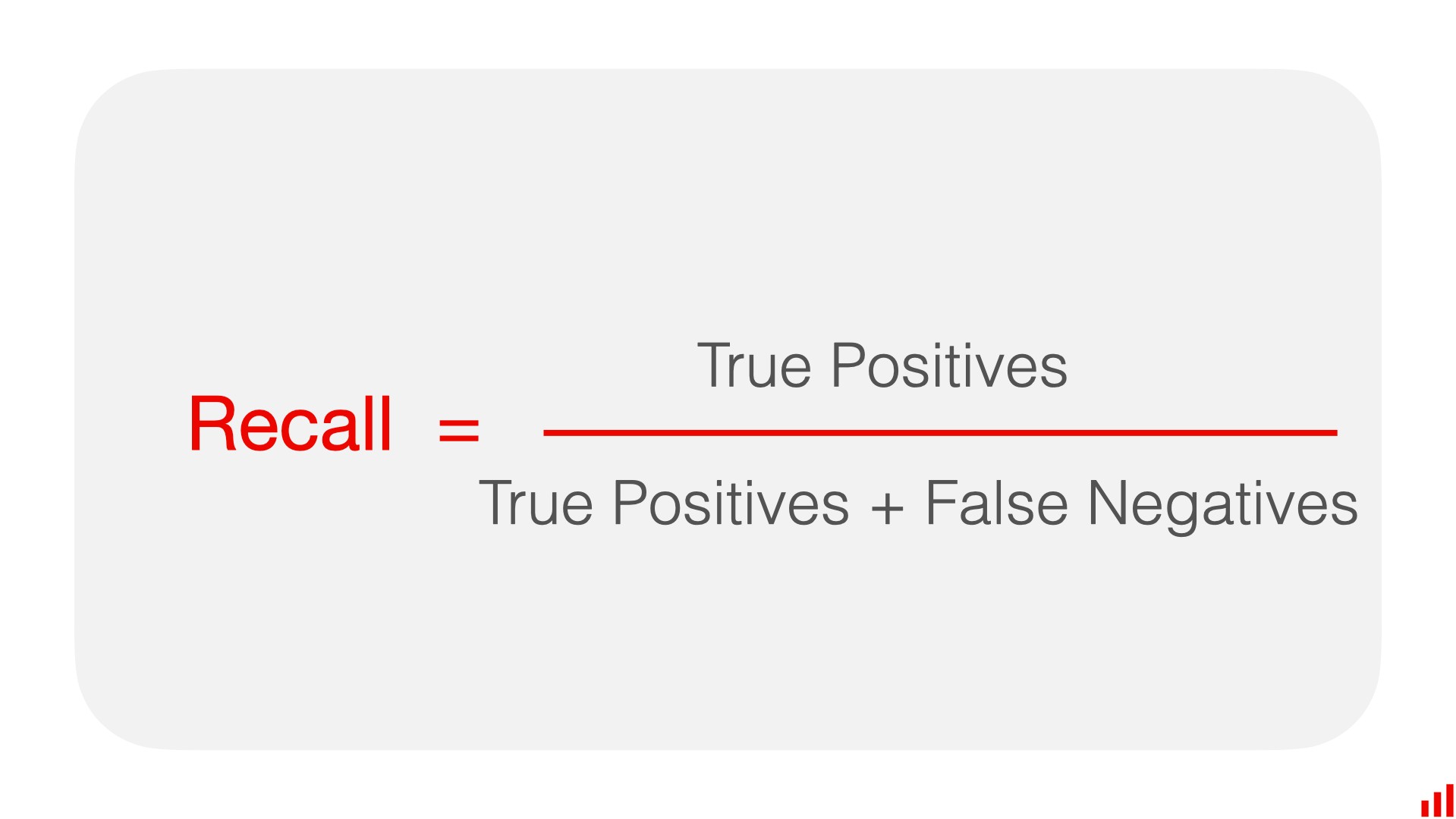

1.1. The Definition of Recall

Recall is calculated as the ratio of true positives (TP) to the sum of true positives and false negatives (FN).

Recall = TP / (TP + FN)

- True Positives (TP): The number of positive instances correctly predicted as positive by the model.

- False Negatives (FN): The number of positive instances incorrectly predicted as negative by the model.
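The formula translates directly into code. The following is a minimal Python sketch; the function name `recall` is our own, and returning 0.0 when there are no actual positives (so the denominator is zero) is a convention we assume here.

```python
def recall(tp: int, fn: int) -> float:
    """Recall = TP / (TP + FN): the share of actual positives the model found."""
    actual_positives = tp + fn
    if actual_positives == 0:
        # No positive instances exist; by our assumed convention, return 0.0.
        return 0.0
    return tp / actual_positives


# 3 of 4 actual positives were detected, one was missed.
print(recall(tp=3, fn=1))  # 0.75
```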

1.2. Why is Recall Important?

Recall is particularly important in scenarios where missing a positive instance has significant consequences. Consider these examples:

- Medical Diagnosis: In detecting a disease, a high recall ensures that the model identifies as many patients with the condition as possible, minimizing the risk of overlooking individuals who need treatment.

- Fraud Detection: In identifying fraudulent transactions, a high recall ensures that the model flags most fraudulent activities, reducing financial losses.

- Spam Detection: In spam filtering, a high recall ensures that most spam emails are caught, although it might also increase the chances of flagging some legitimate emails as spam.

1.3. Recall vs. Precision: Striking the Right Balance

While recall focuses on capturing all positive instances, precision measures the accuracy of the positive predictions. Precision is calculated as the ratio of true positives to the sum of true positives and false positives (FP).

Precision = TP / (TP + FP)

- False Positives (FP): The number of negative instances incorrectly predicted as positive by the model.

Achieving a high recall often comes at the cost of lower precision, and vice versa. The ideal balance between recall and precision depends on the specific application and the relative costs of false positives and false negatives.
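To see that the two metrics answer different questions, this sketch computes both from the same counts. The counts (8 true positives, 2 false positives, 4 false negatives) are made up for illustration.

```python
def precision(tp: int, fp: int) -> float:
    """Share of positive predictions that were correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of actual positives that were predicted positive."""
    return tp / (tp + fn) if tp + fn else 0.0


# Hypothetical counts: 8 hits, 2 false alarms, 4 misses.
tp, fp, fn = 8, 2, 4
print(f"precision = {precision(tp, fp):.3f}")  # precision = 0.800
print(f"recall    = {recall(tp, fn):.3f}")     # recall    = 0.667
```

Few false alarms keep precision high, but the four missed positives pull recall down.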


2. Diving Deeper: Calculating and Interpreting Recall

To effectively use recall, you must know how to calculate and interpret it correctly. This section provides a step-by-step guide with practical examples.

2.1. Step-by-Step Calculation of Recall

- Create a Confusion Matrix: Organize the model’s predictions into a confusion matrix, which summarizes the true positives, false positives, false negatives, and true negatives.

- Identify True Positives (TP) and False Negatives (FN): Locate the values in the confusion matrix that represent the number of true positives and false negatives.

- Apply the Formula: Use the recall formula: Recall = TP / (TP + FN).

- Interpret the Result: The resulting value represents the recall score, indicating the model’s ability to capture all positive instances.
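The four steps can be sketched in plain Python as follows. The labels are hypothetical, `confusion_counts` is an illustrative helper rather than a library function, and label 1 is assumed to be the positive class.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive=1):
    """Step 1: tally TP, FP, FN and TN for a binary problem."""
    counts = Counter()
    for actual, predicted in zip(y_true, y_pred):
        if actual == positive and predicted == positive:
            counts["TP"] += 1
        elif actual != positive and predicted == positive:
            counts["FP"] += 1
        elif actual == positive and predicted != positive:
            counts["FN"] += 1
        else:
            counts["TN"] += 1
    return counts


# Hypothetical labels (1 = positive class, 0 = negative class).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]

cm = confusion_counts(y_true, y_pred)        # Step 1: build the confusion matrix
tp, fn = cm["TP"], cm["FN"]                  # Step 2: read off TP and FN
recall = tp / (tp + fn) if tp + fn else 0.0  # Step 3: apply the formula
print(f"recall = {recall:.2f}")              # Step 4: interpret (recall = 0.80)
```

Here the model catches 4 of the 5 actual positives, so it misses one positive in five.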

2.2. Example Calculation

Suppose you have a medical diagnosis model that predicts whether a patient has a disease. After testing the model on a dataset of 100 patients, you obtain the following results:

- True Positives (TP): 70 (70 patients correctly identified as having the disease)

- False Negatives (FN): 10 (10 patients with the disease incorrectly identified as not having the disease)

- False Positives (FP): 5 (5 patients without the disease incorrectly identified as having the disease)

- True Negatives (TN): 15 (15 patients correctly identified as not having the disease)

Using the recall formula:

Recall = TP / (TP + FN) = 70 / (70 + 10) = 70 / 80 = 0.875

In this case, the recall score is 0.875, or 87.5%. This means that the model correctly identifies 87.5% of the patients with the disease.
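The arithmetic can be checked in a few lines using the counts from the example. The second, noisier confusion matrix is hypothetical; it shows that changing FP or TN leaves recall untouched, because neither appears in the formula.

```python
def recall_from(cm: dict) -> float:
    """Recall only reads the TP and FN cells of the confusion matrix."""
    return cm["TP"] / (cm["TP"] + cm["FN"])


# Counts from the medical-diagnosis example (100 patients in total).
example = {"TP": 70, "FN": 10, "FP": 5, "TN": 15}
print(f"recall = {recall_from(example):.3f}")  # recall = 0.875

# Hypothetical variant with four times as many false alarms: same recall.
noisier = {"TP": 70, "FN": 10, "FP": 20, "TN": 0}
print(f"recall = {recall_from(noisier):.3f}")  # recall = 0.875
```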

2.3. Interpreting Recall Scores

- High Recall (Close to 1): Indicates that the model captures most of the positive instances, so false negatives are rare. On its own, it says nothing about false positives: a model that labels everything positive reaches a recall of 1.

- Low Recall (Close to 0): Indicates that the model is missing many positive instances. It has a high rate of false negatives.

- Moderate Recall (Around 0.5): Indicates that the model captures roughly half of the positive instances and misses the rest. Whether that is acceptable depends on how costly a false negative is in the application.
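Because recall ignores false positives, it should not be read in isolation. As a quick illustration with made-up labels, a degenerate "model" that predicts positive for every instance reaches perfect recall, while its precision falls to the share of positives in the data.

```python
# Hypothetical data: 3 positives among 10 instances.
y_true = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
y_pred = [1] * len(y_true)  # degenerate model: always predict positive

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)

recall = tp / (tp + fn)
precision = tp / (tp + fp)
print(f"recall = {recall:.2f}, precision = {precision:.2f}")  # recall = 1.00, precision = 0.30
```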

2.4. Tools for Calculating Recall in Python

Python offers several libraries to calculate recall easily. Here are some popular options:

- Scikit-learn: A comprehensive machine learning library that provides functions to calculate various metrics, including recall.

- Evidently: An open-source Python library that helps evaluate, test, and monitor ML models in production. It allows for the quick calculation and visualization of accuracy, precision, and recall.

- Statsmodels: A library focused on statistical modeling. For example, fitted logistic regression results provide a prediction table (`pred_table`) of confusion-matrix counts, from which recall can be computed.
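For reference, here is a short scikit-learn sketch. It assumes scikit-learn is installed; `recall_score`, `precision_score` and `confusion_matrix` live in `sklearn.metrics`, and the labels are made up for illustration. By default `recall_score` treats label 1 as the positive class (its `pos_label` parameter defaults to 1).

```python
from sklearn.metrics import confusion_matrix, precision_score, recall_score

# Hypothetical labels (1 = positive class).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]

# For binary labels {0, 1}, ravel() returns the cells in the order TN, FP, FN, TP.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

print("TP, FN:", tp, fn)
print("recall:", recall_score(y_true, y_pred))
print("precision:", precision_score(y_true, y_pred))
```

Computing the confusion matrix alongside the score is a cheap sanity check that the positive class is the one you intended.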

3. Practical Applications of Recall in Different Industries

Recall is a versatile metric with significant applications across various industries. This section explores how recall is used in different sectors to improve decision-making and outcomes.

3.1. Healthcare

In healthcare, recall is crucial for identifying diseases and conditions accurately. Examples include:

- Cancer Detection: Ensuring that all potential cancer cases are identified, even if it means some false positives require further investigation.

- Infectious Disease Screening: Identifying individuals infected with a disease to prevent further spread, even if some healthy individuals are flagged for additional testing.

- Emergency Room Triage: Prioritizing patients with critical conditions to ensure they receive immediate attention, even if some less urgent cases are temporarily delayed.

3.2. Finance

In the finance industry, recall is essential for detecting fraudulent activities and managing risk. Examples include:

- Fraud Detection: Identifying all fraudulent transactions to minimize financial losses, even if some legitimate transactions are flagged for review.

- Credit Risk Assessment: Identifying high-risk borrowers to reduce defaults, even if some creditworthy individuals are denied loans.

- Anti-Money Laundering: Detecting suspicious financial activities to comply with regulations and prevent illicit fund flows, even if some normal transactions are flagged.

3.3. Security

In security, recall is vital for identifying potential threats and ensuring public safety. Examples include:

- Airport Security Screening: Identifying all dangerous objects to prevent potential attacks, even if some harmless items trigger additional screening.

- Cybersecurity Threat Detection: Identifying all malicious activities to protect systems from cyberattacks, even if some benign activities are flagged as suspicious.

- Surveillance Monitoring: Identifying potential security breaches to prevent incidents, even if some normal behaviors are flagged for review.

3.4. Manufacturing

In manufacturing, recall is used to identify defective products and maintain quality control. Examples include:

- Defect Detection: Identifying all defective products to prevent them from reaching customers, even if some good products are flagged for inspection.

- Equipment Failure Prediction: Identifying potential equipment failures to schedule maintenance and prevent downtime, even if some false alarms occur.

- Process Monitoring: Identifying anomalies in the production process to maintain consistent quality, even if some normal variations are flagged.

3.5. E-commerce

In e-commerce, recall helps in personalizing recommendations and detecting fraudulent activities. Examples include:

- Personalized Recommendations: Identifying all relevant products for a user to increase sales, even if some irrelevant products are also recommended.

- Fraudulent Transaction Detection: Identifying all fraudulent transactions to protect customers, even if some legitimate transactions are flagged.

- Customer Churn Prediction: Identifying customers likely to leave to offer retention incentives, even if some loyal customers are also targeted.

4. The Relationship Between Recall, Precision, and Accuracy

Understanding the relationships between recall, precision, and accuracy is crucial for building effective machine learning models. This section explores how these metrics interact and how to balance them for optimal performance.

4.1. Accuracy: A General Overview

Accuracy measures the overall correctness of a model by calculating the ratio of correct predictions to the total number of predictions.

Accuracy = (TP + TN) / (TP + TN + FP + FN)

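While accuracy provides a general overview of model performance, it can be misleading on imbalanced datasets. If only 1% of instances are positive, a model that always predicts the negative class scores 99% accuracy while its recall is 0.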
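The imbalance problem is easy to reproduce. This sketch uses a hypothetical dataset with 10 positives among 1,000 instances and a "model" that never predicts the positive class.

```python
# Hypothetical imbalanced dataset: 10 positives among 1,000 instances.
y_true = [1] * 10 + [0] * 990
y_pred = [0] * len(y_true)  # a "model" that never predicts the positive class

correct = sum(t == p for t, p in zip(y_true, y_pred))
tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))

accuracy = correct / len(y_true)
recall = tp / (tp + fn)
print(f"accuracy = {accuracy:.1%}, recall = {recall:.1%}")  # accuracy = 99.0%, recall = 0.0%
```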

4.2. The Precision-Recall Tradeoff

There is often a tradeoff between precision and recall. Improving one metric may lead to a decrease in the other. This tradeoff is particularly evident when adjusting the decision threshold of a model.

- Increasing Recall: Lowering the decision threshold increases the number of positive predictions, which can capture more true positives but also increase false positives, leading to lower precision.

- Increasing Precision: Raising the decision threshold reduces the number of positive predictions, which can decrease false positives but also miss some true positives, leading to lower recall.
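The tradeoff can be seen by sweeping the threshold over a handful of predicted probabilities. The scores and labels below are invented for illustration, and `precision_recall_at` is a hypothetical helper; an instance counts as positive when its score is at or above the threshold.

```python
# Hypothetical model scores (predicted probability of the positive class).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.95, 0.80, 0.55, 0.30, 0.70, 0.45, 0.35, 0.20, 0.10, 0.05]


def precision_recall_at(threshold):
    """Classify score >= threshold as positive, then compute precision and recall."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


for threshold in (0.75, 0.5, 0.25):
    p, r = precision_recall_at(threshold)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
```

With these numbers, lowering the threshold from 0.75 to 0.25 raises recall from 0.50 to 1.00, while precision drops from 1.00 to 0.57.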

4.3. F1-Score: Balancing Precision and Recall

The F1-score is a metric that combines precision and recall into a single value, providing a balanced measure of model performance. It is calculated as the harmonic mean of precision and recall.

F1-Score = 2 × (Precision × Recall) / (Precision + Recall)

The F1-score is useful when you want to find a balance between precision and recall, particularly when the costs of false positives and false negatives are similar.
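As a worked check, this sketch applies the formula to the counts from the medical-diagnosis example in Section 2.2 (TP = 70, FP = 5, FN = 10). The function name `harmonic_f1` is our own; the count-based identity F1 = 2TP / (2TP + FP + FN) follows from substituting the definitions of precision and recall.

```python
def harmonic_f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Counts from the medical-diagnosis example.
tp, fp, fn = 70, 5, 10
precision = tp / (tp + fp)  # 70 / 75
recall = tp / (tp + fn)     # 70 / 80
f1 = harmonic_f1(precision, recall)
print(f"F1 = {f1:.3f}")     # F1 = 0.903

# Equivalent count-based form: 2TP / (2TP + FP + FN) = 140 / 155.
assert abs(f1 - 2 * tp / (2 * tp + fp + fn)) < 1e-12
```

Because it is a harmonic mean, F1 stays low whenever either metric is low: `harmonic_f1(1.0, 0.1)` is about 0.18, whereas the arithmetic mean would be 0.55.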

4.4. When to Prioritize Recall, Precision, or Accuracy

The choice of which metric to prioritize depends on the specific application and the relative costs of false positives and false negatives.

- Prioritize Recall: When the cost of false negatives is high, such as in medical diagnosis or fraud detection.

- Prioritize Precision: When the cost of false positives is high, such as in spam filtering, or in credit risk assessment when wrongly rejecting creditworthy applicants is the more expensive error.

- Prioritize Accuracy: When the dataset is balanced and the costs of false positives and false negatives are similar; in that case, accuracy gives a reasonable overall picture of model performance.

- Prioritize F1-Score: When you want to balance precision and recall, particularly when the costs of false positives and false negatives are similar.

4.5. Practical Example: Choosing the Right Metric

Consider a scenario where you are building a fraud detection model. The cost of missing a fraudulent transaction (false negative) is significantly higher than the cost of incorrectly flagging a legitimate transaction (false positive). In this case, you should prioritize recall to ensure that most fraudulent transactions are detected, even if it means some legitimate transactions are flagged for review.

On the other hand, if you are building a spam filtering model, the cost of incorrectly flagging a legitimate email as spam (false positive) is higher than the cost of missing a spam email (false negative). In this case, you should prioritize precision to ensure that legitimate emails are not incorrectly classified as spam.

4.6. Using Evidently to Visualize and Analyze Metrics

Evidently is a powerful tool for visualizing and analyzing various metrics, including recall, precision, and accuracy. It provides interactive reports that include confusion matrices, ROC curves, and other visualizations, making it easier to understand model performance and make informed decisions.


5. Strategies to Improve Recall in Machine Learning Models

Improving recall is essential when the cost of false negatives is high. This section explores several strategies to enhance recall in machine learning models.

5.1. Data Balancing Techniques

Imbalanced datasets can significantly affect recall. Data balancing techniques can help improve recall by addressing the imbalance between classes.

- Oversampling: Increasing the number of instances in the minority class by duplicating existing samples or generating synthetic samples.

- Undersampling: Reducing the number of instances in the majority class by randomly removing samples.

- SMOTE (Synthetic Minority Oversampling Technique): Generating synthetic samples for the minority class by interpolating between a minority-class sample and one of its nearest minority-class neighbors.
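The core idea of SMOTE fits in a few lines. The sketch below is a simplified, hypothetical implementation (`smote_like` is our own name) for illustration only; for real projects, the imbalanced-learn package provides `SMOTE` and `RandomOverSampler` in `imblearn.over_sampling`.

```python
import math
import random


def smote_like(minority, n_new, k=2, seed=0):
    """Simplified SMOTE sketch: create `n_new` synthetic minority points.

    `minority` holds feature tuples from the minority class only (at least 2 points).
    Each new point lies on the segment between a random minority point and one
    of its `k` nearest minority-class neighbors.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbors = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # random position along the segment, in [0, 1)
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbor)))
    return synthetic


# Hypothetical 2-D minority-class points.
minority = [(1.0, 1.0), (1.5, 1.2), (2.0, 0.8), (1.2, 1.6)]
new_points = smote_like(minority, n_new=6)
print(len(new_points))  # 6
```

Whatever resampling method you use, apply it only to the training split so that the test set keeps the real class balance.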

5.2. Adjusting the Decision Threshold

The decision threshold is the predicted probability (or score) at or above which an instance is classified as positive. Lowering it increases the number of positive predictions, which can help improve recall.

- Lowering the Threshold: Decreasing the decision threshold increases the number of positive predictions, which can capture more true positives but also increase false positives, leading to lower precision.

- ROC Curve Analysis: Using ROC (Receiver Operating Characteristic) curves to visualize the tradeoff between true positive rate (recall) and false positive rate at different decision thresholds.

- Precision-Recall Curve Analysis: Using precision-recall curves to visualize the tradeoff between precision and recall at different decision thresholds.
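To make the ROC idea concrete, this sketch computes the (FPR, TPR) point for every distinct score used as a threshold. The labels, scores and the helper name `roc_points` are hypothetical; in practice, scikit-learn's `roc_curve` and `precision_recall_curve` in `sklearn.metrics` compute these curves for you.

```python
def roc_points(y_true, scores):
    """Return (threshold, FPR, TPR) for each distinct score used as a threshold."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = []
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if t == 1 and s >= threshold)
        fp = sum(1 for t, s in zip(y_true, scores) if t == 0 and s >= threshold)
        points.append((threshold, fp / negatives, tp / positives))
    return points


# Hypothetical labels and scores.
y_true = [1, 1, 1, 0, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
for threshold, fpr, tpr in roc_points(y_true, scores):
    print(f"threshold={threshold:.1f}  FPR={fpr:.2f}  TPR(recall)={tpr:.2f}")
```

Reading down the output, each lower threshold can only keep or increase TPR (recall), usually at the price of a higher FPR.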

5.3. Ensemble Methods

Ensemble methods combine multiple models to improve overall performance. These methods can help improve recall by leveraging the strengths of different models.

- Boosting: Training multiple models sequentially, with each model focusing on correcting the errors made by the previous models.

- Bagging: Training multiple models independently on bootstrap samples of the data (random samples drawn with replacement) and combining their predictions by averaging or majority vote.

- Random Forests: An ensemble of decision trees, where each tree is trained on a random subset of the data and features.

5.4. Cost-Sensitive Learning

Cost-sensitive learning assigns different costs to different types of errors, allowing the model to prioritize minimizing the cost of false negatives.

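- Cost Matrix: Defining a cost matrix that assigns a cost to each outcome (true positive, false positive, false negative, true negative). Correct predictions usually cost nothing, and when recall matters, a false negative is given a higher cost than a false positive.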

- Algorithm Modification: Modifying the learning algorithm to incorporate the cost matrix and prioritize minimizing the overall cost.
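One simple way to use a cost matrix is to pick the decision threshold from it. Assuming correct predictions cost nothing and the model's probabilities are well calibrated, predicting positive is cheaper in expectation whenever p × C_FN > (1 − p) × C_FP, that is, when p > C_FP / (C_FP + C_FN). The costs, labels and function names below are hypothetical.

```python
def cost_optimal_threshold(cost_fp: float, cost_fn: float) -> float:
    """Threshold on P(positive) minimizing expected cost (correct predictions cost 0)."""
    return cost_fp / (cost_fp + cost_fn)


def total_cost(y_true, probs, threshold, cost_fp, cost_fn):
    """Sum the cost of every false positive and false negative at a given threshold."""
    cost = 0.0
    for t, p in zip(y_true, probs):
        predicted = 1 if p >= threshold else 0
        if predicted == 1 and t == 0:
            cost += cost_fp
        elif predicted == 0 and t == 1:
            cost += cost_fn
    return cost


# Hypothetical costs: a missed positive is 9 times as expensive as a false alarm.
cost_fp, cost_fn = 1.0, 9.0
threshold = cost_optimal_threshold(cost_fp, cost_fn)
print(threshold)  # 0.1

y_true = [1, 0, 1, 0, 0, 1]
probs = [0.9, 0.4, 0.3, 0.2, 0.05, 0.15]
print(total_cost(y_true, probs, 0.5, cost_fp, cost_fn))        # 18.0 (two missed positives)
print(total_cost(y_true, probs, threshold, cost_fp, cost_fn))  # 2.0 (two false alarms)
```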

5.5. Feature Engineering

Feature engineering involves creating new features or transforming existing features to improve model performance. This can help improve recall by providing the model with more relevant information.

- Domain Knowledge: Using domain knowledge to identify and create features that are likely to be predictive of the positive class.

- Feature Transformation: Transforming existing features to make them more suitable for the model, such as scaling, normalization, or encoding categorical variables.

5.6. Algorithm Selection

Choosing the right algorithm can significantly impact recall. Some algorithms are better suited for imbalanced datasets or for prioritizing recall.

- Decision Trees: Can be trained with class weights so that splits which capture positive instances are favored, which helps on imbalanced datasets.

- Support Vector Machines (SVM): Can be tuned to prioritize recall by giving the positive class a higher misclassification cost (a class-weighted cost parameter C).

- Logistic Regression: Outputs class probabilities, so recall can be raised by lowering the decision threshold or by applying class weights.
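Class weights are the common thread in the list above. scikit-learn documents `class_weight="balanced"` as weights of n_samples / (n_classes × count of each class), and classifiers such as `LogisticRegression`, `SVC` and `DecisionTreeClassifier` accept a `class_weight` parameter. The plain-Python sketch below only reproduces that formula; the labels are hypothetical.

```python
from collections import Counter


def balanced_class_weights(y):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(y)
    n_samples, n_classes = len(y), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


# Hypothetical labels: 90 negatives, 10 positives.
y = [0] * 90 + [1] * 10
weights = balanced_class_weights(y)
print(weights[1] / weights[0])  # positives weigh about 9 times as much as negatives
```

With these weights, each missed positive costs roughly nine times as much in the training loss as a misclassified negative, which typically pushes the model toward higher recall at some cost in precision.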

5.7. Monitoring and Retraining

Continuously monitoring model performance and retraining the model with new data can help maintain high recall over time.

- Performance Monitoring: Tracking recall, precision, and other metrics over time to detect any degradation in performance.

- Retraining: Retraining the model with new data to adapt to changing patterns and maintain high recall.
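A monitoring job can be as simple as tracking recall over a sliding window of recently labeled positives. `RecallMonitor`, the window size and the alert level are all hypothetical choices for illustration. Note that in many applications, true labels arrive with a delay, so the window lags behind live traffic.

```python
from collections import deque


class RecallMonitor:
    """Hypothetical helper: recall over the most recent `window` actual positives."""

    def __init__(self, window: int = 100, alert_below: float = 0.8):
        # 1 = an actual positive the model caught, 0 = one it missed.
        self.outcomes = deque(maxlen=window)
        self.alert_below = alert_below

    def record(self, actual: int, predicted: int) -> None:
        """Log one labeled prediction; negatives do not affect recall, so skip them."""
        if actual == 1:
            self.outcomes.append(1 if predicted == 1 else 0)

    def recall(self) -> float:
        """Share of recent actual positives that were caught (NaN if none seen yet)."""
        if not self.outcomes:
            return float("nan")
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self) -> bool:
        """True when recent recall has dropped below the alert level."""
        return bool(self.outcomes) and self.recall() < self.alert_below


monitor = RecallMonitor(window=5, alert_below=0.8)
for actual, predicted in [(1, 1), (0, 1), (1, 1), (1, 0), (1, 0), (1, 1)]:
    monitor.record(actual, predicted)
print(monitor.recall(), monitor.should_alert())  # 0.6 True
```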

6. Advanced Techniques for Maximizing Recall

Beyond the foundational strategies, several advanced techniques can further enhance recall in machine learning models. These methods often require deeper understanding and more sophisticated implementation.

6.1. Threshold Optimization

Optimizing the decision threshold involves selecting the threshold that provides the best balance between recall and precision for a specific application.

- Grid Search: Systematically evaluating different threshold values to identify the optimal threshold.

- ROC Curve Analysis: Using ROC curves to identify the threshold that maximizes the true positive rate while minimizing the false positive rate.

- Precision-Recall Curve Analysis: Using precision-recall curves to identify the threshold that provides the desired balance between precision and recall.
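A grid search over thresholds can encode a requirement such as "recall must be at least 0.75" directly. This sketch keeps the threshold with the best precision among those meeting the recall target; the data, the grid of 0.01 to 0.99, the tie-breaking rule and the name `pick_threshold` are all assumptions for illustration. Tune the threshold on a validation set, not on the test set used for the final evaluation.

```python
def pick_threshold(y_true, scores, min_recall, grid=None):
    """Among thresholds with recall >= min_recall, keep the one with the best precision.

    Returns (precision, threshold, recall), or None if no threshold meets the target.
    Ties in precision go to the higher threshold.
    """
    grid = grid if grid is not None else [i / 100 for i in range(1, 100)]
    best = None
    for threshold in grid:
        tp = sum(1 for t, s in zip(y_true, scores) if t == 1 and s >= threshold)
        fp = sum(1 for t, s in zip(y_true, scores) if t == 0 and s >= threshold)
        fn = sum(1 for t, s in zip(y_true, scores) if t == 1 and s < threshold)
        recall = tp / (tp + fn) if tp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        if recall >= min_recall and (best is None or precision >= best[0]):
            best = (precision, threshold, recall)
    return best


# Hypothetical validation labels and scores.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.95, 0.80, 0.55, 0.30, 0.70, 0.45, 0.35, 0.20, 0.10, 0.05]
print(pick_threshold(y_true, scores, min_recall=0.75))
```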

6.2. Anomaly Detection Techniques

Anomaly detection techniques identify rare or unusual instances that deviate significantly from the norm. These techniques can be used to improve recall by detecting positive instances that are difficult to classify using traditional methods.

- One-Class SVM: Training a model to recognize normal instances and identify anomalies as instances that deviate significantly from the normal pattern.

- Isolation Forest: Building an ensemble of decision trees to isolate anomalies by identifying instances that require fewer splits to isolate.

6.3. Active Learning

Active learning involves selecting the most informative instances for labeling, allowing the model to learn more effectively from a smaller amount of labeled data. This can help improve recall by focusing on instances that are likely to be positive but are difficult to classify.

- Uncertainty Sampling: Selecting instances for which the model is most uncertain about the prediction.

- Query by Committee: Training multiple models and selecting instances for which the models disagree the most.
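Both selection rules fit in a few lines. The sketch below assumes a binary classifier that outputs P(positive) for each unlabeled instance, and a committee whose members each output 0/1 votes; the function names and data are hypothetical.

```python
def uncertainty_sampling(probs, k):
    """Return indices of the k pool instances whose P(positive) is closest to 0.5."""
    return sorted(range(len(probs)), key=lambda i: abs(probs[i] - 0.5))[:k]


def query_by_committee(committee_preds, k):
    """Return indices of the k instances on which the committee's 0/1 votes disagree most.

    committee_preds holds one list of predictions per committee member.
    Disagreement is measured as min(positive votes, negative votes).
    """
    n_members = len(committee_preds)
    n_items = len(committee_preds[0])

    def disagreement(i):
        positive_votes = sum(member[i] for member in committee_preds)
        return min(positive_votes, n_members - positive_votes)

    return sorted(range(n_items), key=disagreement, reverse=True)[:k]


# Hypothetical unlabeled pool.
probs = [0.97, 0.52, 0.10, 0.45, 0.80]
print(uncertainty_sampling(probs, 2))  # [1, 3]

committee = [[1, 0, 1, 1], [1, 1, 0, 1], [1, 0, 0, 1]]
print(query_by_committee(committee, 2))
```

The selected instances are then sent to a human for labeling and added to the training set.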

6.4. Transfer Learning

Transfer learning involves leveraging knowledge gained from a related task to improve performance on a new task. This can help improve recall by transferring knowledge about positive instances from a related dataset to the target dataset.

- Pre-trained Models: Using pre-trained models trained on large datasets to initialize the model and improve performance on the target task.

- Fine-tuning: Fine-tuning the pre-trained model on the target dataset to adapt it to the specific characteristics of the target task.

6.5. Meta-Learning

Meta-learning involves learning how to learn, allowing the model to adapt quickly to new tasks or datasets. This can help improve recall by enabling the model to quickly adapt to changing patterns and maintain high recall over time.

- Model Agnostic Meta-Learning (MAML): Training a model that can quickly adapt to new tasks by learning a good initialization point.

- Reptile: A meta-learning algorithm that focuses on finding a good initialization point by repeatedly training and adapting to new tasks.

7. Implementing Recall with LEARNS.EDU.VN: A Comprehensive Approach

At LEARNS.EDU.VN, we provide a comprehensive approach to understanding and implementing recall in machine learning models. Our resources include:

7.1. Detailed Guides and Tutorials

We offer detailed guides and tutorials that cover the fundamentals of recall, its calculation, and its application in various industries. These resources are designed to help you understand the concepts and implement them effectively.

7.2. Practical Examples and Case Studies

Our platform includes practical examples and case studies that demonstrate how recall is used in real-world scenarios. These examples provide valuable insights and help you apply the concepts to your own projects.

7.3. Hands-On Projects and Exercises

We provide hands-on projects and exercises that allow you to practice implementing recall in machine learning models. These projects are designed to reinforce your understanding and develop your skills.

7.4. Expert Support and Mentorship

Our team of experts is available to provide support and mentorship, helping you overcome challenges and achieve your goals. We offer personalized guidance and answer your questions to ensure your success.

7.5. Courses and Workshops

We offer courses and workshops that cover advanced topics in recall and machine learning. These courses are designed to help you stay up-to-date with the latest trends and techniques.

By leveraging our resources, you can gain a deep understanding of recall and its application in machine learning models. This will enable you to build more effective and reliable models that deliver accurate and impactful results.

8. Real-World Examples and Case Studies

To illustrate the practical significance of recall, let’s examine several real-world examples and case studies where high recall is crucial.

8.1. Case Study 1: Medical Diagnosis of Rare Diseases

In diagnosing rare diseases, the consequences of missing a positive case (false negative) can be severe. Delayed or missed diagnoses can lead to delayed treatment, disease progression, and even death. Therefore, medical diagnosis models for rare diseases must prioritize recall.

- Challenge: Rare diseases often have limited data, making it difficult to train accurate models. Additionally, symptoms can be subtle and overlap with more common conditions.

- Solution: Using data augmentation techniques, cost-sensitive learning, and ensemble methods to improve recall. Prioritizing algorithms that are effective with imbalanced datasets.

- Outcome: Improved diagnostic accuracy and reduced risk of missed diagnoses, leading to better patient outcomes.

8.2. Case Study 2: Fraud Detection in Online Transactions

In online transactions, the cost of missing a fraudulent transaction can be significant. Fraudulent activities can result in financial losses, reputational damage, and legal liabilities. Therefore, fraud detection models must prioritize recall.

- Challenge: Fraudulent transactions are often rare and difficult to detect, as fraudsters constantly adapt their methods to evade detection.

- Solution: Using anomaly detection techniques, active learning, and feature engineering to improve recall. Monitoring model performance and retraining the model with new data to adapt to changing fraud patterns.

- Outcome: Reduced financial losses and improved customer protection, leading to increased trust and loyalty.

8.3. Case Study 3: Cybersecurity Threat Detection

In cybersecurity, the cost of missing a threat can be catastrophic. Cyberattacks can result in data breaches, system downtime, and financial losses. Therefore, cybersecurity threat detection models must prioritize recall.

- Challenge: Cyber threats are constantly evolving, making it difficult to keep detection models up-to-date. Additionally, the volume of data that needs to be analyzed is enormous, requiring efficient and scalable solutions.

- Solution: Using transfer learning, meta-learning, and anomaly detection techniques to improve recall. Leveraging threat intelligence feeds and continuously monitoring model performance.

- Outcome: Enhanced security and reduced risk of cyberattacks, leading to improved data protection and business continuity.

8.4. Case Study 4: Defect Detection in Manufacturing

In manufacturing, the cost of missing a defective product can be significant. Defective products can result in customer dissatisfaction, warranty claims, and reputational damage. Therefore, defect detection models must prioritize recall.

- Challenge: Defects can be rare and difficult to detect, particularly in complex manufacturing processes. Additionally, the cost of false positives (incorrectly flagging a good product as defective) can be high, as it can disrupt production and increase costs.

- Solution: Using data augmentation techniques, ensemble methods, and cost-sensitive learning to improve recall while minimizing false positives. Leveraging sensor data and machine vision to detect subtle defects.

- Outcome: Improved product quality and reduced costs, leading to increased customer satisfaction and profitability.

8.5. Case Study 5: Targeted Advertising for High-Value Products

In targeted advertising, the cost of missing a potential high-value customer can be significant. Reaching the right audience can lead to increased sales and revenue. Therefore, targeted advertising models must prioritize recall.

- Challenge: Identifying potential high-value customers can be difficult, as they may have diverse characteristics and behaviors. Additionally, the cost of false positives (targeting irrelevant customers) can be high, as it can waste advertising resources and annoy potential customers.

- Solution: Using feature engineering, active learning, and ensemble methods to improve recall while minimizing false positives. Leveraging customer data and behavioral insights to identify potential high-value customers.

- Outcome: Increased sales and revenue, leading to improved marketing ROI.

9. Common Pitfalls and How to Avoid Them

When working with recall, it is essential to be aware of common pitfalls and how to avoid them. This section discusses some of the most common mistakes and provides guidance on how to prevent them.

9.1. Ignoring Imbalanced Datasets

One of the most common pitfalls is ignoring the impact of imbalanced datasets on recall. When one class is significantly more prevalent than the other, models can become biased towards the majority class, resulting in low recall for the minority class.

- Solution: Use data balancing techniques such as oversampling, undersampling, or SMOTE to address the imbalance. Additionally, instead of accuracy, use evaluation metrics that stay informative on imbalanced data, such as the F1-score or the area under the precision-recall curve (AUPRC).
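AUPRC is often summarized as average precision (AP), defined step-wise as AP = Σ (R_n − R_(n−1)) × P_n over successive thresholds. scikit-learn documents this same definition for `average_precision_score`. The pure-Python sketch below uses made-up data and treats tied scores as a single threshold. For a random ranking, AP is roughly the positive-class prevalence, so compare results against that baseline rather than against 0.5.

```python
from itertools import groupby


def average_precision(y_true, scores):
    """AP = sum over thresholds of (R_n - R_(n-1)) * P_n; tied scores form one threshold."""
    total_pos = sum(y_true)
    if total_pos == 0:
        return 0.0
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    prev_recall = ap = 0.0
    for _, group in groupby(ranked, key=lambda pair: pair[0]):
        for _, label in group:
            tp += label
            fp += 1 - label
        recall = tp / total_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Hypothetical labels and scores (prevalence 3/5 = 0.6).
y_true = [1, 0, 1, 1, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.2]
print(f"AP = {average_precision(y_true, scores):.3f}")  # AP = 0.806
```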

9.2. Overfitting the Training Data

Overfitting occurs when a model learns the training data too well and performs poorly on new, unseen data. This can result in high recall on the training data but low recall on the test data.

- Solution: Use regularization techniques such as L1 or L2 regularization to prevent overfitting. Additionally, use cross-validation to evaluate model performance on multiple subsets of the data and ensure that the model generalizes well.

9.3. Neglecting Feature Engineering

Neglecting feature engineering can result in poor model performance, including low recall. The quality of the features used to train a model has a significant impact on its ability to accurately classify instances.

- Solution: Invest time and effort in feature engineering to create informative and relevant features. Use domain knowledge to identify features that are likely to be predictive of the target variable. Additionally, use feature selection techniques to identify the most important features and remove irrelevant ones.

9.4. Using Inappropriate Algorithms

Using inappropriate algorithms can result in suboptimal performance, including low recall. Some algorithms are better suited for certain types of data or tasks than others.

- Solution: Choose algorithms that are appropriate for the data and task at hand. Consider the characteristics of the data, such as its size, dimensionality, and distribution. Additionally, consider the goals of the task, such as maximizing recall or balancing precision and recall.

9.5. Failing to Monitor Model Performance

Failing to monitor model performance can result in undetected degradation in recall over time. Models can become less accurate as the data changes or as new patterns emerge.

- Solution: Continuously monitor model performance and retrain the model with new data to adapt to changing patterns and maintain high recall. Use automated monitoring tools to track key metrics and alert you to any significant changes in performance.

9.6. Ignoring Cost Considerations

Ignoring cost considerations can lead to suboptimal decision-making when prioritizing recall. The costs of false positives and false negatives can vary significantly depending on the application.

- Solution: Consider the costs of false positives and false negatives when choosing the appropriate balance between recall and precision. Use cost-sensitive learning techniques to incorporate cost information into the model training process. Additionally, use decision analysis tools to evaluate the economic impact of different decision thresholds.

9.7. Over-Reliance on Default Thresholds

Over-reliance on default decision thresholds can lead to suboptimal performance. The default threshold of 0.5 may not be appropriate for all applications.

- Solution: Optimize the decision threshold for the specific application. Use ROC curve analysis or precision-recall curve analysis to identify the threshold that provides the best balance between recall and precision.

10. Emerging Trends and Future Directions

The field of machine learning is constantly evolving, and new trends and directions are emerging that have the potential to further enhance recall. This section discusses some of the most promising developments.

10.1. Deep Learning Techniques

Deep learning techniques, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have shown remarkable success in a variety of tasks, including image recognition, natural language processing, and speech recognition. These techniques can also be used to improve recall in classification models.

- CNNs: Effective for extracting features from images and can be used to detect subtle patterns that are difficult to identify using traditional methods.

- RNNs: Effective for processing sequential data and can be used to detect temporal patterns that are indicative of the target variable.

10.2. Explainable AI (XAI)

Explainable AI (XAI) techniques aim to make machine learning models more transparent and interpretable. This can help improve recall indirectly: examining which features drive the model’s missed positives (false negatives) can point to better features or reveal problems in the data.

- SHAP (SHapley Additive exPlanations): A technique that assigns each feature a Shapley value, which measures its contribution to the prediction.

- LIME (Local Interpretable Model-agnostic Explanations): A technique that explains the predictions of any machine learning model by approximating it locally with a linear model.

10.3. Federated Learning

Federated learning is a distributed machine learning technique that allows models to be trained on decentralized data without sharing the data itself. This can help improve recall by leveraging data from multiple sources while preserving privacy.

- Privacy Preservation: Federated learning ensures that sensitive data is not shared, making it suitable for applications where privacy is a concern.

- Scalability: Federated learning can scale to handle large datasets and can be used to train models on edge devices.

10.4. Reinforcement Learning

Reinforcement learning is a type of machine learning where an agent learns to make decisions in an environment to maximize a reward signal. This can be used to improve recall by training an agent to actively seek out positive instances.

- Active Learning: Reinforcement learning can be used to implement active learning, where the agent selects the most informative instances for labeling.

- Adaptive Sampling: Reinforcement learning can be used to implement adaptive sampling, where the agent adjusts the sampling strategy to focus on positive instances.
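The adaptive-sampling idea can be illustrated with a multi-armed bandit, the simplest single-state form of reinforcement learning. In this minimal sketch, each "arm" is a hypothetical data source, the reward is 1 when the sampled record turns out to be positive, and an epsilon-greedy agent gradually concentrates its sampling budget on the source with the highest positive rate. The source rates, `epsilon`, and round count are illustrative assumptions, and labels are simulated rather than obtained from an annotator.

```python
import random


def adaptive_sampling(positive_rates, rounds=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit that learns which data source yields the most positives.

    positive_rates: true chance that a record from each source is positive.
        The agent never sees these; they are used only to simulate labels.
    Returns how many times each source was sampled and the total positives found.
    """
    rng = random.Random(seed)
    k = len(positive_rates)
    pulls = [0] * k
    estimates = [0.0] * k  # running mean reward (observed positive rate) per source
    positives_found = 0
    for t in range(rounds):
        if t < k:
            arm = t  # sample every source once before exploiting
        elif rng.random() < epsilon:
            arm = rng.randrange(k)  # explore a random source
        else:
            arm = max(range(k), key=lambda a: estimates[a])  # exploit the best estimate
        reward = 1 if rng.random() < positive_rates[arm] else 0  # simulated label
        pulls[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]  # incremental mean
        positives_found += reward
    return pulls, positives_found


pulls, found = adaptive_sampling([0.05, 0.40, 0.10])
print(pulls, found)  # most pulls should go to the second source
```

Because the agent keeps exploring a fraction `epsilon` of the time, it can notice if another source becomes more productive, at the cost of spending some budget on poorer sources.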

10.5. Quantum Machine Learning

Quantum machine learning is a field that explores the use of quantum computers to solve machine learning problems. Quantum computers have the potential to solve certain types of problems much faster than classical computers; if that speed translates into training richer models, it could eventually lead to improvements in recall, although this remains speculative.

- Quantum Algorithms: Quantum algorithms, such as quantum support vector machines (QSVMs) and quantum neural networks (QNNs), have the potential to improve the performance of machine learning models.

- Computational Power: For certain problems, quantum computers could offer substantial speedups over classical computers, which might enable the training of more complex models. Current quantum hardware, however, is still small and noisy.

FAQ

1. What is the difference between recall and accuracy?

Accuracy measures the overall correctness of a model, while recall measures the model’s ability to identify all positive instances. Accuracy can be misleading when dealing with imbalanced datasets, whereas recall focuses specifically on the positive class.
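The effect is easy to reproduce. In this sketch, a hypothetical dataset has 95 negatives and 5 positives, and the "model" simply predicts negative for everything:

```python
def accuracy(y_true, y_pred):
    # Fraction of all predictions that match the label.
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred):
    # TP / (TP + FN); returns 0.0 by convention when there are no positives.
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp / (tp + fn) if tp + fn else 0.0


# Hypothetical imbalanced dataset: 95 negatives, 5 positives.
y_true = [0] * 95 + [1] * 5
# A "model" that always predicts the majority (negative) class.
y_pred = [0] * 100

print(accuracy(y_true, y_pred))  # 0.95
print(recall(y_true, y_pred))    # 0.0
```

The model looks 95% accurate while missing every positive case, which is exactly the failure recall is designed to expose.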

2. When should I prioritize recall over precision?

Prioritize recall when the cost of false negatives is high. This is common in scenarios like medical diagnosis, fraud detection, and cybersecurity threat detection, where missing a positive instance can have severe consequences.

3. How can I improve recall in my machine learning model?

You can improve recall by using data balancing techniques, adjusting the decision threshold, using ensemble methods, implementing cost-sensitive learning, performing feature engineering, selecting appropriate algorithms, and continuously monitoring and retraining your model.
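Of these techniques, data balancing is the simplest to show without a library. The function below is a minimal random-oversampling sketch that duplicates randomly chosen minority-class rows until the classes are equal in size; the function name and toy data are hypothetical. In practice, a maintained implementation such as `RandomOverSampler` from the `imbalanced-learn` package is usually preferable.

```python
import random


def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen minority-class rows until both classes are equal in size.

    Assumes binary 0/1 labels with both classes present. Apply it only to the
    training split so duplicated rows never leak into validation or test data.
    """
    rng = random.Random(seed)
    pos = [i for i, label in enumerate(y) if label == 1]
    neg = [i for i, label in enumerate(y) if label == 0]
    minority, majority = (pos, neg) if len(pos) < len(neg) else (neg, pos)
    # Draw extra minority indices with replacement to close the gap.
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    indices = list(range(len(y))) + extra
    rng.shuffle(indices)
    return [X[i] for i in indices], [y[i] for i in indices]


# Hypothetical toy data: 8 negatives, 2 positives, one feature each.
X = [[i] for i in range(10)]
y = [0] * 8 + [1] * 2
X_bal, y_bal = random_oversample(X, y)
print(y_bal.count(0), y_bal.count(1))  # 8 8
```

Oversampling makes the training signal from positives harder to ignore, but duplicating rows can also encourage overfitting to those few examples, so recall should be checked on untouched validation data.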

4. What are some common pitfalls to avoid when working with recall?

Common pitfalls include ignoring imbalanced datasets, overfitting the training data, neglecting feature engineering, using inappropriate algorithms, failing to monitor model performance, ignoring cost considerations, and over-reliance on default thresholds.

5. What is the F1-score, and how does it relate to recall and precision?

The F1-score is the harmonic mean of precision and recall, providing a balanced measure of model performance. It is useful when you want to find a balance between precision and recall, particularly when the costs of false positives and false negatives are similar.
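In terms of confusion-matrix counts, F1 = 2 · P · R / (P + R), which simplifies to 2TP / (2TP + FP + FN). A minimal sketch with hypothetical counts:

```python
def f1_from_counts(tp, fp, fn):
    """F1 as the harmonic mean of precision and recall, from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0  # no true positives at all
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: 40 true positives, 10 false positives, 40 false negatives.
print(f1_from_counts(40, 10, 40))
```

Here precision is 0.8 and recall is 0.5, so F1 is about 0.615 (80 / 130), noticeably below their arithmetic mean of 0.65: the harmonic mean is pulled toward the weaker of the two scores.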

6. Can I use deep learning to improve recall?

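Yes, deep learning techniques such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) can help improve recall by extracting more complex features and patterns from the data, particularly images, text, and other sequences. They do not guarantee higher recall on their own, however, and still benefit from threshold tuning and careful handling of class imbalance.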

7. What is the role of the decision threshold in recall?

The decision threshold is the minimum predicted probability (or score) at which an instance is classified as positive. Lowering the threshold produces more positive predictions, so recall can only stay the same or increase; the trade-off is that false positives usually increase as well, which lowers precision.
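The trade-off can be seen by sweeping the threshold over a handful of hypothetical predicted probabilities and recomputing precision and recall at each setting:

```python
def precision_recall_at(y_true, scores, threshold):
    """Precision and recall when instances with score >= threshold are predicted positive."""
    tp = sum(1 for t, s in zip(y_true, scores) if t == 1 and s >= threshold)
    fp = sum(1 for t, s in zip(y_true, scores) if t == 0 and s >= threshold)
    fn = sum(1 for t, s in zip(y_true, scores) if t == 1 and s < threshold)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical labels and predicted probabilities from some classifier.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.45, 0.3, 0.6, 0.4, 0.35, 0.2, 0.1, 0.05]

for threshold in (0.5, 0.4, 0.3):
    p, r = precision_recall_at(y_true, scores, threshold)
    print(f"threshold={threshold}: precision={p:.2f} recall={r:.2f}")
```

Moving the threshold from 0.5 to 0.4 to 0.3 raises recall from 0.50 to 0.75 to 1.00 while precision falls from 0.67 to 0.60 to 0.57. Which point is "best" depends on the relative cost of misses and false alarms.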

8. How does cost-sensitive learning impact recall?

Cost-sensitive learning assigns different costs to different types of errors, allowing the model to prioritize minimizing the cost of false negatives. This can help improve recall by making the model more sensitive to positive instances.
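Cost-sensitive learning can be applied during training (for example, by weighting the loss on positive examples more heavily) or at decision time. The sketch below shows only the decision-time form, assuming the model outputs well-calibrated probabilities: it picks the threshold that minimizes expected misclassification cost for given, hypothetical, false-positive and false-negative costs.

```python
def cost_sensitive_threshold(cost_fp, cost_fn):
    """Probability threshold that minimizes expected misclassification cost.

    Predicting positive risks a false positive with probability (1 - p);
    predicting negative risks a false negative with probability p.
    Positive is the cheaper choice when p * cost_fn > (1 - p) * cost_fp,
    i.e. when p > cost_fp / (cost_fp + cost_fn).
    """
    return cost_fp / (cost_fp + cost_fn)


def predict_with_costs(probabilities, cost_fp, cost_fn):
    # Assumes calibrated probabilities; otherwise the derived threshold is off.
    threshold = cost_sensitive_threshold(cost_fp, cost_fn)
    return [1 if p > threshold else 0 for p in probabilities]


probs = [0.05, 0.15, 0.5, 0.8]  # hypothetical model outputs
print(cost_sensitive_threshold(1, 1))   # 0.5: equal costs
print(predict_with_costs(probs, 1, 1))  # [0, 0, 0, 1]
print(cost_sensitive_threshold(1, 9))   # 0.1: a miss costs 9x a false alarm
print(predict_with_costs(probs, 1, 9))  # [0, 1, 1, 1]
```

Making false negatives nine times as costly drops the threshold from 0.5 to 0.1, so the model flags far more instances as positive and recall rises accordingly.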

9. What are ensemble methods, and how can they improve recall?

Ensemble methods combine multiple models to improve overall performance. These methods can help improve recall by leveraging the strengths of different models and reducing the risk of overfitting.
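Majority voting is one of the simplest ensembles. In the hypothetical example below, three models each find only half of the positives, but because they miss different ones, a 2-of-3 vote finds three of the four and also filters out each model's lone false alarm:

```python
def majority_vote(*model_predictions):
    """Flag an instance as positive when more than half of the models do."""
    n_models = len(model_predictions)
    return [1 if sum(votes) * 2 > n_models else 0 for votes in zip(*model_predictions)]


def precision_recall(y_true, y_pred):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical labels and 0/1 predictions from three different models.
y_true  = [1, 1, 1, 1, 0, 0, 0, 0]
model_a = [1, 1, 0, 0, 1, 0, 0, 0]
model_b = [1, 0, 1, 0, 0, 1, 0, 0]
model_c = [0, 1, 1, 0, 0, 0, 1, 0]
ensemble = majority_vote(model_a, model_b, model_c)

for name, preds in [("a", model_a), ("b", model_b), ("c", model_c), ("vote", ensemble)]:
    p, r = precision_recall(y_true, preds)
    print(f"{name}: precision={p:.2f} recall={r:.2f}")
```

Each single model scores precision 0.67 and recall 0.50, while the vote reaches precision 1.00 and recall 0.75. This only works when the models' errors are not strongly correlated; three copies of the same model would gain nothing.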

10. How can I monitor the performance of my model over time to ensure high recall?

You can monitor model performance by tracking recall, precision, and other metrics over time. Use automated monitoring tools to detect any degradation in performance and retrain the model with new data to adapt to changing patterns and maintain high recall.
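A rolling-window check is one simple way to implement this kind of monitoring. The sketch assumes that ground-truth labels eventually arrive for production predictions; the class name, window size, and alert threshold are hypothetical, and wiring the alert into an actual monitoring or retraining pipeline is left out.

```python
from collections import deque


class RecallMonitor:
    """Track recall over the most recent actual positives and flag degradation.

    window: how many recent actual-positive cases to keep.
    min_recall: raise a flag when rolling recall drops below this value.
    Both values are illustrative assumptions, not recommendations.
    """

    def __init__(self, window=100, min_recall=0.8):
        self.hits = deque(maxlen=window)  # 1 if a true positive was caught, else 0
        self.min_recall = min_recall

    def update(self, y_true, y_pred):
        # Recall depends only on actual positives, so negatives are skipped.
        if y_true == 1:
            self.hits.append(1 if y_pred == 1 else 0)

    def recall(self):
        return sum(self.hits) / len(self.hits) if self.hits else None

    def needs_attention(self):
        r = self.recall()
        return r is not None and r < self.min_recall


monitor = RecallMonitor(window=5, min_recall=0.8)
for label, prediction in [(1, 1), (1, 1), (0, 1), (1, 1), (1, 0), (1, 0)]:
    monitor.update(label, prediction)
print(monitor.recall(), monitor.needs_attention())  # 0.6 True
```

Because only actual positives enter the window, a rare positive class means the window fills slowly; the window size trades responsiveness against noise in the estimate.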

Conclusion

Understanding what recall is in machine learning and how to optimize it is crucial for building effective and reliable models, especially when the cost of missing positive instances is high. By mastering the concepts, techniques, and strategies discussed in this guide, you can significantly improve the performance of your models and achieve better outcomes in various real-world applications. At LEARNS.EDU.VN, we are committed to providing you with the resources and support you need to succeed in the field of machine learning.

Ready to delve deeper into the world of machine learning and master essential metrics like recall? Visit LEARNS.EDU.VN today to explore our comprehensive courses, detailed guides, and hands-on projects. Whether you’re looking to enhance your skills in data balancing, threshold optimization, or advanced techniques like deep learning, our platform offers the expertise and resources you need. Don’t miss out on the opportunity to transform your understanding and build more effective, reliable models. Start your learning journey with LEARNS.EDU.VN now!

For further information, contact us at:

Address: 123 Education Way, Learnville, CA 90210, United States

WhatsApp: +1 555-555-1212

Website: learns.edu.vn