Reinforcement Learning (RL) is a machine learning technique in which an AI agent learns how to behave by interacting with an environment. Through trial and error, the agent learns to make decisions that maximize cumulative reward, loosely mirroring how humans learn from experience. This article covers the core concepts of RL, how it works, where it is applied, and its advantages and disadvantages.

Reinforcement Learning centers around the interaction between an agent and its environment. The agent seeks to achieve a specific goal by performing actions and receiving feedback in the form of rewards or penalties. This iterative process enables the agent to refine its decision-making strategy over time.

Key components of this interaction include:

- Agent: The learner or decision-maker that takes actions within the environment.

- Environment: The world or system the agent interacts with.

- State: The current situation or condition the agent finds itself in.

- Action: The possible choices or moves the agent can make.

- Reward: A numerical feedback signal from the environment, positive for desirable outcomes and negative for undesirable ones.

How Reinforcement Learning Works: A Deep Dive

The RL process is a continuous cycle of action, feedback, and adjustment. The agent takes an action based on its current state, the environment responds with a new state and a reward, and the agent updates its strategy accordingly. This loop repeats as the agent moves toward an optimal policy: a mapping from states to actions that maximizes long-term reward.
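The cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `CorridorEnv` class, its reward values, and the `run_episode` helper are hypothetical names invented for this example, and the policy shown here acts at random rather than learning.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..length-1, goal in the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        """Put the agent back at the start and return the initial state."""
        self.state = 0
        return self.state

    def step(self, action):
        """Apply an action (-1 = left, +1 = right); return (state, reward, done)."""
        # The corridor walls clamp the position to the valid range.
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        # Assumed reward scheme: small cost per step, bonus at the goal.
        reward = 1.0 if done else -0.1
        return self.state, reward, done


def random_policy(state, rng):
    """A policy that ignores the state and picks a direction at random."""
    return rng.choice([-1, 1])


def run_episode(env, policy, rng, max_steps=100):
    """Run one episode of the agent-environment loop and record each transition."""
    state = env.reset()
    total_reward = 0.0
    trajectory = []
    for _ in range(max_steps):
        action = policy(state, rng)                  # agent acts
        next_state, reward, done = env.step(action)  # environment responds
        trajectory.append((state, action, reward, next_state))
        total_reward += reward
        # A learning agent would update its strategy here using the transition.
        state = next_state
        if done:
            break
    return total_reward, trajectory


if __name__ == "__main__":
    total, steps = run_episode(CorridorEnv(), random_policy, random.Random(0))
    print(f"episode finished after {len(steps)} steps, total reward {total:.1f}")
```

The recorded `(state, action, reward, next_state)` tuples are the raw experience that a learning algorithm would consume to adjust the policy.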

Crucial elements within this cycle are:

- Policy: The agent’s strategy for selecting actions based on the current state.

- Reward Function: Defines the feedback the agent receives for each action in each state, guiding it towards the desired goal.

- Value Function: Estimates the long-term cumulative reward the agent can expect from a given state when it follows its current policy.

- Model of the Environment (Optional): A representation that lets the agent predict future states and rewards and plan ahead. Model-free methods learn without one.
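To make "long-term cumulative reward" concrete, the sketch below computes a discounted return and averages it over sampled episodes to estimate the value of a start state. The discount factor `gamma` is an assumption introduced here (the text above does not name one), and both function names are hypothetical.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum rewards r_0 + gamma*r_1 + gamma^2*r_2 + ... for one episode.

    gamma (assumed, between 0 and 1) makes later rewards count less.
    """
    total = 0.0
    # Work backwards so each step is a single multiply-and-add.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def estimate_state_value(episodes, gamma=0.99):
    """Monte Carlo estimate of a start state's value.

    `episodes` is a list of reward sequences, each collected by running
    the same policy from the same start state.
    """
    returns = [discounted_return(rewards, gamma) for rewards in episodes]
    return sum(returns) / len(returns)


if __name__ == "__main__":
    sampled = [[1, 1, 1], [0, 0, 4]]
    print(estimate_state_value(sampled, gamma=0.5))
```

Averaging sampled returns is only one way to estimate a value function; many algorithms instead update the estimate after every step.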

Illustrative Example: Navigating a Maze

Imagine a robot learning to navigate a maze to find a treasure while avoiding obstacles.

- Goal: Reach the treasure.

- Actions: Move up, down, left, or right.

- Rewards: Positive reward for reaching the treasure, negative reward for hitting an obstacle.

The robot initially explores the maze randomly. Through trial and error, it learns which actions lead to positive rewards and avoids actions that result in penalties. Over time, the robot develops an optimal path to the treasure, maximizing its cumulative reward. This learning process involves:

- Exploration: Initially, the robot tries different paths randomly.

- Feedback: The robot receives rewards for progress and penalties for errors.

- Behavior Adjustment: It modifies its strategy based on that feedback.

- Optimal Path Discovery: It eventually learns the best path to the treasure.
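One common way to implement this kind of learning is tabular Q-learning with epsilon-greedy exploration; the article does not name a specific algorithm, so treat the choice as an assumption. The maze layout, the reward values, the small per-step cost, the penalty for walking off the grid, and the hyperparameters `alpha`, `gamma`, and `epsilon` below are all illustrative.

```python
import random

# Hypothetical 4x4 maze: S = start, T = treasure, X = obstacle, . = free cell
MAZE = [
    "S..X",
    ".X..",
    "...X",
    "X..T",
]
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
START = (0, 0)


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    d_row, d_col = ACTIONS[action]
    row, col = state[0] + d_row, state[1] + d_col
    inside = 0 <= row < len(MAZE) and 0 <= col < len(MAZE[0])
    # Obstacles (and, by assumption, the grid edge) give a penalty; the robot stays put.
    if not inside or MAZE[row][col] == "X":
        return state, -1.0, False
    if MAZE[row][col] == "T":
        return (row, col), 10.0, True
    return (row, col), -0.1, False  # assumed small step cost favors short paths


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Learn a table of action values with Q-learning."""
    rng = random.Random(seed)
    q = {}  # (state, action) -> estimated value; missing entries count as 0.0
    for _ in range(episodes):
        state = START
        for _ in range(200):  # cap on episode length
            # Exploration: occasionally try a random action.
            if rng.random() < epsilon:
                action = rng.choice(list(ACTIONS))
            else:
                action = max(ACTIONS, key=lambda a: q.get((state, a), 0.0))
            next_state, reward, done = step(state, action)
            # Behavior adjustment: move the estimate toward reward + discounted best next value.
            best_next = 0.0 if done else max(q.get((next_state, a), 0.0) for a in ACTIONS)
            old = q.get((state, action), 0.0)
            q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
            state = next_state
            if done:
                break
    return q


def greedy_path(q, max_steps=50):
    """Follow the highest-valued action from the start; return the visited cells."""
    state, path = START, [START]
    for _ in range(max_steps):
        action = max(ACTIONS, key=lambda a: q.get((state, a), 0.0))
        state, _, done = step(state, action)
        path.append(state)
        if done:
            break
    return path


if __name__ == "__main__":
    print(greedy_path(train()))
```

The random action taken with probability `epsilon` corresponds to exploration, the table update to behavior adjustment, and `greedy_path` reads off the path the robot has settled on after training.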

Types of Reinforcement in RL

Borrowing terms from behavioral psychology, two primary types of reinforcement shape agent behavior:

1. Positive Reinforcement

Positive reinforcement strengthens a behavior by providing a positive reward when the desired action is performed. This encourages the agent to repeat the action in similar situations. For example, the robot might receive a point each time it moves closer to the treasure.

2. Negative Reinforcement

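Negative reinforcement strengthens a behavior by removing an undesirable stimulus when the desired action is performed. This encourages the agent to repeat the action to avoid the negative stimulus. For example, an obstacle might be removed from the robot’s path when it makes a correct turn. Note that negative reinforcement is not the same as punishment: it still makes the behavior more likely, whereas a penalty makes it less likely. In most RL algorithms, both kinds of reinforcement are expressed through the same numerical reward signal.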

CartPole: A Classic RL Problem

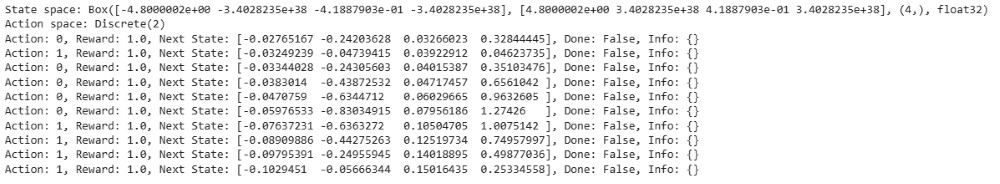

The CartPole environment in OpenAI Gym (now maintained as Gymnasium) is a standard benchmark problem for testing RL algorithms. The goal is to keep a pole balanced upright on a moving cart by applying force to the cart. The agent learns to control the cart’s movement so that the pole does not fall over.

- State Space: Cart position, cart velocity, pole angle, pole angular velocity.

- Action Space: Push cart left or right.

- Reward: +1 for each time step the pole remains balanced.
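The sketch below shows how an agent might interact with CartPole through the `gymnasium` package, a third-party library that succeeds OpenAI Gym and must be installed separately. The `heuristic_policy` function is a hand-written rule, not a learned policy, and is included only to illustrate the state and action spaces listed above; both function names are hypothetical.

```python
def heuristic_policy(observation):
    """Push the cart toward the side the pole is leaning.

    The observation holds cart position, cart velocity, pole angle,
    and pole angular velocity, in that order.
    """
    _cart_pos, _cart_vel, pole_angle, _pole_ang_vel = observation
    # Action 0 pushes the cart left, action 1 pushes it right.
    return 1 if pole_angle > 0 else 0


def run_episode(seed=0):
    """Run one CartPole episode and return the total reward collected."""
    import gymnasium as gym  # third-party dependency, imported only when needed

    env = gym.make("CartPole-v1")
    observation, _info = env.reset(seed=seed)
    total_reward, done = 0.0, False
    while not done:
        action = heuristic_policy(observation)
        observation, reward, terminated, truncated, _info = env.step(action)
        total_reward += reward  # +1 for every step the pole stays up
        done = terminated or truncated
    env.close()
    return total_reward


if __name__ == "__main__":
    print("total reward:", run_episode())
```

An RL algorithm would replace `heuristic_policy` with a policy that is updated from the observed rewards.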

Applications of Reinforcement Learning

RL finds applications in diverse fields, including:

- Robotics: Training robots for complex tasks like assembly and navigation.

- Game Playing: Developing AI agents that excel in games like chess and Go.

- Industrial Control: Optimizing processes in manufacturing and resource management.

- Personalized Learning: Creating adaptive educational systems tailored to individual learners.

Advantages of Reinforcement Learning

- Solving Complex Problems: Can handle sequential decision problems in complex, dynamic environments where hand-coded rules are impractical.

- Learning from Experience: Adapts and improves through trial and error.

- Direct Interaction: Learns by directly interacting with the environment.

- Handling Uncertainty: Can learn effective behavior in non-deterministic environments, where the same action does not always lead to the same outcome.

Disadvantages of Reinforcement Learning

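- Resource Intensive: Often requires a large number of interactions with the environment and substantial computational power.

- Reward Function Design: Performance depends heavily on a well-designed reward function; a poorly specified one can lead the agent to unintended behavior.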

- Debugging Challenges: Difficult to understand and debug agent behavior.

- Not for Simple Tasks: Overly complex for straightforward problems.

Conclusion

Reinforcement Learning enables AI agents to learn and adapt in complex environments, which makes it relevant to fields ranging from robotics to industrial control. While challenges such as resource cost, reward design, and debugging remain, the ability of RL to solve intricate problems and optimize decision-making makes it an important area of research and development in artificial intelligence.