Sparsity in machine learning is an approach to optimizing models and data representations by exploiting, or deliberately creating, values that are zero and can therefore be skipped or removed. At LEARNS.EDU.VN, we believe in empowering learners with cutting-edge knowledge, and sparsity is a vital concept for anyone delving into machine learning. By understanding and applying sparsity, you can make models smaller and faster, often with little loss in accuracy. Sparsity techniques are now widely used in modern AI development to speed up computation and reduce memory usage.

1. Understanding Sparsity in Machine Learning

Sparsity in machine learning refers to the characteristic of a dataset or model where a significant portion of the values are zero or negligibly small. This concept is fundamental in optimizing computational efficiency, reducing memory usage, and improving the interpretability of models. Machine learning experts leverage sparsity to simplify complex models, making them more manageable and faster to train and deploy. Sparsity plays a pivotal role in various applications, ranging from image recognition to natural language processing.

1.1. Defining Sparsity

Sparsity, at its core, implies that a large fraction of the elements in a matrix or vector are zero. In machine learning terms, this can apply to feature sets, model parameters, or even the data itself. For example, in a large dataset, if most features have zero values across numerous instances, that dataset is considered sparse. Similarly, a model is sparse if many of its weights or coefficients are zero. This characteristic allows for significant optimization: zero values contribute nothing to sums and products, so they do not need to be stored or multiplied.

Sparsity can be quantitatively measured by the sparsity ratio, which is the proportion of zero elements to the total number of elements in a dataset or model. A high sparsity ratio indicates that the dataset or model is highly sparse, making it suitable for sparsity-aware algorithms and techniques.
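As a minimal sketch of that definition, the snippet below computes the sparsity ratio of a NumPy array. The function name `sparsity_ratio`, the optional tolerance `tol`, and the small ratings matrix are illustrative choices, not part of any library.

```python
import numpy as np

def sparsity_ratio(values, tol=0.0):
    """Fraction of entries whose magnitude is <= tol (exact zeros when tol=0)."""
    arr = np.asarray(values)
    if arr.size == 0:
        raise ValueError("sparsity ratio is undefined for an empty array")
    return float(np.count_nonzero(np.abs(arr) <= tol)) / arr.size

# Hypothetical user-item ratings: 10 of the 12 entries are zero.
ratings = np.array([
    [5, 0, 0, 0],
    [0, 0, 3, 0],
    [0, 0, 0, 0],
])
print(f"sparsity ratio: {sparsity_ratio(ratings):.3f}")
```

Passing a small positive `tol` counts "negligibly small" values as zeros as well, which is useful for model weights that are close to zero but not exactly zero.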

1.2. Types of Sparsity

Understanding the different types of sparsity is crucial for applying the right optimization techniques. Sparsity can manifest in various forms, each with its implications for model training and performance:

- Data Sparsity: This occurs when a dataset contains a large number of zero values. For instance, in collaborative filtering, most users have interacted with only a small fraction of available items, resulting in a sparse interaction matrix. Similarly, in text analysis, the bag-of-words representation often leads to sparse feature vectors due to the vast vocabulary and limited occurrence of each word in a document.

- Model Sparsity: This refers to a model where a significant portion of its parameters (weights or coefficients) are zero. Model sparsity can be achieved through various regularization techniques or pruning methods. Sparse models are more interpretable, require less memory, and can generalize better to unseen data.

- Weight Sparsity: A specific type of model sparsity where individual weights in a neural network are zeroed out. This can be achieved through techniques like L1 regularization, which encourages weights to become exactly zero. Weight sparsity reduces the model’s complexity, mitigates overfitting, and accelerates inference by skipping computations involving zero weights.

- Activation Sparsity: In neural networks, activation sparsity refers to a situation where a large number of neurons have zero activations for a given input. This can be caused by the ReLU (Rectified Linear Unit) activation function, which outputs zero for negative inputs. Activation sparsity can lead to more efficient computation, as only a subset of neurons is active for each input.
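To make activation sparsity concrete, here is a small sketch with a single ReLU layer in NumPy. The layer sizes and random weights are made up for illustration; in a trained network the fraction of zero activations depends on the data and the learned weights.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16))     # batch of 8 inputs with 16 features
W = rng.standard_normal((16, 32))    # hypothetical layer weights

pre_activations = x @ W
activations = np.maximum(pre_activations, 0.0)   # ReLU zeroes every negative value

activation_sparsity = float(np.mean(activations == 0.0))
print(f"fraction of zero activations: {activation_sparsity:.2f}")
```

With symmetric random weights roughly half of the activations come out as zero, which is the portion a sparsity-aware implementation could skip.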

1.3. Benefits of Sparsity

Sparsity offers several significant advantages in machine learning, making it a valuable concept to understand and implement:

- Reduced Memory Usage: Sparse models and datasets require significantly less memory to store. By eliminating zero values, the memory footprint is reduced, making it feasible to handle large-scale datasets and deploy models on resource-constrained devices.

- Faster Computation: Sparse matrix operations can be performed more efficiently than dense matrix operations. By skipping computations involving zero values, the computational workload is reduced, leading to faster training and inference times.

- Improved Model Interpretability: Sparse models are often more interpretable than dense models. By selecting a subset of relevant features or parameters, sparsity can reveal the most important factors influencing the model’s predictions, providing insights into the underlying data patterns.

- Mitigation of Overfitting: Sparsity can act as a form of regularization, preventing overfitting by reducing model complexity. By encouraging models to have fewer non-zero parameters, sparsity promotes generalization to unseen data, improving the model’s robustness and reliability.

- Enhanced Feature Selection: Sparsity techniques can be used to automatically select the most relevant features from a high-dimensional dataset. By driving the coefficients of irrelevant features to zero, sparsity algorithms identify the features that contribute most to the model’s performance, simplifying the model and improving its predictive accuracy.

Sparsity is particularly useful in scenarios such as:

- Natural Language Processing (NLP): In NLP, text data is often represented as sparse matrices due to the high dimensionality of the vocabulary.

- Recommender Systems: User-item interaction data is inherently sparse, as users typically interact with only a small subset of available items.

- Image Processing: Certain image transformations and feature extraction techniques can result in sparse representations of images.

1.4. The Jenga Analogy

Imagine playing Jenga. The tower is built with wooden blocks stacked in layers, and each player must remove one block without toppling the tower. This is similar to how sparsity works in machine learning. The “tower” is a neural network, and the “blocks” are the parameters.

In the initial stages of training a neural network, many parameters may seem essential. However, some of these parameters are less critical than others. Sparsity techniques allow us to carefully remove these non-essential parameters (the equivalent of pulling blocks from the Jenga tower) without significantly affecting the network’s performance (keeping the tower standing).

The goal is to create a leaner, more efficient network by eliminating redundancy, which not only speeds up computation but also reduces memory usage. Just as in Jenga, if you remove too many critical blocks, the tower collapses; similarly, in machine learning, excessive pruning can lead to a significant loss in accuracy. The art lies in finding the right balance to optimize the network without compromising its effectiveness.

2. Techniques for Achieving Sparsity

Several methods can be employed to induce sparsity in machine learning models and datasets. These techniques range from regularization methods to pruning strategies and specialized algorithms designed to handle sparse data efficiently. Understanding these techniques is crucial for leveraging the benefits of sparsity in various machine learning tasks.

2.1. Regularization Techniques

Regularization is a fundamental approach to inducing sparsity in machine learning models. By adding a penalty term to the loss function, regularization encourages the model to have smaller weights, and sparsity-inducing penalties such as L1 can drive some of them exactly to zero (an L2 penalty alone shrinks weights but rarely zeroes them). The two most common regularization techniques for achieving sparsity are L1 regularization and Elastic Net regularization.

2.1.1. L1 Regularization (Lasso)

L1 regularization, also known as Lasso (Least Absolute Shrinkage and Selection Operator), adds a penalty term proportional to the absolute value of the weights to the loss function. The L1 penalty has the effect of shrinking the weights towards zero, and in many cases, it forces some weights to become exactly zero.

Mathematically, the L1 regularization term is expressed as:

λ * Σ |wᵢ|

where λ is the regularization parameter that controls the strength of the penalty, and wᵢ represents the individual weights in the model.

The key advantage of L1 regularization is its ability to perform feature selection by driving the coefficients of irrelevant features to zero. This leads to sparse models that are more interpretable and less prone to overfitting. L1 regularization is particularly useful when dealing with high-dimensional datasets where only a subset of features is relevant.
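The feature-selection effect can be seen in a small experiment. The sketch below fits a Lasso model with plain coordinate descent in NumPy, assuming the objective (1/2n)·‖y − Xw‖² + λ·Σ|wᵢ|. The helper names, the synthetic data, and the value λ = 0.1 are illustrative assumptions; in practice you would typically reach for a library implementation such as scikit-learn's `Lasso`.

```python
import numpy as np

def soft_threshold(value, threshold):
    """Shrink a scalar toward zero; anything within +/- threshold becomes 0."""
    return np.sign(value) * max(abs(value) - threshold, 0.0)

def lasso_coordinate_descent(X, y, lam, n_iters=200):
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    col_sq = (X ** 2).sum(axis=0) / n_samples
    for _ in range(n_iters):
        for j in range(n_features):
            # Residual with feature j's current contribution added back.
            residual = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ residual / n_samples
            w[j] = soft_threshold(rho, lam) / col_sq[j]
    return w

# Synthetic data: only features 0 and 3 actually influence y.
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 10))
true_w = np.zeros(10)
true_w[[0, 3]] = [2.0, -3.0]
y = X @ true_w + 0.1 * rng.standard_normal(200)

w = lasso_coordinate_descent(X, y, lam=0.1)
print(np.round(w, 2))
print("non-zero coefficients:", np.flatnonzero(w))
```

The coefficients of the irrelevant features are set exactly to zero by the soft-threshold step, while the two relevant coefficients are kept (slightly shrunk toward zero by the penalty).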

2.1.2. Elastic Net Regularization

Elastic Net regularization combines L1 and L2 regularization to provide a balance between feature selection and weight shrinkage. It adds a penalty term that is a linear combination of the L1 and L2 penalties.

The Elastic Net regularization term is expressed as:

λ₁ * Σ |wᵢ| + λ₂ * Σ wᵢ²

where λ₁ and λ₂ are the regularization parameters that control the strength of the L1 and L2 penalties, respectively.

Elastic Net regularization is particularly useful when dealing with datasets that have highly correlated features. In such cases, L1 regularization may arbitrarily select one feature over another, while Elastic Net tends to select groups of correlated features. This can lead to more stable and interpretable models.
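As a quick worked example of the two penalty terms, the sketch below evaluates them for a small weight vector. The function names and the values of λ, λ₁, and λ₂ are arbitrary choices for illustration.

```python
import numpy as np

def l1_penalty(weights, lam):
    """lam * sum(|w_i|)"""
    return lam * np.sum(np.abs(weights))

def elastic_net_penalty(weights, lam1, lam2):
    """lam1 * sum(|w_i|) + lam2 * sum(w_i^2)"""
    return lam1 * np.sum(np.abs(weights)) + lam2 * np.sum(weights ** 2)

w = np.array([0.5, -2.0, 0.0, 1.5])
# sum(|w|) = 4.0 and sum(w^2) = 6.5 for this vector.
print(l1_penalty(w, lam=0.1))                        # 0.1 * 4.0
print(elastic_net_penalty(w, lam1=0.1, lam2=0.01))   # 0.1 * 4.0 + 0.01 * 6.5
```

Note that libraries may parameterize the same penalty differently. scikit-learn's `ElasticNet`, for instance, takes a single strength `alpha` and a mixing ratio `l1_ratio` rather than separate λ₁ and λ₂, so values do not carry over without conversion.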

2.2. Pruning Techniques

Pruning is a technique that involves removing connections or parameters from a neural network. By eliminating unnecessary connections, pruning reduces the model’s complexity, improves its efficiency, and induces sparsity. Pruning can be performed either during or after training.

2.2.1. Weight Pruning

Weight pruning is a technique that involves removing individual weights from a neural network based on their magnitude or importance. The goal is to identify and eliminate the weights that have the least impact on the model’s performance.

Weight pruning can be performed in several ways:

- Magnitude-based Pruning: This involves removing the weights with the smallest absolute values. The assumption is that weights with small magnitudes contribute less to the model’s predictions and can be safely removed.

- Sensitivity-based Pruning: This involves estimating the sensitivity of the model’s performance to the removal of each weight. Weights with low sensitivity are considered less important and are pruned.

- Iterative Pruning: This involves iteratively pruning the network and retraining it to recover any lost accuracy. The process is repeated until the desired level of sparsity is achieved.

Weight pruning can significantly reduce the size and computational cost of neural networks, often with little loss in accuracy when it is combined with retraining. However, it can also lead to irregular sparsity patterns, which may not be well-suited for certain hardware architectures.
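Magnitude-based pruning is the simplest of these to sketch. The function below zeroes the smallest-magnitude fraction of a weight array and returns the mask that was applied. The name `magnitude_prune` and the random weight matrix are illustrative, and a real workflow would retrain after pruning.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    flat = np.abs(weights).ravel()
    n_prune = int(sparsity * flat.size)
    if n_prune == 0:
        return weights.copy(), np.ones_like(weights, dtype=bool)
    # Indices of the n_prune smallest-magnitude weights.
    prune_idx = np.argpartition(flat, n_prune - 1)[:n_prune]
    mask = np.ones(flat.size, dtype=bool)
    mask[prune_idx] = False
    mask = mask.reshape(weights.shape)
    return weights * mask, mask

rng = np.random.default_rng(0)
W = rng.standard_normal((4, 6))
pruned, mask = magnitude_prune(W, sparsity=0.5)
print("zeros after pruning:", int((pruned == 0).sum()), "of", pruned.size)
```

Keeping the mask around matters for iterative pruning: during retraining it can be reapplied after every update so that pruned weights stay at zero.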

2.2.2. Connection Pruning

Connection pruning involves removing entire connections between neurons in a neural network. This can be achieved by setting the weights of the connections to zero or by physically removing the connections from the network architecture.

Connection pruning can lead to more structured sparsity patterns than weight pruning, which can be beneficial for certain hardware architectures. However, it may also be more difficult to implement, as it requires modifying the network architecture.

2.2.3. Neuron Pruning

Neuron pruning involves removing entire neurons from a neural network. This can be achieved by setting the outputs of the neurons to zero or by physically removing the neurons from the network architecture.

Neuron pruning can lead to more significant reductions in model size and computational cost than weight or connection pruning. However, it may also be more likely to impact the model’s accuracy, as it involves removing entire computational units.
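The sketch below shows what "physically removing" neurons can look like for a two-layer network stored as NumPy arrays: a hidden neuron is removed by deleting its column in the first weight matrix, its bias, and its row in the second weight matrix. Scoring neurons by the norm of their outgoing weights is an assumed heuristic for illustration, and all names are hypothetical.

```python
import numpy as np

def prune_neurons(W1, b1, W2, n_keep):
    """Keep the n_keep hidden neurons with the largest outgoing-weight norm."""
    scores = np.linalg.norm(W2, axis=1)
    keep = np.sort(np.argsort(scores)[-n_keep:])
    return W1[:, keep], b1[keep], W2[keep, :], keep

def forward(x, W1, b1, W2):
    return np.maximum(x @ W1 + b1, 0.0) @ W2

rng = np.random.default_rng(0)
W1 = rng.standard_normal((5, 8))     # input -> hidden
b1 = rng.standard_normal(8)
W2 = rng.standard_normal((8, 3))     # hidden -> output
W2[[1, 4, 6], :] = 0.0               # pretend three neurons ended up with no outgoing signal

W1_p, b1_p, W2_p, kept = prune_neurons(W1, b1, W2, n_keep=5)
x = rng.standard_normal((2, 5))
print("kept neurons:", kept)
print("same output:", np.allclose(forward(x, W1, b1, W2), forward(x, W1_p, b1_p, W2_p)))
```

Because the result is a smaller dense network, it runs faster on any hardware without sparse-matrix support. Here the removed neurons contributed nothing, so the output is unchanged; removing neurons that do contribute would change the output and usually calls for retraining.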

2.3. Sparse Coding

Sparse coding is a technique that aims to represent data using a sparse set of basis vectors. The goal is to find a set of basis vectors that can efficiently represent the data with as few non-zero coefficients as possible.

Sparse coding can be used for a variety of tasks, including:

- Feature Extraction: Sparse coding can be used to extract a sparse set of features from high-dimensional data. These features can then be used as input to other machine learning models.

- Dimensionality Reduction: Sparse coding can be used to reduce the dimensionality of data while preserving its essential information.

- Data Compression: Sparse coding can be used to compress data by representing it using a sparse set of coefficients.

Sparse coding algorithms typically involve solving an optimization problem that minimizes the reconstruction error while encouraging sparsity in the coefficients.
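One common way to solve that optimization problem for a fixed dictionary is the iterative shrinkage-thresholding algorithm (ISTA). The sketch below assumes the objective ½·‖x − D·a‖² + λ·‖a‖₁, a random dictionary `D` with unit-norm columns, and λ = 0.05; these are illustrative choices, and learning the dictionary itself is not shown.

```python
import numpy as np

def soft_threshold(v, t):
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def sparse_code_ista(x, D, lam, n_iters=500):
    """Minimize 0.5 * ||x - D a||^2 + lam * ||a||_1 over the coefficients a."""
    L = np.linalg.norm(D, 2) ** 2          # Lipschitz constant of the gradient
    a = np.zeros(D.shape[1])
    for _ in range(n_iters):
        grad = D.T @ (D @ a - x)           # gradient of the reconstruction term
        a = soft_threshold(a - grad / L, lam / L)
    return a

rng = np.random.default_rng(0)
D = rng.standard_normal((20, 50))          # 50 basis vectors in 20 dimensions
D /= np.linalg.norm(D, axis=0)
a_true = np.zeros(50)
a_true[[3, 17, 41]] = [1.5, -2.0, 1.0]
x = D @ a_true                             # signal built from 3 basis vectors

a = sparse_code_ista(x, D, lam=0.05)
print("non-zero coefficients:", np.count_nonzero(a), "of", a.size)
print("reconstruction error:", float(np.linalg.norm(x - D @ a)))
```

Raising λ trades reconstruction accuracy for fewer non-zero coefficients, which is the balance the optimization problem describes.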

2.4. Thresholding Techniques

Thresholding techniques involve setting all values below a certain threshold to zero. This can be used to induce sparsity in datasets or model parameters.

Thresholding can be performed in several ways:

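- Hard Thresholding: This involves setting all values whose magnitude (absolute value) is below the threshold to zero and leaving the remaining values unchanged.

- Soft Thresholding: This involves shrinking every value towards zero by the threshold amount, so values whose magnitude is below the threshold become exactly zero and larger values are reduced in magnitude.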

- Adaptive Thresholding: This involves adjusting the threshold based on the distribution of the data or model parameters.

Thresholding techniques are simple and easy to implement, but they can also be sensitive to the choice of threshold.
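The difference between hard and soft thresholding is easiest to see side by side. This sketch applies both to the same vector, comparing magnitudes (absolute values) against the threshold; the function names and sample values are illustrative.

```python
import numpy as np

def hard_threshold(values, t):
    """Zero entries with |v| <= t and keep the rest unchanged."""
    values = np.asarray(values, dtype=float)
    return np.where(np.abs(values) > t, values, 0.0)

def soft_threshold(values, t):
    """Shrink every entry toward zero by t; entries with |v| <= t become zero."""
    values = np.asarray(values, dtype=float)
    return np.sign(values) * np.maximum(np.abs(values) - t, 0.0)

v = np.array([-3.0, -0.4, 0.1, 0.5, 2.0])
print(hard_threshold(v, 0.5))   # survivors keep their values: -3.0 and 2.0
print(soft_threshold(v, 0.5))   # survivors are shrunk: -2.5 and 1.5
```

Both produce the same pattern of zeros here, but soft thresholding also biases the surviving values toward zero, which is the same shrinkage effect L1 regularization has on weights.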

2.5. Specialized Algorithms

Several specialized algorithms are designed to handle sparse data efficiently. These algorithms take advantage of the sparsity structure to reduce memory usage and computational cost.

Examples of specialized algorithms for sparse data include:

- Sparse Matrix Factorization: This involves decomposing a sparse matrix into a product of two or more dense matrices.

- Sparse Linear Regression: This involves fitting a linear regression model to sparse data using specialized algorithms that take advantage of the sparsity structure.

- Sparse Support Vector Machines (SVMs): This involves training an SVM model on sparse data using specialized algorithms that take advantage of the sparsity structure.

These specialized algorithms can significantly improve the performance of machine learning models on sparse data.

3. Applications of Sparsity in Machine Learning

Sparsity is a versatile concept with applications across various domains within machine learning. Its ability to reduce complexity, improve efficiency, and enhance interpretability makes it invaluable in tackling diverse challenges. Here are some key areas where sparsity plays a pivotal role:

3.1. Natural Language Processing (NLP)

In NLP, sparsity is particularly useful due to the high dimensionality of text data. Text data is often represented using techniques like bag-of-words or TF-IDF, which create a feature for each unique word in the vocabulary. This results in very large feature vectors where most elements are zero, as each document contains only a small fraction of the total vocabulary.

Sparsity techniques in NLP enable:

- Efficient Text Representation: By using sparse matrices to represent text data, memory usage is significantly reduced, allowing for the processing of large text corpora.

- Feature Selection: L1 regularization can be used to identify the most relevant words for a particular task, such as sentiment analysis or text classification.

- Model Compression: Pruning techniques can be applied to reduce the size of NLP models, such as language models or machine translation systems, without significantly impacting their performance.

For example, in sentiment analysis, a sparse model can focus on the words that are most indicative of positive or negative sentiment, ignoring the vast majority of words that are neutral or irrelevant.
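To see why bag-of-words features are sparse, the following sketch builds the representation by hand for three toy documents and stores only the non-zero counts. The documents and variable names are made up; real corpora have vocabularies of tens of thousands of words, which makes the effect far stronger.

```python
docs = [
    "the movie was great",
    "the plot was dull and the acting was worse",
    "great acting great plot",
]
vocab = sorted({word for doc in docs for word in doc.split()})
index = {word: i for i, word in enumerate(vocab)}

# Sparse bag-of-words: per document, store only {column index: count}.
sparse_rows = []
for doc in docs:
    counts = {}
    for word in doc.split():
        counts[index[word]] = counts.get(index[word], 0) + 1
    sparse_rows.append(counts)

n_stored = sum(len(row) for row in sparse_rows)
n_total = len(docs) * len(vocab)
print(f"vocabulary size: {len(vocab)}")
print(f"stored entries: {n_stored} of {n_total}")
print(f"sparsity ratio: {1 - n_stored / n_total:.2f}")
```

Even with three short sentences, about half of the document-term matrix is zero; the dictionary-per-row layout never stores those zeros.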

3.2. Recommender Systems

Recommender systems often deal with sparse data, as users typically interact with only a small subset of the available items. The user-item interaction matrix is typically very sparse, with most entries being zero, indicating that the user has not interacted with the item.

Sparsity techniques in recommender systems enable:

- Efficient Collaborative Filtering: By using sparse matrix factorization techniques, recommender systems can efficiently predict user preferences based on the interactions of similar users.

- Cold Start Problem Mitigation: Sparsity can help address the cold start problem, where new users or items have very few interactions. By leveraging side information and regularization techniques, recommender systems can make accurate predictions even with limited data.

- Scalability: Sparsity allows recommender systems to scale to large user and item populations, as the memory and computational requirements are reduced.

For example, in a movie recommender system, sparsity can help predict which movies a user will enjoy based on the ratings of other users with similar tastes, even if the user has only rated a few movies.
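A minimal sketch of that idea is matrix factorization trained only on the observed ratings, so the many missing entries are never touched. The tiny ratings matrix, the rank, and the learning-rate and regularization values below are assumptions chosen for illustration, and zeros in `R` are treated as "not rated".

```python
import numpy as np

def factorize(ratings, observed, rank=2, lr=0.02, reg=0.02, n_epochs=1000, seed=0):
    """Learn user factors U and item factors V with SGD over observed entries only."""
    rng = np.random.default_rng(seed)
    n_users, n_items = ratings.shape
    U = 0.1 * rng.standard_normal((n_users, rank))
    V = 0.1 * rng.standard_normal((n_items, rank))
    rows, cols = np.nonzero(observed)
    for _ in range(n_epochs):
        for u, i in zip(rows, cols):
            err = ratings[u, i] - U[u] @ V[i]
            u_old = U[u].copy()
            U[u] += lr * (err * V[i] - reg * U[u])
            V[i] += lr * (err * u_old - reg * V[i])
    return U, V

# Hypothetical 4 users x 4 movies; 0 means the user has not rated the movie.
R = np.array([
    [5, 4, 0, 1],
    [4, 0, 0, 1],
    [1, 1, 0, 5],
    [0, 1, 5, 4],
], dtype=float)
observed = R > 0

U, V = factorize(R, observed)
pred = U @ V.T
rmse = float(np.sqrt(np.mean((R[observed] - pred[observed]) ** 2)))
print(f"RMSE on observed ratings: {rmse:.3f}")
print(f"predicted rating for user 1, movie 1: {pred[1, 1]:.2f}")
```

The cost of each epoch scales with the number of observed ratings rather than with users × items, which is what makes this approach workable on very sparse interaction matrices.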

3.3. Image and Video Processing

In image and video processing, sparsity can be used to reduce the dimensionality of data, extract relevant features, and compress data for storage and transmission.

Sparsity techniques in image and video processing enable:

- Image Compression: Sparse coding and wavelet transforms can be used to represent images and videos using a sparse set of coefficients, allowing for efficient compression.

- Feature Extraction: Sparse autoencoders can be used to learn a sparse set of features from images and videos, which can then be used for tasks such as object recognition or image classification.

- Noise Reduction: Sparsity can be used to remove noise from images and videos by representing the data using a sparse set of basis vectors and then thresholding the coefficients.

For example, in image compression, sparsity can help reduce the file size of an image by representing it using a small number of non-zero coefficients in a wavelet basis.
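The same principle can be shown on a one-dimensional signal, standing in for a row of pixels. This sketch implements an orthonormal Haar wavelet transform by hand and counts the non-zero coefficients; the function names and the piecewise-constant test signal are illustrative, and real image codecs use 2-D transforms followed by quantization and entropy coding.

```python
import numpy as np

def haar_forward(signal):
    """Orthonormal multi-level Haar transform of a signal whose length is a power of two."""
    out = np.asarray(signal, dtype=float).copy()
    n = out.size
    while n > 1:
        pairs = out[:n].reshape(-1, 2)
        avg = (pairs[:, 0] + pairs[:, 1]) / np.sqrt(2)
        diff = (pairs[:, 0] - pairs[:, 1]) / np.sqrt(2)
        out[: n // 2], out[n // 2 : n] = avg, diff
        n //= 2
    return out

def haar_inverse(coeffs):
    out = np.asarray(coeffs, dtype=float).copy()
    n = 1
    while n < out.size:
        avg, diff = out[:n].copy(), out[n : 2 * n].copy()
        out[0 : 2 * n : 2] = (avg + diff) / np.sqrt(2)
        out[1 : 2 * n : 2] = (avg - diff) / np.sqrt(2)
        n *= 2
    return out

signal = np.repeat([2.0, 8.0, 3.0, 3.0], 4)      # 16 samples, piecewise constant
coeffs = haar_forward(signal)
significant = np.abs(coeffs) > 1e-9
print("non-zero wavelet coefficients:", int(significant.sum()), "of", coeffs.size)
print("exact reconstruction:", np.allclose(haar_inverse(coeffs), signal))
```

For natural images the small coefficients are rarely exactly zero, so compression schemes threshold them, accepting a small reconstruction error in exchange for a much sparser representation.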

3.4. Deep Learning

In deep learning, sparsity can be used to reduce the size and computational cost of neural networks, improve their generalization performance, and enhance their interpretability.

Sparsity techniques in deep learning enable:

- Model Compression: Pruning techniques can be used to remove unnecessary connections and neurons from neural networks, reducing their size and computational cost.

- Regularization: L1 regularization and other sparsity-inducing techniques can be used to prevent overfitting and improve the generalization performance of neural networks.

- Feature Selection: Sparsity can help identify the most important features for a particular task, allowing for more interpretable and efficient models.

For example, in a convolutional neural network (CNN) for image classification, sparsity can help identify the most important filters and channels, reducing the computational cost of the network and improving its robustness to noise.

3.5. Bioinformatics

In bioinformatics, sparsity is useful for analyzing high-dimensional data such as gene expression data and protein-protein interaction networks.

Sparsity techniques in bioinformatics enable:

- Gene Selection: L1 regularization can be used to identify the genes that are most relevant to a particular disease or biological process.

- Network Analysis: Sparse matrix factorization techniques can be used to analyze protein-protein interaction networks and identify key proteins and pathways.

- Data Integration: Sparsity can help integrate data from multiple sources, such as genomics, proteomics, and metabolomics, by identifying the most relevant features from each data source.

For example, in gene expression analysis, sparsity can help identify the genes that are differentially expressed between different disease subtypes, providing insights into the underlying biological mechanisms.

4. Sparsity in NVIDIA Ampere Architecture

The NVIDIA Ampere architecture introduces significant advancements in sparsity support, particularly with the third-generation Tensor Cores in NVIDIA A100 GPUs. These Tensor Cores are designed to take advantage of fine-grained sparsity in network weights, providing substantial performance improvements without sacrificing accuracy.

4.1. Fine-Grained Sparsity

The NVIDIA Ampere architecture employs structured sparsity with a fine-grained pruning technique. In NVIDIA’s published scheme this is a 2:4 pattern: at most two non-zero values in every group of four consecutive weights. Unlike coarse-grained pruning, which can cut entire channels from a neural network layer and often lowers accuracy, fine-grained pruning removes individual weights and typically does so without noticeably reducing accuracy. This is crucial for maintaining model performance while still achieving significant sparsity.

The fine-grained approach allows for a more nuanced optimization, ensuring that only the least impactful weights are removed. Users can validate this by retraining their models after pruning to confirm the accuracy is maintained.

4.2. Performance Gains

By using sparsity instead of dense math, the A100 GPU can run BERT (Bidirectional Encoder Representations from Transformers), a widely used model for natural-language processing, significantly faster. The NVIDIA Ampere architecture defines a method for training a neural network with half its weights removed, which is known as 50 percent sparsity.

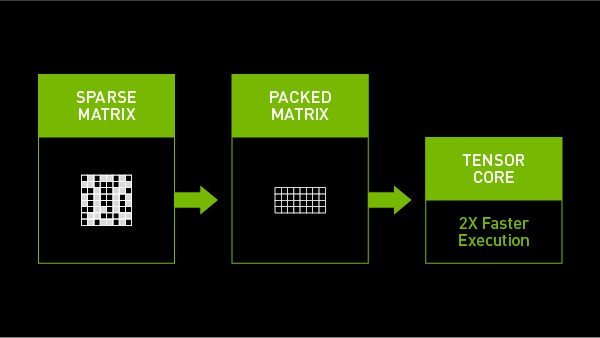

4.3. How It Works

Once a network is suitably pruned, the A100 GPU automates the rest of the work. Tensor Cores in the A100 GPU efficiently compress sparse matrices to enable the appropriate dense math. By skipping what are effectively zero-value locations in a matrix, computing is reduced, saving both power and time. Compressing sparse matrices also reduces the use of precious memory and bandwidth.

This streamlined process ensures that the benefits of sparsity are fully realized, leading to faster and more efficient AI computations.
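The compression step can be imitated on the CPU to show the idea. The sketch below assumes the 2:4 pattern NVIDIA documents for Ampere (two values kept in every group of four consecutive weights) and stores the kept values together with their positions inside each group. It is a NumPy illustration with hypothetical names, not the hardware format or an NVIDIA API.

```python
import numpy as np

def prune_2_of_4(weights):
    """Keep the 2 largest-magnitude values in every group of 4 along the last axis."""
    if weights.shape[-1] % 4 != 0:
        raise ValueError("last dimension must be a multiple of 4")
    groups = weights.reshape(-1, 4)
    # Positions (0..3) of the two largest magnitudes per group, in ascending order.
    keep = np.sort(np.argsort(np.abs(groups), axis=1)[:, 2:], axis=1)
    values = np.take_along_axis(groups, keep, axis=1)   # compressed values: half the size
    pruned = np.zeros_like(groups)
    np.put_along_axis(pruned, keep, values, axis=1)
    return pruned.reshape(weights.shape), values, keep

W = np.array([[0.9, -0.1, 0.05, -1.2, 0.3, 0.2, -0.8, 0.0]])
pruned, values, positions = prune_2_of_4(W)
print("pruned:   ", pruned)
print("values:   ", values.tolist())
print("positions:", positions.tolist())
```

The `values` array holds half as many numbers as the original matrix, and each position fits in two bits, which is where the memory and bandwidth savings come from. Because the pattern is regular, hardware can use the positions to pick the matching input elements and run a dense computation of half the size.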

5. Implementing Sparsity: A Step-by-Step Guide

Implementing sparsity in machine learning models involves several steps, from data preparation to model training and deployment. Here’s a comprehensive guide to help you get started:

5.1. Data Preparation

- Identify Sparse Features: Analyze your dataset to identify features with a high proportion of zero values. These features are good candidates for sparsity-aware techniques.

- Data Cleaning: Ensure that your data is clean and preprocessed. Handle missing values appropriately, either by imputing them or removing the corresponding instances.

- Feature Scaling: Scale your features to ensure that they have similar ranges. This is important for regularization techniques like L1 regularization, which can be sensitive to the scale of the features.

5.2. Model Selection

- Choose a Suitable Model: Select a machine learning model that is compatible with sparsity. Linear models, such as linear regression and logistic regression, are well-suited for sparsity due to their simplicity and interpretability. Deep learning models can also benefit from sparsity, but require more careful implementation.

- Consider Regularization: If you’re using a linear model, consider using L1 regularization (Lasso) or Elastic Net regularization to induce sparsity. These techniques automatically select the most relevant features and drive the coefficients of irrelevant features to zero.

- Explore Pruning Techniques: If you’re using a deep learning model, explore pruning techniques to reduce the size and computational cost of the network. Weight pruning, connection pruning, and neuron pruning are all viable options.

5.3. Model Training

- Set Regularization Parameters: If you’re using regularization, carefully tune the regularization parameters to achieve the desired level of sparsity. Use cross-validation to find the optimal values for the regularization parameters.

- Implement Pruning: If you’re using pruning, start by pruning a small percentage of the least important weights or connections. Retrain the model after each pruning step to recover any lost accuracy.

- Monitor Performance: Monitor the model’s performance during training to ensure that it is not overfitting or underfitting. Use metrics such as accuracy, precision, recall, and F1-score to evaluate the model’s performance.
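The prune, retrain, and monitor loop described above can be sketched on a small linear model. Everything here is an illustrative assumption: the synthetic data, the schedule of pruning four weights per round, and the use of training error as the monitored metric (a real project would monitor a held-out validation set).

```python
import numpy as np

def train(X, y, w, mask, lr=0.1, n_steps=200):
    """Gradient descent on mean squared error; the mask keeps pruned weights at zero."""
    for _ in range(n_steps):
        grad = X.T @ (X @ w - y) / len(y)
        w = (w - lr * grad) * mask
    return w

def mse(X, y, w):
    return float(np.mean((X @ w - y) ** 2))

rng = np.random.default_rng(0)
X = rng.standard_normal((300, 20))
true_w = np.zeros(20)
true_w[:4] = [3.0, -2.0, 1.5, 1.0]       # only the first four features matter
y = X @ true_w + 0.05 * rng.standard_normal(300)

mask = np.ones(20)
w = train(X, y, np.zeros(20), mask)      # dense baseline
for _ in range(4):
    # Prune the 4 smallest-magnitude weights that are still active, then retrain.
    active = np.flatnonzero(mask)
    smallest = active[np.argsort(np.abs(w[active]))[:4]]
    mask[smallest] = 0.0
    w = train(X, y, w * mask, mask)
    print(f"sparsity={1 - mask.mean():.2f}  mse={mse(X, y, w):.4f}")
```

In this toy problem the error stays flat as sparsity rises to 80 percent, because only redundant weights are removed. On a real model, the point where the monitored metric starts to degrade tells you when to stop pruning.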

5.4. Model Evaluation

- Evaluate Sparsity: Measure the sparsity of the trained model by calculating the proportion of zero weights or connections. A higher sparsity ratio means fewer parameters to store and compute, although the speedup you actually see depends on software and hardware support.

- Evaluate Performance: Evaluate the model’s performance on a held-out test set to ensure that it generalizes well to unseen data. Compare the performance of the sparse model to that of a dense model to assess the benefits of sparsity.

- Interpretability: If interpretability is important, analyze the non-zero weights or connections in the sparse model to gain insights into the most important features or relationships in the data.

5.5. Deployment

- Optimize for Sparsity: When deploying the sparse model, use specialized libraries or hardware that can take advantage of the sparsity structure. This can significantly improve the model’s performance and reduce its memory footprint.

- Quantization: Consider using quantization techniques to further reduce the model’s size and improve its performance. Quantization involves converting the model’s weights and activations to lower precision formats, such as 8-bit integers.

- Hardware Acceleration: If possible, deploy the sparse model on hardware with dedicated support for sparse computation, such as GPUs with sparsity-aware Tensor Cores or specialized AI accelerators.
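Quantization combines naturally with sparsity because an exact zero stays zero after conversion. The sketch below shows symmetric per-tensor 8-bit quantization of a magnitude-pruned weight matrix; the function names and the pruning cutoff of 1.0 are illustrative, and production toolchains typically use calibrated and often per-channel scales.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
W = rng.standard_normal((64, 64)).astype(np.float32)
W[np.abs(W) < 1.0] = 0.0                 # a sparse weight matrix (pruned by magnitude)

q, scale = quantize_int8(W)
W_hat = dequantize(q, scale)
print("bytes as float32:", W.nbytes, " bytes as int8:", q.nbytes)
print("max abs error:", float(np.max(np.abs(W - W_hat))))
print("zeros preserved:", bool(np.all((W == 0) == (q == 0))))
```

The 8-bit copy is a quarter of the size, the rounding error is bounded by half the scale, and the sparsity pattern is unchanged, so sparse storage formats still apply after quantization.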

5.6. Tools and Libraries

Several tools and libraries can help you implement sparsity in machine learning models:

- TensorFlow: TensorFlow provides built-in support for sparsity, including sparse tensors and sparse matrix operations.

- PyTorch: PyTorch also provides support for sparsity, including sparse tensors and pruning techniques.

- Scikit-learn: Scikit-learn provides implementations of L1 regularization (Lasso) and Elastic Net regularization.

- Sparse Matrices in SciPy: SciPy provides a variety of sparse matrix formats and algorithms for efficient storage and computation with sparse matrices.

By following these steps and using the appropriate tools and libraries, you can effectively implement sparsity in your machine learning models and reap the benefits of reduced memory usage, faster computation, and improved model interpretability.

6. Sparsity in Different Machine Learning Paradigms

Sparsity’s utility extends across various machine learning paradigms, each leveraging its benefits in unique ways. Understanding how sparsity is applied in these different contexts can help you tailor your approach for specific tasks and datasets.

6.1. Supervised Learning

In supervised learning, sparsity can be used for feature selection, model compression, and improved generalization.

- Feature Selection: L1 regularization is commonly used to select the most relevant features for a supervised learning task. By driving the coefficients of irrelevant features to zero, L1 regularization identifies the features that contribute most to the model’s performance.

- Model Compression: Pruning techniques can be used to reduce the size and computational cost of supervised learning models, such as decision trees and neural networks.

- Improved Generalization: Sparsity can help prevent overfitting by reducing the model’s complexity. This leads to better generalization performance on unseen data.

For example, in a classification task, sparsity can help identify the most important features for distinguishing between different classes, leading to a more accurate and interpretable model.

6.2. Unsupervised Learning

In unsupervised learning, sparsity can be used for dimensionality reduction, feature extraction, and data clustering.

- Dimensionality Reduction: Sparse coding and sparse autoencoders can be used to reduce the dimensionality of data while preserving its essential information.

- Feature Extraction: Sparse coding can be used to extract a sparse set of features from high-dimensional data. These features can then be used as input to other machine learning models.

- Data Clustering: Sparsity can help improve the performance of clustering algorithms by identifying the most relevant features for distinguishing between different clusters.

For example, in a clustering task, sparsity can help identify the features that are most discriminative between different clusters, leading to more accurate and meaningful clusters.

6.3. Reinforcement Learning

In reinforcement learning, sparsity can be used to reduce the size of the state space, improve exploration, and enhance the interpretability of policies.

- State Space Reduction: Sparsity can help reduce the size of the state space by identifying the most relevant features for decision-making.

- Improved Exploration: Sparsity can encourage exploration by promoting policies that focus on a small subset of actions.

- Policy Interpretability: Sparsity can enhance the interpretability of policies by identifying the most important states and actions for achieving a particular goal.

For example, in a robotics task, sparsity can help identify the most important sensors and actuators for controlling the robot, leading to a more efficient and robust control policy.

6.4. Graph Learning

In graph learning, sparsity can be used to reduce the density of graphs, improve the efficiency of graph algorithms, and enhance the interpretability of graph structures.

- Graph Sparsification: Sparsity can be used to reduce the density of graphs by removing less important edges.

- Efficient Graph Algorithms: Sparse graph algorithms can be used to efficiently process large graphs in which most pairs of nodes are not connected, so the adjacency matrix is mostly zeros.

- Graph Interpretability: Sparsity can enhance the interpretability of graph structures by identifying the most important nodes and edges.

For example, in a social network analysis task, sparsity can help identify the most influential users and the most important connections between them, leading to a better understanding of the network structure and dynamics.

7. Challenges and Considerations

While sparsity offers numerous benefits, it also presents certain challenges and considerations that must be addressed to ensure its successful implementation:

7.1. Information Loss

One of the primary concerns when implementing sparsity is the potential for information loss. Exact zeros carry no information, but when you zero out small values or remove parameters, you risk discarding information that may be relevant to the model’s performance.

To mitigate this risk:

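- Carefully Select Sparsity Techniques: Choose sparsity techniques that are appropriate for your data and task. L1 regularization, for example, shrinks weights gradually during training, which gives the model a chance to compensate, whereas hard thresholding applied after training discards values abruptly and is more likely to lose information.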

- Tune Sparsity Parameters: Carefully tune the sparsity parameters to find a balance between sparsity and accuracy. Use cross-validation to evaluate the model’s performance at different sparsity levels.

- Monitor Performance: Track the model’s performance on validation data as sparsity increases, using metrics such as accuracy, precision, recall, and F1-score, and stop pruning or relax the penalty once those metrics degrade beyond an acceptable margin.

7.2. Hardware Compatibility

Not all hardware is equally well-suited for sparse computations. General-purpose CPUs and GPUs are optimized for dense, regular workloads, so irregular (unstructured) sparsity does not automatically translate into faster execution.

To address this challenge:

- Use Specialized Libraries: Use specialized libraries, such as Intel MKL or cuSPARSE, that are optimized for sparse computations on CPUs and GPUs.

- Deploy on GPUs: Deploy sparse models on GPUs that offer hardware support for structured sparsity, such as the NVIDIA A100, and make sure the model’s sparsity pattern matches what the hardware accelerates.

- Consider Specialized Hardware: Consider using specialized hardware, such as AI accelerators, that are specifically designed for sparse computations.

7.3. Irregular Memory Access

Sparse matrices can lead to irregular memory access patterns, which can reduce the efficiency of computations.

To mitigate this issue:

- Use Appropriate Sparse Matrix Formats: Use sparse matrix formats, such as Compressed Sparse Row (CSR) or Compressed Sparse Column (CSC), that store non-zero values contiguously so that traversing a row (CSR) or a column (CSC) reads memory sequentially.

- Optimize Memory Layout: Optimize the memory layout of sparse matrices to improve memory access patterns.

- Use Cache-Aware Algorithms: Use cache-aware algorithms that are designed to take advantage of the cache hierarchy and minimize memory access latency.
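
To make the CSR format mentioned above concrete, here is a minimal sketch in plain Python. The function names (`dense_to_csr`, `csr_matvec`) and the example matrix are illustrative assumptions; production code would normally rely on an optimized sparse library rather than Python lists.

```python
def dense_to_csr(matrix):
    """Convert a dense matrix (list of rows) into CSR arrays.

    data    : non-zero values, stored row by row
    indices : column index of each value in `data`
    indptr  : indptr[r]..indptr[r + 1] is the slice of `data` for row r
    """
    data, indices, indptr = [], [], [0]
    for row in matrix:
        for col, value in enumerate(row):
            if value != 0:
                data.append(value)
                indices.append(col)
        indptr.append(len(data))
    return data, indices, indptr


def csr_matvec(data, indices, indptr, x):
    """Multiply a CSR matrix by a dense vector, touching only non-zeros."""
    y = []
    for row in range(len(indptr) - 1):
        start, end = indptr[row], indptr[row + 1]
        y.append(sum(data[k] * x[indices[k]] for k in range(start, end)))
    return y


# Hypothetical 4x4 matrix with four non-zero entries.
dense = [
    [5, 0, 0, 0],
    [0, 0, 3, 0],
    [0, 0, 0, 0],
    [1, 0, 0, 2],
]
data, indices, indptr = dense_to_csr(dense)
print(data, indices, indptr)  # [5, 3, 1, 2] [0, 2, 0, 3] [0, 1, 2, 2, 4]
print(csr_matvec(data, indices, indptr, [1, 2, 3, 4]))  # [5, 9, 0, 9]
```

The values of each row sit next to each other in `data`, so the inner loop reads them sequentially; only the lookups into `x` remain irregular.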

7.4. Training Instability

Sparsity can sometimes lead to training instability, particularly in deep learning models, for example when a large share of the weights is removed in a single step.

To address this challenge:

- Use Gradient Clipping: Use gradient clipping to prevent exploding gradients, which can occur when training sparse models.

- Use Batch Normalization: Use batch normalization to stabilize the training process and improve the model’s convergence.

- Use Learning Rate Scheduling: Use learning rate scheduling to gradually reduce the learning rate during training, which can help prevent oscillations and improve convergence.
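
Two of the measures above, gradient clipping and learning rate scheduling, can be sketched in a few lines. This is an illustrative sketch under stated assumptions: it clips by global L2 norm, uses a simple step-decay schedule, and applies a binary mask so that pruned weights stay at zero. The mask handling and all function names are assumptions made for this example, not a prescribed recipe.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their combined L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


def step_decay(base_lr, step, decay_every, factor=0.5):
    """Multiply the learning rate by `factor` every `decay_every` steps."""
    return base_lr * factor ** (step // decay_every)


def masked_sgd_step(weights, grads, mask, lr, max_norm):
    """One SGD step that keeps pruned weights (mask == 0) at zero."""
    masked = [g * m for g, m in zip(grads, mask)]      # ignore pruned weights
    clipped = clip_by_global_norm(masked, max_norm)    # bound the update size
    return [(w - lr * g) * m for w, g, m in zip(weights, clipped, mask)]


# Hypothetical values: the large gradient belongs to a pruned weight.
weights = [1.0, 0.0, -2.0]
grads = [3.0, 100.0, 4.0]
mask = [1, 0, 1]
lr = step_decay(base_lr=0.1, step=0, decay_every=10)
weights = masked_sgd_step(weights, grads, mask, lr, max_norm=1.0)
print(weights)
```

Masking before clipping means that the gradient of a pruned weight does not inflate the norm and shrink the update of the weights that are still active.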

7.5. Interpretability

While sparsity can improve the interpretability of models by identifying the most important features or connections, a sparse model is not automatically easy to understand: the features and connections that survive still need to be examined and explained.

To enhance the interpretability of sparse models:

- Visualize Sparse Structures: Visualize the sparse structures in the model, such as the non-zero weights or connections, to gain insights into the model’s behavior.

- Use Explainable AI Techniques: Use explainable AI techniques, such as LIME or SHAP, to explain the model’s predictions and identify the factors that contribute most to the predictions.

- Provide Contextual Information: Provide contextual information about the sparse features or connections to help users understand their meaning and significance.
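
As a simple starting point for inspecting sparse structure, the sketch below lists the features that survive in a sparse linear model, ordered by the magnitude of their coefficients. The feature names, the coefficient values, and the helper `surviving_features` are hypothetical and serve only as an illustration.

```python
def surviving_features(names, coefficients, tolerance=0.0):
    """Return (name, coefficient) pairs with |coefficient| > tolerance,
    sorted from largest to smallest magnitude."""
    kept = [(n, c) for n, c in zip(names, coefficients) if abs(c) > tolerance]
    return sorted(kept, key=lambda pair: abs(pair[1]), reverse=True)


# Hypothetical coefficients of a sparse linear model.
names = ["age", "income", "zip_code", "tenure", "clicks"]
coefficients = [0.0, 1.2, 0.0, -2.5, 0.3]

for name, coef in surviving_features(names, coefficients):
    print(f"{name:10s} {coef:+.2f}")
```

A ranked list like this pairs naturally with contextual information about each feature, and it gives a baseline against which the output of tools such as LIME or SHAP can be compared.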

By carefully addressing these challenges and considerations, you can successfully implement sparsity in your machine learning models and reap the benefits of reduced memory usage, faster computation, and improved model interpretability.

8. Future Trends in Sparsity Research

The field of sparsity research is evolving quickly, with new techniques and applications emerging regularly. Here are some of the trends shaping its future:

8.1. Automated Sparsity

Automated sparsity techniques aim to automatically learn the optimal sparsity patterns for a given task, without requiring manual tuning or intervention. These techniques use reinforcement learning or other optimization methods to find the best sparsity configuration for a model.

Automated sparsity can significantly reduce the time and effort required to implement sparsity in machine learning models, making it more accessible to a wider range of users.

8.2. Dynamic Sparsity

Dynamic sparsity techniques allow the sparsity patterns to change during training, adapting to the evolving characteristics of the data and the model. These techniques can improve the model’s performance and robustness, particularly in dynamic or non-stationary environments.

Dynamic sparsity can be implemented using techniques such as:

- Adaptive Regularization: Adaptively adjusting the regularization parameters during training to achieve the desired level of sparsity.

- Rewiring: Dynamically adding and removing connections in a neural network during training to optimize its structure.

- Synaptic Plasticity: Mimicking the synaptic plasticity of biological neural networks to dynamically adjust the weights and connections in a neural network.
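
The rewiring idea can be illustrated with a single prune-and-regrow step. The sketch below assumes one simple policy, magnitude-based pruning followed by random regrowth, which is only one of several possible choices; the function name `prune_and_regrow` and the example values are hypothetical.

```python
import random


def prune_and_regrow(weights, mask, fraction, rng):
    """One rewiring step: drop the weakest active connections, then
    activate the same number of randomly chosen inactive ones."""
    active = [i for i, m in enumerate(mask) if m == 1]
    inactive = [i for i, m in enumerate(mask) if m == 0]
    k = min(int(len(active) * fraction), len(inactive))

    # Prune: the k active weights with the smallest magnitude.
    weakest = sorted(active, key=lambda i: abs(weights[i]))[:k]
    # Regrow: k connections that were inactive before this step.
    regrown = rng.sample(inactive, k)

    new_weights, new_mask = list(weights), list(mask)
    for i in weakest:
        new_weights[i], new_mask[i] = 0.0, 0
    for i in regrown:
        new_weights[i], new_mask[i] = 0.0, 1  # regrown weights start at zero
    return new_weights, new_mask


# Hypothetical layer with five active and three inactive connections.
weights = [0.8, 0.0, -0.05, 0.0, 0.3, 0.0, -0.6, 0.01]
mask = [1, 0, 1, 0, 1, 0, 1, 1]
new_weights, new_mask = prune_and_regrow(weights, mask, 0.5, random.Random(0))
print(new_mask)
```

The number of active connections stays constant, so the overall sparsity level is preserved while the pattern itself changes. In training, a step like this would be applied periodically between ordinary gradient updates.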

8.3. Hardware-Aware Sparsity

Hardware-aware sparsity techniques are designed to take advantage of the specific characteristics of the underlying hardware architecture. These techniques optimize the sparsity patterns to improve the model’s performance on a particular hardware platform.

Hardware-aware sparsity can be implemented using techniques such as:

- Structured Sparsity: Enforcing structured sparsity patterns that are well-suited for the target hardware architecture.

- Quantization-Aware Sparsity: Combining sparsity with quantization to further reduce the model’s size and improve its performance on low-precision hardware.

- Co-Design: Co-designing the hardware and software to optimize the performance of sparse computations.
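
One widely used structured pattern is N:M sparsity, where at most N weights in every group of M consecutive weights are non-zero; the 2:4 variant is a pattern that some recent GPUs can accelerate. The sketch below shows the pruning rule only, under the assumption that weights are grouped along a flat list. The function name `prune_n_of_m` and the example values are hypothetical.

```python
def prune_n_of_m(weights, n=2, m=4):
    """Keep the n largest-magnitude weights in each group of m, zero the rest."""
    if len(weights) % m != 0:
        raise ValueError("length must be a multiple of the group size m")
    pruned = []
    for start in range(0, len(weights), m):
        group = weights[start:start + m]
        # Positions of the n entries with the largest magnitude in this group.
        ranked = sorted(range(m), key=lambda i: abs(group[i]), reverse=True)
        keep = set(ranked[:n])
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


# Hypothetical row of eight weights, i.e. two groups of four.
row = [0.1, -0.9, 0.4, 0.05, 0.7, 0.2, -0.3, 0.6]
print(prune_n_of_m(row))  # [0.0, -0.9, 0.4, 0.0, 0.7, 0.0, 0.0, 0.6]
```

Because every group has the same number of non-zeros, the hardware can store and schedule the matrix with a fixed, predictable layout, which is what makes this pattern easier to accelerate than unstructured sparsity.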

8.4. Sparsity for Edge Computing

Sparsity is particularly important for edge computing, where resources are limited and efficiency is critical. Sparsity techniques can be used to reduce the size and computational cost of machine learning models, making them suitable for deployment on edge devices.

Sparsity for edge computing can be implemented using techniques such as:

- Model Compression: Compressing machine learning models using pruning, quantization, and other sparsity techniques.

- Federated Learning: Training machine learning models on decentralized edge devices using federated learning, which allows the models to learn from data without transmitting it to a central server. Sending sparse model updates can further reduce the communication cost.

- On-Device Learning: Training machine learning models directly on edge devices, which requires efficient and lightweight algorithms.
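
The model compression step can be sketched by combining magnitude pruning with 8-bit quantization. This is a simplified illustration that assumes symmetric per-tensor quantization and a flat list of weights; the function names and example values are hypothetical, and real deployments would typically use the compression tools of their framework.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero the smallest-magnitude weights until `sparsity` of them are zero."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Map quantized integers back to approximate floating-point weights."""
    return [q * scale for q in q_weights]


# Hypothetical weights, chosen only for illustration.
weights = [1.27, -0.02, 0.5, 0.01, -0.64, 0.03, 0.0, 0.25]
pruned = prune_by_magnitude(weights, sparsity=0.5)
q_weights, scale = quantize_int8(pruned)
print(q_weights)  # [127, 0, 50, 0, -64, 0, 0, 25]
print(dequantize(q_weights, scale))
```

Pruned weights map to the integer 0, so the quantized tensor remains sparse and can still be stored in a compressed sparse format on the device.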

8.5. Sparsity in Foundation Models

Foundation models, such as large language models and vision transformers, have achieved remarkable performance on a wide range of tasks. Sparsity techniques can be used to reduce the size and computational cost of foundation models, making them more accessible and efficient.

Sparsity in foundation models can be implemented using techniques such as:

- Pruning: Pruning the weights and connections in the foundation model to reduce its size and computational cost.

- Knowledge Distillation: Transferring knowledge from a large, dense foundation model to a smaller, sparse model.

- Mixture of Experts: Using a mixture of experts architecture, where a router activates only a small subset of specialized experts for each input, so that most of the model’s parameters stay inactive on any given forward pass.
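
The sparse activation behind a mixture of experts can be sketched with top-k routing. This is a toy illustration under stated assumptions: the experts are plain scalar functions, the router scores are given rather than learned, and the names `top_k_route` and `moe_forward` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in chosen)
    return {i: probs[i] / total for i in chosen}


def moe_forward(x, experts, router_scores, k=2):
    """Evaluate only the routed experts and mix their outputs by gate weight."""
    gates = top_k_route(router_scores, k)
    return sum(gate * experts[i](x) for i, gate in gates.items())


# Hypothetical experts and router scores for a single input.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
router_scores = [0.1, 2.0, 2.0, -1.0]
print(moe_forward(3.0, experts, router_scores, k=2))  # 7.5
```

Only two of the four experts are evaluated for this input, which is the source of the computational savings: the total parameter count can grow while the cost per input stays roughly constant.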

These future trends in sparsity research promise to further enhance the efficiency, performance, and accessibility of machine learning models, making them more powerful and versatile for a wide range of applications.

9. Sparsity and LEARNS.EDU.VN

At LEARNS.EDU.VN, we are committed to providing comprehensive and up-to-date educational resources on the latest advancements in machine learning. Sparsity is a key concept in modern AI, and we offer a range of courses and tutorials to help you master this important topic.

9.1. Course Offerings

We offer a variety of courses that cover sparsity in machine learning, including:

- Introduction to Sparsity: This course provides a foundational understanding of sparsity, covering the basic concepts, techniques, and applications.

- Sparsity for Deep Learning: This course focuses on the use of sparsity in deep learning models, covering pruning, quantization, and other techniques for reducing the size and computational cost of neural networks.

- Advanced Sparsity Techniques: This course delves into advanced sparsity techniques, such as automated sparsity, dynamic sparsity, and hardware-aware sparsity.

9.2. Expert Insights

Our courses are taught by experienced machine learning practitioners and researchers who are experts in the field of sparsity. They provide valuable insights and practical guidance to help you implement sparsity in your own projects.

9.3. Hands-On Projects

Our courses include hands-on projects that allow you to apply your knowledge and skills to real-world problems. You will have the opportunity to implement sparsity in a variety of machine learning models and datasets, gaining valuable experience and building your portfolio.

9.4. Community Support

We foster a vibrant community of learners where you can connect with other students, ask questions, and share your experiences. Our online forums and discussion boards provide a supportive environment for learning and collaboration.

At LEARNS.EDU.VN, we believe that sparsity is a crucial concept for anyone working in machine learning. Our courses and resources are designed to help you master this important topic and unlock the full potential of your machine learning models.

10. Frequently Asked Questions (FAQ) About Sparsity in Machine Learning

1. What is sparsity in machine learning?

Sparsity in machine learning refers to the presence of a large number of zero or near-zero values in a dataset or model. It’s a characteristic that can be leveraged to improve computational efficiency and reduce memory usage.

2. Why is sparsity important in machine learning?

Sparsity is important because it can lead to faster computation, reduced memory usage, improved model interpretability, and mitigation of overfitting.

3. What are the different types of sparsity?

The main types of sparsity include data sparsity, model sparsity, weight sparsity, and activation sparsity.

**4. How can I achieve sparsity