Machine learning, a field within artificial intelligence, has reshaped industries from healthcare to education. At LEARNS.EDU.VN, we trace its origins, evolution, and likely future to answer one question: when did machine learning start? This article covers the philosophical roots, the major breakthroughs, and the current state of the art.

1. What is Machine Learning?

Machine learning is a branch of computer science concerned with algorithms that improve at a task by learning from data rather than by following hand-written rules. Over the decades it has transformed many research areas, most notably natural language processing (NLP) and image recognition, where learned models now outperform earlier rule-based systems on tasks such as prediction and data interpretation.

This makes machine learning indispensable across a wide array of sectors, including finance, healthcare, retail, and manufacturing. For instance, in search engine optimization (SEO), machine learning can forecast user behavior on a site, leading to adjustments in content and design for improved engagement. For those eager to enrich their understanding of machine learning models, LEARNS.EDU.VN offers extensive resources on machine learning datasets and advanced techniques.

Machine learning spans several learning paradigms and model families, each with its own strengths and weaknesses. The main paradigms are supervised learning, unsupervised learning, and reinforcement learning; widely used model families include deep neural networks (DNNs) and Bayesian networks. No single approach is best for every problem; the choice depends on the specific application. LEARNS.EDU.VN helps navigate these options, providing the knowledge to make informed decisions.

2. Philosophical Underpinnings and Early Mechanical Inventions

The roots of artificial intelligence reach back long before the field was formally named, encompassing ancient automatons, philosophical explorations, and pioneering mechanical inventions that shaped the future of AI.

2.1 Ancient Automatons and Mechanical Marvels

Humanity’s quest to replicate life and intelligence through artificial means dates back to ancient times. Legends, such as those of Hephaestus crafting mechanical servants in Greek mythology, mirror the real-world innovations of ancient civilizations:

- 1st century CE: Hero of Alexandria designed steam-powered automatons and mechanical theaters, showcasing sophisticated mechanical principles.

- 9th century: The Banu Musa brothers in Baghdad engineered programmable automatic flute players, demonstrating early automation.

- 13th century: Villard de Honnecourt envisioned perpetual motion machines and a mechanical angel, pushing the boundaries of imagination and engineering.

These early automatons, highlighted by sources like the British Society for the History of Mathematics, represented essential steps in automating human-like actions and initiated philosophical debates about life and intelligence.

2.2 Philosophical Foundations

The 17th and 18th centuries were marked by key philosophical shifts that influenced AI’s development:

- René Descartes (1637): Argued that animals and the human body can be understood as complex machines, although he held that human reason itself could not be reproduced mechanically. His mechanistic view still shaped later thinking about artificial minds. More information can be found in his book, Discourse on the Method.

- Gottfried Wilhelm Leibniz: Proposed a universal language of thought that could be logically manipulated, anticipating modern computational approaches. His work on calculus and binary systems is foundational.

- Thomas Hobbes (1651): Equated reasoning with computation, stating “reason… is nothing but reckoning,” a concept central to cognitive science and AI. His views are detailed in Leviathan.

2.3 Early Computational Devices

The 17th to 19th centuries saw the creation of mechanical calculators and logical machines that foreshadowed modern computers:

- 1642: Blaise Pascal invented the Pascaline, an early mechanical calculator. Further details are available from the Musée des Arts et Métiers in Paris.

- 1820s-1830s: Charles Babbage designed the Difference Engine and, later, the Analytical Engine; the latter is considered the first design for a general-purpose computer. The Science Museum in London has exhibits on Babbage’s work.

- 1840s: Ada Lovelace wrote what is often deemed the first computer program for the Analytical Engine. Her notes on the Analytical Engine are archived at the Science Museum.

- Late 19th century: William Stanley Jevons created the “logical piano,” a machine to solve simple logical problems. The Science Museum holds information on Jevons’s machine.

These precursors showed that automating logical and mathematical operations—critical elements of AI—was possible. The convergence of philosophical inquiries and mechanical innovations set the stage for AI’s emergence as a distinct field in the mid-20th century.

3. The Early History of Machine Learning

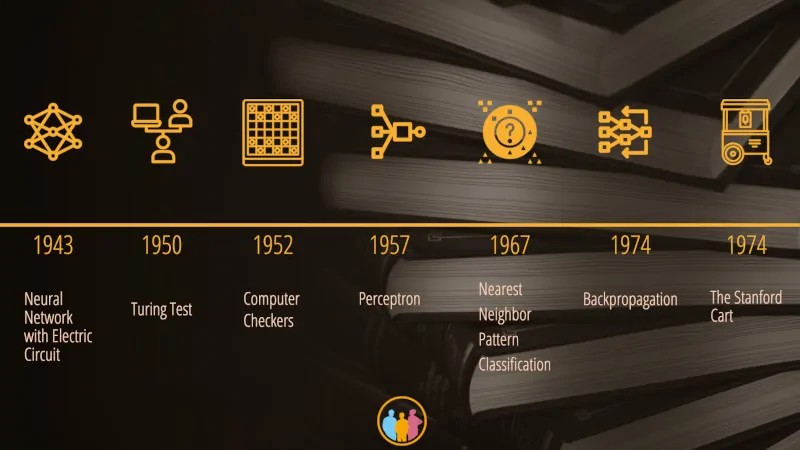

Machine learning’s timeline is marked by significant advancements since the advent of computers. Let’s explore some pivotal moments.

3.1 1943: The First Mathematical Model of a Neural Network

In 1943, Warren McCulloch and Walter Pitts published the first mathematical model of a neural network. They described neurons as simple threshold units whose all-or-none firing can be expressed in logic, an idea that is often explained by analogy with electrical circuits.

Their paper, A Logical Calculus of the Ideas Immanent in Nervous Activity, showed that networks of such units can compute logical functions. The model had no learning rule of its own, but it is widely treated as a starting point for neural networks and, through them, for machine learning.
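The threshold-unit idea from the McCulloch and Pitts paper can be illustrated with a few lines of Python. This is a minimal sketch rather than a reproduction of the paper's notation: the function names are hypothetical, and inhibition is simplified to a negative weight.

```python
def mp_neuron(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return mp_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mp_neuron([a, b], [1, 1], threshold=1)


def logical_not(a):
    # Assumption: an inhibitory input is modelled as a weight of -1.
    return mp_neuron([a], [-1], threshold=0)
```

Combining such units is enough to build any Boolean function, which is the sense in which the model links neurons to logic.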

3.2 1950: Turing Test

Proposed by Alan Turing, the Turing Test evaluates a machine’s ability to imitate human conversation. A human judge exchanges messages with a machine and a real person, and the machine passes if the judge cannot reliably tell which is which from their answers.

The test is meant to replace the vague question of whether machines can think with something observable. Whether an answer is correct matters less than whether it appears human-like, and the test does not measure emotion. Several programs have been claimed to pass versions of the test, but none of these claims is universally accepted. Critics argue that the test measures imitation rather than genuine intelligence, an objection already discussed in Turing’s original paper, Computing Machinery and Intelligence.

3.3 1952: Computer Checkers

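Arthur Samuel, a machine learning pioneer, began writing a checkers-playing program in 1952, one of the first programs that improved through experience. It searched ahead in the game with the minimax algorithm, which chooses the move that limits the damage the opponent’s best reply can do, and used an early form of alpha-beta pruning to skip branches that could not change the result. The program did not play at championship level in 1952; later versions went on to beat skilled human players.

These techniques, described in Samuel’s 1959 paper, Some Studies in Machine Learning Using the Game of Checkers, are still prevalent in game AI today.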
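Minimax search with alpha-beta pruning, the two techniques named above, can be sketched on a toy game tree. This is a generic illustration and not Samuel's program: the tree is a hypothetical nested list whose leaves are scores from the maximizing player's point of view, and there is no learned evaluation function.

```python
import math


def minimax(node, maximizing):
    """Plain minimax: visit every leaf of the tree."""
    if isinstance(node, (int, float)):
        return node
    scores = [minimax(child, not maximizing) for child in node]
    return max(scores) if maximizing else min(scores)


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf):
    """Minimax with alpha-beta pruning: same result, fewer leaves visited."""
    if isinstance(node, (int, float)):
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:  # The minimizer already has a better option elsewhere.
                break
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:  # The maximizer already has a better option elsewhere.
            break
    return best


# Hypothetical two-ply game: three moves, each with two possible replies.
tree = [[3, 5], [2, 9], [0, 1]]
best_value = alphabeta(tree, maximizing=True)  # 3, the best guaranteed outcome
```

In this tree the leaves 9 and 1 are never examined, because the first reply in each of those branches is already worse for the maximizer than the 3 secured by the first move.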

3.4 1957: Frank Rosenblatt – The Perceptron

In 1957, psychologist Frank Rosenblatt developed the perceptron, one of the first learning algorithms for artificial neural networks. He described it in his 1958 paper, The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain.

The perceptron learns from labeled examples by adjusting its weights each time it misclassifies one, repeating until all examples are classified correctly. This procedure is guaranteed to converge only when the classes can be separated by a straight line or plane, a limitation that later motivated multilayer networks.
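The iterative adjustment described above can be sketched in plain Python. This is a minimal version of the classic perceptron learning rule under stated assumptions: the function names, the learning rate, and the toy OR dataset are illustrative choices, not details taken from Rosenblatt's paper.

```python
def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Learn weights and a bias by correcting one misclassified example at a time."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            prediction = 1 if activation > 0 else 0
            update = lr * (y - prediction)  # Zero when the prediction is correct.
            if update != 0:
                weights = [w + update * xi for w, xi in zip(weights, x)]
                bias += update
                errors += 1
        if errors == 0:  # Every example is classified correctly; stop early.
            break
    return weights, bias


def predict(weights, bias, x):
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Toy dataset: the logical OR function, which is linearly separable.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_or = [0, 1, 1, 1]
weights, bias = train_perceptron(X, y_or)
```

On a problem that is not linearly separable, such as XOR, the same loop would simply run out of epochs without reaching zero errors.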

3.5 1967: The Nearest Neighbor Algorithm

The nearest neighbor algorithm classifies a new item by finding the most similar items in a set of labeled examples, with similarity measured as the distance between data points. It is used for pattern recognition tasks such as assigning an input to a category and predicting an outcome from similar past cases.

Cover and Hart’s 1967 publication, Nearest Neighbor Pattern Classification, analyzed this rule for classifying an input object based on its nearest neighbors and showed that, with enough data, its error rate is at most twice the lowest achievable error rate.
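Classification by nearest neighbors can be sketched in a few lines. This is a minimal illustration that assumes Euclidean distance and a majority vote among the k closest points; the function name and the toy data are hypothetical.

```python
import math
from collections import Counter


def knn_classify(train_points, train_labels, query, k=1):
    """Label a query point by majority vote among its k nearest training points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Two small clusters of labeled points (made-up values).
points = [(1.0, 1.0), (1.5, 2.0), (5.0, 5.0), (6.0, 5.5)]
labels = ["a", "a", "b", "b"]

near_first_cluster = knn_classify(points, labels, (1.2, 1.4))        # "a"
near_second_cluster = knn_classify(points, labels, (5.5, 5.0), k=3)  # "b"
```

There is no training step: the labeled examples are the model, and all the work happens at query time.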

3.6 1974: Backpropagation

Backpropagation is the standard method for training multilayer neural networks. It works backward from the error at the output, layer by layer, to compute how much each weight contributed to that error, so that every weight can be adjusted to reduce it. Repeating this over many examples improves the model’s accuracy on the training data and, ideally, on new data.

Paul Werbos laid the groundwork for this approach in his 1974 dissertation, Beyond Regression: New Tools for Prediction and Analysis in the Behavioral Sciences. The method came into wide use after Rumelhart, Hinton, and Williams popularized it in 1986.
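The weight adjustment described above can be made concrete on the smallest possible network. The sketch below assumes one input, one tanh hidden unit, a linear output, and a squared-error loss; these choices and all parameter values are illustrative and are not drawn from Werbos's dissertation.

```python
import math


def forward(params, x):
    """Return the hidden activation and the network output."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    return h, w2 * h + b2


def loss(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backprop(params, x, y):
    """Apply the chain rule from the output back to the first layer."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_out = y_hat - y            # dLoss/dy_hat
    grad_w2 = d_out * h
    grad_b2 = d_out
    d_h = d_out * w2             # Error passed back to the hidden unit.
    d_z = d_h * (1.0 - h * h)    # Derivative of tanh is 1 - tanh^2.
    grad_w1 = d_z * x
    grad_b1 = d_z
    return [grad_w1, grad_b1, grad_w2, grad_b2]


def train(params, x, y, lr=0.1, steps=200):
    """Plain gradient descent using the backpropagated gradients."""
    params = list(params)
    for _ in range(steps):
        grads = backprop(params, x, y)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params


initial = [0.3, -0.1, 0.7, 0.2]   # Arbitrary starting weights.
trained = train(initial, x=0.5, y=0.8)
```

Larger networks follow the same pattern, with vectors and matrices in place of single numbers and one backward step per layer.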

3.7 1979: The Stanford Cart

The Stanford Cart, originally built as a remote-controlled vehicle, reached a milestone in 1979 when it crossed a chair-filled room on its own, a run that took about five hours. The Cart, documented in Hans Moravec’s Visual Mapping by a Robot Rover, used camera images to avoid obstacles and reach a specific destination without human intervention.

4. The AI Winter in the History of Machine Learning

AI has gone through both boom and bust periods. The AI winters, most often dated to the mid-1970s and again from the late 1980s into the 1990s, were periods of reduced funding and project shutdowns after systems failed to deliver on their promises. As discussed in Daniel Crevier’s AI: The Tumultuous History of the Search for Artificial Intelligence, cycles of hype were followed by disappointment among developers, researchers, users, and the media.

5. The Rise of Machine Learning in History

The resurgence of machine learning from the late 1990s onward is largely attributed to the exponential growth of computing power described by Moore’s Law. Affordable computing made it possible to train algorithms on far more data, which increased their accuracy and efficiency.

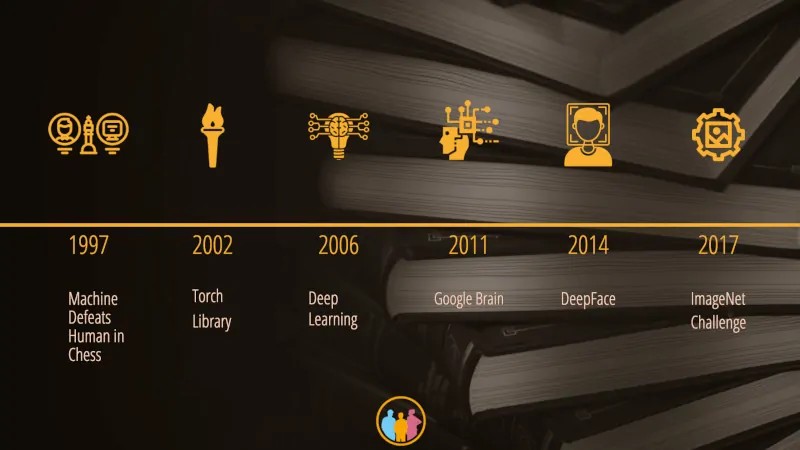

5.1 1997: A Machine Defeats a Man in Chess

In 1997, IBM’s Deep Blue defeated Garry Kasparov in a six-game match, the first time a computer beat a reigning world chess champion under standard tournament conditions. This event, covered extensively in media outlets like The New York Times, demonstrated that a machine could outperform the best human player in a complex, well-defined task.

Deep Blue did not learn or evolve on its own. It relied on specialized hardware, very fast search, and an evaluation function tuned by its engineers and chess experts. The match was a turning point mainly because it changed public expectations of what computers could do.

5.2 2002: Software Library Torch

Torch is an open-source software library for machine learning and scientific computing, first released in 2002 by Ronan Collobert, Samy Bengio, and Johnny Mariéthoz at the IDIAP research institute. It gave researchers a free, flexible toolkit for building and training models. Although Torch is no longer in active development, it paved the way for PyTorch.

5.3 2006: Geoffrey Hinton, the father of Deep Learning

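“A Fast Learning Algorithm for Deep Belief Nets,” published in 2006 by Geoffrey Hinton, Simon Osindero, and Yee-Whye Teh, is widely seen as the start of the modern deep learning era. It showed that a deep belief network could be trained one layer at a time to recognize patterns in images. A second 2006 paper, Reducing the Dimensionality of Data with Neural Networks by Hinton and Ruslan Salakhutdinov, applied the same layer-wise idea to deep autoencoders.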

5.4 2011: Google Brain

Google Brain, founded in 2011, was a Google research group focused on AI and machine learning. Its objective was to create machines that can learn from data, understand language, answer questions, and reason with common sense. In 2023 it merged with DeepMind to form Google DeepMind.

5.5 2014: DeepFace

DeepFace, a deep learning system developed by Facebook (now Meta) in 2014, gained attention for approaching human performance in face verification, reaching about 97% accuracy on a standard benchmark. As described in the paper DeepFace: Closing the Gap to Human-Level Performance in Face Verification, the system relies on a deep neural network composed of multiple layers of artificial neurons and weights.

5.6 2017: ImageNet Challenge – Milestone in the History of Machine Learning

The ImageNet Challenge, a computer vision competition held annually from 2010, reached a milestone in 2017 when 29 of the 38 competing teams exceeded 95% accuracy with their computer vision models, a marked improvement over the early years of the contest. Details on the challenge can be found on the ImageNet website.

5.7 The Rise of Generative AI

While the origins of generative AI can be traced back to the early days of artificial intelligence, its growth has been explosive, particularly with the launch of ChatGPT in 2022.

The concept emerged in the 1960s with chatbots like ELIZA, which generated simple responses based on pattern matching. In 2014, Ian Goodfellow introduced Generative Adversarial Networks (GANs), leading to more realistic synthetic data.

Further advancements followed:

- 2017: The Transformer architecture revolutionized natural language processing.

- 2018: OpenAI released the GPT (Generative Pre-trained Transformer) language model.

- 2020: OpenAI’s GPT-3 showed that a much larger language model could generate fluent text and handle new tasks from only a few examples.

The public release of ChatGPT on November 30, 2022, propelled generative AI into the mainstream, reaching an estimated 100 million monthly active users within two months. In early 2023, Microsoft added an OpenAI-powered chat feature to Bing, and Google released Bard. By late 2023, ChatGPT had over 100 million weekly active users, and over 2 million developers were using OpenAI’s API.

As of 2024, generative AI continues to evolve, with multimodal models that work across text, images, audio, and video. The technology is now a central focus for the tech industry and policymakers.

6. Present: State-of-the-Art Machine Learning

Machine learning is used across diverse fields, from fashion to agriculture. Machine learning algorithms recognize patterns, extract predictive insights, and process large amounts of data with accuracy in a short timeframe.

6.1 ML in Robotics

Machine learning enhances robotics through classification, clustering, regression, and anomaly detection.

- Classification teaches robots to distinguish between objects.

- Clustering groups similar objects.

- Regression allows robots to control movements by predicting future values.

- Anomaly detection identifies unusual patterns.
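As one illustration of the anomaly detection item in the list above, the sketch below flags sensor readings that sit far from the average. It assumes a simple z-score rule; the function name, the threshold, and the readings are hypothetical, and real robotic systems typically use more robust methods.

```python
import statistics


def find_anomalies(readings, z_threshold=2.0):
    """Return the indices of readings more than z_threshold standard deviations from the mean."""
    mean = statistics.fmean(readings)
    stdev = statistics.pstdev(readings)
    if stdev == 0:  # All readings identical: nothing stands out.
        return []
    return [
        i for i, value in enumerate(readings)
        if abs(value - mean) / stdev > z_threshold
    ]


# Made-up distance-sensor readings with one obvious spike.
sensor = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 25.0, 10.1]
anomalous_indices = find_anomalies(sensor)  # [6]
```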

Robots improve performance through reinforcement learning, allowing them to learn from their actions. Machine learning also helps designers create accurate models for future robots by predicting the results of future experiments.

6.2 ML in Healthcare

Machine learning is used to diagnose and treat diseases, identify patterns, and help doctors make better decisions, as noted in the Journal of the American Medical Association.

6.3 ML in Education

Machine learning personalizes education by tracking student progress, recommending content suited to each learner, and supporting interactive learning environments. Machine learning assesses learners’ progress and adjusts courses, as reported by the International Journal of Artificial Intelligence in Education.

| Application | Description | Benefits |
|---|---|---|
| Personalized Learning | Machine learning algorithms analyze student performance and adapt content to individual needs. | Improved learning outcomes, increased student engagement, targeted support for struggling students |
| Automated Grading | Machine learning models can grade assignments and provide feedback, freeing up educators’ time. | Reduced workload for educators, faster feedback for students, consistent and objective assessment |
| Early Intervention | Machine learning can identify students at risk of falling behind and provide early intervention strategies. | Proactive support, reduced dropout rates, improved student success |
| Curriculum Design | Machine learning can analyze data to identify gaps in the curriculum and suggest improvements. | More relevant and effective curriculum, better alignment with industry needs, improved student preparedness |

7. Future of Machine Learning

Machine learning continues to be a rapidly progressing field. Recent advancements are promising, but represent just the beginning.

7.1 Quantum Computing

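Quantum computers process information using quantum-mechanical effects such as superposition and entanglement. For certain classes of problems they could be far faster than conventional computers, which has prompted research into quantum machine learning, as covered in Nature. Practical advantages for machine learning have yet to be demonstrated at scale.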

7.2 Is AutoML the Future of Machine Learning?

AutoML, short for automated machine learning, automates the training and tuning of machine learning models. Offered by Google as Cloud AutoML and by several other vendors and open-source projects, it streamlines development for businesses by automating tasks such as model selection, feature engineering, and hyperparameter optimization.
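The tuning step that AutoML automates can be illustrated with a tiny exhaustive search. This is a toy sketch and not the API of any AutoML product: the model is a hypothetical one-dimensional threshold rule, and the search space, data, and function names are invented for illustration.

```python
from itertools import product


def accuracy(config, data):
    """Share of (x, label) pairs that a threshold rule classifies correctly."""
    correct = 0
    for x, label in data:
        prediction = 1 if x > config["threshold"] else 0
        if config["flip"]:
            prediction = 1 - prediction
        correct += prediction == label
    return correct / len(data)


def grid_search(search_space, data):
    """Try every combination of settings and return (best_config, best_score)."""
    names = list(search_space)
    best_config, best_score = None, -1.0
    for values in product(*(search_space[name] for name in names)):
        config = dict(zip(names, values))
        score = accuracy(config, data)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Made-up validation data and candidate settings.
validation = [(0.1, 0), (0.4, 0), (0.6, 1), (0.9, 1)]
space = {"threshold": [0.25, 0.5, 0.75], "flip": [False, True]}
best_config, best_score = grid_search(space, validation)
```

Real AutoML systems search far larger spaces, usually with smarter strategies than exhaustive enumeration, and they retrain a model for every candidate configuration.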

8. Final Word on the History of Machine Learning

Machine learning has evolved considerably since its beginnings in the 1940s. Today, it powers tasks from facial recognition to automated driving. Given enough relevant, high-quality data, it can tackle a wide range of problems, although results still depend on the choice of model and on how well the data reflects the task. As the field grows, expect to see more applications of machine learning in the future.

By providing detailed insights and practical knowledge, LEARNS.EDU.VN empowers learners to understand and apply machine learning effectively.

9. FAQs on History of Machine Learning

Q1: Who invented machine learning?

While Alan Turing laid early foundations, Arthur Samuel coined the term “machine learning” in 1959.

Q2: Is there a book on the history of machine learning?

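Yes. Daniel Crevier’s AI: The Tumultuous History of the Search for Artificial Intelligence, cited above, covers much of this history up to the early 1990s. Articles and videos provide valuable insights into more recent developments.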

Q3: How fast will the development of machine learning progress?

No one can say precisely, but machine learning development is likely to keep accelerating as investment and research increase.

Q4: What were some of the earliest applications of Machine Learning?

Early applications include game-playing programs, such as Arthur Samuel’s checkers program, and pattern recognition systems, like Frank Rosenblatt’s Perceptron.

Q5: How did the AI Winter affect Machine Learning research?

The AI Winter led to reduced funding and interest in AI and ML research, slowing progress in the field for several years.

Q6: What role did the development of neural networks play in Machine Learning history?

The development of neural networks, particularly the backpropagation algorithm, was a crucial step in enabling more complex and effective machine learning models.

Q7: How has increased computing power influenced Machine Learning progress?

Increased computing power has allowed for the training of larger and more complex models on larger datasets, leading to significant advances in ML capabilities.

Q8: What is the significance of the ImageNet Challenge in the history of Machine Learning?

The ImageNet Challenge accelerated the development of computer vision techniques, pushing the boundaries of image recognition and object detection.

Q9: How has the rise of Big Data impacted Machine Learning?

The rise of Big Data has provided machine learning algorithms with vast amounts of data to learn from, leading to more accurate and robust models.

Q10: What are some current trends in Machine Learning research?

Current trends include generative AI, reinforcement learning, explainable AI (XAI), and federated learning, among others.
