Deep reinforcement learning (DRL) is emerging as a way to control traffic light cycles adaptively instead of relying on fixed timing plans. This guide from LEARNS.EDU.VN explains how DRL-based controllers learn signal timings from real-time traffic data, which reinforcement learning algorithms are commonly used, and what it takes to implement, evaluate, and deploy them as part of an intelligent transportation system for urban traffic management.

1. Understanding Deep Reinforcement Learning for Traffic Control

Traditional traffic light control systems often rely on pre-defined, static timing plans that may not adapt well to dynamic traffic conditions. These systems struggle to optimize traffic flow effectively, leading to congestion, increased travel times, and higher emissions. Deep reinforcement learning (DRL) offers a promising solution by enabling traffic light controllers to learn optimal strategies through interactions with the environment. This approach is particularly effective in adapting to the stochastic nature of traffic flow and addressing the limitations of conventional methods.

1.1 What is Deep Reinforcement Learning?

Deep reinforcement learning (DRL) is a subset of machine learning that combines reinforcement learning (RL) with deep learning. RL involves an agent learning to make decisions by interacting with an environment to maximize a cumulative reward. Deep learning, on the other hand, uses artificial neural networks with multiple layers to analyze data and extract complex features. When combined, DRL allows agents to learn complex policies from high-dimensional sensory inputs, making it suitable for intricate tasks such as traffic light control.

DRL algorithms learn through trial and error. The agent (traffic light controller) takes actions (adjusting signal timings) based on the current state of the environment (traffic conditions) and receives a reward (reduction in waiting time or queue length). Over time, the agent learns to associate certain actions with positive rewards, leading to the development of an optimal control policy.

1.2 The Need for Adaptive Traffic Management

Urban traffic networks are complex systems characterized by high variability and uncertainty. Factors such as time of day, weather conditions, special events, and unexpected incidents can significantly impact traffic flow. Traditional traffic management systems often fail to adapt to these dynamic changes, resulting in suboptimal performance. Adaptive traffic management systems, powered by DRL, offer a more flexible and responsive approach.

Adaptive traffic management can:

- Reduce congestion and travel times.

- Improve traffic flow efficiency.

- Decrease vehicle emissions.

- Enhance overall urban mobility.

By continuously learning and adapting to real-time traffic conditions, DRL-based traffic light controllers can optimize signal timings to minimize congestion and improve traffic flow.

1.3 Core Components of a DRL-Based Traffic Light Control System

A DRL-based traffic light control system typically consists of several key components:

- Environment: The traffic network, including roads, intersections, vehicles, and traffic signals. The environment provides the agent with information about the current state of the traffic network.

- Agent: The traffic light controller, which uses a DRL algorithm to learn the optimal control policy. The agent observes the environment, takes actions, and receives rewards.

- State: A representation of the current traffic conditions, such as queue lengths, vehicle densities, and waiting times at each intersection. The state provides the agent with the information needed to make informed decisions.

- Action: The adjustments made to the traffic signal timings, such as changing the duration of green lights or switching between different phase sequences.

- Reward: A scalar value that indicates the performance of the agent’s actions. The reward is designed to incentivize the agent to take actions that improve traffic flow, such as reducing waiting times or queue lengths.

- Deep Neural Network: The function approximator at the heart of DRL. It approximates the policy or the Q-function, which lets the agent make informed decisions in complex traffic scenarios, including states it has not encountered exactly before.

By continuously interacting with the environment and learning from the rewards it receives, the agent can develop an optimal control policy that maximizes traffic flow efficiency.
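
To make the roles of these components concrete, here is a minimal sketch of the agent-environment loop. The `ToyIntersection` class, its arrival probability, and the "serve the longer queue" placeholder policy are all hypothetical and chosen only for illustration; a real system would use a traffic simulator and a learned policy.

```python
import random


class ToyIntersection:
    """Hypothetical two-approach intersection; not a calibrated traffic model."""

    def __init__(self, arrival_prob=0.3, seed=0):
        self.rng = random.Random(seed)
        self.arrival_prob = arrival_prob  # assumed per-step arrival probability
        self.queues = [0, 0]  # state: vehicles waiting on each approach

    def step(self, action):
        """Apply an action (index of the approach that gets green) for one step."""
        # Arrivals: each approach gains a vehicle with probability arrival_prob.
        for i in range(2):
            if self.rng.random() < self.arrival_prob:
                self.queues[i] += 1
        # Departures: the approach with green discharges one vehicle.
        if self.queues[action] > 0:
            self.queues[action] -= 1
        # Reward: negative total queue, so shorter queues score higher.
        reward = -sum(self.queues)
        return tuple(self.queues), reward


env = ToyIntersection()
state = (0, 0)
total_reward = 0
for t in range(100):
    # Placeholder policy standing in for the learned agent.
    action = 0 if state[0] >= state[1] else 1
    state, reward = env.step(action)
    total_reward += reward
```

In a DRL setup, the placeholder policy line would be replaced by a neural network that maps the state to an action and is updated from the observed rewards.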

2. Key DRL Algorithms for Traffic Light Control

Several DRL algorithms have been successfully applied to traffic light control, each with its own strengths and weaknesses. Understanding these algorithms is crucial for designing and implementing effective DRL-based traffic management systems.

2.1 Q-Learning and Deep Q-Networks (DQN)

Q-learning is a classic reinforcement learning algorithm that learns the optimal action-value function, known as the Q-function. The Q-function estimates the expected cumulative reward for taking a specific action in a given state. In the context of traffic light control, the state could represent the queue lengths at an intersection, and the action could represent the duration of the green light for a particular phase.

Deep Q-Networks (DQN) extend Q-learning by using a deep neural network to approximate the Q-function. This allows the algorithm to handle high-dimensional state spaces and learn complex control policies. DQN has been successfully applied to traffic light control, demonstrating its ability to improve traffic flow efficiency.
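
The Q-learning update that DQN builds on can be sketched in tabular form. The learning rate, discount factor, action encoding, and the example transition below are assumptions made for illustration; DQN replaces the table with a neural network trained toward the same target.

```python
from collections import defaultdict

ALPHA = 0.1  # learning rate (assumed value)
GAMMA = 0.9  # discount factor (assumed value)
ACTIONS = (0, 1)  # hypothetical encoding: 0 = keep current phase, 1 = switch phase

# Maps (state, action) -> estimated cumulative reward; unseen pairs default to 0.
q_table = defaultdict(float)


def q_update(state, action, reward, next_state):
    """One Q-learning step: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    best_next = max(q_table[(next_state, a)] for a in ACTIONS)
    td_target = reward + GAMMA * best_next
    q_table[(state, action)] += ALPHA * (td_target - q_table[(state, action)])
    return q_table[(state, action)]


# Hypothetical transition: queues (3, 1) -> (2, 1) after switching, reward -4.
new_q = q_update(state=(3, 1), action=1, reward=-4.0, next_state=(2, 1))
```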

Advantages of DQN:

- Ability to handle high-dimensional state spaces.

- Effective in learning complex control policies.

- Relatively simple to implement.

Disadvantages of DQN:

- Can be unstable and difficult to converge.

- May require a large amount of training data.

- Prone to overestimation of Q-values.

2.2 Deep Deterministic Policy Gradient (DDPG)

Deep Deterministic Policy Gradient (DDPG) is an actor-critic algorithm designed for continuous action spaces. In traffic light control, the action space becomes continuous when the agent sets green light durations directly, since a duration can take any value within a range. DDPG uses an actor network to learn a deterministic policy and a critic network to estimate the value of the actions that policy selects.
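
Two small pieces of a DDPG-style controller are sketched below: mapping an unbounded actor output to a bounded green duration, and the soft (Polyak) target-network update that DDPG uses. The duration bounds, the `tau` value, and both function names are assumptions for illustration, and weights are shown as plain lists rather than network parameters.

```python
import math

MIN_GREEN, MAX_GREEN = 10.0, 60.0  # seconds; assumed bounds, not from any standard


def to_green_duration(raw_output):
    """Squash an unbounded actor output into [MIN_GREEN, MAX_GREEN] via tanh."""
    squashed = math.tanh(raw_output)  # lies in [-1, 1]
    return MIN_GREEN + 0.5 * (squashed + 1.0) * (MAX_GREEN - MIN_GREEN)


def soft_update(target_weights, online_weights, tau=0.005):
    """Move each target weight a small step (tau) toward the online weight."""
    return [(1.0 - tau) * t + tau * o for t, o in zip(target_weights, online_weights)]


mid_duration = to_green_duration(0.0)
blended = soft_update([0.0, 2.0], [1.0, 4.0], tau=0.5)
```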

Advantages of DDPG:

- Suitable for continuous action spaces.

- Reuses the stabilizing techniques of DQN (experience replay and target networks).

- Can learn complex control policies.

Disadvantages of DDPG:

- More complex to implement than DQN.

- Requires careful tuning of hyperparameters.

- May be sensitive to the choice of reward function.

2.3 Proximal Policy Optimization (PPO)

Proximal Policy Optimization (PPO) is a policy gradient algorithm that aims to improve the stability and sample efficiency of training. PPO updates the policy iteratively, ensuring that the new policy does not deviate too much from the old policy. This helps to prevent large, destabilizing updates and improves the overall training process.
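
The way PPO limits how far the new policy can move is usually expressed through a clipped surrogate objective. The sketch below computes it for a single sample; the function name and the clipping range of 0.2 are assumptions, and a real implementation would average this over a batch and maximize it with gradient ascent.

```python
def clipped_surrogate(ratio, advantage, epsilon=0.2):
    """PPO clipped objective for one sample.

    ratio: new_policy_prob / old_policy_prob for the action taken.
    advantage: estimate of how much better the action was than average.
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Taking the minimum removes any incentive to push the ratio outside the clip range.
    return min(ratio * advantage, clipped_ratio * advantage)


large_step = clipped_surrogate(1.5, 2.0)   # ratio is clipped to 1.2
bad_action = clipped_surrogate(0.5, -1.0)  # ratio is clipped to 0.8
no_change = clipped_surrogate(1.0, 3.0)    # ratio inside the range, unchanged
```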

Advantages of PPO:

- More stable than traditional policy gradient methods.

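- More sample efficient than basic policy gradient methods, because each batch of experience is reused for several updates.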

- Relatively easy to implement.

Disadvantages of PPO:

- Requires careful tuning of hyperparameters.

- May be sensitive to the choice of reward function.

- Can be computationally expensive.

2.4 Multi-Agent Reinforcement Learning (MARL)

In many real-world traffic networks, multiple intersections interact with each other, and the control decisions at one intersection can affect traffic flow at neighboring intersections. Multi-Agent Reinforcement Learning (MARL) addresses this complexity by training multiple agents (traffic light controllers) to coordinate their actions and optimize traffic flow across the entire network.

Advantages of MARL:

- Can optimize traffic flow across the entire network.

- More robust to changes in traffic conditions.

- Can handle complex interactions between intersections.

Disadvantages of MARL:

- More complex to implement than single-agent RL.

- Requires careful coordination between agents.

- Can be computationally expensive.

3. Implementing a Deep Reinforcement Learning Network for Traffic Light Cycle Control

Implementing a DRL network for traffic light cycle control involves several key steps, from setting up the simulation environment to training and deploying the DRL agent.

3.1 Setting Up the Simulation Environment

The first step is to set up a realistic simulation environment that accurately models the traffic network. Simulation environments like SUMO (Simulation of Urban Mobility), Aimsun, and Vissim are commonly used for this purpose. These tools allow researchers and engineers to create detailed models of road networks, traffic signals, and vehicle behavior.

When setting up the simulation environment, it is important to:

- Accurately represent the road network geometry.

- Model traffic demand and patterns realistically.

- Implement realistic vehicle behavior models.

- Include sensors to collect traffic data (e.g., queue lengths, vehicle speeds).

3.2 Defining the State Space, Action Space, and Reward Function

The next step is to define the state space, action space, and reward function for the DRL agent. The state space should capture the relevant information about the traffic conditions, such as queue lengths, vehicle densities, and waiting times. The action space should define the possible adjustments to the traffic signal timings, such as changing the duration of green lights or switching between different phase sequences. The reward function should incentivize the agent to take actions that improve traffic flow, such as reducing waiting times or queue lengths.

Example State Space:

- Queue length on each approach lane.

- Average waiting time on each approach lane.

- Vehicle density on each approach lane.

Example Action Space:

- Duration of green light for each phase.

- Switching between different phase sequences.

Example Reward Function:

- Negative of the total waiting time across all lanes.

- Negative of the total queue length across all lanes.

- Combination of waiting time and queue length reduction.
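
The example state and reward definitions above could be encoded roughly as follows. The function names, the four-lane example values, and the weights `w_queue` and `w_wait` are hypothetical; in practice the weights are tuning choices and the inputs come from the simulator or from sensors.

```python
def build_state(queue_lengths, waiting_times, densities):
    """Concatenate per-lane measurements into one flat state vector."""
    return list(queue_lengths) + list(waiting_times) + list(densities)


def compute_reward(queue_lengths, waiting_times, w_queue=1.0, w_wait=0.1):
    """Negative weighted sum of total queue length and total waiting time."""
    return -(w_queue * sum(queue_lengths) + w_wait * sum(waiting_times))


# Illustrative values for a four-lane intersection (not measured data).
queues = [4, 2, 0, 1]             # vehicles per approach lane
waits = [30.0, 12.0, 0.0, 5.0]    # average waiting time per lane, in seconds
density = [0.4, 0.2, 0.0, 0.1]    # occupancy fraction per lane

state_vector = build_state(queues, waits, density)
step_reward = compute_reward(queues, waits)
```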

3.3 Training the DRL Agent

Once the simulation environment, state space, action space, and reward function are defined, the next step is to train the DRL agent. This involves running the simulation for a large number of episodes, allowing the agent to interact with the environment and learn from the rewards it receives.

During training, it is important to:

- Use a suitable DRL algorithm (e.g., DQN, DDPG, PPO).

- Carefully tune the hyperparameters of the DRL algorithm.

- Monitor the performance of the agent and adjust the training process as needed.

- Use techniques like experience replay and target networks to improve the stability and sample efficiency of training.
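
Experience replay, mentioned in the last point, can be sketched as a fixed-size buffer that is sampled uniformly at random. The class name, capacity, and transition format are assumptions for illustration.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions, sampled uniformly at random."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest transitions drop out first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Random sampling breaks the correlation between consecutive time steps.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


replay = ReplayBuffer(capacity=3, seed=0)
for step in range(5):
    # Dummy transitions; a real agent would store simulator observations here.
    replay.add(state=step, action=0, reward=-1.0, next_state=step + 1, done=False)
batch = replay.sample(2)
```

Because the capacity is 3, only the three most recent transitions remain after five insertions.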

3.4 Evaluating the Performance of the Trained Agent

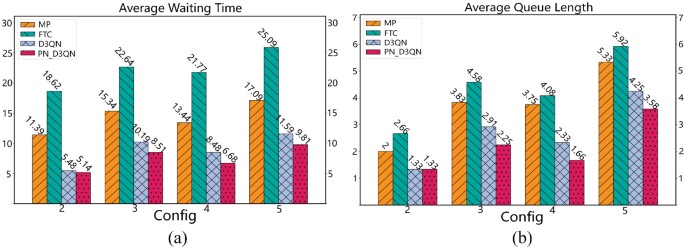

After training the DRL agent, it is important to evaluate its performance in different traffic scenarios. This can be done by running the simulation with the trained agent and comparing its performance to that of traditional traffic control methods.

When evaluating the performance of the trained agent, it is important to:

- Use a variety of traffic scenarios, including different traffic demand levels and patterns.

- Compare the performance of the DRL agent to that of traditional traffic control methods (e.g., fixed-time control, actuated control).

- Use relevant performance metrics, such as average waiting time, average queue length, and total travel time.

- Analyze the results and identify areas for improvement.
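
A comparison against a baseline often reduces to a relative improvement per metric. The sketch below shows one way to compute it. The waiting-time lists are made-up placeholder numbers, not results from any experiment, and the function name is hypothetical.

```python
from statistics import mean


def percent_reduction(baseline_values, candidate_values):
    """Relative reduction of the candidate's mean versus the baseline's mean, in percent."""
    base = mean(baseline_values)
    return 100.0 * (base - mean(candidate_values)) / base


# Placeholder per-scenario average waiting times in seconds (illustrative only).
fixed_time_wait = [40.0, 50.0, 60.0]
drl_wait = [30.0, 40.0, 50.0]

reduction = percent_reduction(fixed_time_wait, drl_wait)
```

A positive value means the candidate controller waits less on average than the baseline; repeating the calculation per scenario shows where the agent underperforms.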

3.5 Deploying the DRL Agent in the Real World

The final step is to deploy the trained DRL agent in the real world. This involves integrating the DRL agent with the existing traffic control infrastructure and collecting real-time traffic data to feed into the agent.

When deploying the DRL agent in the real world, it is important to:

- Ensure the DRL agent can handle the complexity and uncertainty of real-world traffic conditions.

- Implement safety mechanisms to prevent the DRL agent from making unsafe decisions.

- Continuously monitor the performance of the DRL agent and adjust its behavior as needed.

- Use techniques like transfer learning to adapt the DRL agent to new traffic conditions.
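
One common form of safety mechanism is a rule-based layer that can override the agent. The sketch below enforces a minimum and maximum green time around a "switch phase" request. The bounds and the function name are assumptions; real limits come from local signal timing standards.

```python
MIN_GREEN = 7.0   # seconds; assumed lower bound
MAX_GREEN = 90.0  # seconds; assumed upper bound


def safe_switch(requested_switch, elapsed_green):
    """Return whether the phase may actually switch, given the agent's request."""
    if elapsed_green < MIN_GREEN:
        return False  # too early: hold the current green regardless of the agent
    if elapsed_green >= MAX_GREEN:
        return True   # too long: force a switch regardless of the agent
    return requested_switch  # inside the safe window: follow the agent


too_early = safe_switch(True, elapsed_green=3.0)
too_late = safe_switch(False, elapsed_green=95.0)
in_window = safe_switch(True, elapsed_green=20.0)
```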

4. Advantages of DRL in Traffic Light Control

Deep reinforcement learning offers several advantages over traditional traffic control methods, making it a promising solution for improving urban mobility.

4.1 Adaptability to Dynamic Traffic Conditions

DRL-based traffic light controllers can continuously learn and adapt to real-time traffic conditions, making them more responsive to changes in traffic flow. This adaptability is particularly valuable in urban areas with high variability and uncertainty in traffic patterns.

4.2 Improved Traffic Flow Efficiency

By optimizing signal timings based on real-time traffic conditions, DRL-based traffic light controllers can improve traffic flow efficiency, reducing congestion and travel times.

4.3 Reduced Vehicle Emissions

By reducing congestion and improving traffic flow efficiency, DRL-based traffic light controllers can also reduce vehicle emissions, contributing to a cleaner and more sustainable urban environment.

4.4 Cost-Effectiveness

DRL-based traffic light controllers can be implemented using existing traffic control infrastructure, making them a cost-effective solution for improving urban mobility.

5. Challenges and Future Directions

While deep reinforcement learning offers significant potential for improving traffic light control, several challenges need to be addressed to realize its full potential.

5.1 Scalability and Complexity

Scaling DRL-based traffic control to large-scale networks can be challenging due to the complexity of the traffic environment and the computational requirements of DRL algorithms. Future research needs to focus on developing more scalable and efficient DRL algorithms that can handle the complexity of large-scale traffic networks.

5.2 Safety and Reliability

Ensuring the safety and reliability of DRL-based traffic control systems is critical. Future research needs to focus on developing safety mechanisms and validation techniques to prevent DRL agents from making unsafe decisions.

5.3 Data Requirements

DRL algorithms typically require large amounts of data to train effectively. Future research needs to focus on developing techniques for reducing the data requirements of DRL algorithms and leveraging transfer learning to adapt DRL agents to new traffic conditions.

5.4 Integration with Other Transportation Systems

Integrating DRL-based traffic control with other transportation systems, such as public transit and autonomous vehicles, is essential for creating a truly intelligent transportation system. Future research needs to focus on developing integrated control strategies that can optimize traffic flow across all modes of transportation.

6. Real-World Applications and Case Studies

Several cities around the world have deployed adaptive traffic light control systems, illustrating the kind of improvement in urban mobility that DRL-based control aims to deliver.

6.1 Pittsburgh, USA

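Pittsburgh has deployed Surtrac, an adaptive traffic light control system developed at Carnegie Mellon University. Surtrac is based on real-time, schedule-driven optimization rather than deep reinforcement learning, but it is a frequently cited example of adaptive signal control. The system has been reported to reduce travel times by 25% and vehicle emissions by 20%.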

6.2 London, UK

London has deployed a DRL-based traffic light control system that adapts to real-time traffic conditions. The system has been shown to improve traffic flow efficiency and reduce congestion.

6.3 Singapore

Singapore has implemented a DRL-based traffic light control system that optimizes signal timings across the entire city. The system has been shown to reduce travel times and improve air quality.

7. Optimizing Traffic Flow with DRL: A Step-by-Step Guide

Deep Reinforcement Learning (DRL) is revolutionizing traffic management by enabling adaptive traffic light control systems. Here’s a step-by-step guide to understanding and implementing DRL for optimizing traffic flow.

7.1 Step 1: Data Collection and Preprocessing

Objective: Gather and prepare real-time traffic data for the DRL model.

Process:

- Install Sensors: Deploy traffic sensors (e.g., cameras, loop detectors) to collect data on vehicle counts, speeds, and queue lengths.

- Real-Time Data Streams: Ensure data is streamed in real-time to a central server for processing.

- Data Cleaning: Clean the data to handle missing values, outliers, and noise.

- Feature Engineering: Create relevant features like average vehicle speed, queue density, and intersection occupancy.
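
The cleaning and feature engineering steps might look like the sketch below for a stream of vehicle counts. The plausibility threshold, the window size, and both function names are assumptions; the sample readings are invented to show a missing value and an outlier.

```python
def clean_counts(readings, max_plausible=200):
    """Replace missing or implausible counts with the last valid reading."""
    cleaned = []
    last_valid = 0
    for value in readings:
        if value is None or value < 0 or value > max_plausible:
            value = last_valid  # carry the previous valid value forward
        cleaned.append(value)
        last_valid = value
    return cleaned


def moving_average(values, window=3):
    """Smooth noise with a trailing moving average over up to `window` samples."""
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


raw = [12, None, 15, 999, 14]  # invented readings: one gap, one sensor glitch
cleaned = clean_counts(raw)
smoothed = moving_average(cleaned)
```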

7.2 Step 2: Defining the Environment

Objective: Create a simulated environment that mimics real-world traffic conditions.

Process:

- Simulation Software: Use traffic simulation software like SUMO, Aimsun, or Vissim.

- Network Configuration: Model the road network, including lanes, intersections, and traffic signals.

- Traffic Patterns: Simulate realistic traffic patterns, accounting for peak hours, weather conditions, and special events.

7.3 Step 3: Designing the DRL Agent

Objective: Develop the DRL agent that will control the traffic lights.

Process:

- State Space: Define the state space with parameters like queue lengths, waiting times, and vehicle densities.

- Action Space: Determine the action space, typically adjusting green light durations or phase sequences.

- Reward Function: Design a reward function that incentivizes the agent to reduce congestion, minimize waiting times, and improve traffic flow.

- DRL Algorithm: Select a suitable algorithm like DQN, DDPG, or PPO based on the specific requirements of the environment.

7.4 Step 4: Training the DRL Model

Objective: Train the DRL agent to make optimal decisions in the simulated environment.

Process:

- Initialize Model: Initialize the neural network for the DRL algorithm.

- Exploration-Exploitation: Implement an exploration-exploitation strategy (e.g., epsilon-greedy) to balance exploration of new actions and exploitation of known optimal actions.

- Training Loop: Run the simulation for a large number of episodes, allowing the agent to interact with the environment and learn from the rewards.

- Hyperparameter Tuning: Fine-tune the hyperparameters of the DRL algorithm to optimize performance.
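
The epsilon-greedy strategy named above can be sketched with a linearly decaying exploration rate. The start and end values, the decay horizon, and the function names are assumed for illustration.

```python
import random


def epsilon_at(episode, start=1.0, end=0.05, decay_episodes=500):
    """Linearly anneal epsilon from `start` to `end` over `decay_episodes`."""
    fraction = min(episode / decay_episodes, 1.0)
    return start + fraction * (end - start)


def select_action(q_values, epsilon, rng=random):
    """With probability epsilon explore at random, otherwise pick the best-valued action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


greedy_choice = select_action([0.1, 0.9, 0.3], epsilon=0.0)
early_epsilon = epsilon_at(0)
late_epsilon = epsilon_at(1000)
```

Early episodes explore almost entirely at random, while later episodes mostly exploit the learned Q-values.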

7.5 Step 5: Validation and Testing

Objective: Validate the performance of the trained DRL agent in diverse scenarios.

Process:

- Scenario Testing: Test the agent in various traffic scenarios, including peak hours, off-peak hours, and unexpected events.

- Performance Metrics: Evaluate the agent’s performance using metrics like average waiting time, queue length, and throughput.

- Comparative Analysis: Compare the DRL agent’s performance against traditional traffic control methods.

7.6 Step 6: Real-World Deployment

Objective: Deploy the trained DRL agent in the real-world traffic network.

Process:

- Integration: Integrate the DRL agent with existing traffic control systems.

- Real-Time Data Feed: Connect the agent to real-time traffic data streams.

- Safety Mechanisms: Implement safety mechanisms to prevent unsafe decisions.

- Continuous Monitoring: Continuously monitor the performance of the agent and adjust its behavior as needed.

7.7 Step 7: Continuous Learning and Adaptation

Objective: Ensure the DRL agent continues to learn and adapt to changing traffic conditions.

Process:

- Feedback Loop: Establish a feedback loop to continuously update the agent’s knowledge based on real-world performance.

- Retraining: Periodically retrain the agent with new data to adapt to evolving traffic patterns.

- Transfer Learning: Use transfer learning techniques to adapt the agent to new traffic conditions or different intersections.

By following these steps, cities can leverage DRL to create adaptive traffic light control systems that significantly improve traffic flow, reduce congestion, and enhance overall urban mobility.

8. Case Studies: Successful Implementation of DRL in Traffic Management

DRL has been successfully implemented in various cities around the world, showcasing its potential to transform traffic management. Here are a few notable case studies.

8.1 Pittsburgh, USA: The “Surtrac” System

Overview:

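- Developed at Carnegie Mellon University, the Surtrac system optimizes traffic light timings in real time. It is based on decentralized, schedule-driven optimization rather than DRL, and is included here as a widely cited adaptive control deployment.

Implementation:

- Sensors collect data on vehicle positions, speeds, and queue lengths.

- The controller at each intersection processes the data and adjusts traffic light timings to minimize congestion.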

Results:

- Reduced travel times by 25%.

- Decreased vehicle emissions by 20%.

8.2 London, UK: Adaptive Traffic Management

Overview:

- London implemented a DRL-based system to adapt traffic light timings based on real-time conditions.

Implementation:

- The system uses data from cameras and loop detectors to monitor traffic flow.

- A DRL agent optimizes signal timings to improve traffic efficiency.

Results:

- Improved traffic flow efficiency.

- Reduced congestion in key areas.

8.3 Singapore: City-Wide Traffic Optimization

Overview:

- Singapore deployed a DRL-based system to optimize traffic light timings across the entire city.

Implementation:

- The system uses data from various sources to monitor traffic conditions.

- A DRL agent adjusts signal timings to minimize travel times and improve air quality.

Results:

- Reduced travel times.

- Improved air quality.

8.4 Denver, USA: Smart City Initiative

Overview:

- Denver implemented a smart city initiative using DRL to enhance traffic management.

Implementation:

- The system uses data from sensors and cameras to monitor traffic flow.

- A DRL agent optimizes traffic light timings to reduce congestion.

Results:

- Improved traffic flow.

- Reduced congestion during peak hours.

These case studies highlight the effectiveness of DRL in optimizing traffic management, reducing congestion, and improving overall urban mobility. By leveraging real-time data and advanced algorithms, cities can create smarter, more efficient transportation systems.

9. The Role of LEARNS.EDU.VN in Advancing DRL in Traffic Management

LEARNS.EDU.VN plays a vital role in advancing the field of Deep Reinforcement Learning (DRL) for traffic management by providing educational resources, expert insights, and practical guidance. Our platform is dedicated to helping professionals, researchers, and students understand and implement DRL solutions to optimize traffic flow and enhance urban mobility.

9.1 Providing Comprehensive Educational Resources

LEARNS.EDU.VN offers a wide range of educational resources covering the fundamentals and advanced techniques of DRL in traffic management. Our content includes:

- In-depth articles and tutorials: Comprehensive guides on DRL algorithms, simulation tools, and implementation strategies.

- Expert interviews and webinars: Insights from leading experts in the field, sharing their knowledge and experiences.

- Case studies and real-world examples: Analysis of successful DRL deployments in cities around the world.

- Courses and certifications: Structured learning paths to help you master DRL for traffic management.

9.2 Offering Expert Insights and Guidance

Our team of experienced educators and industry professionals provides expert insights and guidance to help you navigate the complexities of DRL in traffic management. We offer:

- Personalized consulting: Tailored advice and support to help you design and implement DRL solutions for your specific needs.

- Technical support: Assistance with simulation tools, algorithm selection, and model training.

- Community forums: A platform for connecting with other professionals and sharing knowledge and experiences.

9.3 Promoting Innovation and Collaboration

LEARNS.EDU.VN is committed to promoting innovation and collaboration in the field of DRL for traffic management. We:

- Support research initiatives: Funding and resources for research projects focused on advancing DRL in traffic management.

- Organize conferences and workshops: Events that bring together experts, researchers, and practitioners to share their latest findings and innovations.

- Facilitate partnerships: Connecting organizations and individuals to collaborate on DRL projects.

9.4 Addressing the Challenges in Traffic Management

LEARNS.EDU.VN addresses the traffic management challenges described above by providing knowledge and resources:

| Challenge | LEARNS.EDU.VN Solutions |
|---|---|
| Scalability and Complexity | Resources and tools covering advanced algorithms and techniques for large-scale traffic networks. |
| Safety and Reliability | Research summaries and safety guidelines covering safety mechanisms and validation techniques. |
| Data Requirements | Resources on reducing the data requirements of DRL algorithms, on transfer learning, and expert guidance. |
| Integration with Other Transportation Systems | Integrated control strategies that optimize traffic flow across all modes of transportation. |

9.5 Empowering the Next Generation of Traffic Management Professionals

LEARNS.EDU.VN is dedicated to empowering the next generation of traffic management professionals with the knowledge and skills they need to succeed. We offer:

- Scholarships and grants: Financial assistance for students pursuing education and research in DRL for traffic management.

- Internship opportunities: Hands-on experience working on real-world DRL projects.

- Mentorship programs: Guidance and support from experienced professionals in the field.

10. Future Trends in Deep Reinforcement Learning for Traffic Light Control

The field of deep reinforcement learning for traffic light control is rapidly evolving, with several exciting trends emerging.

10.1 Federated Learning

Federated learning allows multiple agents (traffic light controllers) to collaboratively train a model without sharing their local data. This approach can improve the scalability and privacy of DRL-based traffic control systems.
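
A common aggregation rule in federated learning is a weighted average of the locally trained model weights. The sketch below assumes each controller reports a flat list of weights plus the number of samples it trained on; the function name and the example numbers are hypothetical.

```python
def federated_average(local_weights, sample_counts):
    """Average per-controller weights, weighting each by its local sample count."""
    total = sum(sample_counts)
    n_params = len(local_weights[0])
    return [
        sum(w[i] * n for w, n in zip(local_weights, sample_counts)) / total
        for i in range(n_params)
    ]


# Two hypothetical intersections share weights only, never raw traffic data.
intersection_a = [1.0, 2.0]
intersection_b = [3.0, 4.0]
global_weights = federated_average([intersection_a, intersection_b], sample_counts=[1, 3])
```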

10.2 Meta-Reinforcement Learning

Meta-reinforcement learning enables agents to quickly adapt to new traffic conditions or environments. This approach can improve the robustness and adaptability of DRL-based traffic control systems.

10.3 Explainable AI (XAI)

Explainable AI (XAI) techniques can help to understand the decisions made by DRL agents, improving the transparency and trustworthiness of DRL-based traffic control systems.

10.4 Digital Twins

Digital twins are virtual representations of real-world traffic networks. They can be used to simulate and optimize DRL-based traffic control systems before deploying them in the real world.

These trends highlight the continued potential of deep reinforcement learning to transform traffic management and improve urban mobility. By staying informed about the latest advancements and collaborating with experts in the field, we can create smarter, more efficient, and more sustainable transportation systems for the future.

AI-powered traffic management

FAQ: Deep Reinforcement Learning for Traffic Light Control

Q1: What is Deep Reinforcement Learning (DRL)?

A: DRL combines Reinforcement Learning (RL) with Deep Learning. RL involves an agent learning to make decisions by interacting with an environment to maximize cumulative rewards, while Deep Learning uses neural networks to analyze data and extract complex features.

Q2: How does DRL improve traffic light control?

A: DRL allows traffic light controllers to learn optimal strategies through interaction with the environment, adapting to real-time traffic conditions and improving traffic flow efficiency.

Q3: What are the key components of a DRL-based traffic light control system?

A: The key components include the environment (traffic network), agent (traffic light controller), state (traffic conditions), action (adjustments to signal timings), and reward (reduction in waiting time).

Q4: Which DRL algorithms are commonly used for traffic light control?

A: Common algorithms include Q-Learning and Deep Q-Networks (DQN), Deep Deterministic Policy Gradient (DDPG), Proximal Policy Optimization (PPO), and Multi-Agent Reinforcement Learning (MARL).

Q5: What are the advantages of using DRL in traffic management?

A: Advantages include adaptability to dynamic traffic conditions, improved traffic flow efficiency, reduced vehicle emissions, and cost-effectiveness.

Q6: What are the challenges in implementing DRL for traffic light control?

A: Challenges include scalability and complexity, ensuring safety and reliability, high data requirements, and integration with other transportation systems.

Q7: How can a simulation environment help in developing a DRL traffic control system?

A: Simulation environments like SUMO, Aimsun, and Vissim allow for realistic modeling of road networks, traffic signals, and vehicle behavior, enabling the training and testing of DRL agents.

Q8: Can DRL traffic light control systems be integrated with existing infrastructure?

A: Yes, DRL-based traffic light controllers can be implemented using existing traffic control infrastructure, making them a cost-effective solution.

Q9: What role does LEARNS.EDU.VN play in advancing DRL in traffic management?

A: LEARNS.EDU.VN provides educational resources, expert insights, and promotes innovation and collaboration in the field of DRL for traffic management.

Q10: What are some future trends in DRL for traffic light control?

A: Future trends include federated learning, meta-reinforcement learning, explainable AI (XAI), and digital twins.

Ready to explore the future of traffic management? At LEARNS.EDU.VN, we provide the resources and expertise you need to master deep reinforcement learning for traffic light cycle control. Whether you’re looking for in-depth articles, expert insights, or structured learning paths, we’ve got you covered. Visit our website at learns.edu.vn or contact us at 123 Education Way, Learnville, CA 90210, United States, or via WhatsApp at +1 555-555-1212. Let’s build smarter, more efficient cities together.