A deep learning framework for neuroscience provides tools for analyzing neural data and modeling brain function, offering insights into neurological disorders and cognitive processes. LEARNS.EDU.VN offers resources and guidance to help you use this technology. With such a framework, researchers can build sophisticated models, automate data analysis, and better understand the complexities of the nervous system, drawing on advances in neural network architectures, computational neuroscience techniques, and machine learning algorithms.

1. What Is A Deep Learning Framework for Neuroscience?

A deep learning framework for neuroscience is a specialized set of tools and algorithms designed to analyze neural data and build models of brain function. These frameworks leverage deep learning techniques to extract meaningful patterns from complex datasets, offering new insights into neurological disorders and cognitive processes. Deep learning, a subset of machine learning, uses artificial neural networks with multiple layers (hence “deep”) to analyze data with the goal of recognizing patterns and making predictions. This is especially useful in neuroscience, where the data is often high-dimensional and non-linear.

1.1. What are the Key Components of a Deep Learning Framework for Neuroscience?

The framework typically includes several key components that work together to facilitate the analysis and modeling process:

- Data Preprocessing Tools: These tools help clean, normalize, and format neural data for use in deep learning models.

- Model Building Blocks: Libraries of pre-built neural network layers and architectures that can be customized for specific tasks.

- Training Algorithms: Optimization algorithms used to train deep learning models on neural data.

- Evaluation Metrics: Metrics for assessing the performance of trained models and comparing different approaches.

- Visualization Tools: Tools for visualizing neural data, model architectures, and model predictions.

1.2. Why Is Deep Learning Suitable for Neuroscience?

Deep learning excels in neuroscience for several reasons:

- Handling Complex Data: Neural data, such as EEG, fMRI, and electrophysiological recordings, is often high-dimensional and non-linear. Deep learning models can effectively capture these complex patterns.

- Feature Extraction: Deep learning algorithms can automatically learn relevant features from raw data, reducing the need for manual feature engineering.

- Scalability: Deep learning models can scale to handle large datasets, allowing researchers to analyze vast amounts of neural data.

- Pattern Recognition: Deep learning can identify subtle patterns and relationships in neural data that may be missed by traditional analysis methods.

Figure 1. Illustration of the dataset for a representative patient. The figure shows the denoised TOF image of a representative patient (A), the corresponding masked brain image (B), and the corresponding ground-truth image of the brain vessels (C).

2. What Are the Applications of Deep Learning in Neuroscience?

Deep learning has revolutionized numerous areas within neuroscience. Its ability to process complex datasets and extract relevant features makes it invaluable for a wide range of applications.

2.1. Neural Data Analysis

Deep learning models can be used to analyze various types of neural data, including:

- EEG (Electroencephalography): Analyzing brainwave patterns to detect seizures, sleep stages, and cognitive states.

- fMRI (Functional Magnetic Resonance Imaging): Decoding brain activity patterns associated with different tasks and stimuli.

- Electrophysiology: Analyzing neuronal spiking activity to understand neural circuits and coding mechanisms.

- MEG (Magnetoencephalography): Identifying the sources of neural activity and studying brain dynamics.

2.2. Neurological Disorder Diagnosis

Deep learning models have shown promise in diagnosing neurological disorders such as:

- Alzheimer’s Disease: Identifying early signs of Alzheimer’s disease from structural and functional brain imaging.

- Parkinson’s Disease: Detecting Parkinson’s disease based on motor symptoms and brain imaging data.

- Epilepsy: Predicting seizures and personalizing treatment strategies.

- Schizophrenia: Identifying biomarkers for schizophrenia using brain imaging and genetic data.

2.3. Brain-Computer Interfaces

Deep learning can enhance brain-computer interfaces (BCIs) by:

- Decoding Motor Intent: Improving the accuracy and speed of decoding motor intentions from brain signals.

- Adaptive Control: Developing BCIs that adapt to changes in brain activity and user behavior.

- Restoring Function: Assisting individuals with paralysis to control prosthetic devices and communicate.

2.4. Cognitive Modeling

Deep learning models can be used to simulate cognitive processes such as:

- Vision: Modeling how the brain processes visual information and recognizes objects.

- Memory: Understanding how memories are encoded, stored, and retrieved in the brain.

- Decision-Making: Simulating the neural mechanisms underlying decision-making processes.

- Language: Modeling how the brain processes and generates language.

2.5. Predictive Modeling in Cerebrovascular Disease

Deep learning models can predict outcomes and improve treatment strategies in cerebrovascular disease:

- Stroke Prediction: Identifying individuals at high risk of stroke based on clinical and imaging data.

- Treatment Response Prediction: Predicting how patients will respond to different stroke treatments.

- Outcome Prediction: Predicting long-term outcomes after stroke, such as disability and mortality.

- Vessel Segmentation: Automating the segmentation of brain vessels from medical images to assess vessel health and detect abnormalities.

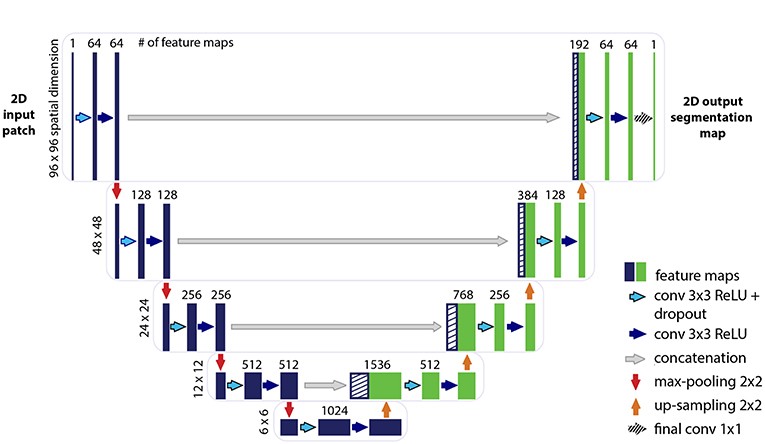

Figure 2. Illustration of the U-net architecture. The figure illustrates the U-net architecture with the largest patch-size of 96 × 96 voxels. The displayed U-net is an encoder-decoder network with a contracting path (encoding part, left side) that reduces the height and width of the input images and an expansive path (decoding part, right side) that recovers the original dimensions of the input images. Each box corresponds to a multi-channel feature map. The dashed boxes stand for the concatenated copied feature maps from the contractive path. The arrows stand for the different operations as listed in the right legend. The number of channels is denoted on top of the box and the image dimensionality (x-y-size) is denoted on the left edge. The half U-net is constructed likewise, with the only difference given by the halved number of channels throughout the network.
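To make the caption more concrete, the sketch below traces how the side length and channel count could change along the contracting path and back. Only the 96 × 96 patch size and the halved channel count of the half U-net come from the caption; the depth of four pooling steps, the 2 × 2 pooling factor, the channel doubling per level, and the 64 starting channels are illustrative assumptions.

```python
def unet_shapes(patch_size=96, base_channels=64, depth=4):
    """Return (side_length, channels) per level of a U-net-style network.

    Assumes 2x2 pooling that halves height and width at each level and a
    channel count that doubles at each level (both are assumptions).
    """
    encoder = []
    size, channels = patch_size, base_channels
    for _ in range(depth):
        encoder.append((size, channels))
        if size % 2:
            raise ValueError("side length must be even to be halved by pooling")
        size //= 2
        channels *= 2
    bottleneck = (size, channels)
    # The expansive path mirrors the contracting path to recover the input size.
    decoder = list(reversed(encoder))
    return encoder, bottleneck, decoder


full = unet_shapes()                  # hypothetical full U-net
half = unet_shapes(base_channels=32)  # half U-net: halved channels throughout

for name, (encoder, bottleneck, decoder) in (("full", full), ("half", half)):
    print(name, "encoder:", encoder, "bottleneck:", bottleneck)
```

Under these assumptions the decoder ends at the same 96 × 96 side length the encoder started with, which is what allows a per-pixel segmentation map of the input patch.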

3. What Are the Popular Deep Learning Frameworks Used in Neuroscience?

Several deep learning frameworks are commonly used in neuroscience, each with its strengths and weaknesses.

3.1. TensorFlow

TensorFlow, developed by Google, is one of the most popular deep learning frameworks. It offers a flexible architecture and a wide range of tools for building and deploying deep learning models.

- Pros:

  - Scalability: TensorFlow can run on CPUs, GPUs, and TPUs, making it suitable for large-scale neural data analysis.

  - Flexibility: TensorFlow supports a variety of programming languages, including Python and C++.

  - Ecosystem: TensorFlow has a large and active community, providing extensive documentation, tutorials, and pre-trained models.

- Cons:

  - Complexity: TensorFlow can be complex to learn and use, especially for beginners.

  - Debugging: Debugging TensorFlow models can be challenging.

3.2. PyTorch

PyTorch, developed by Facebook (now Meta), is another widely used deep learning framework. It is known for its ease of use and dynamic computational graph.

- Pros:

  - Ease of Use: PyTorch is relatively easy to learn and use, making it a good choice for beginners.

  - Dynamic Graphs: PyTorch’s dynamic computational graph allows for more flexibility in model design and debugging.

  - Research-Friendly: PyTorch is popular in the research community due to its flexibility and ease of use for experimentation.

- Cons:

  - Scalability: PyTorch has historically been seen as harder to scale than TensorFlow for very large workloads, although its distributed training support has narrowed this gap.

  - Deployment: Deploying PyTorch models can be more challenging than deploying TensorFlow models.

3.3. Keras

Keras is a high-level API that can run on top of TensorFlow, PyTorch, or other deep learning frameworks. It provides a simple and intuitive interface for building deep learning models.

- Pros:

  - Simplicity: Keras is very easy to learn and use, making it ideal for beginners.

  - Flexibility: Keras can be used with different backends, allowing users to switch between TensorFlow and PyTorch.

  - Rapid Prototyping: Keras enables rapid prototyping of deep learning models.

- Cons:

  - Limited Control: Keras provides less control over low-level details of model training.

  - Performance: Keras models may not be as performant as models built directly in TensorFlow or PyTorch.

3.4. MXNet

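MXNet (Apache MXNet), backed by Amazon, is a scalable and flexible deep learning framework that supports multiple programming languages. Note that the project was retired in 2023 and is no longer actively developed, which matters when choosing a framework for new work.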

- Pros:

  - Scalability: MXNet is designed for large-scale distributed training.

  - Flexibility: MXNet supports multiple programming languages, including Python, R, and Scala.

  - Performance: MXNet is known for its high performance and efficient memory usage.

- Cons:

  - Community: MXNet has a smaller community compared to TensorFlow and PyTorch.

  - Documentation: MXNet’s documentation may not be as extensive as that of TensorFlow and PyTorch.

3.5. Deeplearning4j

Deeplearning4j is a deep learning library for Java and Scala. It is designed for use in enterprise environments and supports distributed training on Apache Spark and Hadoop.

- Pros:

  - Java Integration: Deeplearning4j integrates well with Java and Scala ecosystems.

  - Enterprise-Ready: Deeplearning4j is designed for use in enterprise environments.

  - Distributed Training: Deeplearning4j supports distributed training on Apache Spark and Hadoop.

- Cons:

  - Limited Adoption: Deeplearning4j has a smaller user base compared to Python-based frameworks.

  - Complexity: Deeplearning4j can be complex to set up and configure.

| Framework | Programming Languages | Scalability | Ease of Use | Community Size | Primary Use Cases |
|---|---|---|---|---|---|
| TensorFlow | Python, C++ | High | Moderate | Large | Research, Production, Mobile |
| PyTorch | Python | Moderate | High | Large | Research, Rapid Prototyping |
| Keras | Python | Depends on Backend | Very High | Large | Rapid Prototyping, Education |
| MXNet | Python, R, Scala | High | Moderate | Moderate | Production, Distributed Training |
| Deeplearning4j | Java, Scala | High | Moderate | Small | Enterprise Applications, Distributed Training on Hadoop and Spark |

4. What Are the Essential Steps to Building a Deep Learning Model for Neuroscience?

Building a deep learning model for neuroscience involves several key steps, from data collection to model deployment.

4.1. Data Collection and Preprocessing

The first step is to collect relevant neural data. This may involve:

- Experiment Design: Designing experiments to collect the specific data needed to answer your research question.

- Data Acquisition: Acquiring neural data using techniques such as EEG, fMRI, or electrophysiology.

- Data Cleaning: Removing artifacts and noise from the data.

- Data Normalization: Scaling the data to a consistent range.
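As a small illustration of the normalization step, the following sketch applies z-score scaling to each channel of a toy recording. It assumes the recording is stored as one list of samples per channel; the function name `zscore_channels` and the sample values are hypothetical, and z-scoring is only one of several common scaling choices.

```python
from statistics import fmean, pstdev


def zscore_channels(recording, eps=1e-8):
    """Scale each channel to zero mean and (approximately) unit variance.

    `recording` is a list of channels, each a list of float samples.
    `eps` guards against division by zero for flat channels.
    """
    normalized = []
    for channel in recording:
        mean = fmean(channel)
        std = pstdev(channel, mu=mean)
        normalized.append([(x - mean) / (std + eps) for x in channel])
    return normalized


# Two hypothetical channels recorded on very different scales.
recording = [[1.0, 2.0, 3.0, 4.0], [100.0, 300.0, 200.0, 400.0]]
normalized = zscore_channels(recording)
print(normalized)
```

After scaling, both channels occupy a comparable range, so neither dominates training simply because of its measurement units.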

4.2. Feature Engineering (Optional)

In some cases, it may be beneficial to perform feature engineering to extract relevant features from the raw data. However, deep learning models can often learn these features automatically.

- Feature Selection: Selecting the most relevant features from the data.

- Feature Transformation: Transforming the features to improve model performance.

4.3. Model Selection

The next step is to choose an appropriate deep learning architecture for your task. This may involve:

- Convolutional Neural Networks (CNNs): CNNs are well-suited for analyzing image-like data, such as fMRI scans.

- Recurrent Neural Networks (RNNs): RNNs are useful for analyzing sequential data, such as EEG recordings.

- Autoencoders: Autoencoders can be used for dimensionality reduction and feature learning.

- U-Nets: U-Nets are particularly effective for image segmentation tasks, such as segmenting brain vessels from medical images.

4.4. Model Training

Once you have selected a model architecture, you need to train it on your data. This involves:

- Data Splitting: Splitting the data into training, validation, and test sets.

- Loss Function Selection: Choosing an appropriate loss function to measure the difference between the model’s predictions and the true values.

- Optimizer Selection: Choosing an optimization algorithm to update the model’s parameters.

- Hyperparameter Tuning: Tuning the model’s hyperparameters, such as learning rate and batch size, to optimize performance.

- Data Augmentation: Applying transformations to the training data to increase its size and variability. Common augmentation techniques include rotations, flips, and shears. As highlighted by Ronneberger et al. (2015), augmentation is crucial when training deep learning models, especially with limited data, to ensure the model is robust to various transformations.
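The data splitting step can be sketched as follows. The example splits at the patient level, which is an assumption added here: keeping all data from one patient in a single set is a common way to avoid leakage between training and test data. The split fractions, the seed, and the identifiers are hypothetical.

```python
import random


def split_patients(patient_ids, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Split unique patient IDs into train, validation, and test lists.

    Splitting by patient (rather than by slice or patch) keeps every
    sample of a given patient inside exactly one of the three sets.
    """
    ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(ids)  # seeded for a reproducible split
    n_test = max(1, round(len(ids) * test_fraction))
    n_val = max(1, round(len(ids) * val_fraction))
    test = ids[:n_test]
    val = ids[n_test:n_test + n_val]
    train = ids[n_test + n_val:]
    return train, val, test


patients = [f"patient_{i:02d}" for i in range(20)]  # hypothetical IDs
train, val, test = split_patients(patients)
print(len(train), len(val), len(test))
```

The validation set is used for hyperparameter tuning, while the test set is held back until the final evaluation.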

4.5. Model Evaluation

After training, you need to evaluate the model’s performance on the test set. This involves:

- Metric Selection: Choosing appropriate metrics to evaluate the model’s performance, such as accuracy, precision, and recall.

- Performance Analysis: Analyzing the model’s performance to identify areas for improvement.
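The metrics named above can be computed directly from the counts of true and false positives and negatives. The sketch below assumes binary labels encoded as 0 and 1; the example labels are made up for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for binary 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Guard against empty denominators when a class is never predicted
        # or never present.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical ground truth
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]  # hypothetical model output
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision and recall are often more informative than accuracy when one class is rare, as is typical for clinical findings.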

4.6. Model Deployment

If the model performs well, you can deploy it for use in real-world applications. This may involve:

- Model Optimization: Optimizing the model for speed and memory usage.

- API Development: Developing an API to allow other applications to access the model.

- Integration: Integrating the model into a larger system.

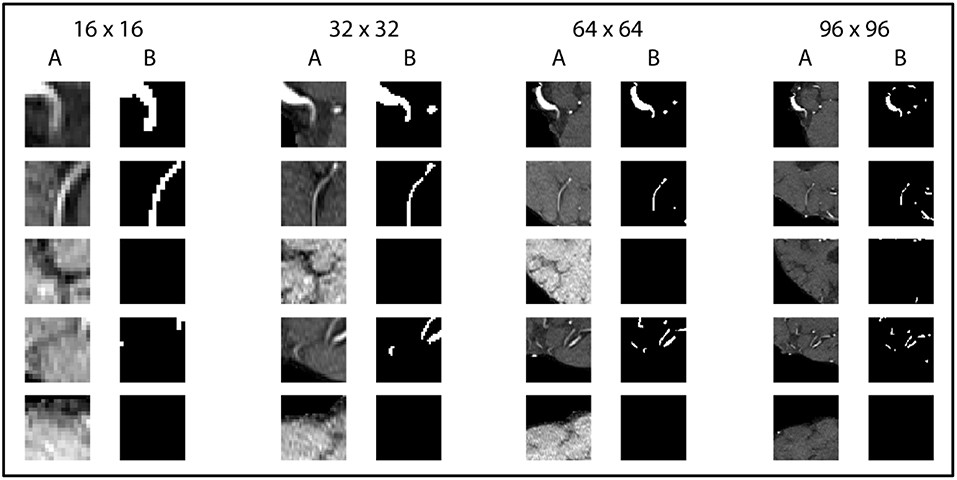

Figure 3. Exemplary patches used for training. Five pairs of random patches with increasing patch size from left to right are shown. “A” columns indicate the MRA-TOF-scans, whereas “B” columns indicate the ground truth label.
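Extracting paired random patches, as the caption describes, might look like the sketch below. It works on plain 2D lists, and the toy image, the patch sizes, and the helper names are hypothetical. The key point is that the scan and its label are cropped at the same position so that they stay aligned.

```python
import random


def crop(grid, top, left, size):
    """Return a size x size window of a 2D list."""
    return [row[left:left + size] for row in grid[top:top + size]]


def random_patch_pair(image, label, patch_size, rng):
    """Crop the same random window from an image and its label."""
    height, width = len(image), len(image[0])
    if patch_size > min(height, width):
        raise ValueError("patch_size exceeds the image dimensions")
    top = rng.randrange(height - patch_size + 1)
    left = rng.randrange(width - patch_size + 1)
    return crop(image, top, left, patch_size), crop(label, top, left, patch_size)


# Toy 8 x 8 "scan" and a matching binary "label" (hypothetical data).
image = [[row * 8 + col for col in range(8)] for row in range(8)]
label = [[value % 2 for value in row] for row in image]

rng = random.Random(42)
patch_sizes = (2, 4, 6)
pairs = [random_patch_pair(image, label, size, rng) for size in patch_sizes]
for size, (image_patch, label_patch) in zip(patch_sizes, pairs):
    print(size, len(image_patch), len(label_patch))
```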

5. What Are the Challenges and Future Directions in Deep Learning for Neuroscience?

Despite the great progress in deep learning for neuroscience, several challenges need to be addressed.

5.1. Data Availability

One of the biggest challenges is the limited availability of labeled neural data. Collecting and labeling neural data can be time-consuming and expensive.

5.2. Interpretability

Deep learning models are often “black boxes,” making it difficult to understand how they arrive at their predictions. Improving the interpretability of deep learning models is an active area of research.

5.3. Generalization

Deep learning models can be prone to overfitting, meaning that they perform well on the training data but poorly on new data. Improving the generalization ability of deep learning models is an important goal.
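One widely used guard against overfitting is early stopping, which halts training once the validation loss stops improving. The sketch below is a minimal version of that idea, not a technique taken from this article; the `patience` value and the loss curve are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops at the first epoch that lies `patience` epochs past
    the best validation loss without a new improvement.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical curve: validation loss improves, then starts to rise again.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(val_losses)
print(f"stop at epoch {stop_epoch}, keep weights from epoch {best_epoch}")
```

In practice the model weights from the best epoch are restored, since later epochs fit the training data more closely but generalize worse.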

5.4. Computational Resources

Training deep learning models can require significant computational resources, such as GPUs and large amounts of memory. Making deep learning more accessible to researchers with limited resources is an ongoing effort.

5.5. Ethical Considerations

As deep learning models become more powerful, it is important to consider the ethical implications of their use in neuroscience. This includes issues such as privacy, bias, and the potential for misuse.

5.6. Future Directions

Future research directions in deep learning for neuroscience include:

- Developing new deep learning architectures specifically tailored for neural data.

- Improving the interpretability and explainability of deep learning models.

- Developing methods for training deep learning models with limited labeled data.

- Exploring the use of unsupervised and self-supervised learning techniques.

- Integrating deep learning with other neuroscience techniques, such as computational modeling and experimental neuroscience.

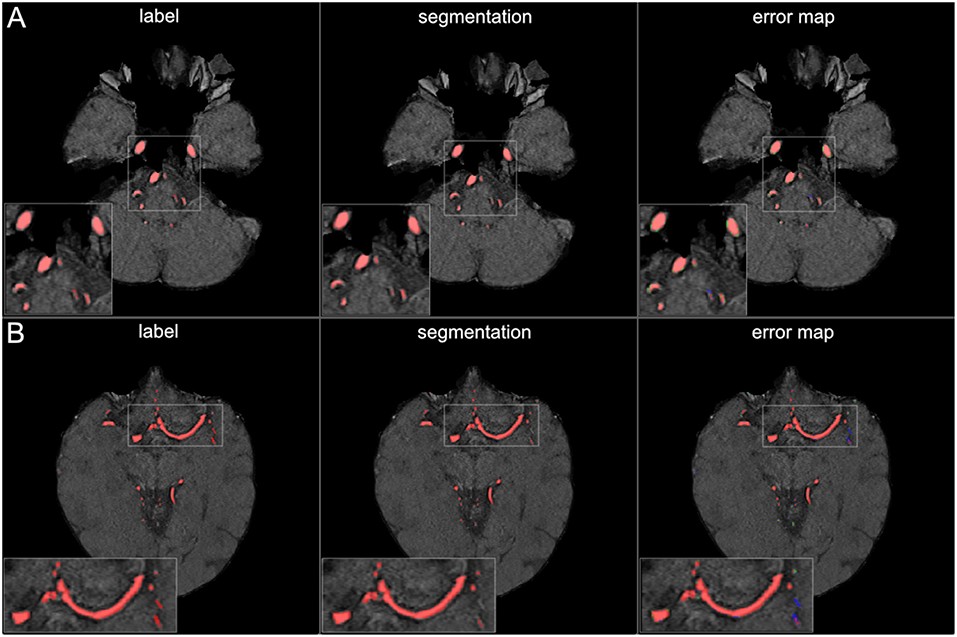

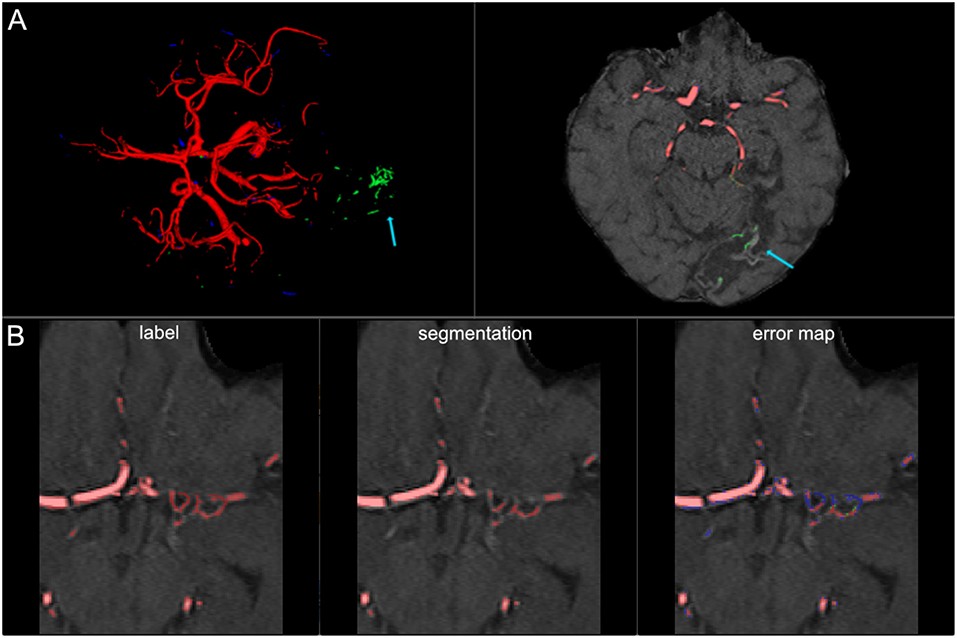

Figure 4. 3D projections of segmentation results. The figure illustrates exemplary segmentation results as 3D projections for two representative patients (A,B). Labels are shown in the first column and exemplary segmentation results are shown in the second column. The third column shows the error map, where red voxels indicate true positives, green voxels false positives, and blue voxels false negatives. Overall a high performance segmentation could be achieved. In the error maps it can be seen that false positives mainly presented as central venous structures and parts of meningeal arteries. (3D view is meant for an overall overview. Due to 3D interpolation, very small structures may appear differently in the images. This does not translate to real voxel-to-voxel differences. For direct voxel-wise comparison please use the 2D-images in Figures 5–8).
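The color coding of the error maps can be reproduced with a few lines of code. The sketch below compares a binary label with a binary prediction and assigns red to true positives, green to false positives, and blue to false negatives, as in the caption. The 2D toy arrays are hypothetical, and background voxels are left as `None`.

```python
from collections import Counter

# Keys are (label, prediction) pairs for a single voxel.
COLORS = {(1, 1): "red", (0, 1): "green", (1, 0): "blue"}


def error_map(label, prediction):
    """Color each voxel by agreement between label and prediction."""
    return [
        [COLORS.get((truth, pred)) for truth, pred in zip(label_row, pred_row)]
        for label_row, pred_row in zip(label, prediction)
    ]


label = [[1, 1, 0], [0, 1, 0]]       # hypothetical ground truth slice
prediction = [[1, 0, 0], [1, 1, 0]]  # hypothetical model output
colors = error_map(label, prediction)
counts = Counter(color for row in colors for color in row if color)
print(colors)
print(counts)
```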

6. What Are Some Practical Tips for Using Deep Learning in Neuroscience?

To make the most of deep learning in neuroscience, consider these practical tips:

- Start with a clear research question: Define your goals and ensure deep learning is the right tool.

- Understand your data: Know the properties, limitations, and potential biases in your neural data.

- Choose the right framework: Select a framework that fits your expertise and computational resources.

- Start simple: Begin with simpler models and gradually increase complexity.

- Use pre-trained models: Leverage pre-trained models when possible to reduce training time and improve performance.

- Validate your results: Rigorously validate your models to ensure they generalize to new data.

- Seek collaboration: Collaborate with experts in deep learning and neuroscience to gain insights and overcome challenges.

- Keep learning: Stay updated with the latest advances in deep learning and neuroscience.

- Address class imbalance: When dealing with imbalanced datasets, use appropriate techniques like the Dice coefficient loss function, as it handles skewed ground-truth labels effectively without sample weighting, as noted by Milletari et al. (2016).
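To illustrate the last tip, here is a sketch of one common soft Dice loss variant, computed as one minus twice the overlap divided by the sum of both masks. The exact formulation in the cited paper may differ, and the smoothing term `eps` and the toy data are assumptions. The example also shows why accuracy can mislead on imbalanced data.

```python
def soft_dice_loss(prediction, truth, eps=1e-6):
    """Return 1 - Dice for flat lists of probabilities and 0/1 labels."""
    intersection = sum(p * t for p, t in zip(prediction, truth))
    total = sum(prediction) + sum(truth)
    dice = (2.0 * intersection + eps) / (total + eps)
    return 1.0 - dice


# Hypothetical imbalanced case: 1 vessel voxel among 100 voxels.
truth = [1.0] + [0.0] * 99
all_background = [0.0] * 100  # a model that never predicts "vessel"

accuracy = sum(p == t for p, t in zip(all_background, truth)) / len(truth)
loss_trivial = soft_dice_loss(all_background, truth)
loss_perfect = soft_dice_loss(truth, truth)
print(f"accuracy={accuracy:.2f}, dice loss={loss_trivial:.3f}")
```

The all-background prediction reaches 99% accuracy, yet its Dice loss is close to the maximum of 1, because the loss depends only on overlap with the rare foreground class.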

7. How Does LEARNS.EDU.VN Help in Mastering Deep Learning for Neuroscience?

LEARNS.EDU.VN is dedicated to providing comprehensive resources and educational materials to help you excel in deep learning for neuroscience.

7.1. Course Offerings

- Introductory Courses: Foundational courses covering the basics of deep learning and neuroscience.

- Advanced Courses: Specialized courses on advanced deep learning techniques for neural data analysis, brain-computer interfaces, and cognitive modeling.

- Hands-On Projects: Practical projects that allow you to apply your knowledge to real-world neuroscience problems.

7.2. Expert Instructors

- Experienced Professionals: Learn from instructors with extensive experience in both deep learning and neuroscience.

- Personalized Guidance: Receive personalized guidance and feedback to help you succeed.

7.3. Community Support

- Networking Opportunities: Connect with other learners and experts in the field.

- Collaborative Projects: Participate in collaborative projects to build your skills and network.

7.4. Resources and Tools

- Comprehensive Guides: Access in-depth guides on deep learning frameworks, neural data analysis techniques, and best practices.

- Code Libraries: Use pre-built code libraries to accelerate your projects.

- Datasets: Access curated datasets for training and evaluating your models.

LEARNS.EDU.VN aims to empower you with the knowledge and skills needed to make significant contributions to the field of neuroscience through deep learning.

8. What Are Some Advanced Techniques in Deep Learning for Neuroscience?

As the field advances, several sophisticated techniques are emerging, enhancing the capabilities of deep learning models in neuroscience.

8.1. Generative Adversarial Networks (GANs)

GANs can be used for data augmentation by generating synthetic neural data that lies in the same domain as the original data. According to Antoniou et al. (2017), GANs can generate a large variety of new data, which can be ideal for segmentation tasks.

8.2. Transfer Learning

Transfer learning reuses a pre-trained model on new data, for example new, unseen vessel images, by freezing the convolutional layers and training only the rest of the model. As noted by Oquab et al. (2014), this approach requires only a few labeled datasets for each new source, so new tools can potentially be adapted with little effort to various scanner settings, imaging modalities, and even new organs.
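The idea of freezing layers can be shown without any particular framework. In the sketch below, the `Layer` class, the layer names, and the gradient values are all hypothetical stand-ins; real frameworks expose their own mechanisms for marking parameters as non-trainable.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    weights: list
    trainable: bool = True


def freeze_convolutional(layers):
    """Mark every layer whose name starts with 'conv' as frozen."""
    for layer in layers:
        if layer.name.startswith("conv"):
            layer.trainable = False


def sgd_step(layers, gradients, learning_rate=0.1):
    """Apply one gradient descent step to trainable layers only."""
    for layer in layers:
        if not layer.trainable:
            continue  # frozen layers keep their pre-trained weights
        grads = gradients[layer.name]
        layer.weights = [w - learning_rate * g for w, g in zip(layer.weights, grads)]


# Hypothetical pre-trained model: two convolutional layers and a head.
model = [Layer("conv1", [0.5, -0.2]), Layer("conv2", [0.1, 0.3]), Layer("head", [1.0, -1.0])]
freeze_convolutional(model)
gradients = {"conv1": [1.0, 1.0], "conv2": [1.0, 1.0], "head": [1.0, -1.0]}
sgd_step(model, gradients)
print([(layer.name, layer.weights) for layer in model])
```

Only the head changes after the update step, which is why fine-tuning in this way needs far less labeled data than training the whole network.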

8.3. Recurrent Neural Networks (RNNs)

RNNs, especially those with Long Short-Term Memory (LSTM) layers, are well-suited for analyzing sequential neural data such as EEG recordings. In the vessel segmentation setting illustrated in the figures, applying such techniques might also improve small-vessel segmentation performance.

8.4. 3D Convolutional Neural Networks (3D CNNs)

3D CNNs can process volumetric data, such as fMRI scans, more effectively than 2D CNNs. For vessel segmentation, future studies should systematically assess the necessary model complexity, particularly the number of feature channels, and compare 2.5D and 3D approaches to find the optimal one.
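The effect of kernel dimensionality and channel count on model complexity can be estimated with simple arithmetic. The sketch below counts the parameters of a single convolutional layer; the 3-voxel kernel size and the channel counts of 64 and 32 are illustrative assumptions.

```python
def conv_parameters(kernel_size, dims, in_channels, out_channels):
    """Weights plus biases of one convolutional layer.

    kernel_size ** dims weights per input/output channel pair,
    plus one bias per output channel.
    """
    return kernel_size ** dims * in_channels * out_channels + out_channels


full_2d = conv_parameters(3, 2, 64, 64)  # 3 x 3 kernel, 64 channels
half_2d = conv_parameters(3, 2, 32, 32)  # same layer with halved channels
full_3d = conv_parameters(3, 3, 64, 64)  # 3 x 3 x 3 kernel, 64 channels

print(full_2d, half_2d, full_3d)
```

Halving the channels cuts the layer to roughly a quarter of its parameters, while moving from 2D to 3D kernels roughly triples them, which is why both choices deserve systematic assessment.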

8.5. Attention Mechanisms

Attention mechanisms allow deep learning models to focus on the most relevant parts of the input data, improving performance and interpretability. These mechanisms can be particularly useful in analyzing complex neural data with many irrelevant features.
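A minimal sketch of scaled dot-product attention for a single query shows how a model can weight some inputs more than others. This is one common form of attention, chosen here for illustration; the query, keys, and values are hypothetical feature vectors.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Return attention weights and the weighted sum of the values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context


# Hypothetical features for three time points; the first matches the query.
query = [1.0, 0.0]
keys = [[4.0, 0.0], [0.0, 4.0], [0.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, context = attend(query, keys, values)
print(weights, context)
```

The weights themselves can be inspected after training, which is one reason attention is often mentioned in connection with interpretability.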

9. What are the Ethical Considerations in Using Deep Learning for Neuroscience?

Using deep learning in neuroscience raises several ethical considerations that must be addressed.

9.1. Data Privacy

Protecting the privacy of individuals whose neural data is used to train deep learning models is paramount. Measures must be taken to ensure that data is anonymized and that individuals’ identities cannot be inferred from the data.
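One building block for this is replacing subject identifiers with keyed hashes before data is shared. The sketch below uses HMAC-SHA256 from the Python standard library; the key, the identifier, and the `sub-` prefix are hypothetical. This is pseudonymization only and does not by itself guarantee anonymity, since the recordings themselves may still carry identifying information.

```python
import hashlib
import hmac


def pseudonymize(subject_id, secret_key):
    """Map a subject identifier to a stable pseudonym using a keyed hash.

    Without the secret key, the pseudonym cannot be recomputed from the
    original identifier.
    """
    digest = hmac.new(secret_key, subject_id.encode("utf-8"), hashlib.sha256)
    return f"sub-{digest.hexdigest()[:12]}"


secret_key = b"replace-with-a-securely-stored-key"  # hypothetical key
pseudonym = pseudonymize("jane_doe_1984", secret_key)
print(pseudonym)
```

The same subject always maps to the same pseudonym, so recordings from one person can still be grouped, for example when splitting data by patient.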

9.2. Bias

Deep learning models can perpetuate and amplify biases present in the training data. It is essential to carefully examine the training data for biases and to develop methods for mitigating their impact.

9.3. Misinterpretation

The results of deep learning models can be misinterpreted, leading to incorrect conclusions or decisions. It is crucial to interpret the results of deep learning models cautiously and to consider their limitations.

9.4. Accessibility

Ensuring that the benefits of deep learning for neuroscience are accessible to all, regardless of their background or resources, is an ethical imperative. This includes providing access to data, tools, and training.

10. What are Examples of Success Stories in Deep Learning for Neuroscience?

Several success stories demonstrate the potential of deep learning in neuroscience.

10.1. Improved Stroke Prediction

Deep learning models have been used to predict the risk of stroke based on clinical and imaging data. For example, Feng et al. (2018) reviewed how deep learning guides stroke management and improves clinical applications.

10.2. Enhanced Brain-Computer Interfaces

Deep learning has enhanced the accuracy and speed of decoding motor intentions from brain signals in brain-computer interfaces.

10.3. Better Understanding of Alzheimer’s Disease

Deep learning models have been used to identify early signs of Alzheimer’s disease from structural and functional brain imaging.

10.4. Automated Vessel Segmentation

Deep learning frameworks, particularly U-Net architectures, have enabled the fully automated segmentation of brain vessels from medical images, improving the assessment of vessel health and detection of abnormalities. As demonstrated by Livne et al. (2019), U-Net deep learning frameworks yield high performance for vessel segmentation in patients with cerebrovascular disease.

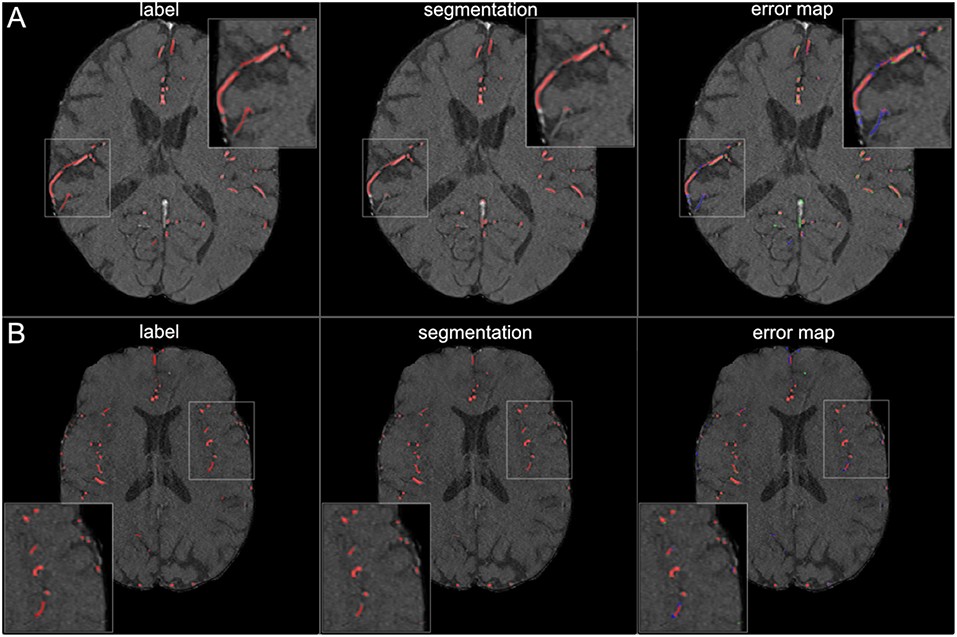

Figure 5. Segmentation results for large vessels. The figure illustrates exemplary segmentation results for large vessels for two representative patients (A,B). Labels are shown in the first column and exemplary segmentation results are shown in the second column. The third column shows the error map, where red voxels indicate true positives, green voxels false positives, and blue voxels false negatives. Only a few false positive voxels can be seen in the border zones of the vessels.

10.5. Predicting Treatment Response

Deep learning models can predict how patients will respond to different stroke treatments, aiding in personalized treatment strategies.

FAQ: Deep Learning Framework for Neuroscience

1. What exactly is a deep learning framework for neuroscience?

A deep learning framework for neuroscience is a specialized toolkit that uses deep learning algorithms to analyze neural data and create models of brain functions, providing insights into neurological disorders and cognitive processes.

2. How does deep learning benefit neuroscience research?

Deep learning helps handle complex neural data, automatically extracts features, scales to large datasets, and identifies subtle patterns that traditional methods might miss.

3. Which deep learning frameworks are most popular in neuroscience?

TensorFlow, PyTorch, and Keras are among the most popular frameworks, each offering unique strengths in scalability, ease of use, and flexibility.

4. What types of neural data can deep learning models analyze?

Deep learning models can analyze EEG, fMRI, electrophysiology, and MEG data, extracting meaningful patterns from these complex datasets.

5. Can deep learning assist in diagnosing neurological disorders?

Yes, deep learning models can help diagnose disorders like Alzheimer’s, Parkinson’s, epilepsy, and schizophrenia by identifying biomarkers in brain imaging and genetic data.

6. How does deep learning improve brain-computer interfaces (BCIs)?

Deep learning enhances BCIs by improving the accuracy and speed of decoding motor intentions from brain signals, enabling adaptive control and restoring function for individuals with paralysis.

7. What are the main challenges in applying deep learning to neuroscience?

Challenges include limited availability of labeled neural data, the “black box” nature of models, ensuring generalization to new data, high computational resource requirements, and ethical considerations like data privacy and bias.

8. What steps are involved in building a deep learning model for neuroscience?

The key steps include data collection and preprocessing, feature engineering (optional), model selection, model training, model evaluation, and model deployment.

9. What future directions are anticipated in deep learning for neuroscience?

Future directions include developing specialized deep learning architectures, improving model interpretability, training models with limited data, and integrating deep learning with other neuroscience techniques.

10. How can LEARNS.EDU.VN assist in learning about deep learning for neuroscience?

LEARNS.EDU.VN offers comprehensive courses, expert instructors, community support, and resources like guides, code libraries, and datasets to help you master deep learning for neuroscience.

Deep learning frameworks offer tremendous potential for advancing our understanding of the brain and developing new treatments for neurological disorders. By staying informed, continuously learning, and collaborating with experts, you can contribute to this exciting field. At LEARNS.EDU.VN, we are committed to providing you with the resources and support you need to succeed in deep learning for neuroscience. Visit our website at learns.edu.vn or contact us at 123 Education Way, Learnville, CA 90210, United States or via WhatsApp at +1 555-555-1212 to explore our courses and resources. Start your journey towards mastering deep learning for neuroscience today and unlock new possibilities in brain research and healthcare. On our site you can also find further material on data science, machine learning, and neural networks, as well as opportunities in computational neuroscience, neural engineering, and cognitive science.

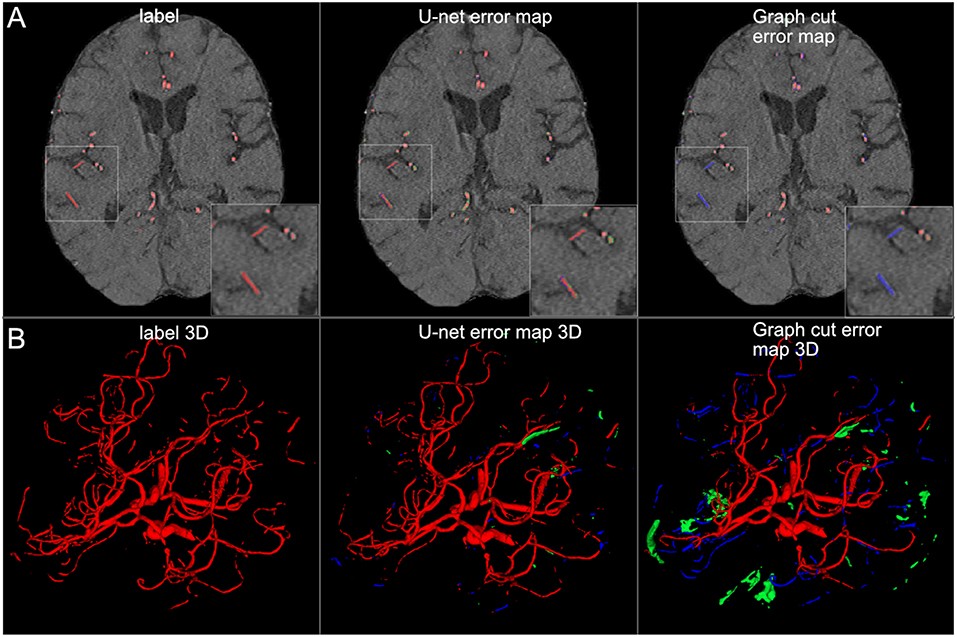

Figure 6. Segmentation results for small vessels. The figure illustrates exemplary segmentation results for small vessels for two representative patients (A,B). Labels are shown in the first column and exemplary segmentation results are shown in the second column. The third column shows the error map, where red voxels indicate true positives, green voxels false positives, and blue voxels false negatives. In 7 patients (50%), small vessels were also segmented well, with only a few false negatives (B). In the other patients, the small vessels were segmented only adequately, with both false positives and false negatives (A).

Figure 7. False labeling of specific cerebrovascular pathologies. (A) The error map of a 3D projection on the left shows falsely labeled structures in the posterior part of the brain (arrow). On a transversal slice (on the right), false labeling of parts of cortical laminar necrosis can be identified as the cause (arrow). (B) The rete mirabile network of small vessels was only partially depicted (false negative labeling in blue in the error map). A rete mirabile is a relatively rare occurrence: only 3 of the 66 patients in our study presented with one (2 in the training set and 1 in the test set).

Figure 8. Comparison of U-net and graph cut segmentations. (A) 2D comparison and (B) 3D comparison of labels, U-net error maps, and graph cut error maps. The quantitative results are confirmed by visual inspection. The graph cut segmentation shows more false-negative (blue) and false-positive (green) voxels.